Motion artifact reduction method based on a time-of-flight depth camera

A depth camera motion artifact reduction technology, applied in radio wave measurement systems, instruments, and similar fields. It addresses the problems of motion artifacts, limited image matching accuracy, and high algorithm complexity, and achieves the effect of reducing the time difference and motion artifacts.

Examples

Embodiment 1

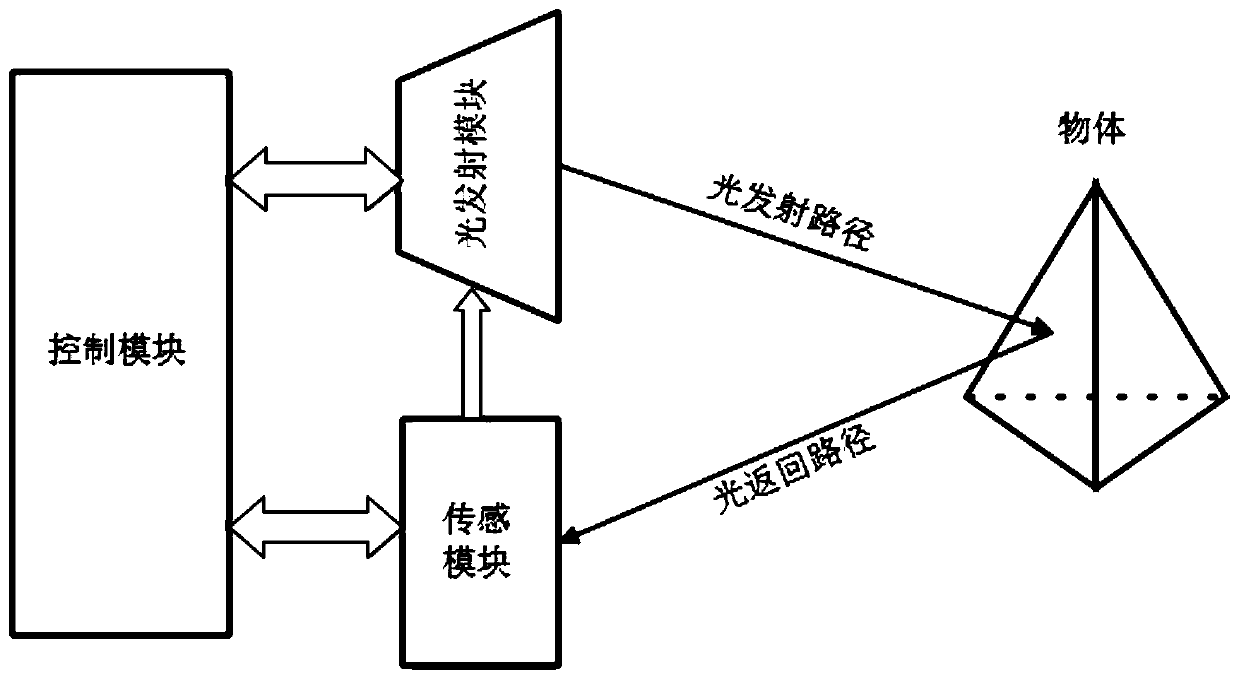

[0045] As shown in Figure 1, in the first embodiment of the present invention, the time-of-flight depth camera includes a light emitting module, a sensing module, and a control module connected to one another. The light emitting module emits modulated light; the sensing module receives light reflected from objects within its receiving range; the control module switches the light emitting module on and off, controls the working state of the sensing module, receives the light signals from the sensing module, and processes those signals to obtain the distance between the object and the depth camera.
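The visible text does not say how the control module turns the received light signal into a distance. As a minimal sketch, assuming the widely used four-phase continuous-wave scheme (an assumption, not something the patent text shown here confirms), the distance can be recovered from the phase shift between emitted and received modulated light. The function names, the four sample names, and the 20 MHz modulation frequency below are all hypothetical and chosen only for illustration.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def simulate_samples(distance_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Generate ideal correlation samples at 0, 90, 180, 270 degrees.

    Hypothetical helper: models a noiseless, static scene only.
    """
    # Round-trip phase shift of the modulation envelope.
    phase = 4.0 * math.pi * mod_freq_hz * distance_m / C
    return tuple(
        amplitude * math.cos(phase + k * math.pi / 2.0) + offset
        for k in range(4)
    )


def depth_from_four_phases(q0, q90, q180, q270, mod_freq_hz):
    """Estimate distance from four phase-stepped samples (assumed scheme)."""
    # Differences cancel the constant offset (ambient light).
    phase = math.atan2(q270 - q90, q0 - q180)
    if phase < 0.0:
        phase += 2.0 * math.pi  # map into [0, 2*pi)
    return C * phase / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest distance measurable before the phase wraps around."""
    return C / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    freq = 20e6  # assumed modulation frequency
    samples = simulate_samples(2.5, freq)
    print(depth_from_four_phases(*samples, freq))
```

In a scheme of this kind the four samples are captured at different instants, so an object that moves between captures yields inconsistent samples and a wrong phase. That is one common source of motion artifacts in time-of-flight imaging, and it is why shortening the time difference between captures matters.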

[0046] The motion artifact reduction method of this embodiment, based on the time-of-flight depth camera described above, includes the following steps:

[0047] In the first step, the light emitting module of the time-of-flight depth camera emits light outward.

[0048] As a preferred embodiment, the control module adjusts the light emitting module to adjust the light ...
