Human body action recognition method

A human action recognition technology, applied in the fields of character and pattern recognition, instruments, and computer components, which addresses the problems of inflexible action descriptions and the inability to receive sample labels, both of which reduce the effectiveness and discriminability of action descriptions.


Detailed Description of the Embodiments

[0123] The following will clearly and completely describe the technical solutions in the embodiments of the present invention with reference to the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments are only some, not all, embodiments of the present invention. Based on the embodiments of the present invention, all other embodiments obtained by persons of ordinary skill in the art without making creative efforts belong to the protection scope of the present invention.

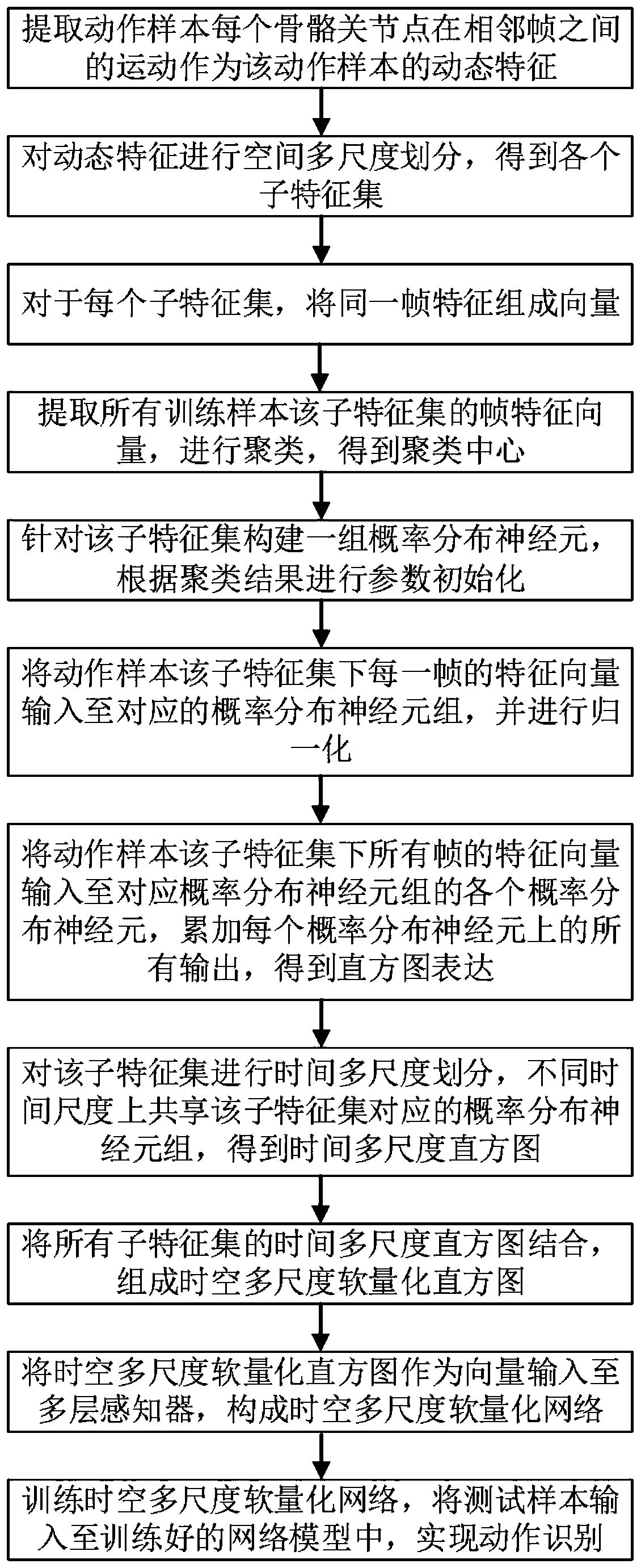

[0124] As shown in Figure 1, a human action recognition method includes the following steps:

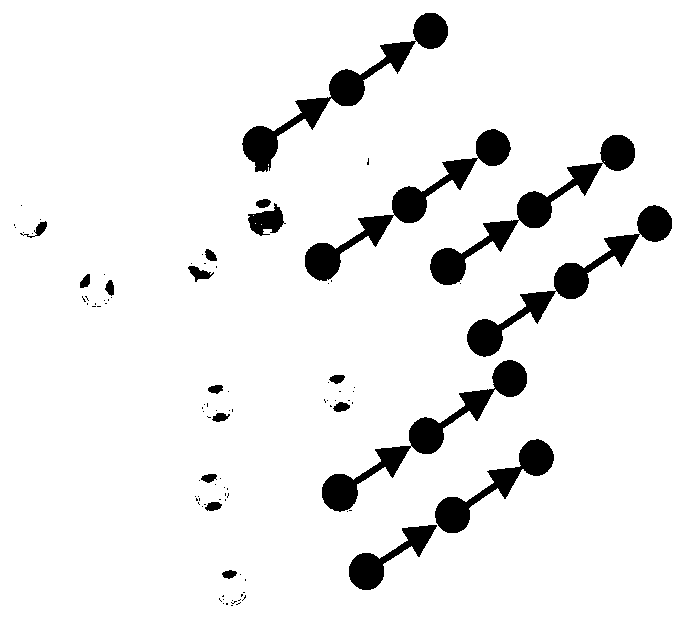

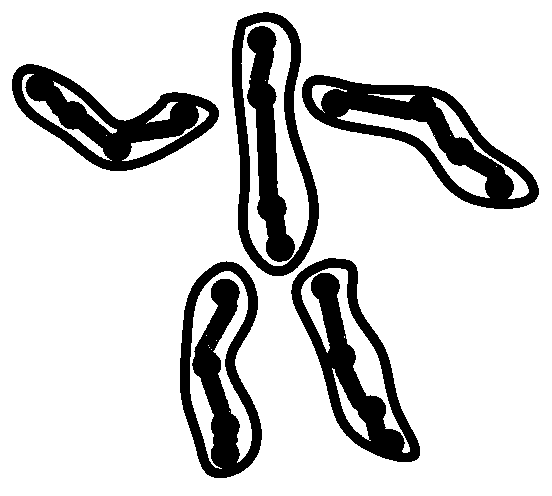

[0125] 1. The action sample set contains 200 samples in total, covering 10 action categories with 20 samples each. In each action category, three quarters of the samples are randomly selected for the training set and the remaining quarter is assigned to the test set, giving a total of 150 training samples and 50 tes...
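The per-category random split described in step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's own procedure: the function name `stratified_split`, the integer labels 0 to 9, and the fixed random seed are hypothetical; only the counts (10 categories, 20 samples per category, three quarters to training) come from the text.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.75, seed=None):
    """Hypothetical helper: randomly split sample indices class by class.

    For each class, `train_fraction` of its samples go to the training set
    and the rest go to the test set, so every class keeps the same ratio.
    """
    rng = random.Random(seed)

    # Group sample indices by their action category.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)  # random selection within the category
        n_train = round(len(indices) * train_fraction)
        train_idx.extend(indices[:n_train])
        test_idx.extend(indices[n_train:])
    return train_idx, test_idx


# Assumed layout: 10 action categories x 20 samples = 200 samples.
labels = [c for c in range(10) for _ in range(20)]
train_idx, test_idx = stratified_split(labels, seed=0)
print(len(train_idx), len(test_idx))  # prints: 150 50
```

Splitting within each category, rather than over the pooled 200 samples, guarantees exactly 15 training and 5 test samples per category, which is what yields the 150/50 totals stated above.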
