Method for hand posture estimation from single color image based on attention mechanism

A hand pose estimation technique for single color images, applicable to image enhancement, image analysis, image data processing, and related fields

Examples

Embodiment 1

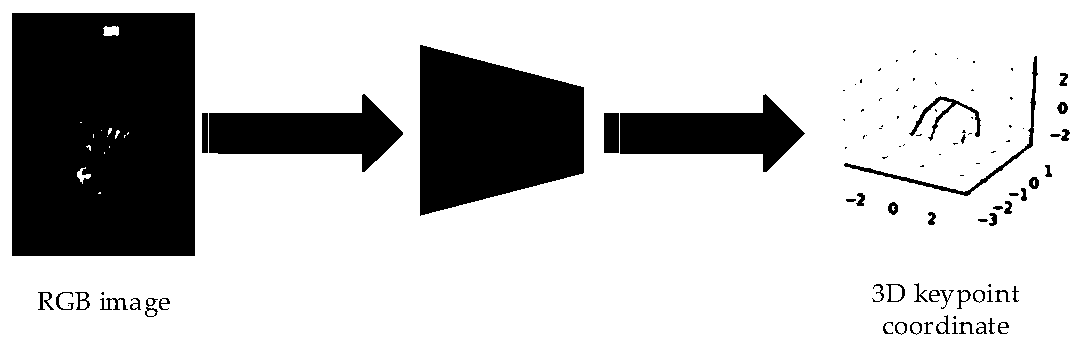

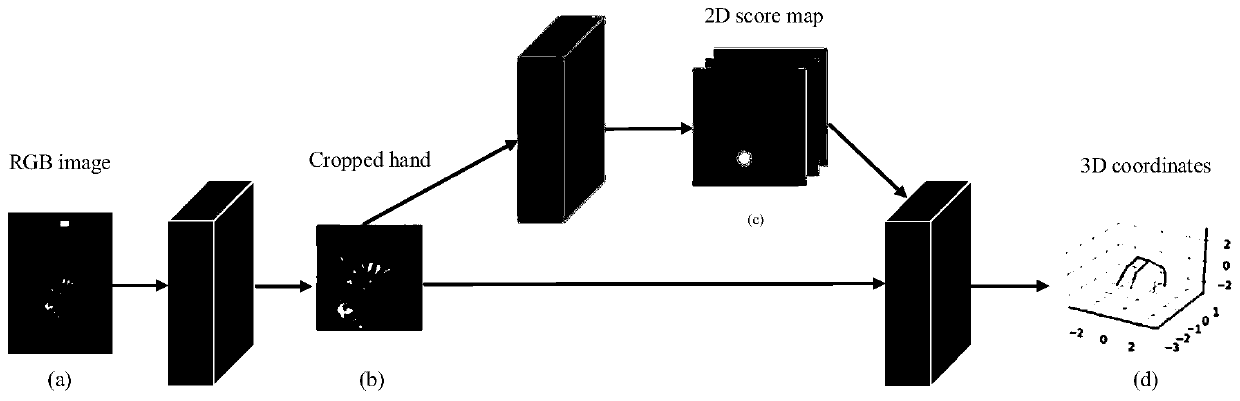

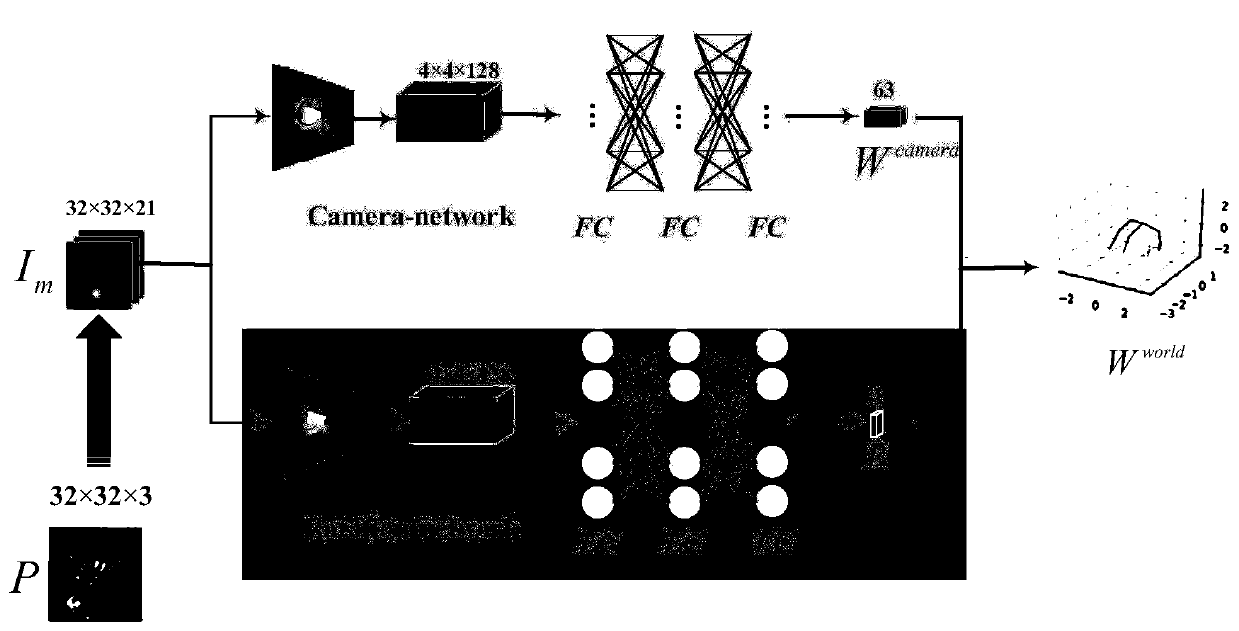

[0042] As shown in Figure 1, the task of the present invention is to take an image containing a human hand as input and obtain the 3D positions of 21 hand joints through an end-to-end neural network, thereby estimating the hand pose. In this embodiment, J indexes the joint points of the hand; the hand has 21 joint points, so $J \in \{1, \dots, 21\}$. $W = \{w_J = (x, y, z) \mid J \in [1, 21]\}$ represents the 3D coordinates of the hand joint points. The input RGB image is $I \in \mathbb{R}^{w \times h \times 3}$. The segmented hand image is a crop containing the hand that is slightly larger than the hand region and smaller than the input image. $R = (R_x, R_y, R_z)$ represents the rotation angles of the camera coordinate system relative to the world coordinate system. $(u, v)$ is the 2D position of each hand joint point. Gaussian noise is added to the 2D joints to obtain heat maps with Gaussian noise. Each joint point corresponds to one heat map, so there are 21 heat maps $P = p_J(u, v)$, i.e.,...
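The per-joint heat maps described above can be sketched as follows. This is a minimal illustration under one common reading of the text, namely that each heat map is a 2D Gaussian centered on the joint's $(u, v)$ position; the source does not confirm this. The function names, the 32x32 map size, the value of `sigma`, and the example joint positions are all assumptions made for illustration.

```python
import math

NUM_JOINTS = 21  # from the text: the hand has 21 joint points


def joint_heatmap(u, v, width, height, sigma=2.0):
    """Return a height x width grid with a Gaussian peak at pixel (u, v).

    sigma is an assumed value; the source does not state one.
    """
    return [
        [math.exp(-((x - u) ** 2 + (y - v) ** 2) / (2.0 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def hand_heatmaps(joints_2d, width, height, sigma=2.0):
    """Build one heat map per joint from a list of 21 (u, v) positions."""
    if len(joints_2d) != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} joints, got {len(joints_2d)}")
    return [joint_heatmap(u, v, width, height, sigma) for u, v in joints_2d]


# Hypothetical joint positions on a 32x32 grid, for illustration only.
example_joints = [(j + 5, 31 - j) for j in range(NUM_JOINTS)]
heatmaps = hand_heatmaps(example_joints, width=32, height=32)
```

Each map is indexed as `heatmaps[joint][row][column]`, so the peak of joint `j` sits at row `v` and column `u`. A network trained on such targets would typically recover $(u, v)$ by taking the location of the maximum response.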

Embodiment 2

[0063] This example verifies CFAM experimentally. The experiments run on a 1080 Ti GPU using TensorFlow, with a training batch size of 8. Training uses the Adam optimizer and is stopped when the loss value fails to decrease many times in a row. The learning rate takes the values (1e-5, 1e-6, 1e-7) in turn, changing after 30000 steps and again after 60000 steps. This embodiment makes improvements to, and runs tests on, joint heat map detection and gesture estimation. In the table, some errors for wrist predictions are 0: the wrist prediction is accurate enough that these errors are below 0.01, and because only two decimal places are kept they are rounded to 0.
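The training schedule in this paragraph can be sketched in plain Python. The learning-rate values and step boundaries come from the text. Two things are assumptions: that step 30000 itself still uses the first rate, and the `patience` value, since the source only says the loss fails to decrease "many times". The names `learning_rate` and `EarlyStopper` are hypothetical helpers, not part of the patent or of TensorFlow.

```python
import bisect

BOUNDARIES = (30000, 60000)   # steps after which the rate changes (from the text)
RATES = (1e-5, 1e-6, 1e-7)    # learning rates for the three phases (from the text)


def learning_rate(step):
    """Piecewise-constant schedule: the rate changes after each boundary step."""
    # bisect_left keeps step == 30000 in the first phase (an assumption).
    return RATES[bisect.bisect_left(BOUNDARIES, step)]


class EarlyStopper:
    """Signal a stop once the loss has not improved for `patience` checks."""

    def __init__(self, patience):
        self.patience = patience      # assumed value; not given in the source
        self.best = float("inf")
        self.bad_checks = 0

    def should_stop(self, loss):
        if loss < self.best:
            self.best = loss
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience


# Illustrative loss values only; these are not results from the patent.
stopper = EarlyStopper(patience=3)
decisions = [stopper.should_stop(loss) for loss in (1.0, 0.9, 0.95, 0.92, 0.91)]
```

In a TensorFlow training loop, the same schedule could be expressed with `tf.keras.optimizers.schedules.PiecewiseConstantDecay(boundaries, values)` and passed to the Adam optimizer; whether the original experiments did so is not stated.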

[0064] This embodiment is based on a single labeled RGB image, so the commonly used depth-image-based gesture estimation datasets MSRA and NYU are not suitable. Therefore, this embodiment selects two ...
