Mobile inspection video quality correction method based on saliency-based multi-feature fusion

A multi-feature fusion and video quality technology, applied in digital video signal modification, television, image data processing, and related fields. It addresses the inability to evaluate and correct mobile inspection video quality, and achieves an improved user experience, high recognition accuracy, and accurate results.


Embodiment 1

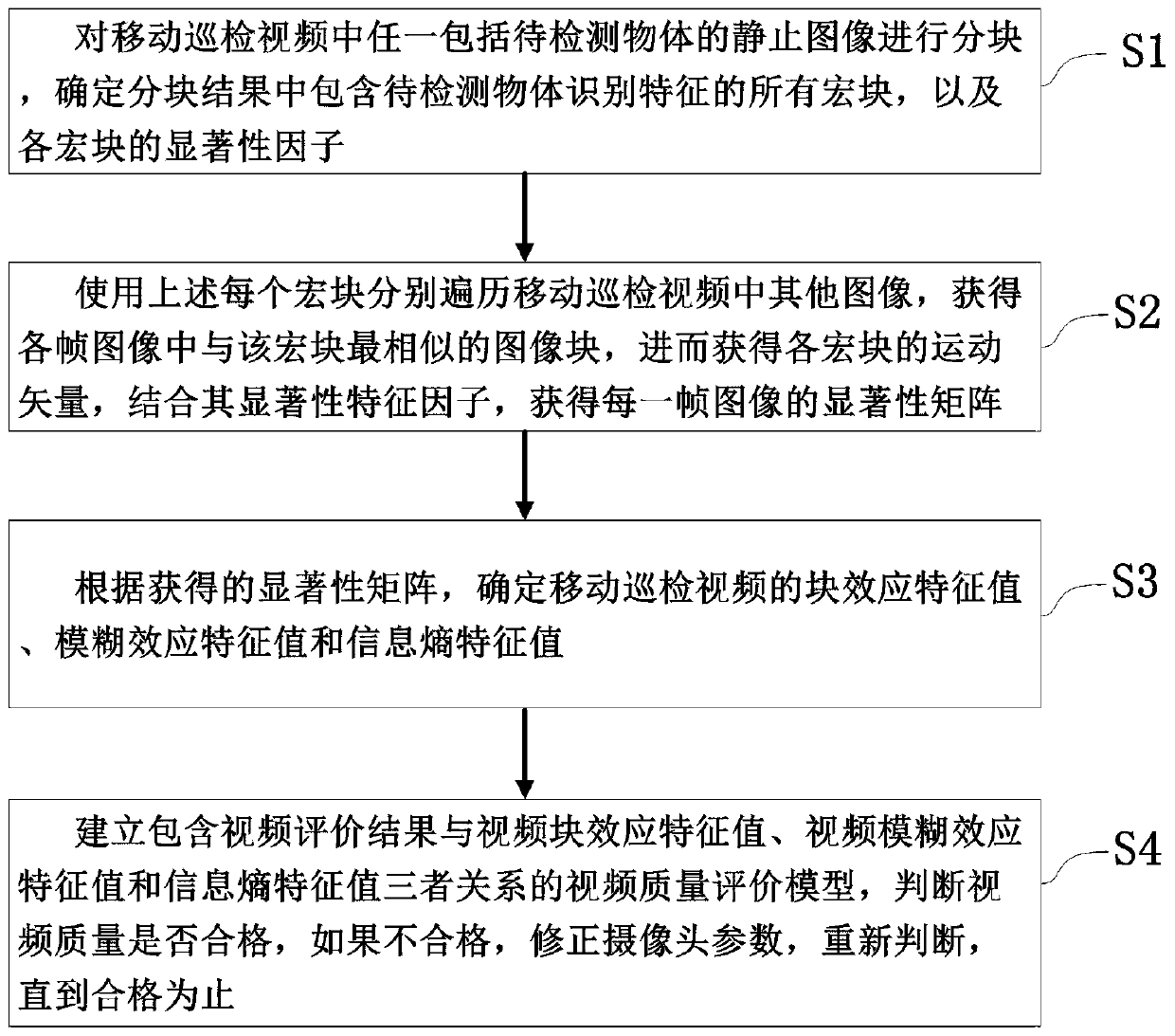

[0089] A specific embodiment of the present invention discloses a mobile inspection video quality correction method based on saliency-based multi-feature fusion. As shown in Figure 1, the method includes the following steps:

[0090] S1. Partition any still image in the mobile inspection video that contains the object to be detected into blocks. From the partition result, determine all macroblocks that contain identification features of the object to be detected, and the saliency factor of each such macroblock;
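The partitioning part of S1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function name `split_into_macroblocks`, the block size, and the choice to drop partial edge blocks are all assumptions, and the selection of macroblocks that contain identification features is not shown. Plain Python lists keep the sketch dependency-free.

```python
def split_into_macroblocks(image, block_size=16):
    """Split a 2-D grayscale image (list of rows) into square macroblocks.

    Returns a dict mapping the (top, left) pixel coordinate of each block
    to the block itself. Partial blocks at the right and bottom edges are
    dropped, which is an assumption of this sketch.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    blocks = {}
    for top in range(0, height - block_size + 1, block_size):
        for left in range(0, width - block_size + 1, block_size):
            blocks[(top, left)] = [
                row[left:left + block_size]
                for row in image[top:top + block_size]
            ]
    return blocks


# Tiny 4x6 "image" split into 2x2 blocks (hypothetical data).
frame = [[r * 6 + c for c in range(6)] for r in range(4)]
macroblocks = split_into_macroblocks(frame, block_size=2)
```

Keying each block by its top-left coordinate makes it easy to recover the block's original position when it is later matched against other frames.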

[0091] S2. Use each of the above macroblocks to traverse the other images in the mobile inspection video and find, in each frame, the image block most similar to the macroblock. From this, obtain the motion vector of each macroblock and combine it with the macroblock's saliency factor to obtain the saliency matrix of each frame;
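The search for the most similar image block in S2 resembles classical block matching. The sketch below uses an exhaustive search with the sum of absolute differences (SAD) as the similarity measure; the excerpt does not state which measure or search strategy the patent uses, so both are assumptions, as are the names `sad` and `find_motion_vector`. How the motion vector is combined with the saliency factor is not shown.

```python
def sad(block, frame, top, left):
    """Sum of absolute differences between block and the frame patch at (top, left)."""
    return sum(
        abs(block[r][c] - frame[top + r][left + c])
        for r in range(len(block))
        for c in range(len(block[0]))
    )


def find_motion_vector(block, origin, frame, search_radius=4):
    """Exhaustively search frame around origin for the patch most similar to block.

    origin is the (top, left) position of the block in the reference image.
    Returns ((dy, dx), best_sad), or None if no candidate fits in the frame.
    """
    rows, cols = len(block), len(block[0])
    height, width = len(frame), len(frame[0])
    top0, left0 = origin
    best = None
    for dy in range(-search_radius, search_radius + 1):
        for dx in range(-search_radius, search_radius + 1):
            top, left = top0 + dy, left0 + dx
            # Skip candidate positions that fall outside the frame.
            if top < 0 or left < 0 or top + rows > height or left + cols > width:
                continue
            cost = sad(block, frame, top, left)
            if best is None or cost < best[1]:
                best = ((dy, dx), cost)
    return best


# Hypothetical data: a 2x2 patch at (2, 2) moves to (3, 4) in the next frame.
patch = [[10, 20], [30, 40]]
next_frame = [[0] * 8 for _ in range(8)]
for r in range(2):
    for c in range(2):
        next_frame[3 + r][4 + c] = patch[r][c]

motion, cost = find_motion_vector(patch, (2, 2), next_frame, search_radius=3)
```

Exhaustive search is the simplest correct choice for a sketch; faster strategies such as three-step search trade accuracy for speed.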

[0092] S3. According to the obtained saliency matrix, determine the block effect feature value, blur effect feature value and information entropy feature value of the mobile inspectio...
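Of the three features named in S3, information entropy has a standard definition that can be illustrated on its own. The sketch below computes the plain Shannon entropy of an image's gray-level distribution. It is an assumption that this is the underlying quantity; the excerpt is truncated before it explains how the saliency matrix enters the calculation, so no saliency weighting is applied here. The function name `shannon_entropy` is hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(pixels):
    """Shannon entropy, in bits, of the gray-level distribution of a 2-D image."""
    values = [v for row in pixels for v in row]
    counts = Counter(values)
    total = len(values)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical 2x2 images: one flat, one with two equally likely gray levels.
flat = [[128, 128], [128, 128]]
two_level = [[0, 255], [255, 0]]
```

A flat image carries no information and has zero entropy, while an image whose gray levels are spread evenly has the highest entropy for that number of levels.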

Embodiment 2

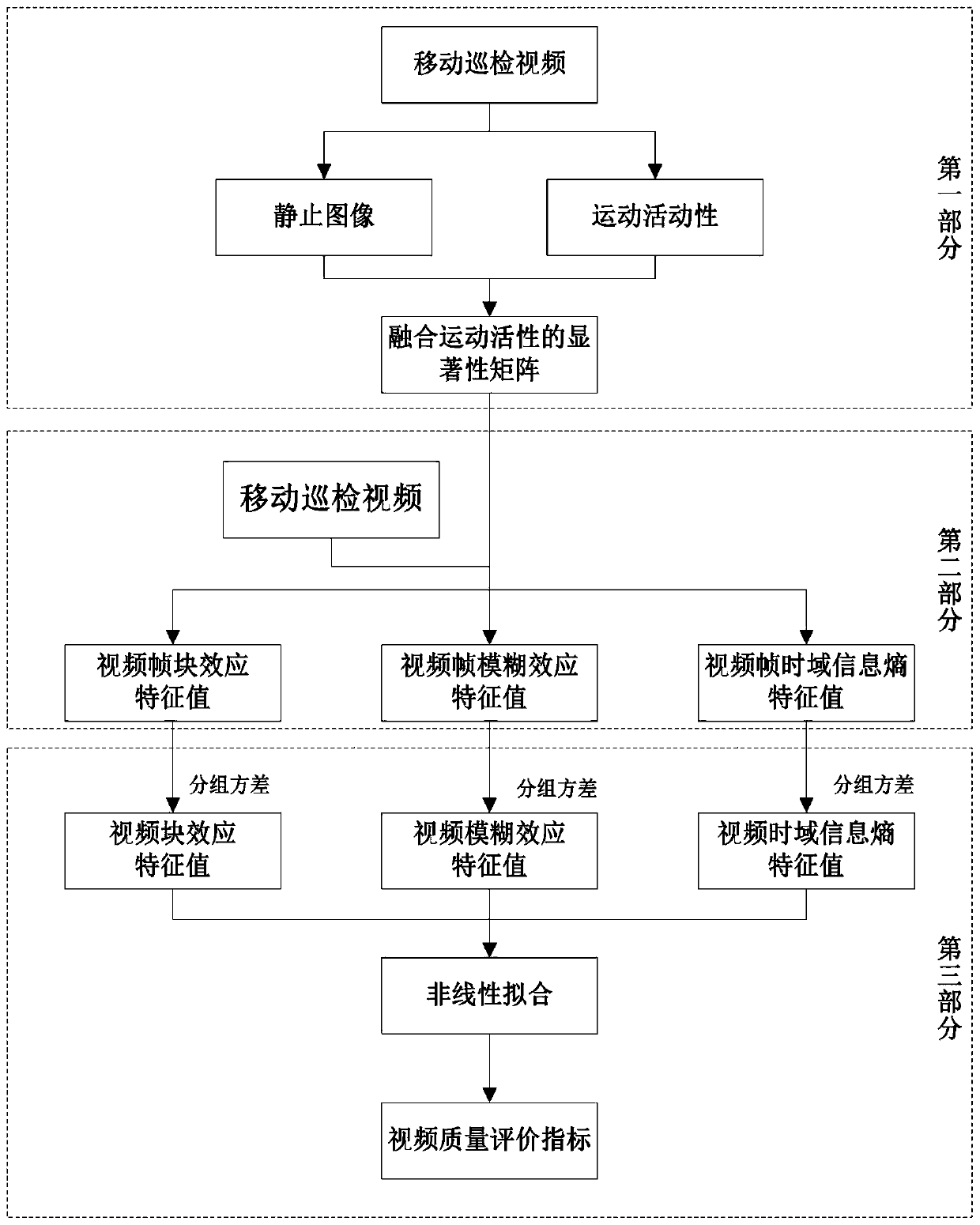

[0096] This embodiment refines Embodiment 1; the overall idea is shown in Figure 2. In step S1, the saliency factor of each macroblock can be determined by the following formula:

[0097] $$S_{SDSP}(i,j) = S_F(x) \cdot S_C(x) \cdot S_D(x) \tag{1}$$

[0098] In the formula, $S_F(x)$ is the frequency prior, $S_C(x)$ is the color prior, $S_D(x)$ is the position prior, $x$ is the pixel matrix corresponding to the macroblock, and $(i, j)$ denotes a pixel position.
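Formula (1) is a pixel-wise product of three prior maps, which can be sketched as below. The function name `combine_priors` and the sample values are hypothetical, and the sketch assumes the three priors have already been computed as maps of the same size as the macroblock.

```python
def combine_priors(freq_prior, color_prior, pos_prior):
    """Pixel-wise product of three equally sized 2-D prior maps, as in formula (1)."""
    return [
        [f * c * d for f, c, d in zip(f_row, c_row, d_row)]
        for f_row, c_row, d_row in zip(freq_prior, color_prior, pos_prior)
    ]


# Hypothetical 1x2 prior maps.
saliency = combine_priors([[0.5, 1.0]], [[0.5, 0.0]], [[1.0, 1.0]])
```

Because the combination is multiplicative, a pixel scores high only when all three priors are high; a zero in any one prior suppresses the pixel entirely.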

[0099] Specifically, $S_F(x)$ can be obtained by the following steps:

[0100] S111. Convert the macroblock to the Lab color space to obtain its three color channels: the L channel, the a channel and the b channel.
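Step S111 can be sketched with the standard sRGB to CIE L\*a\*b\* conversion. The excerpt does not say which RGB encoding or white point the patent assumes, so 8-bit sRGB input and the D65 white point are assumptions here, and the function names are hypothetical.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE L*a*b* (D65 white point assumed)."""

    def linearize(channel):
        # Undo the sRGB transfer curve to get linear light in [0, 1].
        c = channel / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    def f(t):
        delta = 6.0 / 29.0
        return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0

    rl, gl, bl = linearize(r), linearize(g), linearize(b)
    # Linear sRGB to CIE XYZ.
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    # Normalize by the D65 reference white, then map to L*, a*, b*.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def block_to_lab_channels(block):
    """Split a macroblock of (R, G, B) tuples into separate L, a and b planes."""
    lab = [[srgb_to_lab(*pixel) for pixel in row] for row in block]
    l_plane = [[p[0] for p in row] for row in lab]
    a_plane = [[p[1] for p in row] for row in lab]
    b_plane = [[p[2] for p in row] for row in lab]
    return l_plane, a_plane, b_plane


# Hypothetical 1x2 macroblock: one white pixel and one black pixel.
l_plane, a_plane, b_plane = block_to_lab_channels([[(255, 255, 255), (0, 0, 0)]])
```

In practice an image library would normally perform this conversion; the explicit version is shown only to make the three output channels concrete.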

[0101] S112. From the three color channels obtained above, combined with band-pass filtering, the frequency prior $S_F(x)$ is obtained by the following formula:

[0102]

[0103] where

[0104] $$g(x) = f(G(u)) \tag{3}$$

[0105]

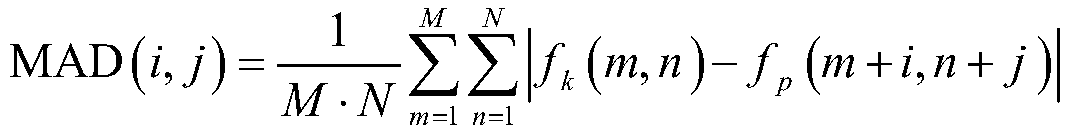

[0106] In the formula, $\mathbf{u}=(u,v)\in\mathbb{R}^2$ is the frequency domain coordinate of t...
