A sparse neural network accelerator and its implementation method

A sparse neural network accelerator and its implementation method, applied in the field of neural network accelerators. The technology addresses the problem of large memory power consumption and achieves the effect of reducing the total size.

Embodiment Construction

[0041] To illustrate the technical solutions in the embodiments of the present invention or in the prior art more clearly, the drawings used in describing the embodiments or the prior art are briefly introduced below. The drawings described below show only some embodiments of the invention; those skilled in the art can obtain other drawings from them without creative effort.

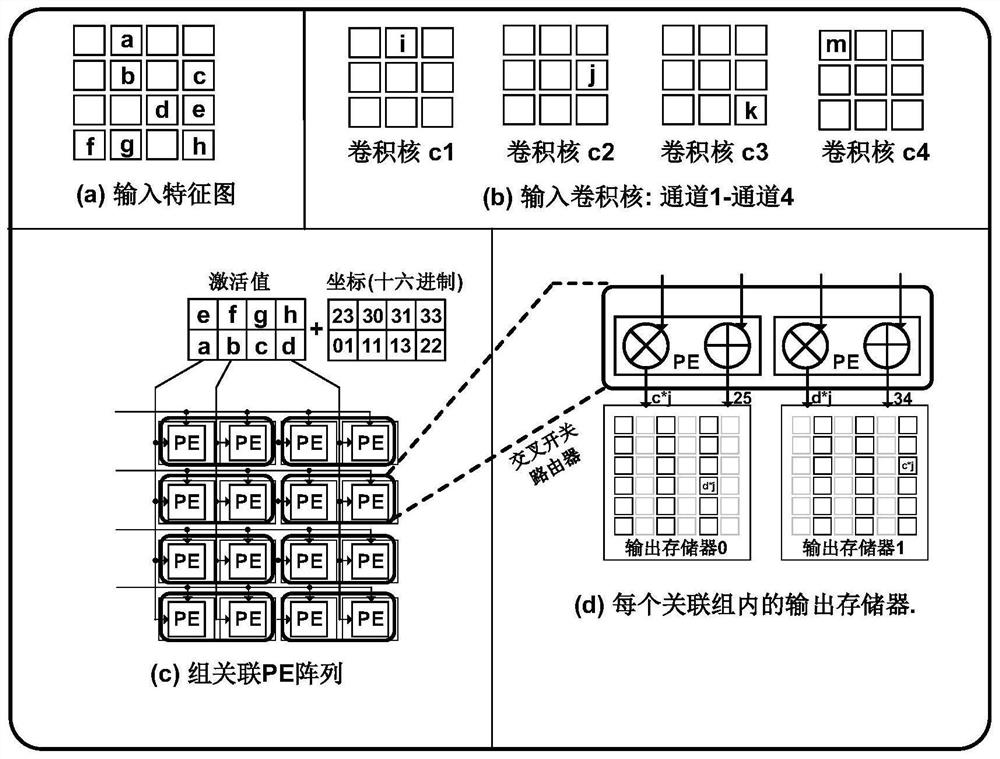

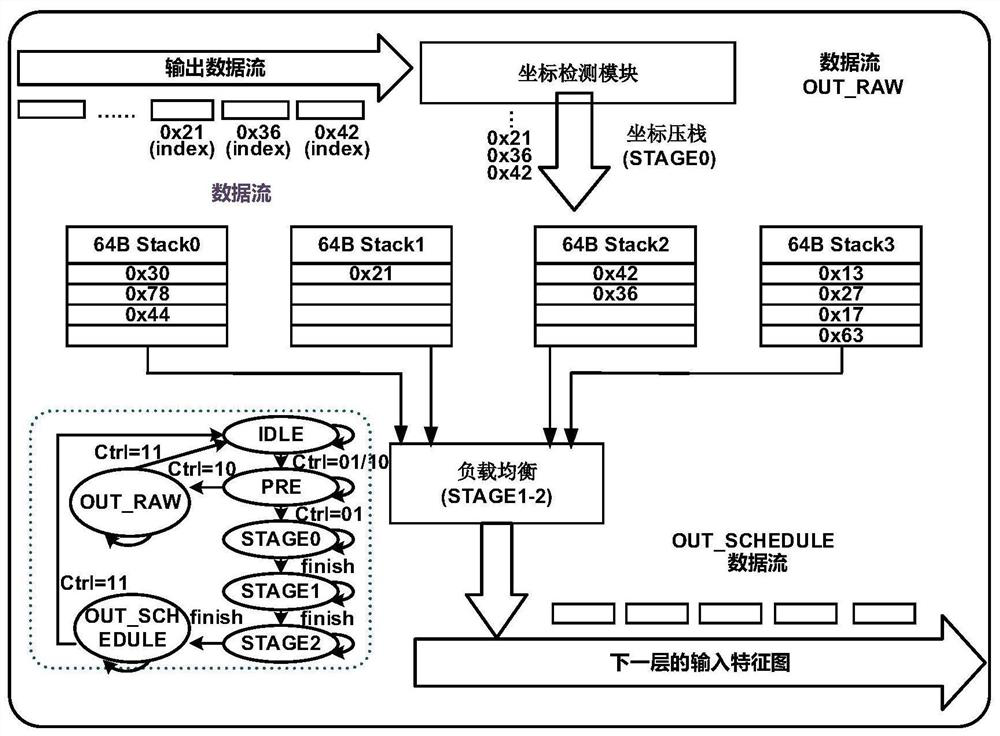

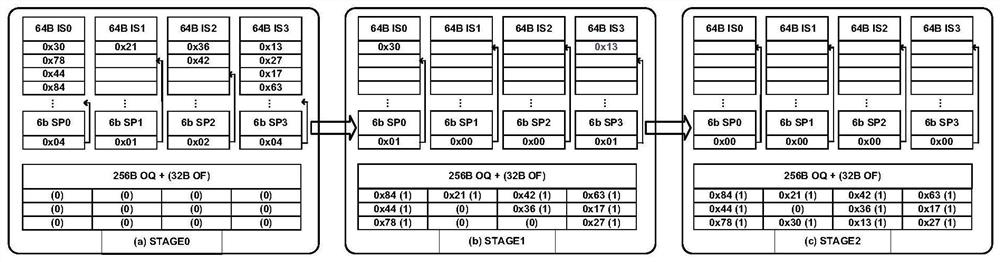

[0042] In one embodiment of the present invention, a sparse neural network accelerator is provided. As shown in Figure 1, the sparse neural network accelerator provided by this embodiment includes a PE array and output memories. The PE array is divided into multiple PE groups, and each PE group together with its corresponding output memories forms an association group. The number of PE units in each association group is equal to the number of output memories in that group. For each PE unit in any association group,...
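The grouping described in [0042] can be sketched in a few lines of Python. This is only an illustrative model, not the patent's implementation: the names `AssociationGroup` and `build_association_groups`, the `group_size` parameter, and the numbering of PEs and memories are all assumptions made for the example. It shows only the stated constraint that each association group holds as many output memories as PE units.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AssociationGroup:
    """Hypothetical model of one association group: PE units plus output memories."""
    pe_ids: List[int]
    memory_ids: List[int]


def build_association_groups(num_pes: int, group_size: int) -> List[AssociationGroup]:
    """Split a PE array into equal-sized groups, pairing each group with
    the same number of output memories (the constraint stated in [0042]).

    Assumption: PEs and memories are simply numbered 0..num_pes-1; the
    source does not specify an indexing scheme.
    """
    if group_size <= 0 or num_pes % group_size != 0:
        raise ValueError("num_pes must be a positive multiple of group_size")

    groups = []
    for start in range(0, num_pes, group_size):
        ids = list(range(start, start + group_size))
        # One output memory per PE unit in the group, so the counts match.
        groups.append(AssociationGroup(pe_ids=ids, memory_ids=list(ids)))
    return groups


# Example: an 8-PE array split into two association groups of four.
groups = build_association_groups(num_pes=8, group_size=4)
```

How each PE unit connects to the memories inside its group is not modeled here, because the source text is truncated before it describes that relationship.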
