A device and method for executing LSTM operations

A device built from computing modules, applied in the field of artificial neural networks, that addresses problems such as high power consumption, the lack of support for multi-layer artificial neural network computation, and off-chip bandwidth becoming a performance bottleneck.

Embodiment Construction

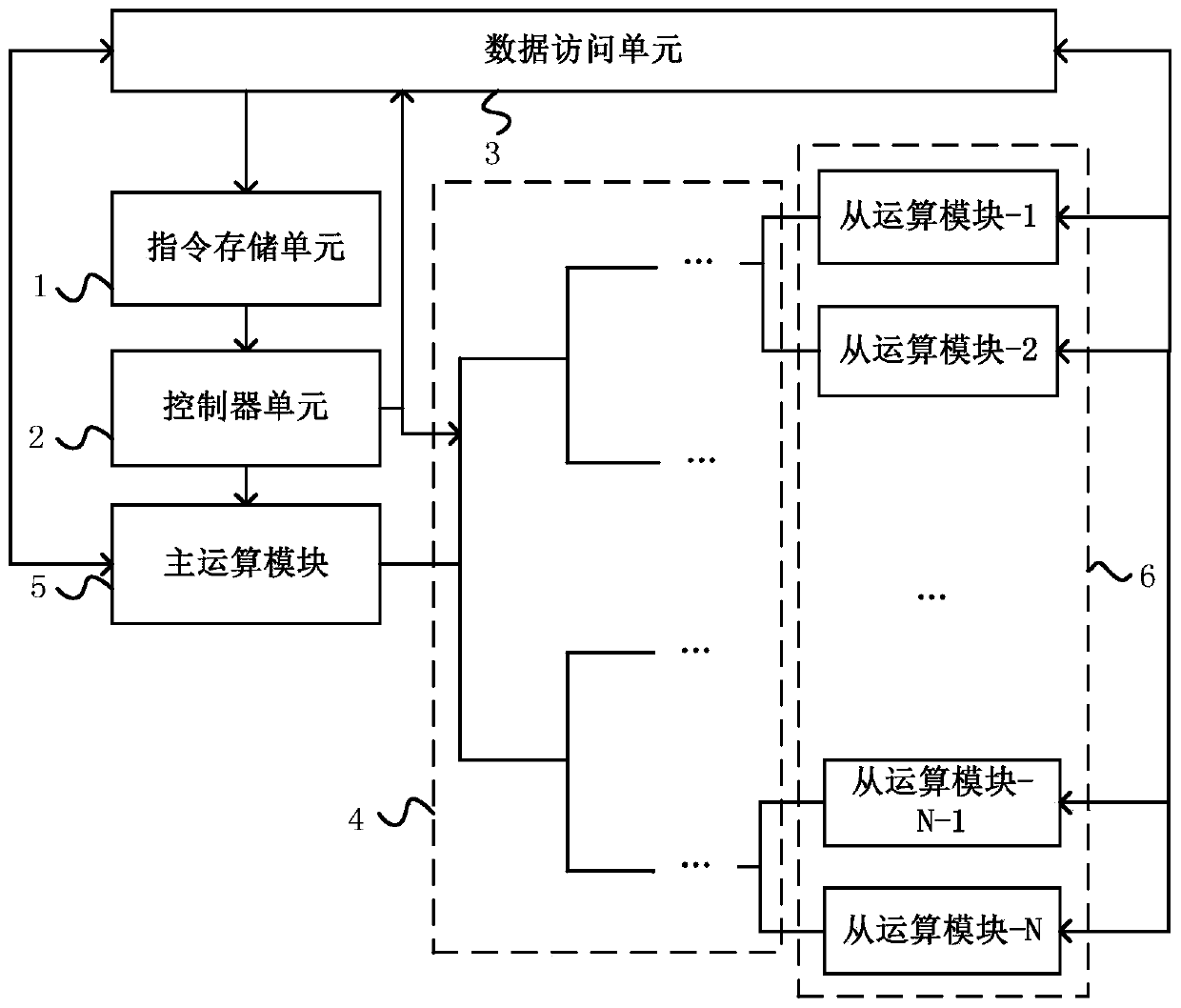

[0018] Figure 1 shows a schematic diagram of the overall structure of the device for performing recurrent neural network and LSTM operations according to an embodiment of the present invention. As shown in Figure 1, the device includes an instruction storage unit 1, a controller unit 2, a data access unit 3, an interconnection module 4, a master computing module 5, and a plurality of slave computing modules 6. The instruction storage unit 1, the controller unit 2, the data access unit 3, the interconnection module 4, the master computing module 5, and the slave computing modules 6 can all be implemented as hardware circuits (including but not limited to FPGAs, CGRAs, application-specific integrated circuits (ASICs), analog circuits, and memristors).
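To make the roles of the master module, the slave modules, and the interconnection module more concrete, the following is a minimal software sketch of one possible division of labor for a single LSTM gate. The text above names the modules but does not say how work is split among them, so everything here is an assumption for illustration: the class and function names (`SlaveModule`, `MasterModule`, `split_rows`, `interconnect_gather`) are hypothetical, the row-wise partitioning of the weight matrix is assumed, and the sigmoid activation is simply the standard choice for LSTM gates.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class SlaveModule:
    """Hypothetical slave: holds an assumed slice of the gate's weight rows."""
    weight_rows: List[List[float]]

    def compute(self, x: List[float]) -> List[float]:
        # One dot product per weight row held by this slave.
        return [sum(w * v for w, v in zip(row, x)) for row in self.weight_rows]


def interconnect_gather(partials: List[List[float]]) -> List[float]:
    """Assumed interconnect behavior: concatenate partial results in slave order."""
    return [y for part in partials for y in part]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def split_rows(weights: List[List[float]], n_slaves: int) -> List[SlaveModule]:
    """Split weight rows into contiguous chunks, creating at most n_slaves slaves."""
    chunk = math.ceil(len(weights) / n_slaves)
    return [SlaveModule(weights[i:i + chunk]) for i in range(0, len(weights), chunk)]


class MasterModule:
    """Hypothetical master: sends the input to every slave, then finishes the gate."""

    def __init__(self, slaves: List[SlaveModule]):
        self.slaves = slaves

    def gate(self, x: List[float], bias: List[float]) -> List[float]:
        partials = [s.compute(x) for s in self.slaves]   # same input to all slaves
        pre_activation = interconnect_gather(partials)   # merged by the interconnect
        return [sigmoid(p + b) for p, b in zip(pre_activation, bias)]


if __name__ == "__main__":
    weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
    master = MasterModule(split_rows(weights, n_slaves=2))
    print(master.gate([0.5, -0.5], bias=[0.0, 0.0, 0.0, 0.0]))
```

In this sketch the slaves work independently on disjoint rows, so the only shared data is the input vector going out and the partial results coming back. A real device would also need the controller, instruction storage, and data access units, which are not modeled here.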

[0019] The instruction storage unit 1 reads instructions through the data access unit 3 and caches them. The instruction storage unit 1 can be implemented with various storage devices (SRAM, DRAM, eDRAM, memris...

