Deep learning accelerator for accelerating BERT neural network operation

A neural network and deep learning technology, applied in the field of deep learning accelerators, that addresses the problem of wasted storage units and reduces the required storage space, data interaction, computing time, and power consumption.

Embodiment Construction

[0021] The invention is described more fully below with reference to the examples shown in the figures. These examples provide preferred embodiments, but the invention should not be considered limited to the embodiments set forth herein.

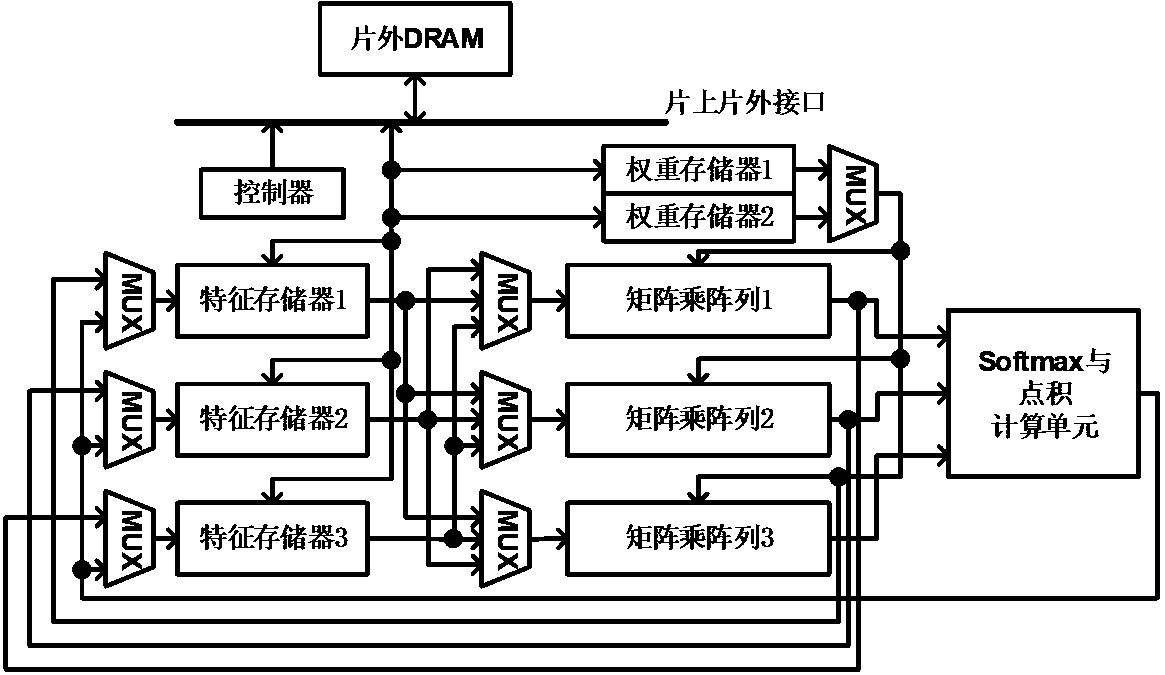

[0022] The embodiment is a deep learning accelerator for accelerating BERT neural network operations. Figure 1 is its top-level circuit block diagram.

[0023] The accelerator includes three feature-value memories, two weight memories, three matrix multiplication arrays, a Softmax and dot product calculation unit, a controller, and on-chip and off-chip interfaces.
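As a quick reference, the block inventory in this paragraph can be written down as a small Python record. This is only an illustrative sketch: the class name `AcceleratorBlocks`, its field names, and the helper `memory_count` are hypothetical and do not come from the patent. Only the counts are taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorBlocks:
    """Hypothetical inventory of the top-level blocks listed in [0023]."""

    feature_memories: int = 3    # memories holding feature values (activations)
    weight_memories: int = 2     # memories holding network weights
    matmul_arrays: int = 3       # matrix multiplication arrays
    softmax_dot_units: int = 1   # Softmax and dot product calculation unit
    controllers: int = 1         # controller

    def memory_count(self) -> int:
        """Total number of on-chip memories named in the text."""
        return self.feature_memories + self.weight_memories


blocks = AcceleratorBlocks()
print(blocks.memory_count())
```

The on-chip and off-chip interfaces are left out of the record because the text does not say how many there are.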

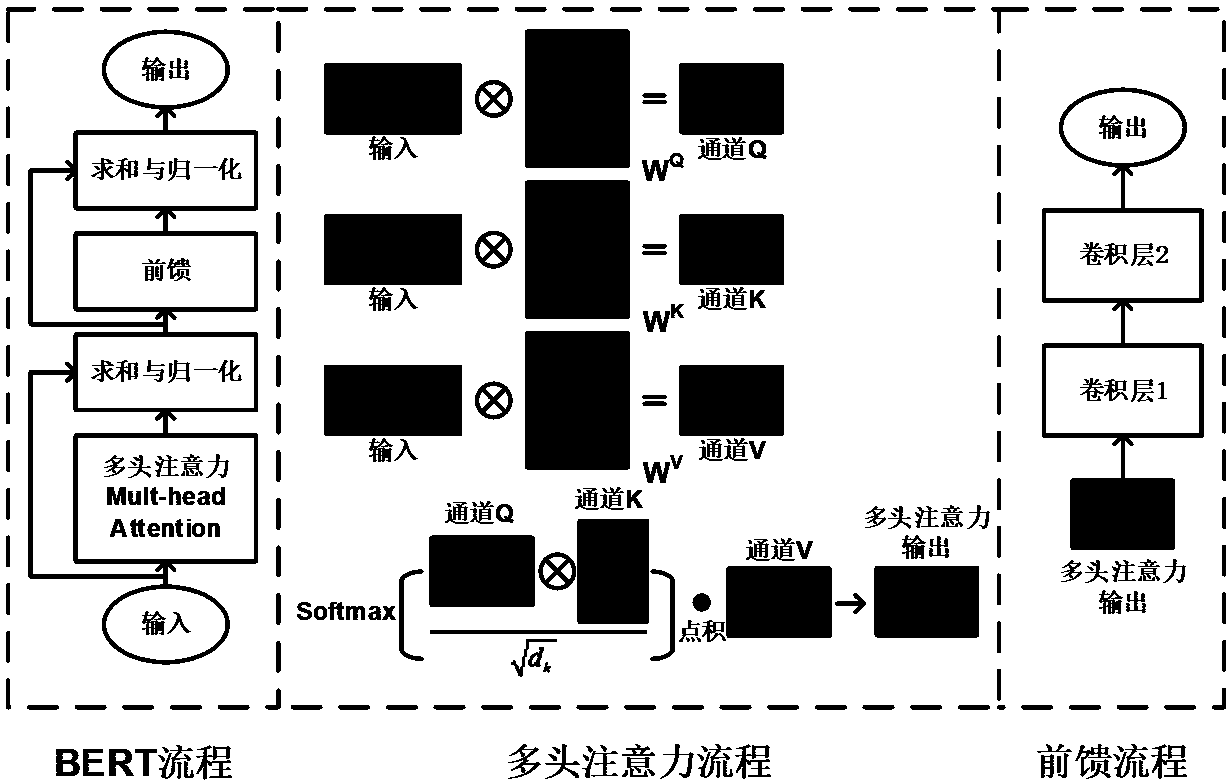

[0024] Figure 2 is the calculation flowchart of the accelerated BERT neural network. It mainly includes two parts: the multi-head attention branch neural network structure and the feed-forward end-to-end neural network structure. When calculating the multi-head attention neural network, the same input is matrix-multiplied with the weights of each of the three branches, and the output feature values obta...
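The three-branch step described here can be sketched in plain Python. The paragraph is truncated, so only the first step (one input multiplied by three separate weight matrices) comes from the text. The remainder of the function follows the standard scaled dot-product attention used in BERT and is an assumption about what the elided text describes. The names `matmul`, `softmax_rows`, `attention_head`, `w_q`, `w_k`, and `w_v` are illustrative, not taken from the patent, and the sketch models the arithmetic only, not the hardware dataflow.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(m):
    """Apply a numerically stable softmax to each row of a matrix."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention_head(x, w_q, w_k, w_v):
    """One attention head: three projections of the same input, then attention."""
    # Step from the text: the same input is multiplied by three branch weights.
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    v = matmul(x, w_v)
    # Assumed continuation: standard scaled dot-product attention.
    d_k = len(q[0])
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    return matmul(softmax_rows(scores), v)


# Usage with a 2-token input and identity weights (toy values).
identity = [[1.0, 0.0], [0.0, 1.0]]
result = attention_head(identity, identity, identity, identity)
print(result)
```

Because the three projections share one input, they are independent of each other, which is consistent with an accelerator that provides three matrix multiplication arrays. Whether each array maps to one branch is not stated in the visible text.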
