Large-scale image retrieval method for deep strong correlation hash learning

An image retrieval technique based on deep strong correlation hash learning, applied in the field of image processing. It addresses the increased computing overhead that makes existing approaches unsuitable for large-scale image retrieval, and it achieves fast computation, efficient large-scale image retrieval, and protection against overfitting.

Examples

Embodiment 1

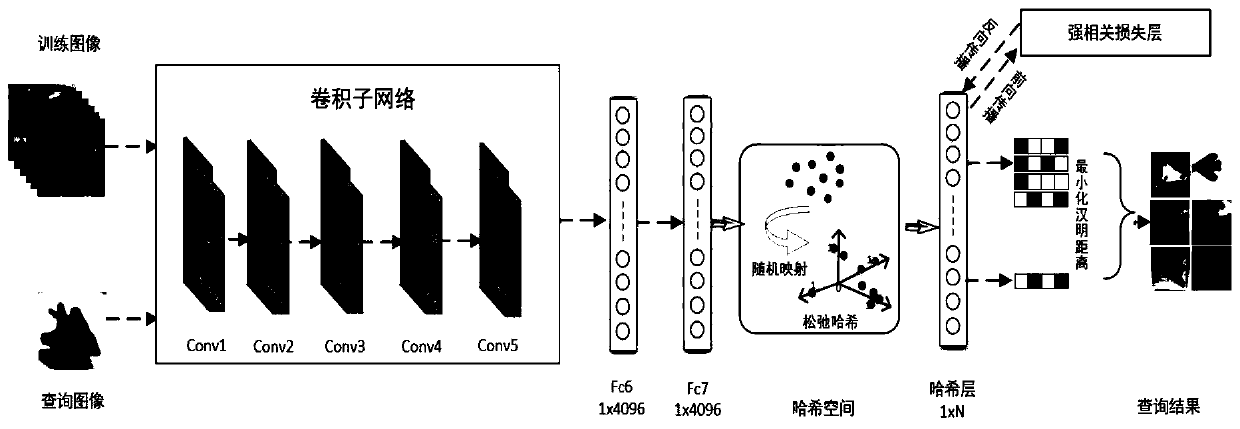

[0035] Embodiment 1: As shown in Figures 1-4, the specific steps of the large-scale image retrieval method based on deep strong correlation hash learning are as follows:

[0036] Step 1. Extract data from the image data set to form the training image data, then preprocess the images. Each input image passes through the convolutional sub-network, which maps the image information into the feature space to obtain a local feature representation;

[0037] Step 2. The fully connected layer then maps the local feature representation obtained from the preceding layer into the sample label space, and the result enters the hash layer for dimensionality reduction and hash coding;

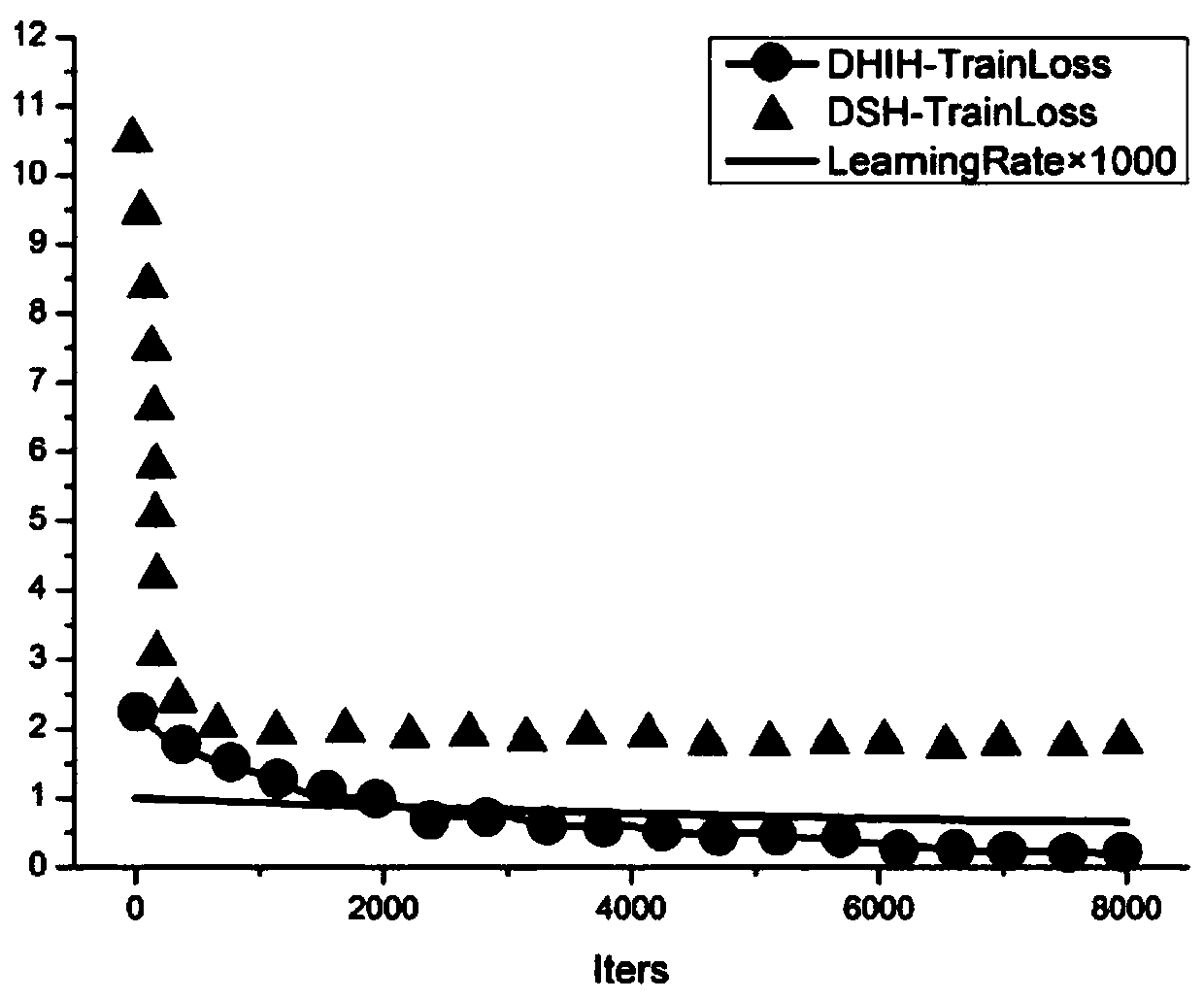

[0038] Step 3. The output then enters the strong correlation loss layer, where the strong correlation loss function computes the loss value of the current iteration; finally, the loss value is returned and the network parameters are updated a...
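The hash coding of Step 2 can be illustrated with a minimal sketch. It assumes, as is common in deep hashing but not stated in this text, that the hash layer is a linear projection followed by a sigmoid and that bits are obtained by thresholding at 0.5. The names `hash_layer` and `binarize`, the dimensions `D` and `N`, and the random weights are all hypothetical. The strong correlation loss of Step 3 is not sketched, because its formula does not appear in this excerpt.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def hash_layer(features, weights, bias):
    """Project a D-dim feature vector to N relaxed bits in (0, 1).

    Assumption: linear projection + sigmoid; the patent text only says
    the hash layer performs dimensionality reduction and hash coding.
    """
    return [
        sigmoid(sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(weights, bias)
    ]


def binarize(activations, threshold=0.5):
    """Quantize relaxed activations to a binary hash code (assumed threshold)."""
    return [1 if a >= threshold else 0 for a in activations]


random.seed(0)
D, N = 8, 4  # hypothetical feature dimension and hash code length
features = [random.gauss(0, 1) for _ in range(D)]  # stands in for the FC output
weights = [[random.gauss(0, 1) for _ in range(D)] for _ in range(N)]
bias = [0.0] * N

relaxed = hash_layer(features, weights, bias)
code = binarize(relaxed)
print(code)
```

During training the relaxed activations would feed the loss layer; only at retrieval time would the thresholded code be stored and compared.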

Embodiment 2

[0063] Embodiment 2: As shown in Figures 1-4, the specific steps of the large-scale image retrieval method based on deep strong correlation hash learning are as follows:

[0064] This embodiment is the same as Embodiment 1, except for the following:

[0065] The model trained in Step 3 of this embodiment uses AlexNet; the deep strong correlation hash learning method is applied to AlexNet to obtain a deep strong correlation hash model.

[0066] In Steps 1 and 2, the configuration of the convolutional sub-network, the fully connected layers, and the hash layer is shown in Table 1, where Hashing denotes the hash layer and N is the number of hash code bits.

[0067] Table 1. Network structure of the AlexNet-based strong correlation hash learning model

[0068]

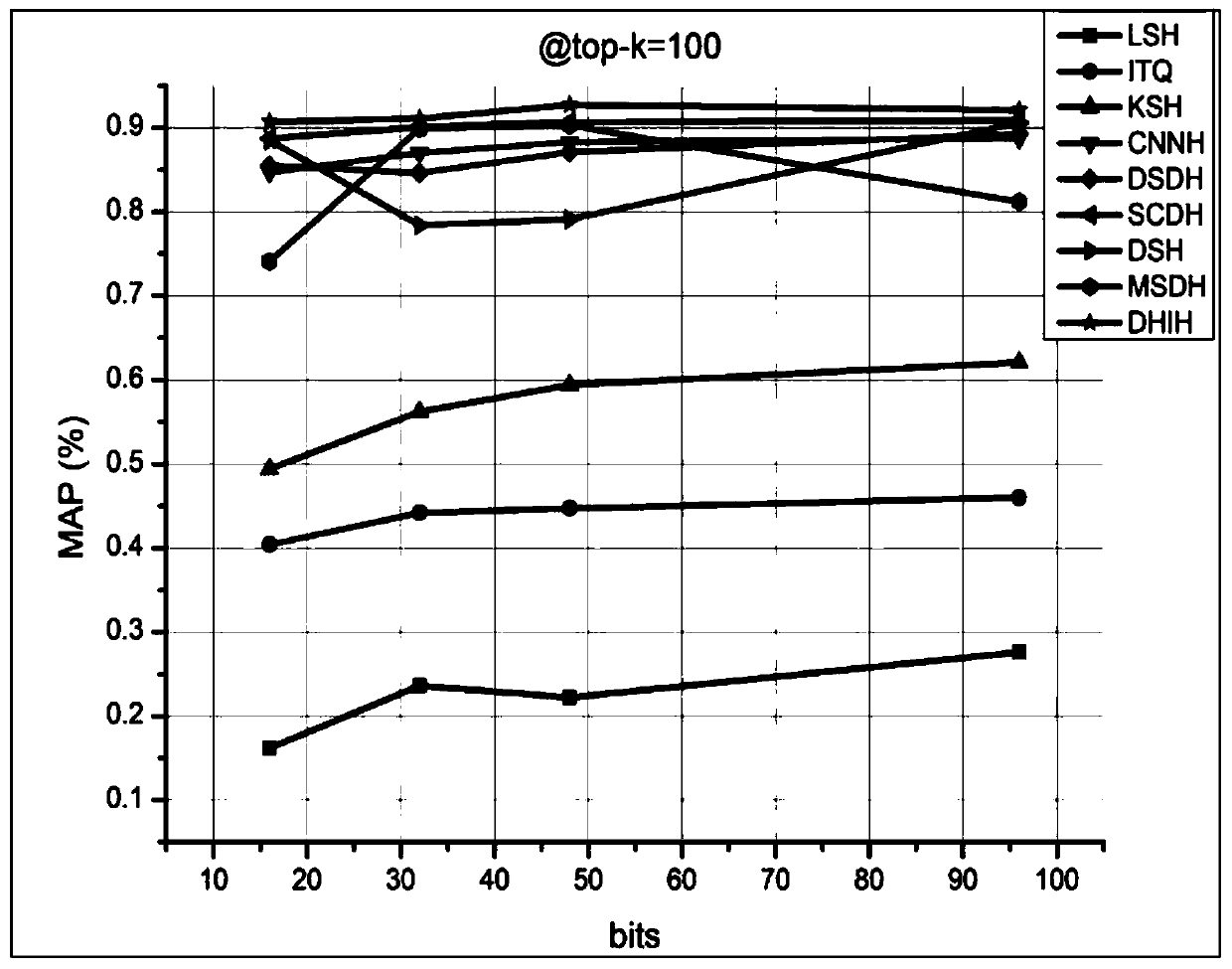

[0069] Further, the method of this embodiment and the comparison method use a unified network structure, as shown in Table 1. The model uses the pre-t...

Embodiment 3

[0071] Embodiment 3: As shown in Figures 1-4, the specific steps of the large-scale image retrieval method based on deep strong correlation hash learning are as follows:

[0072] This embodiment is the same as Embodiment 1, except for the following:

[0073] The model trained in Step 3 of this embodiment uses Vgg16NET; the deep strong correlation hash learning method is applied to Vgg16NET to obtain a deep strong correlation hash model.

[0074] In Step 2, since Vgg16 cannot output a hash code, the output of the second fully connected layer of Vgg16 (a matrix of dimension 1×4096) is extracted and used for retrieval.

[0075] In Step 4, top-q=100 is used for retrieval, and Vgg16NET uses Euclidean distance to calculate similarity. The experimental results are shown in Table 2. Bits is the number of bits in the current output matrix; time is the time it takes to calculate the similarity and return the fir...
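The two retrieval modes compared in this embodiment can be sketched as follows. This is an illustration only: it assumes that hash codes are compared by Hamming distance (the excerpt names only the Euclidean distance used for Vgg16NET), and the helper names `hamming`, `euclidean`, and `top_q`, as well as the toy data, are hypothetical.

```python
import heapq
import math


def hamming(a: int, b: int) -> int:
    """Hamming distance between two hash codes packed into integers."""
    return bin(a ^ b).count("1")


def euclidean(u, v) -> float:
    """Euclidean distance between two real-valued feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


def top_q(query, database, distance, q=100):
    """Return the indices of the q database items closest to the query."""
    return heapq.nsmallest(
        q, range(len(database)), key=lambda i: distance(query, database[i])
    )


# Binary hash codes (toy 4-bit examples) ranked by Hamming distance.
codes = [0b1010, 0b1011, 0b0101, 0b1000]
nearest_codes = top_q(0b1010, codes, hamming, q=2)

# Real-valued features (toy 2-d stand-ins for 4096-d vectors) ranked by
# Euclidean distance.
feats = [[0.0, 0.0], [3.0, 4.0], [1.0, 0.0]]
nearest_feats = top_q([0.0, 0.0], feats, euclidean, q=2)

print(nearest_codes, nearest_feats)
```

The Hamming comparison reduces to an XOR and a bit count per item, whereas the Euclidean comparison touches every one of the 4096 dimensions, which is the kind of cost difference the time column would be expected to reflect.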
