Underwater robot parameter adaptive backstepping control method based on double-BP neural network Q learning technology

The invention relates to BP neural networks and underwater robot technology, applied in the fields of adaptive control, general control systems, and control/regulation systems. It addresses the problems of low learning efficiency and the difficulty of adjusting controller parameters online in real time.


Examples

Specific Embodiment 1

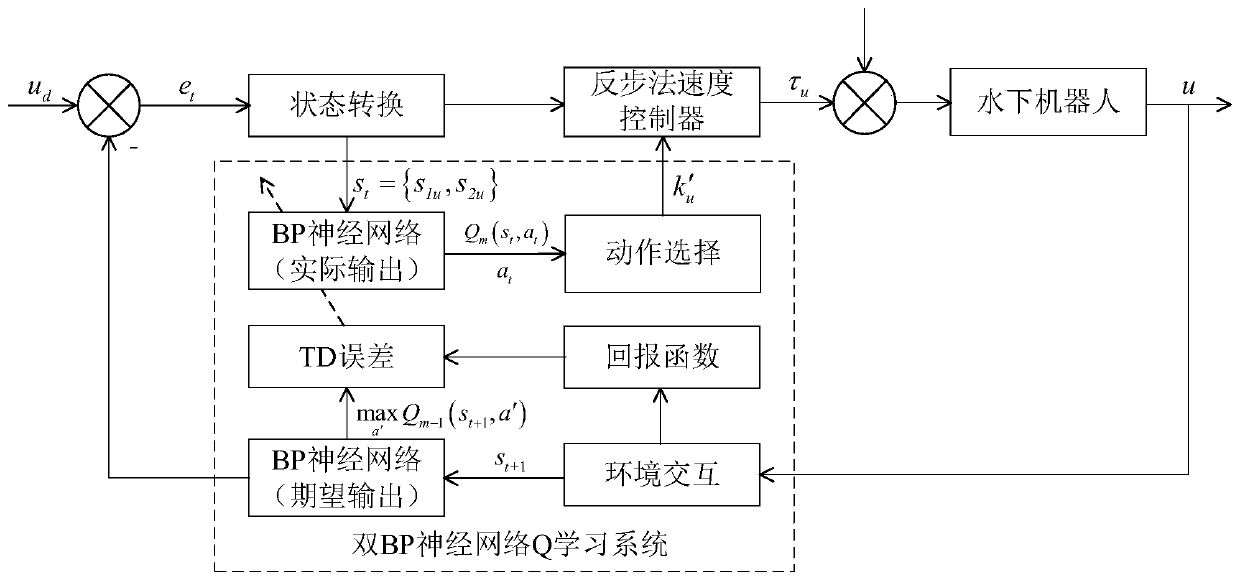

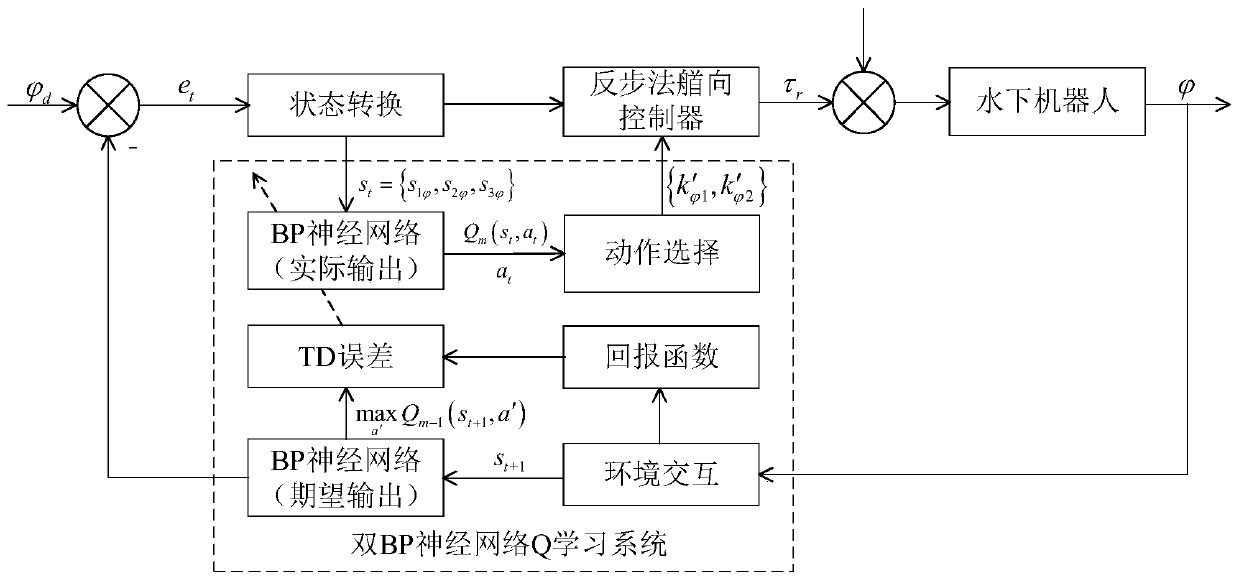

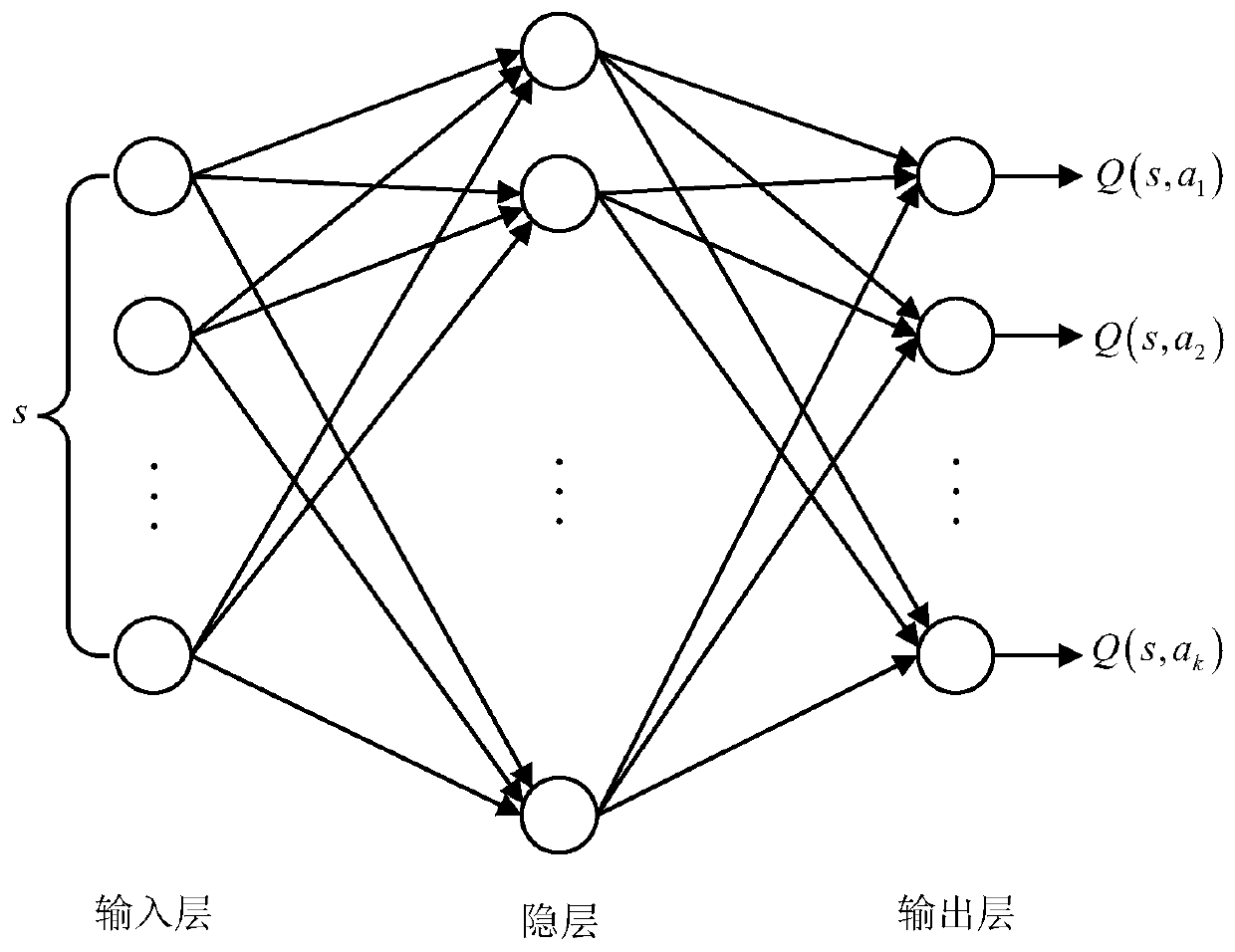

[0052] Specific Embodiment 1: The parameter-adaptive backstepping control method for an underwater robot based on dual BP neural network Q-learning described in this embodiment comprises the following steps:

[0053] Step 1: Using the backstepping method, design the speed control system and the heading control system of the underwater robot separately, then derive the control law of each system from its design;

[0054] The speed control system of the underwater robot is shown in formula (1):

[0055]

[0056] where $m$ is the mass of the underwater robot; the hydrodynamic parameters, including $X_{u|u|}$, are all dimensionless; $u$ is the longitudinal velocity of the underwater robot and $|u|$ is its absolute value; $\dot{u}$ is the longitudinal acceleration of the underwater robot; $\tau_u$ is the longitudinal thrust of the propeller; $v$ is the lateral speed of the ...

Specific Embodiment 2

[0110] Specific Embodiment 2: This embodiment differs from Specific Embodiment 1 in that, in Step 2, the output is the action value set $k'_u$, and the ε-greedy strategy is then used to select from $k'_u$ the optimal action value for the current state vector. The specific process is:

[0111] Define the action space to be discretized as $k'_{u0}$, with $k'_{u0} \in [-1, 2]$. Dividing $k'_{u0}$ at intervals of 0.2 yields 16 action values, which form the action value set $k'_u$. The ε-greedy strategy is then used to select from $k'_u$ the optimal action value $k''_u$ for the current state vector.

[0112] Action value set $k'_u = \{-1, -0.8, -0.6, -0.4, -0.2, \ldots, 1.4, 1.6, 1.8, 2\}$.
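The discretization in [0111] and [0112] can be checked with a short sketch. The function name `build_action_set` and its parameter names are illustrative assumptions, not taken from the patent; only the interval [-1, 2] and the 0.2 spacing come from the text.

```python
import numpy as np

def build_action_set(low=-1.0, high=2.0, step=0.2):
    """Discretize [low, high] into evenly spaced action values (hypothetical helper)."""
    # Number of grid points, counting both endpoints.
    n = int(round((high - low) / step)) + 1
    # linspace avoids the floating-point drift of repeated addition;
    # rounding removes residual representation noise such as -0.19999999.
    return np.round(np.linspace(low, high, n), 10)

actions = build_action_set()
print(len(actions))  # 16
print(actions[0], actions[-1])
```

Counting both endpoints, (2 - (-1)) / 0.2 + 1 gives the 16 values stated in the text.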

[0113] The reinforcement-learning-based adaptive backstepping speed controller and heading controller select actions with the ε-greedy strategy, ε ∈ (0,1). Here ε=0 means pure exploration and ε=1 means pure exploitation, so its value is betwee...
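A minimal sketch of ε-greedy selection under the convention stated in [0113], where ε=0 is pure exploration and ε=1 is pure exploitation, so ε acts as the probability of taking the greedy action. Many textbooks use the opposite convention, with ε as the exploration probability. The function `select_action`, the list `q_values` (one estimated Q value per action, as the network would output), and the sample numbers are assumptions for illustration only.

```python
import random

def select_action(q_values, actions, epsilon, rng=random):
    """Return one action value; epsilon is the probability of exploiting.

    Hypothetical helper following the convention in the text:
    epsilon = 0 -> always explore, epsilon = 1 -> always exploit.
    """
    if len(q_values) != len(actions):
        raise ValueError("q_values and actions must have the same length")
    if rng.random() < epsilon:
        # Exploit: pick the action with the largest estimated Q value.
        best = max(range(len(actions)), key=lambda i: q_values[i])
        return actions[best]
    # Explore: pick uniformly at random from the action set.
    return rng.choice(actions)

# The 16 action values from -1 to 2 in steps of 0.2.
actions = [round(-1 + 0.2 * i, 1) for i in range(16)]
q_values = [0.0] * 16
q_values[3] = 5.0  # made-up Q value so that index 3 is the greedy choice

greedy = select_action(q_values, actions, epsilon=1.0)
explored = select_action(q_values, actions, epsilon=0.0)
```

Because `rng.random()` returns a value in [0, 1), `epsilon=1.0` always takes the greedy branch and `epsilon=0.0` never does.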

Specific Embodiment 3

[0114] Specific Embodiment 3: This embodiment differs from Specific Embodiment 1 in that, in Step 3, the first current BP neural network selects the optimal action $a_t$ in the current state $S_t$, and the reward obtained after executing it is $r_{t+1}(S_{t+1}, a)$, given by:

[0115] $r_{t+1}(S_{t+1}, a) = c_1 \cdot S_{1u}^2 + c_2 \cdot S_{2u}^2 \qquad (13)$

[0116] where $c_1$ and $c_2$ are both positive numbers.

[0117] The reward function has a clear purpose: it evaluates the performance of the controller. A controller is usually judged by its stability, accuracy, and speed of response. It should reach the expected value quickly and accurately; on the response curve, this means a fast rise with small overshoot and little oscillation. $c_1$ and $c_2$ are both positive numbers, which respectively represent the proportion of the influence of the deviation and the...
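Formula (13) can be written as a small function. This is a sketch only: the name `reward`, the argument names `s1u` and `s2u` (standing for the two state components in the formula), and the default weights are assumptions, since the text gives no numerical values for the weights.

```python
def reward(s1u, s2u, c1=1.0, c2=0.1):
    """Evaluate formula (13): r = c1 * s1u^2 + c2 * s2u^2.

    The default weights are placeholders, not values from the patent.
    """
    if c1 <= 0 or c2 <= 0:
        # The text requires both weights to be positive.
        raise ValueError("c1 and c2 must be positive")
    return c1 * s1u ** 2 + c2 * s2u ** 2

r = reward(2.0, 3.0, c1=1.0, c2=0.5)
print(r)  # 8.5
```

Raising one weight relative to the other makes the corresponding state component count for more in the evaluation, which is the weighting role the text assigns to the two coefficients.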
