Humanoid robot motion control method and system based on deep reinforcement learning

The technology relates to robot motion and humanoid robots, specifically to humanoid robot motion control based on deep reinforcement learning. It addresses slow training, poor disturbance rejection, and difficult parameter tuning, with the effects of improving stability and reliability, increasing learning speed, and improving training efficiency.


Detailed Description of the Embodiments

[0032] The present invention will be further described below in conjunction with the accompanying drawings and specific preferred embodiments, but the protection scope of the present invention is not limited thereby.

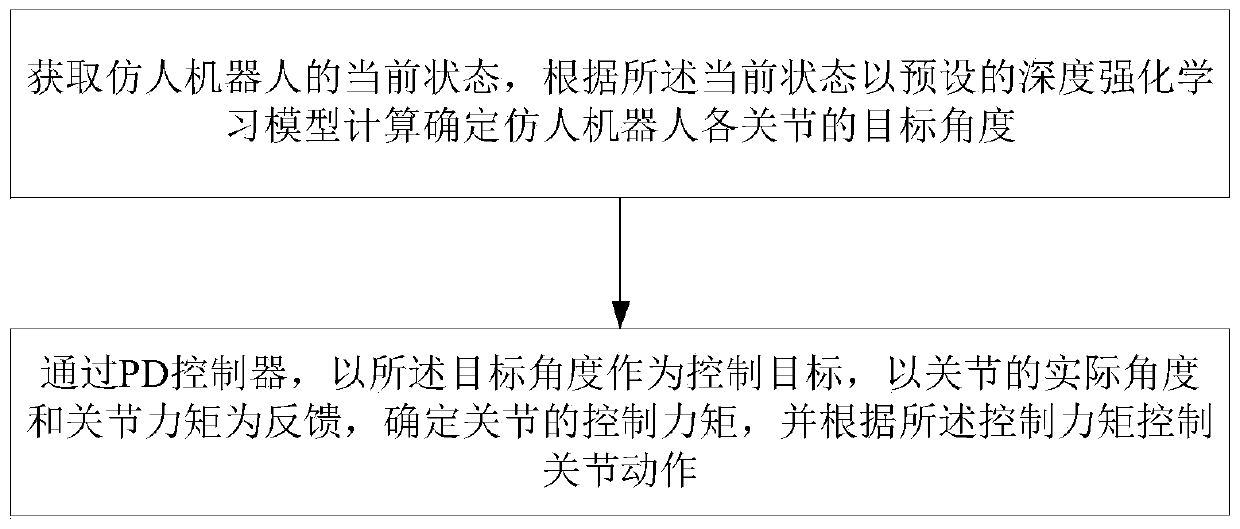

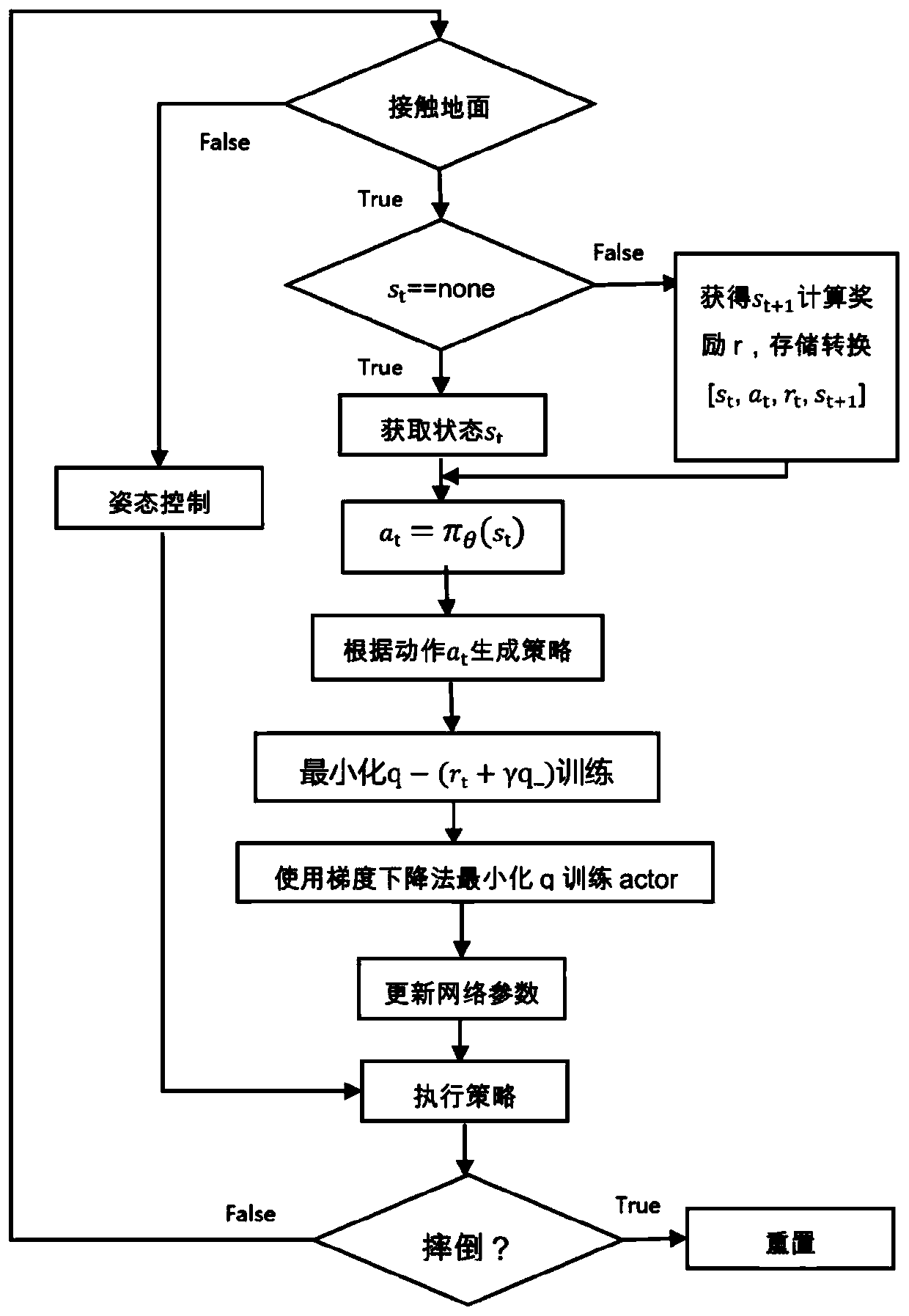

[0033] As shown in Figure 1, the humanoid robot motion control method based on deep reinforcement learning in this embodiment includes: S1. Simulation control: obtain the current state of the humanoid robot, and use a preset deep reinforcement learning model to calculate, from that current state, the target angle of each joint of the humanoid robot; S2. PD control: a PD controller takes the target angle as the control target and uses the actual joint angle and the joint torque as feedback to determine the control torque of each joint, and the joint is driven according to that control torque.
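The two-stage structure of S1 and S2 can be illustrated with a minimal sketch. It assumes a standard joint-space PD law, tau = Kp·(q_target − q) − Kd·q_dot, with a symmetric torque limit. The names `PDGains`, `pd_torque`, and `control_step`, the gain values, and the torque limit are all hypothetical. The trained deep reinforcement learning model is replaced by an arbitrary callable `policy`. The source lists joint torque as a feedback signal but this excerpt does not say how it enters the control law, so the sketch uses joint angular velocity for the derivative term, as is common for PD joint control. This is an illustration under those assumptions, not the patented implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class PDGains:
    kp: float       # proportional gain (hypothetical value)
    kd: float       # derivative gain (hypothetical value)
    tau_max: float  # symmetric torque limit per joint (hypothetical value)


def pd_torque(q_target: Sequence[float], q: Sequence[float],
              q_dot: Sequence[float], gains: PDGains) -> List[float]:
    """S2 (sketch): PD law tau = kp*(q_target - q) - kd*q_dot, clamped to +/- tau_max.

    Assumption: joint velocity is used for the D term; the source's
    joint-torque feedback is not modeled here.
    """
    torques = []
    for qt, qa, qv in zip(q_target, q, q_dot):
        tau = gains.kp * (qt - qa) - gains.kd * qv
        # Saturate to the (hypothetical) actuator torque limit.
        torques.append(max(-gains.tau_max, min(gains.tau_max, tau)))
    return torques


def control_step(state: Sequence[float],
                 policy: Callable[[Sequence[float]], Sequence[float]],
                 q: Sequence[float], q_dot: Sequence[float],
                 gains: PDGains) -> List[float]:
    """One control cycle: S1 maps state to target angles, S2 maps them to torques."""
    q_target = policy(state)                    # S1: stand-in for the DRL model
    return pd_torque(q_target, q, q_dot, gains)  # S2: PD tracking of the targets


if __name__ == "__main__":
    gains = PDGains(kp=10.0, kd=1.0, tau_max=100.0)
    # Placeholder policy that always requests these two joint angles (radians).
    fixed_policy = lambda s: [1.0, 0.0]
    print(control_step([0.0, 0.0], fixed_policy, q=[0.5, 0.0], q_dot=[0.0, 2.0], gains=gains))
```

Separating the two stages this way lets the policy output only joint targets while the PD loop handles torque generation; in many setups the PD loop is run at a higher rate than the policy, though the excerpt does not state the rates used here.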

[0034] In this embodiment, a specific humanoid robot model is taken as an example for illustration, as shown in Figure 2, and...
