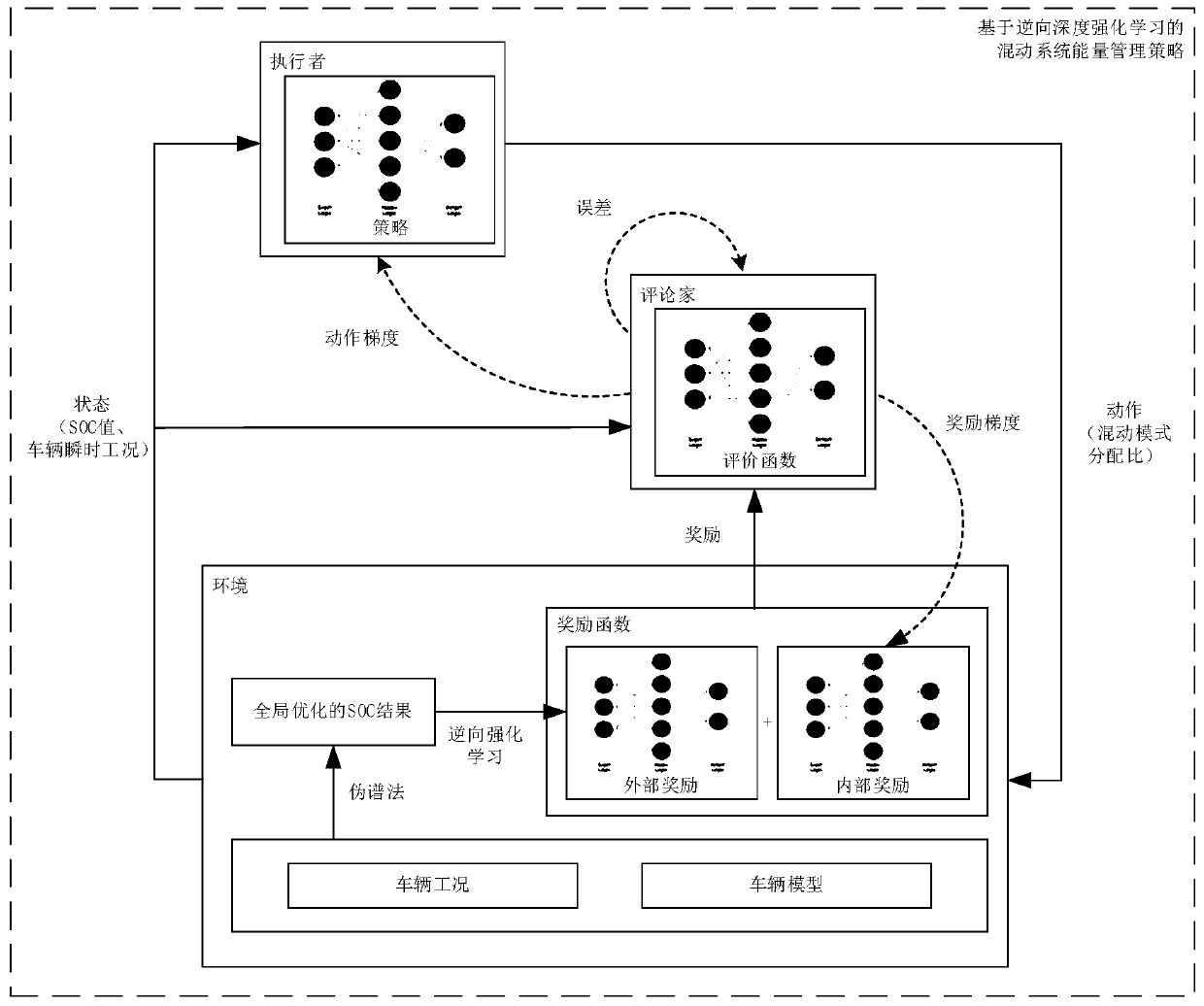

Hybrid power system energy management strategy based on reverse deep reinforcement learning

A reinforcement learning and energy management technology, applied in general control systems, control/regulation systems, instruments, etc. It addresses problems such as the inability to run the strategy online, and applies deep reinforcement learning to achieve good real-time performance and fast computation.


Embodiment

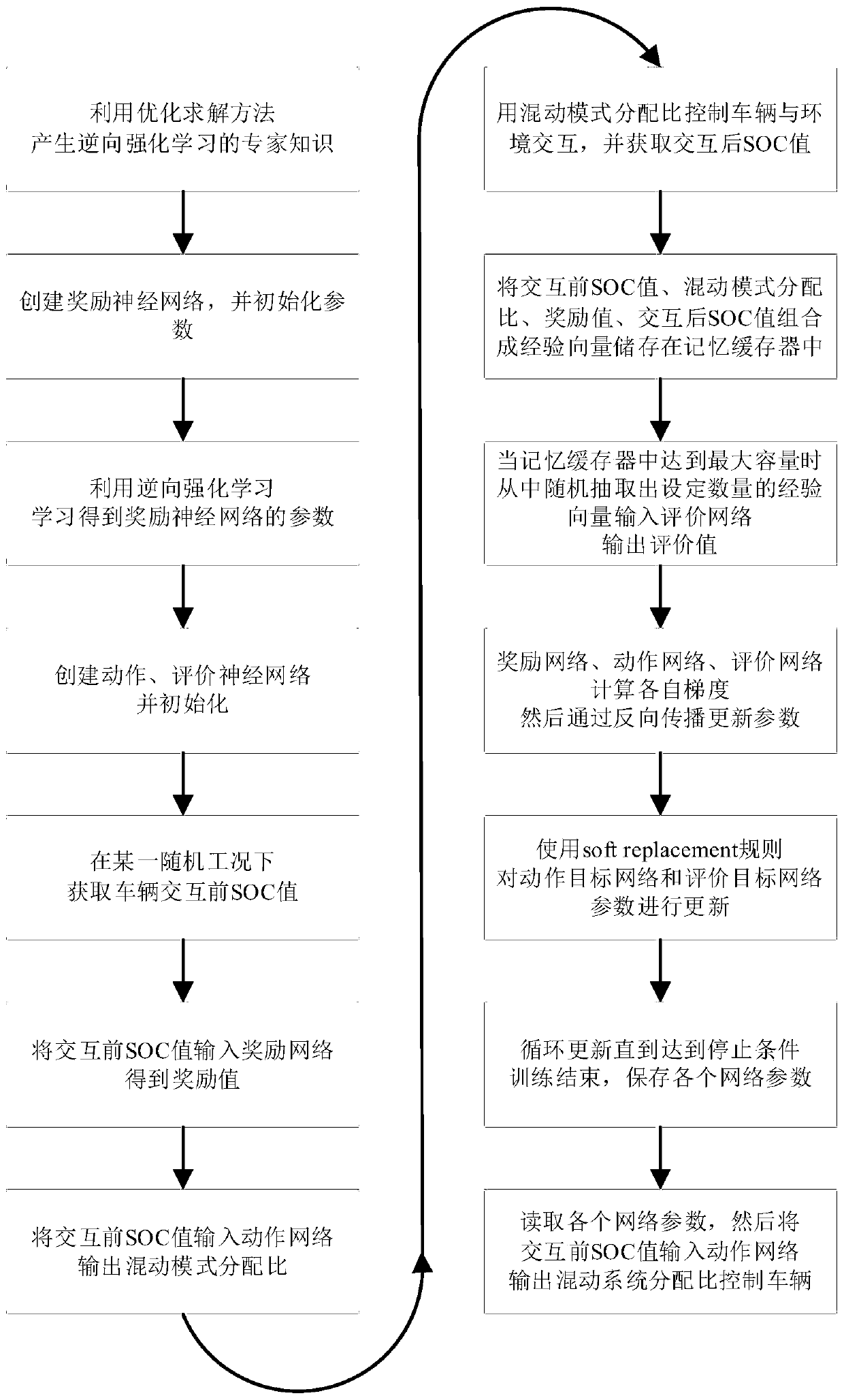

[0039] As shown in Figure 1 and Figure 2, a hybrid power system energy management strategy based on reverse (inverse) deep reinforcement learning includes the following steps:

[0040] S1: Use an optimization method to compute the globally optimal hybrid-mode allocation ratio and the globally optimized SOC trajectory over one complete driving cycle (working condition). Form expert state-action pairs from these results to serve as the expert knowledge for inverse reinforcement learning. The optimization method may be the pseudospectral method, dynamic programming, or a genetic algorithm.
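Dynamic programming is one of the optimization methods listed for S1. The sketch below shows how such a method could yield expert state-action pairs. It runs a backward DP over a discretized SOC grid and then follows the optimal policy forward, recording each (state, action) pair. Everything in the toy model is a hypothetical assumption and is not taken from the patent: integer demand and SOC units, the convex fuel map `engine ** 2`, the action set, and the charge-sustaining end constraint. Here the action is the battery share of demand, and the "allocation ratio" is battery power divided by demand.

```python
import math


def dp_expert_pairs(demand, soc0, soc_levels=11, actions=range(-2, 3)):
    """Backward DP over a discretized SOC grid (hypothetical toy model),
    then a forward rollout recording (state, action, ratio) along the optimum."""
    T = len(demand)
    INF = math.inf
    # value[t][s]: minimal cost-to-go from SOC level s at step t
    value = [[INF] * soc_levels for _ in range(T + 1)]
    policy = [[None] * soc_levels for _ in range(T)]
    value[T][soc0] = 0.0  # assumed charge-sustaining: end at the initial SOC
    for t in range(T - 1, -1, -1):
        for s in range(soc_levels):
            for a in actions:
                s_next = s - a          # battery delivers a units, SOC drops by a
                engine = demand[t] - a  # engine covers the remaining demand
                if not (0 <= s_next < soc_levels) or engine < 0:
                    continue
                # engine ** 2 is a hypothetical convex fuel-consumption map
                cost = engine ** 2 + value[t + 1][s_next]
                if cost < value[t][s]:
                    value[t][s] = cost
                    policy[t][s] = a
    if math.isinf(value[0][soc0]):
        raise ValueError("no feasible trajectory for this cycle")
    # Forward pass: the expert trajectory as (state, action, allocation ratio)
    pairs, s = [], soc0
    for t in range(T):
        a = policy[t][s]
        ratio = a / demand[t] if demand[t] else 0.0  # negative = battery charging
        pairs.append(((demand[t], s), a, ratio))
        s -= a
    return value[0][soc0], pairs
```

For the demand profile `[6, 2, 6, 2]` starting at SOC level 5, the convex cost drives the DP to hold engine output at 4 units every step. The battery discharges 2 units on high-demand steps and recharges 2 units on low-demand steps, so the SOC alternates between levels 5 and 3. A real implementation would replace the toy fuel map with the vehicle's engine and battery models.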

[0041] S2: Create the reward function neural network and initialize its parameters.
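The patent does not say how the parameters are initialized in S2. One common choice is shown below as an assumption only: Glorot (Xavier) uniform weights with zero biases. The helper names `glorot_uniform` and `init_layers` are hypothetical. Weights are stored as `[fan_out][fan_in]`.

```python
import math
import random


def glorot_uniform(fan_in, fan_out, rng):
    """Weights drawn from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


def init_layers(sizes, seed=0):
    """Create (weights, biases) for consecutive fully connected layers.

    A fixed seed makes the initialization reproducible; biases start at zero.
    """
    rng = random.Random(seed)
    return [(glorot_uniform(n_in, n_out, rng), [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]
```

For example, `init_layers([8, 16, 1])` yields a 16x8 weight matrix and a 1x16 weight matrix, mapping an 8-dimensional feature vector to a scalar reward.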

[0042] The reward function neural network is composed of a convolutional neural network (CNN), a long short-term memory (LSTM) neural network, and a fully connected neural network, stacked in that order (CNN, then LSTM, then fully connected); the fully connected neural network consists ...
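The stacking order in [0042] can be illustrated with a forward pass in plain Python. A 1-D convolution (with ReLU) extracts local features from a window of states. An LSTM summarizes the convolved sequence. A fully connected layer maps the final hidden state to a scalar reward. The following are all hypothetical choices, since the patent text is truncated before these details: the input features (e.g. `[demand, SOC]` per time step), the layer sizes, the ReLU activation, the small uniform initialization, and the class name `RewardNet`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class RewardNet:
    """Hypothetical CNN -> LSTM -> fully connected reward network."""

    def __init__(self, n_features, n_filters=4, kernel=3, hidden=8, seed=0):
        rng = random.Random(seed)
        self.kernel, self.hidden = kernel, hidden
        # Conv1D: each filter sees `kernel` consecutive steps of all features
        self.conv_w = rand_matrix(n_filters, kernel * n_features, rng)
        self.conv_b = [0.0] * n_filters
        # LSTM: input, forget, candidate and output gates stacked row-wise
        self.lstm_w = rand_matrix(4 * hidden, n_filters + hidden, rng)
        self.lstm_b = [0.0] * (4 * hidden)
        # Fully connected head: final hidden state -> scalar reward
        self.fc_w = rand_matrix(1, hidden, rng)[0]
        self.fc_b = 0.0

    def conv(self, seq):
        out = []
        for t in range(len(seq) - self.kernel + 1):
            window = [x for step in seq[t:t + self.kernel] for x in step]
            z = [a + b for a, b in zip(matvec(self.conv_w, window), self.conv_b)]
            out.append([max(0.0, v) for v in z])  # ReLU
        return out

    def lstm(self, seq):
        H = self.hidden
        h, c = [0.0] * H, [0.0] * H
        for x in seq:
            z = [a + b for a, b in zip(matvec(self.lstm_w, x + h), self.lstm_b)]
            i = [sigmoid(v) for v in z[0:H]]
            f = [sigmoid(v) for v in z[H:2 * H]]
            g = [math.tanh(v) for v in z[2 * H:3 * H]]
            o = [sigmoid(v) for v in z[3 * H:4 * H]]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h  # final hidden state summarizes the sequence

    def __call__(self, states):
        if len(states) < self.kernel:
            raise ValueError("state window is shorter than the conv kernel")
        h = self.lstm(self.conv(states))
        return sum(w * x for w, x in zip(self.fc_w, h)) + self.fc_b
```

For example, `RewardNet(n_features=2)([[0.4, 0.6], [0.5, 0.58], [0.3, 0.57], [0.6, 0.55]])` returns one float reward for a four-step window. In practice a deep learning framework would supply these layers and the gradients that inverse reinforcement learning needs to update the reward parameters.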


© 2025 PatSnap. All rights reserved.