Method for optimizing throughput rate and response time of a decision engine

A technology concerning response time and throughput rate, applied in the field of decision engines, that can solve problems such as reduced system response efficiency, frequent fluctuation of system TPS and response time, and degraded service TPS and response time.

Embodiment Construction

[0022] As shown in Figure 1, the present invention includes the following processing flow:

[0023] Step 1: Use a blocking queue to create a task buffer pool, initialize the tasks and put them into the queue, then take out the first task and assign it as the current task. Initialization is then complete.

[0024] Step 2: When a request arrives, the current task processes it; when the request counter modulo the threshold equals 0, the current task is deregistered.

[0025] Step 3: Generate a new task and put it into the queue, then take the task at the head of the task buffer pool, assign it as the current task, and continue processing rule execution requests.

[0026] Step 4: Merge the deregistered tasks and the generated tasks into the queue.
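The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the source names no language or API, so Python's `queue.Queue` (a thread-safe FIFO whose `get()` blocks until an item is available) stands in for the blocking queue of Step 1. `RuleTask`, `RotatingEngine`, `pool_size`, `threshold` and the other names are hypothetical. Step 4 is ambiguous in the translated text; this sketch reads it as merging the deregistration of the retired task and the generation of its replacement into one background job, so the request path only swaps in a pre-built task taken from the head of the queue.

```python
import itertools
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


class RuleTask:
    """Hypothetical stand-in for an expensive-to-build decision-engine task
    (for example a rule session); not an API from the patent."""

    _ids = itertools.count(1)

    def __init__(self):
        self.task_id = next(RuleTask._ids)
        self.closed = False

    def execute(self, request):
        # Placeholder for rule evaluation: report which task served the request.
        return self.task_id, request

    def close(self):
        # Placeholder for releasing engine resources held by this task.
        self.closed = True


class RotatingEngine:
    """Sketch of Steps 1-4 under the assumptions stated in the lead-in."""

    def __init__(self, pool_size, threshold):
        if pool_size < 1 or threshold < 1:
            raise ValueError("pool_size and threshold must be positive")
        self.threshold = threshold
        # Step 1: a blocking queue acts as the task buffer pool.
        self.pool = queue.Queue()
        for _ in range(pool_size):
            self.pool.put(RuleTask())
        self.current = self.pool.get()  # the first task becomes the current task
        self.counter = 0
        self.rotations = 0
        self._lock = threading.Lock()
        # Step 4 (one reading, an assumption): deregistration and generation are
        # merged into a single job on a one-worker executor, off the request path.
        self._worker = ThreadPoolExecutor(max_workers=1)

    def _deregister_and_generate(self, retired):
        retired.close()               # deregister the retired task
        self.pool.put(RuleTask())     # generate a replacement at the queue tail

    def handle(self, request):
        with self._lock:
            # Step 2: the current task processes the request.
            result = self.current.execute(request)
            self.counter += 1
            if self.counter % self.threshold == 0:
                retired = self.current
                self._worker.submit(self._deregister_and_generate, retired)
                # Step 3: the head of the pool becomes the current task; get()
                # blocks only while the pool is empty, until the worker refills it.
                self.current = self.pool.get()
                self.rotations += 1
            return result

    def shutdown(self):
        # Wait for pending deregistration/generation jobs to finish.
        self._worker.shutdown(wait=True)


if __name__ == "__main__":
    engine = RotatingEngine(pool_size=2, threshold=3)
    served_by = [engine.handle(i)[0] for i in range(7)]
    engine.shutdown()
    print(served_by)
```

With `pool_size=2` and `threshold=3`, requests 0 to 2 are served by one task, requests 3 to 5 by a second, and request 6 by a third. The single lock serializes requests purely to keep the sketch short; an engine serving concurrent requests would need its own synchronization scheme, and `pool_size` should be large enough that rotations rarely have to wait for a replacement.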

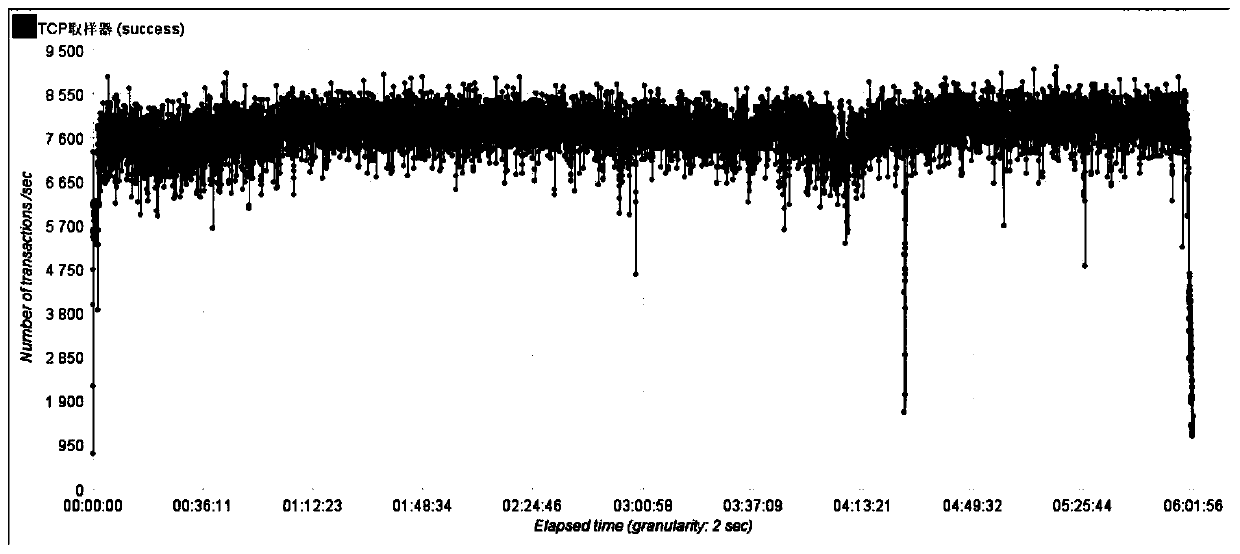

[0027] In use, as shown in Figure 1, after introducing the above method, the JMeter test curve of the rule engine stress test shows the TPS stable at around 8000, the system i...
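As a rough, hedged illustration of what the reported throughput implies for Step 2: if the engine sustains about 8000 TPS and the request threshold is $T$ (its value is not given in this excerpt; $T = 80\,000$ below is purely hypothetical), the current task is replaced roughly every

$$
\Delta t_{\text{rotate}} \approx \frac{T}{\text{TPS}} = \frac{80\,000\ \text{requests}}{8000\ \text{requests/s}} = 10\ \text{s},
$$

so the threshold directly sets how often a pre-built task must be ready at the head of the queue.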