Large-scale graph deep learning computing framework based on a layered optimization paradigm

A deep learning computing framework, applied in the field of deep learning, that addresses problems such as poor support for GNNs, the inability to express and support graph structures, and the lack of an effective implementation of graph propagation operators.


Embodiment

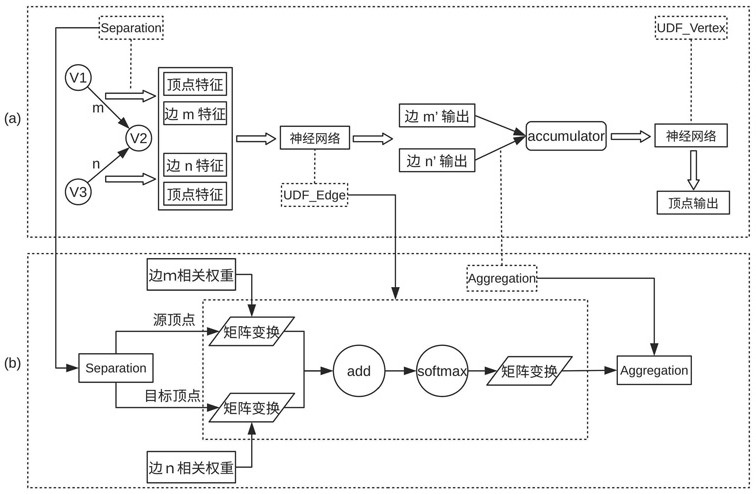

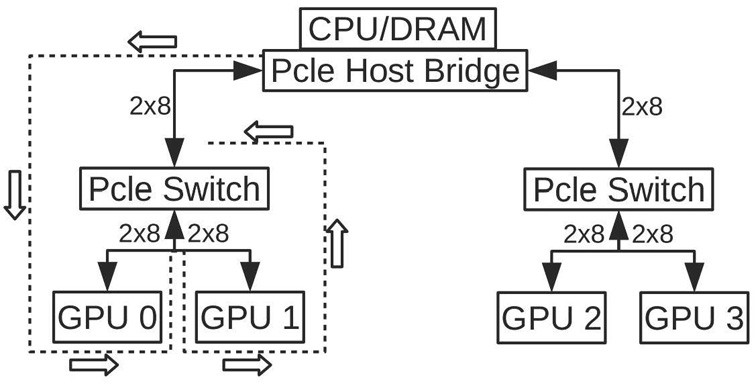

[0021] Referring to Figure 1 and Figure 2, the present invention discloses a large-scale graph deep learning computing framework based on a layered optimization paradigm, comprising: block-based data flow conversion, data flow graph optimization, kernel propagation on the GPU, and multi-GPU parallel processing. Large-scale graph deep learning is implemented as follows:

[0022] 1) Block-based data flow conversion: To scale beyond the physical limitations of the GPU, the framework divides the graph data, with its vertices and edges, into blocks, and converts the GNN algorithm expressed in the model into a data flow graph with block-granularity operators, which enables block-based parallel stream processing on a single GPU or on multiple GPUs. First, in the Separation stage, the tensor data of each vertex is passed to its adjacent edges to form the edge data, which includes both source and target vertex data. The subsequent UDF_Edge stage calls the parallel computing fu...
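The block partitioning and the Separation stage described above can be sketched in plain Python. This is only an illustrative sketch, not the patented implementation: the names `partition_edges` and `separation`, the contiguous vertex-id ranges, and the grouping of edges by (source block, target block) are all assumptions introduced here, since the excerpt does not specify how blocks are formed.

```python
from typing import Dict, List, Tuple

Edge = Tuple[int, int]        # (source vertex id, target vertex id)
BlockKey = Tuple[int, int]    # (source block index, target block index)


def partition_edges(
    edges: List[Edge], num_vertices: int, block_size: int
) -> Dict[BlockKey, List[Edge]]:
    """Group edges into blocks (hypothetical helper).

    Assumption: vertices are split into contiguous id ranges of
    `block_size`, and an edge belongs to the block identified by the
    ranges of its source and target vertices.
    """
    blocks: Dict[BlockKey, List[Edge]] = {}
    for src, dst in edges:
        if not (0 <= src < num_vertices and 0 <= dst < num_vertices):
            raise ValueError(f"edge ({src}, {dst}) references an unknown vertex")
        key = (src // block_size, dst // block_size)
        blocks.setdefault(key, []).append((src, dst))
    return blocks


def separation(
    block_edges: List[Edge], vertex_data: List[List[float]]
) -> List[Tuple[List[float], List[float]]]:
    """Separation stage for one block (hypothetical helper).

    Each vertex's tensor data is passed to its adjacent edges, so every
    edge carries a (source data, target data) pair.
    """
    return [(vertex_data[src], vertex_data[dst]) for src, dst in block_edges]


# A 4-vertex ring graph with a one-element feature vector per vertex.
vertex_data = [[1.0], [2.0], [3.0], [4.0]]
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]

blocks = partition_edges(edges, num_vertices=4, block_size=2)
edge_data = {key: separation(block, vertex_data) for key, block in blocks.items()}
```

Because each block's edge data depends only on that block's edges and the vertex data they touch, blocks can be processed one after another on a single device or handed to different devices, which is the property the block-granularity operators rely on.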

