Convolution operation memory access optimization method based on GPU

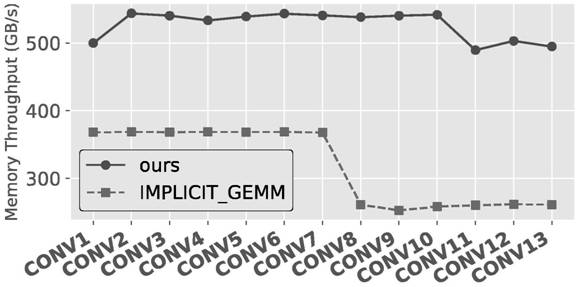

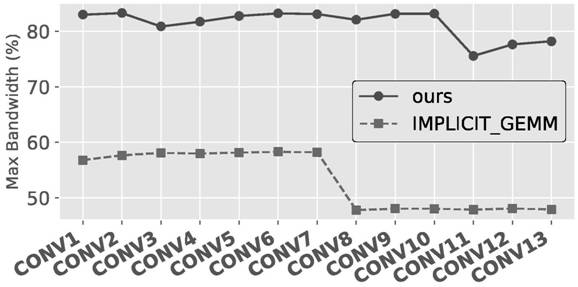

A GPU-based technique in the field of convolution memory access optimization. It addresses the large memory access overhead of convolution operations by reducing the number of memory accesses the convolution performs.

Detailed Description of Embodiments

[0072] Embodiments of the present invention are described below by way of example.

[0073] To optimize memory access, an embodiment of the present invention, as shown in Figure 6, includes the following steps:

[0074] S1: Load the convolution kernel data into shared memory.
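Step S1 can be illustrated with a CPU simulation in Python. This is a minimal sketch under assumptions the source does not state: the kernel is a flattened K×K array, and the N threads of a block copy it with a block-stride loop, so neighbouring threads read neighbouring addresses. The function `cooperative_load` is hypothetical; on a GPU the destination would be a `__shared__` array, and a `__syncthreads()` barrier would follow the copy.

```python
def cooperative_load(kernel_flat, num_threads):
    """Simulate num_threads threads copying a kernel into a shared buffer.

    Hypothetical helper (not the patent's code): thread t copies elements
    t, t + N, t + 2N, ... (a block-stride loop).
    """
    shared = [None] * len(kernel_flat)   # stands in for a __shared__ array
    loads_per_thread = [0] * num_threads
    for t in range(num_threads):         # each iteration plays one thread
        for i in range(t, len(kernel_flat), num_threads):
            shared[i] = kernel_flat[i]
            loads_per_thread[t] += 1
    # On a GPU, __syncthreads() would go here before any thread reads `shared`.
    return shared, loads_per_thread


kernel = [0.1 * k for k in range(9)]     # hypothetical 3x3 kernel, flattened
shared, loads = cooperative_load(kernel, num_threads=4)
print(loads)  # [3, 2, 2, 2]
```

Each kernel element is fetched from global memory exactly once per block. After that, every thread reads the weights from the on-chip copy.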

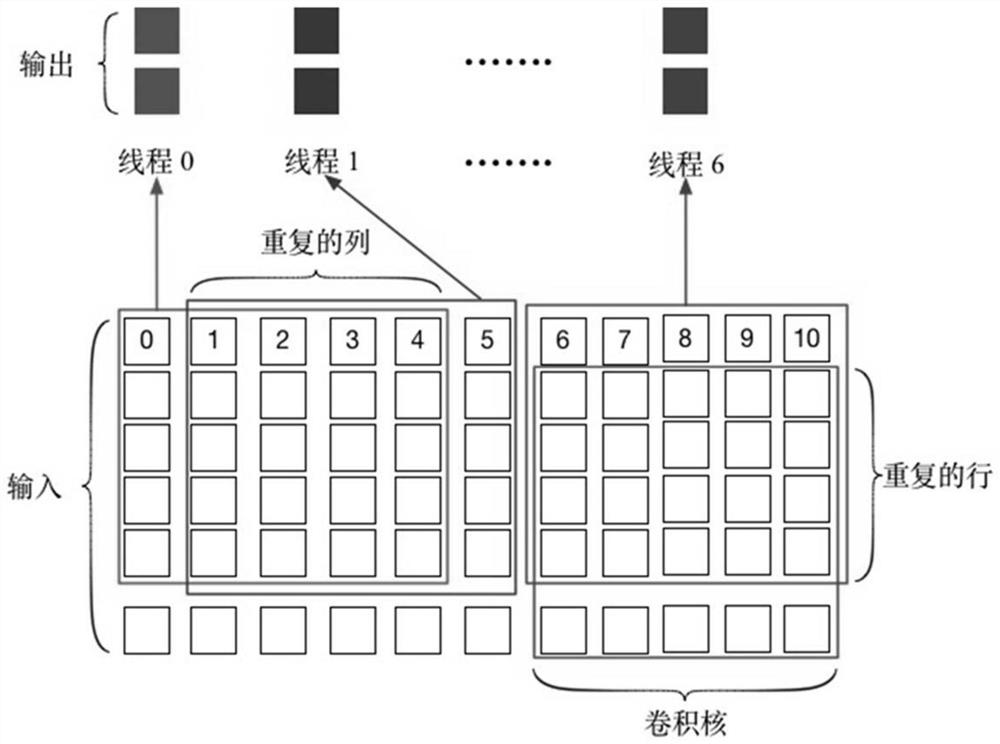

[0075] S2: Divide the convolution output into sub-blocks of 32 columns each, yielding several sub-blocks of exactly 32 columns plus one final sub-block containing the remaining columns (fewer than 32). Figure 5 shows this division.
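The column partition in S2 reduces to simple range arithmetic. The sketch below uses a hypothetical helper, `split_into_column_blocks`, which returns half-open column ranges. When the output width is a multiple of 32, it produces no narrower final sub-block. The value 32 equals the warp size of current NVIDIA GPUs, which suggests one warp per sub-block, but the source excerpt does not state this mapping.

```python
def split_into_column_blocks(width, block_cols=32):
    """Return (start, end) column ranges; all but possibly the last span block_cols columns."""
    blocks = []
    for start in range(0, width, block_cols):
        end = min(start + block_cols, width)  # the last block may be narrower
        blocks.append((start, end))
    return blocks


print(split_into_column_blocks(100))  # [(0, 32), (32, 64), (64, 96), (96, 100)]
```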

[0076] S3: Assume that N threads process the sub-blocks. Each thread computes the index of the first input element it requires. FIG. 2 shows, for each thread, this first element together with the left and right data the thread requires. The indices of the other required elements can then be derived from this first index by index arithmetic.
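The index arithmetic in S3 can be sketched as follows. The sketch assumes a single-channel input stored row-major with width `in_w`, a K×K kernel, no padding, and a configurable stride. None of these layout details are given in the source excerpt. Each output element stands in for one thread: it computes its first index once and then reaches the rest of its window by adding row and column offsets. All helper names are hypothetical, and the usage lines at the end run the sketch on a small 4×5 input.

```python
def first_input_index(out_row, out_col, in_width, stride=1):
    """Flattened (row-major) index of the top-left input element for one output."""
    return (out_row * stride) * in_width + out_col * stride


def window_indices(first, in_width, k):
    """Derive every other index of a k x k input window from the first index."""
    return [first + i * in_width + j for i in range(k) for j in range(k)]


def conv2d_by_first_index(inp, in_h, in_w, kernel, k, stride=1):
    """Valid (no padding) 2-D cross-correlation on a flattened row-major input.

    Each output element plays one hypothetical thread: it computes its first
    index once, then finds the rest of its window by offset arithmetic.
    `kernel` is flattened row-major, so kernel[i * k + j] pairs with window
    position (i, j).
    """
    out_h = (in_h - k) // stride + 1
    out_w = (in_w - k) // stride + 1
    out = []
    for r in range(out_h):
        for c in range(out_w):
            first = first_input_index(r, c, in_w, stride)
            idx = window_indices(first, in_w, k)
            out.append(sum(inp[p] * kernel[q] for q, p in enumerate(idx)))
    return out, out_h, out_w


# 4x5 input holding 0..19, 3x3 kernel of ones -> 2x3 output (flattened)
out, out_h, out_w = conv2d_by_first_index(list(range(20)), 4, 5, [1] * 9, 3)
print(out)  # [54, 63, 72, 99, 108, 117]
```

Only one multiply-add per row and column is needed to locate the first element. Every other access is a fixed offset from it, which is what lets a thread avoid recomputing full 2-D coordinates for each element.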

[0077] S4: Each thread acquires the remaining required input data from the ...
