Pedestrian re-identification method based on multi-branch fusion model

A pedestrian re-identification technology using a fusion model, applied in character and pattern recognition, neural learning methods, biometric recognition, and related fields. It can address problems such as changes in camera angle, changes in pedestrian posture, and the low resolution of captured images.

AI Technical Summary

Problems solved by technology

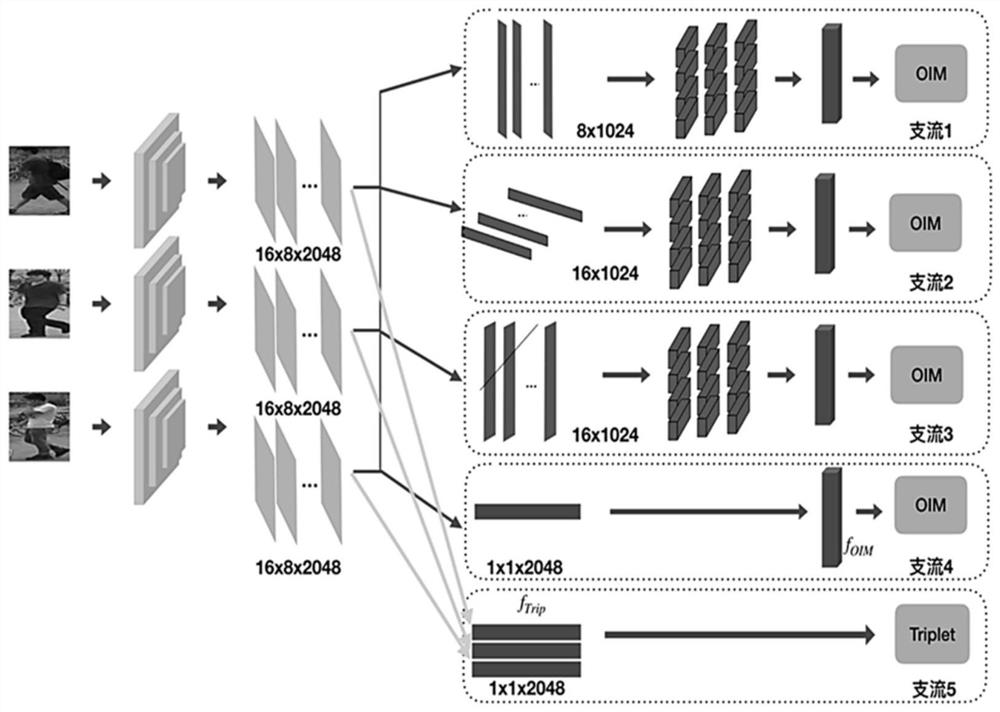

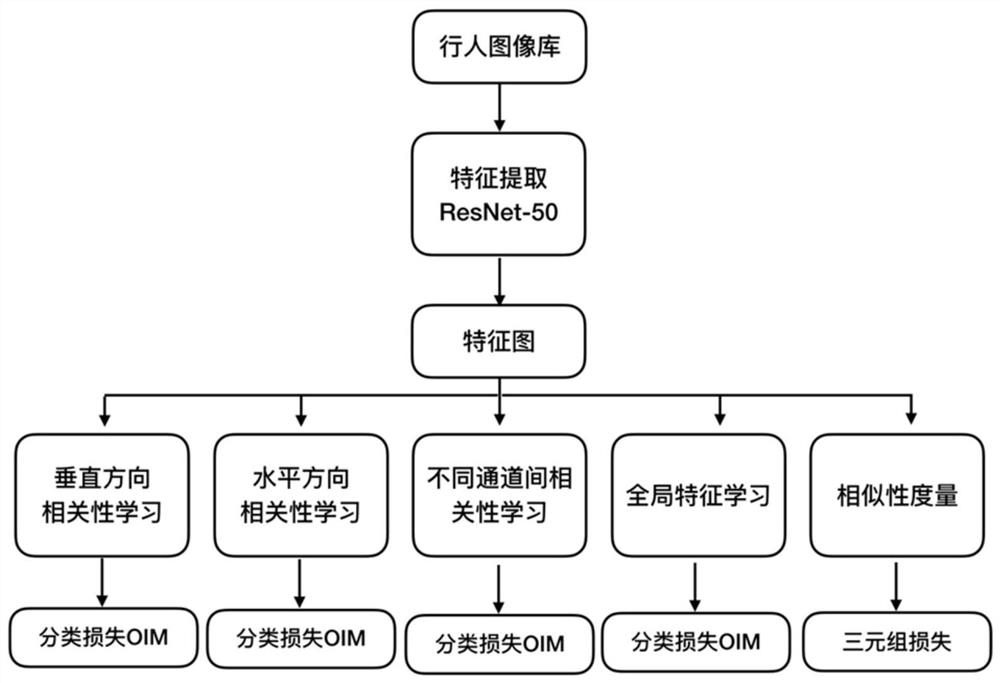

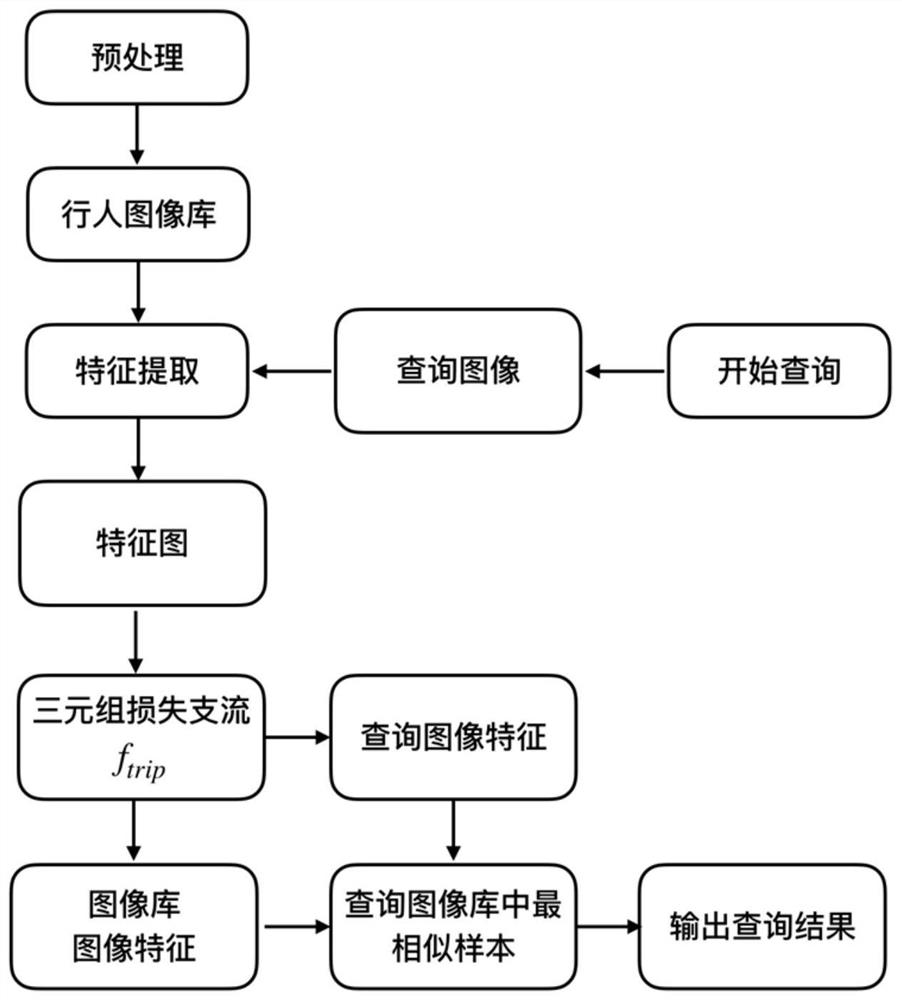

Embodiment

[0031] First, define the variables used below:

[0032] $x$ denotes the features of the labeled pedestrian images in the batch;

[0033] $y$ denotes the label of the input pedestrian image;

[0034] $Q$ denotes the size of the circular queue;

[0035] $L$ denotes the number of rows in the lookup table;

[0036] $p_j$ denotes the probability that the feature vector $x$ is classified as the $j$-th labeled pedestrian identity;

[0037] Denotes the transpose of the $k$-th entry of the circular queue;

[0038] Denotes the transpose of the $j$-th column of the lookup table;

[0039] $\tau$ controls the flatness of the probability distribution;

[0040] $R_j$ denotes the probability that the feature vector $x$ is regarded as the $j$-th unlabeled pedestrian;

[0041] $L_{oim}$ denotes the OIM (Online Instance Matching) loss;

[0042] $L_{T\text{-batch}}$ denotes the hard-sample loss;

[0043] $f(x)$ denotes the image features extracted by the deep network;

[0044] $D(x,y)$ denotes the distance between $x$ and $y$;

[004...
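The variables $x$, $y$, $Q$, $L$, $p_j$, $R_j$ and $\tau$ match the Online Instance Matching (OIM) setup: a labeled feature is scored against a lookup table of $L$ labeled-identity features and a circular queue of $Q$ unlabeled features, and $\tau$ softens the resulting softmax. The following is a minimal pure-Python sketch, not the patent's implementation. It assumes that features are L2-normalized, that $p_j$ and $R_j$ share one softmax denominator as in the original OIM formulation, that $L_{oim} = -\log p_y$, and that the lookup-table row for $y$ is refreshed with a momentum update. All helper names and the `momentum` parameter are hypothetical.

```python
import math
from collections import deque
from typing import Deque, List, Sequence, Tuple

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(ai * bi for ai, bi in zip(a, b))


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale v to unit length (assumption: OIM scores L2-normalized features)."""
    norm = math.sqrt(dot(v, v)) or 1.0  # guard against the zero vector
    return [vi / norm for vi in v]


def oim_probabilities(x: Sequence[float], lut: List[Vector],
                      queue: Deque[Vector], tau: float) -> Tuple[Vector, Vector]:
    """Return (p, r): p[j] over the L lookup-table rows, r[k] over the Q queue entries.

    Both share one softmax denominator, so sum(p) + sum(r) == 1.
    """
    lut_logits = [dot(v, x) / tau for v in lut]
    queue_logits = [dot(u, x) / tau for u in queue]
    m = max(lut_logits + queue_logits)  # subtract the max for numerical stability
    lut_exp = [math.exp(z - m) for z in lut_logits]
    queue_exp = [math.exp(z - m) for z in queue_logits]
    denom = sum(lut_exp) + sum(queue_exp)
    return [e / denom for e in lut_exp], [e / denom for e in queue_exp]


def oim_loss(x: Sequence[float], y: int, lut: List[Vector],
             queue: Deque[Vector], tau: float) -> float:
    """L_oim = -log p_y for a labeled feature x whose identity label is y."""
    p, _ = oim_probabilities(x, lut, queue, tau)
    return -math.log(p[y])


def update_lut(lut: List[Vector], x: Sequence[float], y: int, momentum: float) -> None:
    """Hypothetical momentum update of lookup-table row y, then re-normalize."""
    mixed = [momentum * v + (1.0 - momentum) * xi for v, xi in zip(lut[y], x)]
    lut[y] = l2_normalize(mixed)


def push_unlabeled(queue: Deque[Vector], x: Sequence[float]) -> None:
    """Enqueue an unlabeled feature; a deque built with maxlen=Q evicts the oldest one."""
    queue.append(l2_normalize(x))


if __name__ == "__main__":
    lut = [l2_normalize([1.0, 0.0]), l2_normalize([0.0, 1.0])]  # L = 2 labeled identities
    queue: Deque[Vector] = deque(maxlen=3)                       # Q = 3
    push_unlabeled(queue, [-1.0, 0.0])
    x = l2_normalize([0.9, 0.1])
    p, r = oim_probabilities(x, lut, queue, tau=0.1)
    print(p, r, oim_loss(x, 0, lut, queue, tau=0.1))
```

Using `deque(maxlen=Q)` gives the circular-queue behavior directly: once $Q$ unlabeled features are stored, each new one silently drops the oldest.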
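The excerpt names the hard-sample loss $L_{T\text{-batch}}$ together with the features $f(x)$ and the distance $D(x,y)$, but it does not give its formula. The sketch below assumes a batch-hard triplet loss. For each anchor in the mini-batch, it takes the farthest sample with the same identity and the nearest sample with a different identity, then applies a hinge with a margin. It also assumes $D$ is Euclidean distance. The function names and the default `margin` of 0.3 are illustrative assumptions, not values from the patent.

```python
import math
from typing import List, Sequence


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """D(x, y), assumed here to be the Euclidean distance between two feature vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def batch_hard_loss(features: List[Sequence[float]], labels: List[int],
                    margin: float = 0.3) -> float:
    """Batch-hard triplet loss over the f(x) features of one mini-batch.

    For every anchor: take the hardest positive (farthest sample with the same label)
    and the hardest negative (nearest sample with a different label), then apply a
    hinge with the given margin. Anchors lacking a positive or a negative are skipped.
    """
    n = len(features)
    total, used = 0.0, 0
    for a in range(n):
        pos = [distance(features[a], features[i]) for i in range(n)
               if i != a and labels[i] == labels[a]]
        neg = [distance(features[a], features[i]) for i in range(n)
               if labels[i] != labels[a]]
        if not pos or not neg:
            continue  # no valid triplet for this anchor in the batch
        total += max(0.0, margin + max(pos) - min(neg))
        used += 1
    return total / used if used else 0.0
```

Mining the hardest pairs inside each batch, rather than sampling random triplets, concentrates the training signal on the most confusable samples.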
