56 results about "Feature valid" patented technology

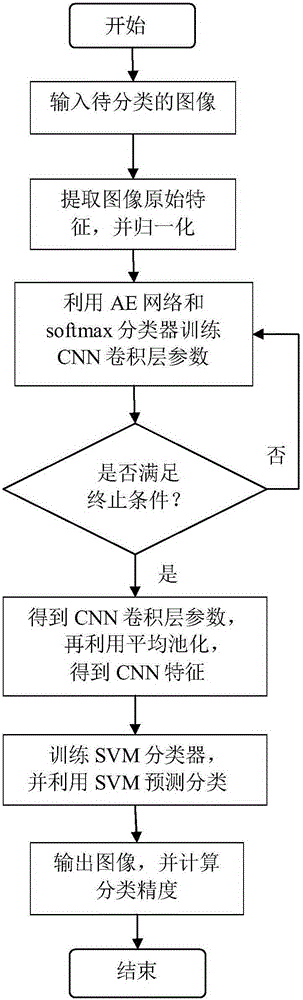

Polarization SAR image classification based on CNN and SVM

Active | CN105184309A | Improve classification accuracy | Feature valid | Character and pattern recognition | Classification result | Convolution

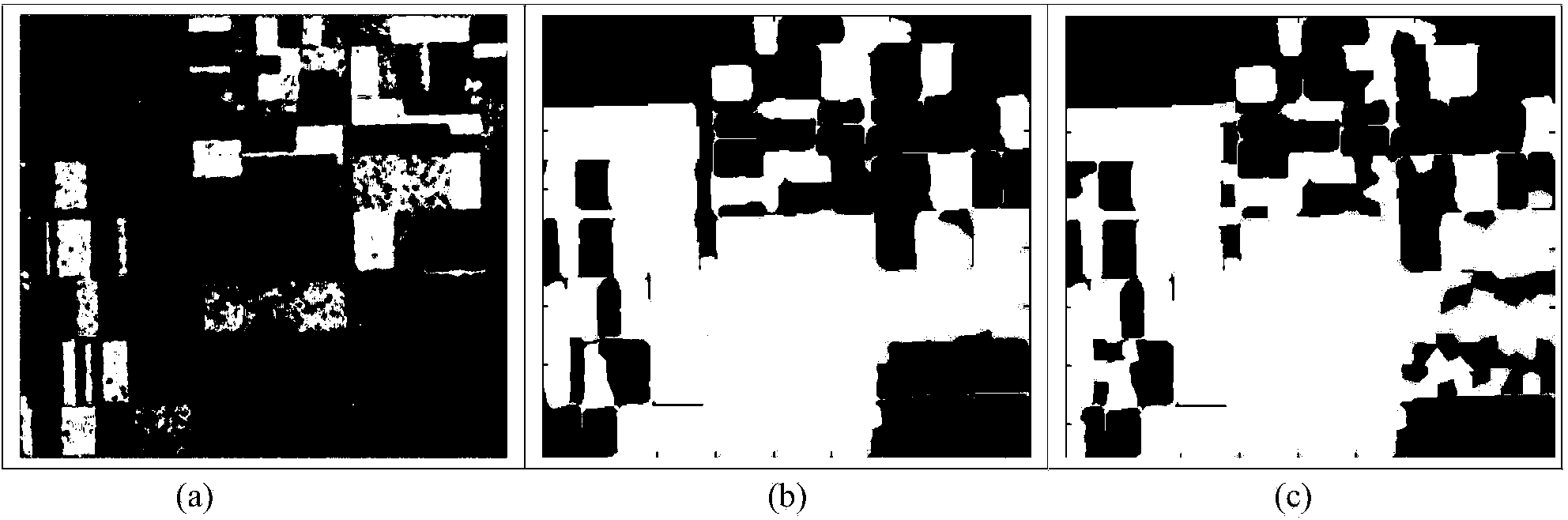

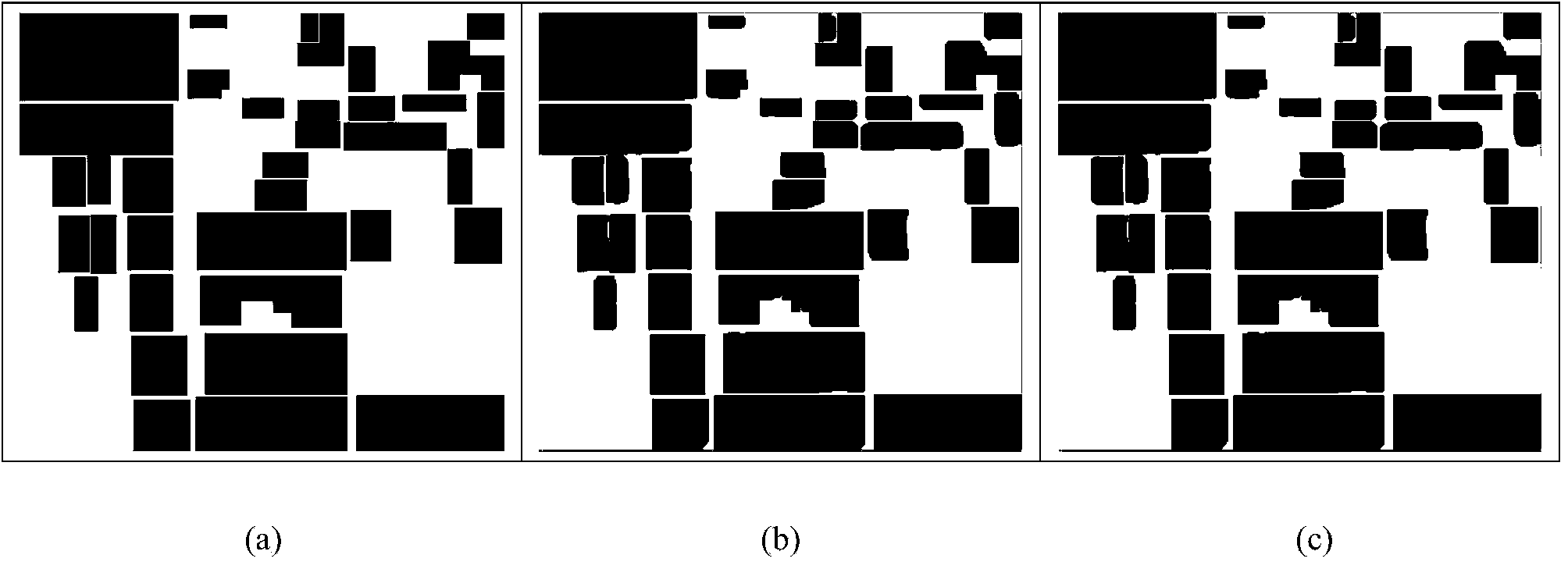

The invention discloses a polarization SAR image classification method based on CNN and SVM, which mainly aims to solve the low classification precision of existing polarization SAR image classification methods. The method comprises the following steps: inputting a filtered polarization SAR image to be classified; extracting and normalizing the original feature of each pixel based on the polarization coherence matrix while taking the pixel's neighborhood into consideration; training an AE network and obtaining the parameters of the CNN convolution layer through softmax fine-tuning; setting the CNN pooling layer to average pooling and determining its parameters; and sending the features learned by the CNN to an SVM for classification to obtain the classification result of the polarization SAR image. Compared with existing methods, the method fully considers the spatial correlation of the image, proposes a new CNN-based neighborhood processing method, extracts features more conducive to polarization SAR image classification, and clearly improves classification accuracy. It can be used for surface feature classification and object identification in polarization SAR images.

Owner:XIDIAN UNIV

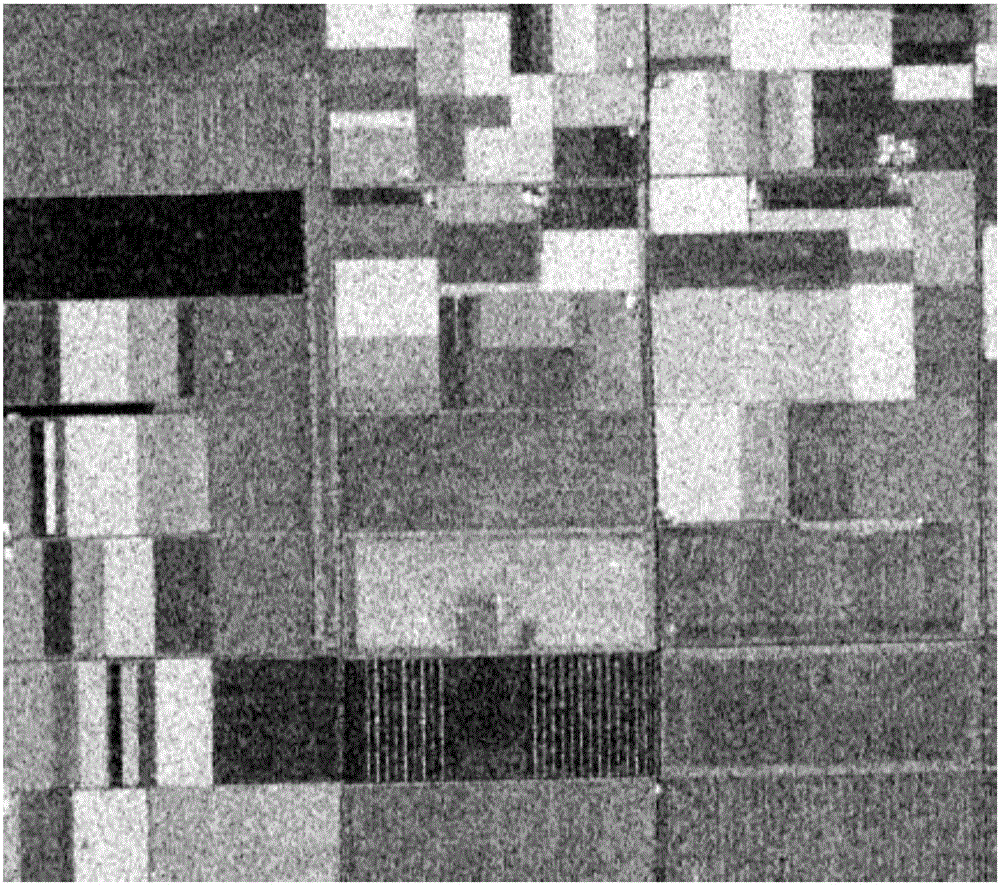

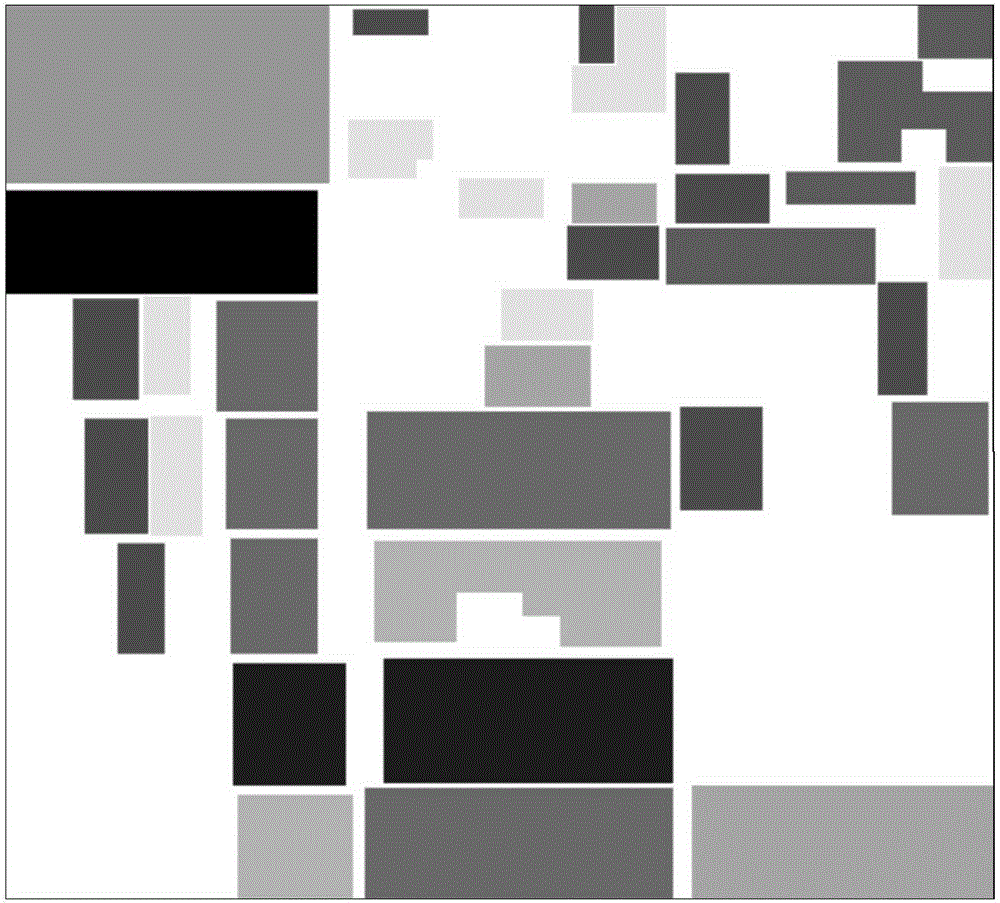

Polarization SAR image classification method based on deep neural network

Active | CN104077599A | Efficient extraction | Feature valid | Image analysis | Character and pattern recognition | Power diagram | Data set

The invention discloses a polarization SAR image classification method based on a deep neural network. The method mainly solves the problems that traditional polarization SAR image classification accuracy is low and boundaries are disorderly. The method includes the classification steps that a power diagram I is acquired from polarization SAR data through Pauli decomposition, the power diagram I is segmented in advance, and then a plurality of small blocks are acquired; a training data set U is selected from a polarization SAR image, input into a two-layer self-coding structure for training and then classified through a Softmax classifier; a test data set V is selected from the polarization SAR image and input into the trained two-layer self-coding structure, and then classification labels are acquired through the Softmax classifier; in the pre-segmented small blocks, the classification labels and channel information of the power diagram I are combined, and then small block labels are acquired. The polarization SAR image classification method has the advantages that the recognition rate is high, result region consistency is good, and the method can be used for polarization SAR homogeneous region terrain classification.

Owner:XIDIAN UNIV

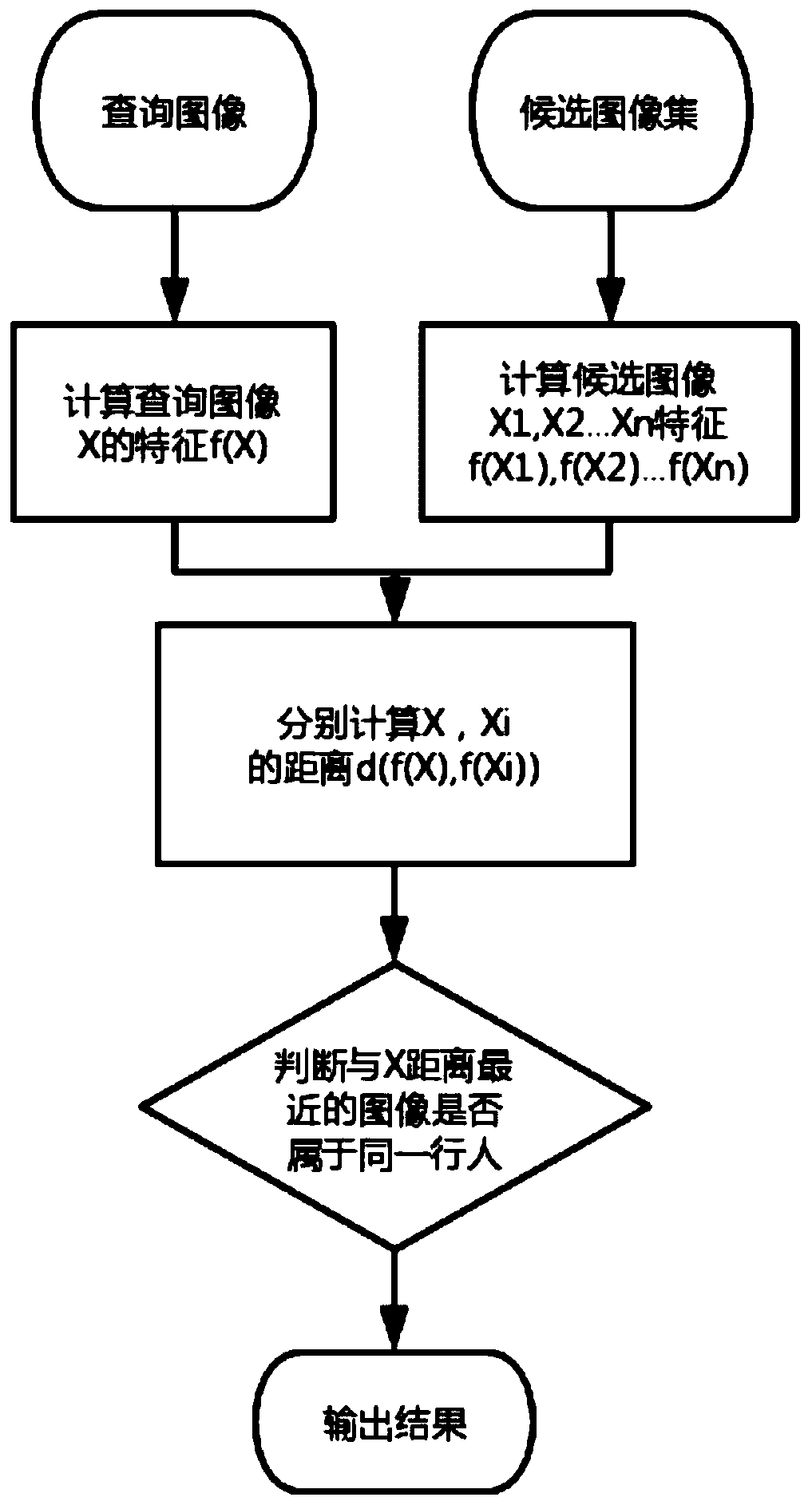

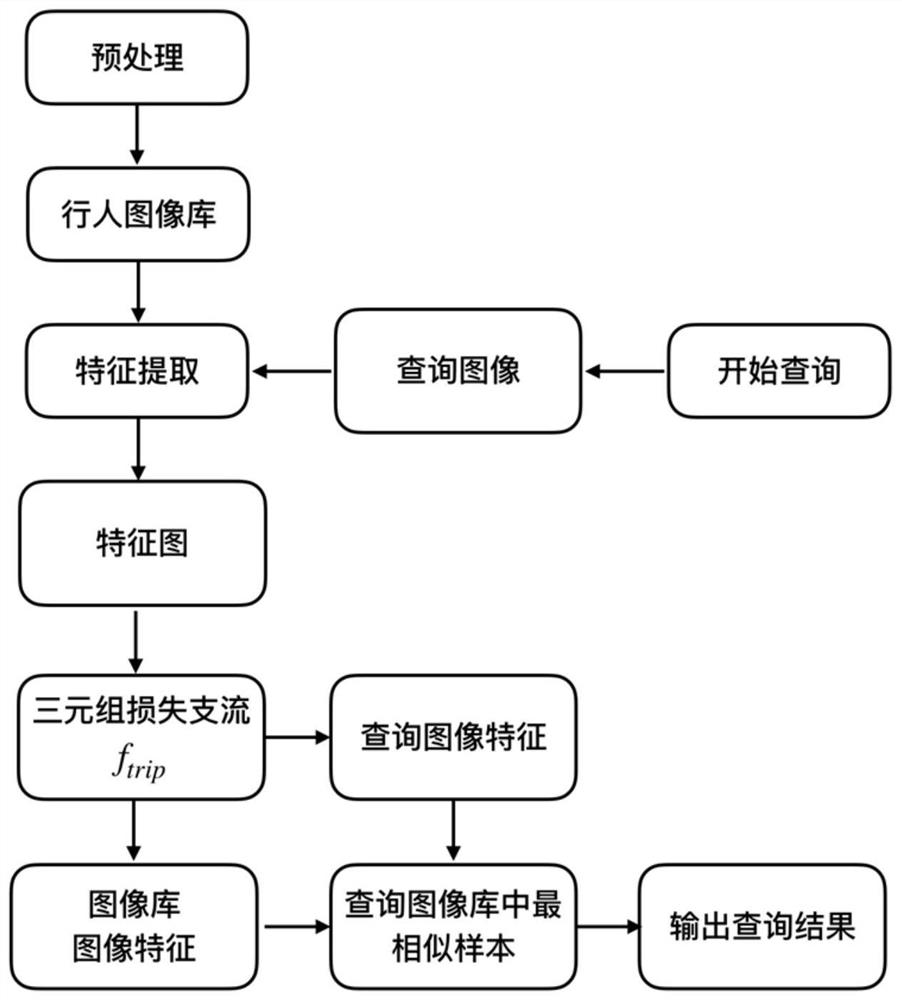

Pedestrian re-identification method based on depth multi-loss fusion model

Pending | CN110008842A | Reduce environmental differences | Improve re-identification performance | Image enhancement | Geometric image transformation | Data set | Feature extraction

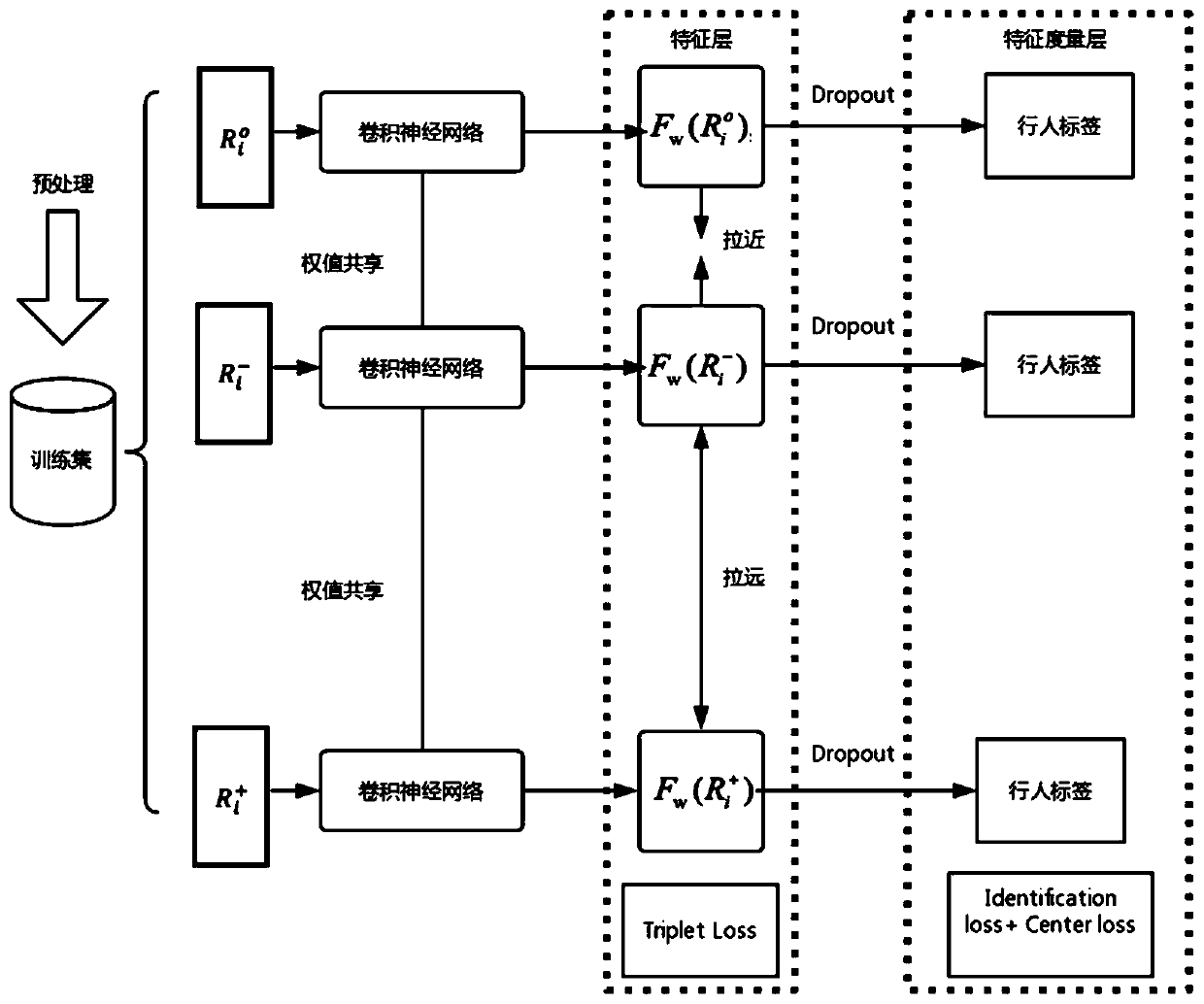

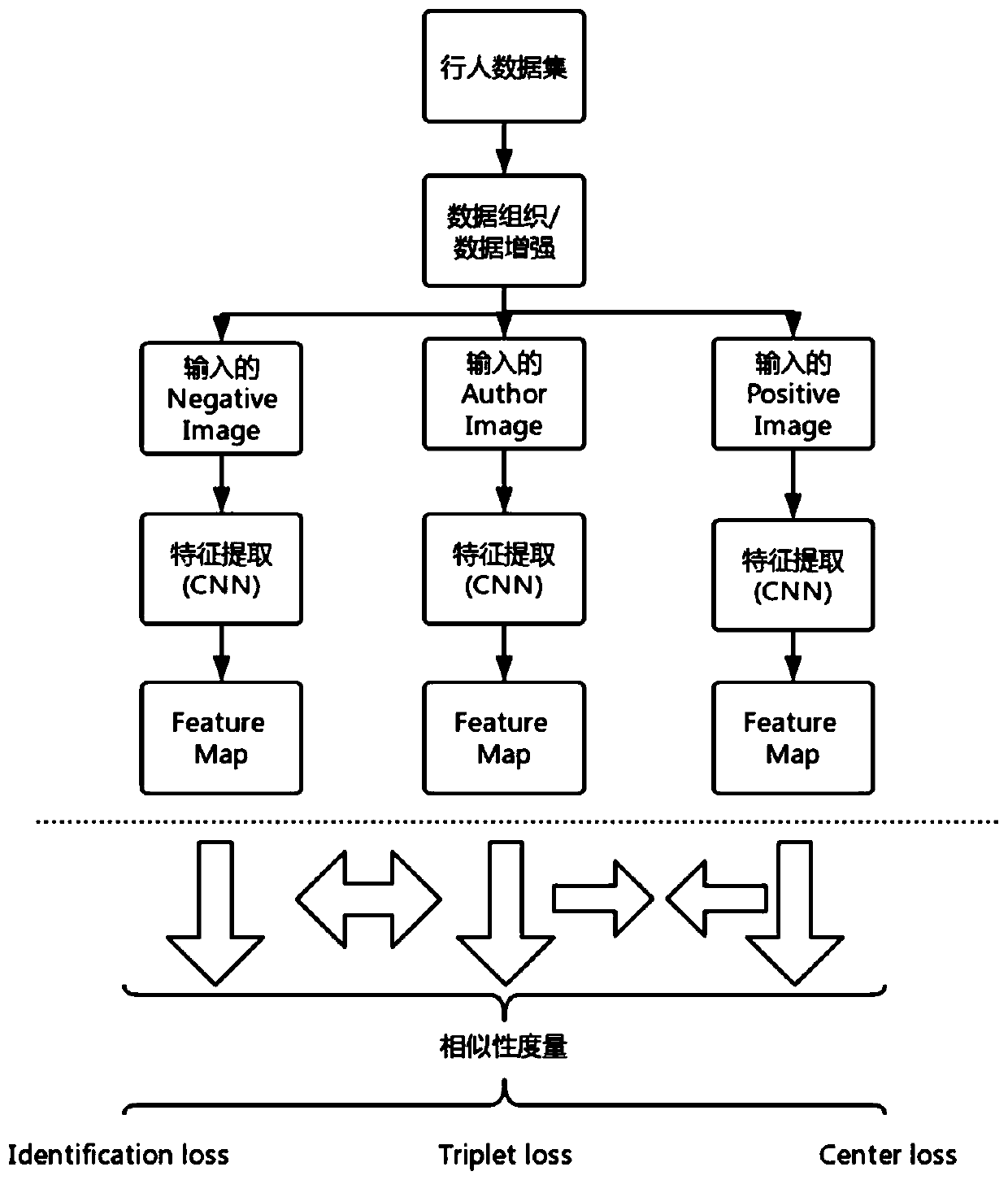

The invention relates to a pedestrian re-identification method based on a depth multi-loss fusion model. The method uses deep learning: preprocessing operations such as flipping, cropping, random erasing and style transfer are carried out on the training set pictures, feature extraction is carried out through a basic network model, and a plurality of loss functions are used for fused joint training of the network. Compared with existing pedestrian re-identification algorithms based on deep learning, the method uses several preprocessing modes, a fusion of three loss functions and an effective training strategy, so pedestrian re-identification performance on the data set is greatly improved. On the one hand, the multiple preprocessing modes expand the data set, improve the generalization capability of the model and avoid overfitting; on the other hand, the three loss functions each have advantages and disadvantages, and when they are effectively combined the model obtains a better recognition result.

Owner:TONGJI UNIV +2

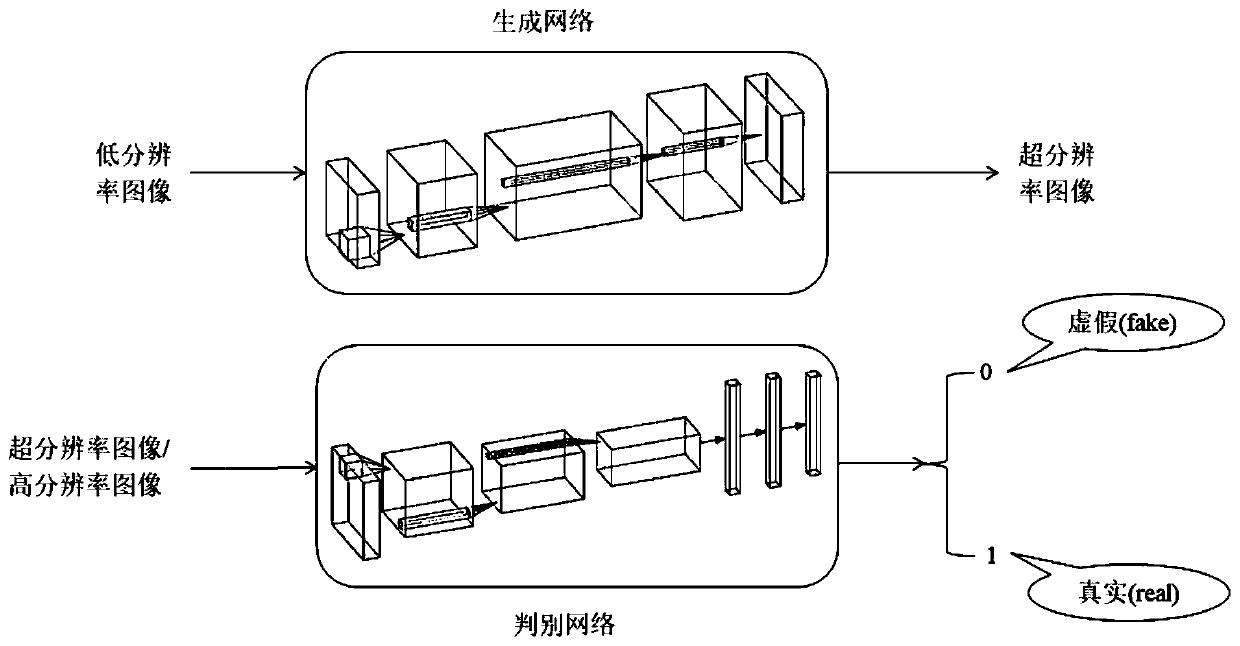

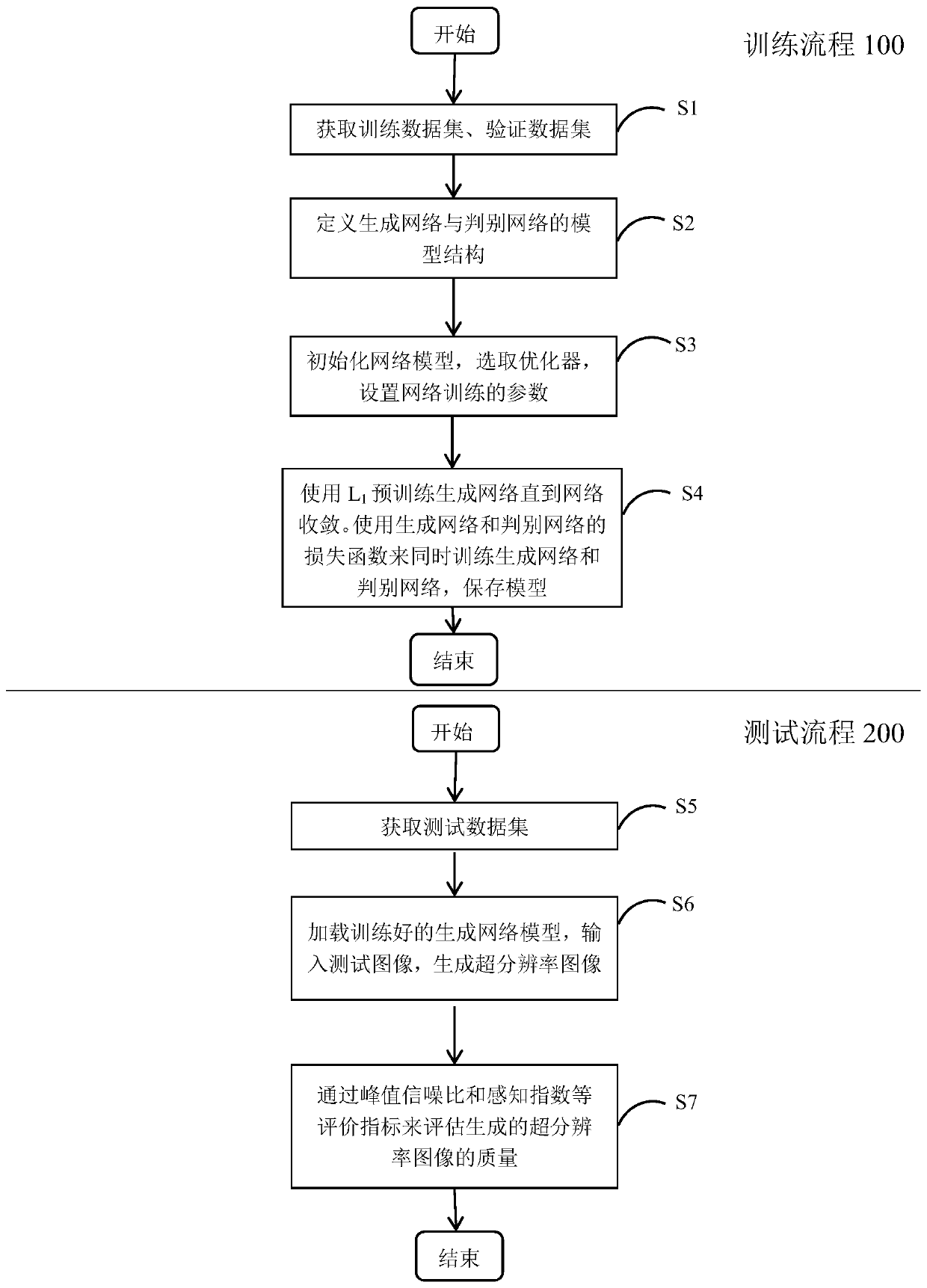

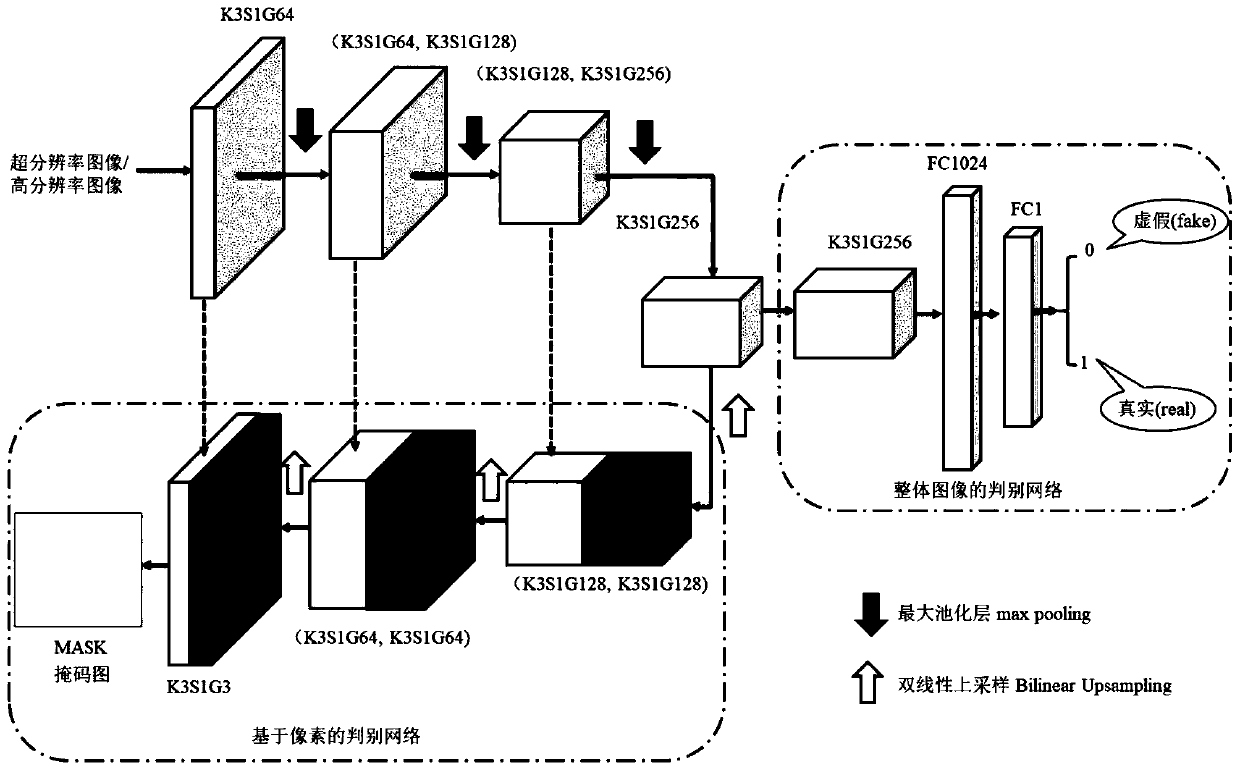

Image super-resolution method based on generative adversarial network

Pending | CN111583109A | Improve reconstruction effect | Quality improvement | Geometric image transformation | Character and pattern recognition | Pattern recognition | Data set

The invention discloses an image super-resolution method based on a generative adversarial network. The method comprises the following steps: obtaining a training data set and a verification data set; constructing an image super-resolution model, wherein the image super-resolution model comprises a generative network model and a discriminant network model; initializing the weights of the established generative network model and discriminant network model, selecting an optimizer, and setting network training parameters; simultaneously training the generative network model and the discriminant network model by using a loss function until the generative network and the discriminant network reach Nash equilibrium; obtaining a test data set and inputting it into the trained generative network model to generate a super-resolution image; and calculating the peak signal-to-noise ratio between the generated super-resolution image and a real high-resolution image, calculating an evaluation index of the image reconstruction quality of the generated image, and evaluating the reconstruction quality of the image. By optimizing the network structure, the method improves the performance of the network in reconstructing super-resolution images and addresses the image super-resolution problem.

Owner:SOUTH CHINA UNIV OF TECH
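
The evaluation step in the abstract above relies on the peak signal-to-noise ratio (PSNR). As a rough illustration only, the standard definition PSNR = 10·log10(MAX² / MSE) can be sketched as follows. The function name `psnr`, the nested-list image representation, and the 8-bit default `max_value=255.0` are assumptions made for this sketch and are not taken from the patent.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[Sequence[float]],
         generated: Sequence[Sequence[float]],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two same-sized grayscale images.

    Images are given as nested sequences of pixel intensities (rows of pixels).
    max_value is the largest possible pixel value (assumed 255 for 8-bit data).
    """
    # Flatten both images so they can be compared pixel by pixel.
    ref_flat = [p for row in reference for p in row]
    gen_flat = [p for row in generated for p in row]
    if not ref_flat or len(ref_flat) != len(gen_flat):
        raise ValueError("images must be non-empty and contain the same number of pixels")

    # Mean squared error between the two images.
    mse = sum((r - g) ** 2 for r, g in zip(ref_flat, gen_flat)) / len(ref_flat)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher PSNR means the generated image is closer to the real high-resolution image; identical images give an infinite value.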

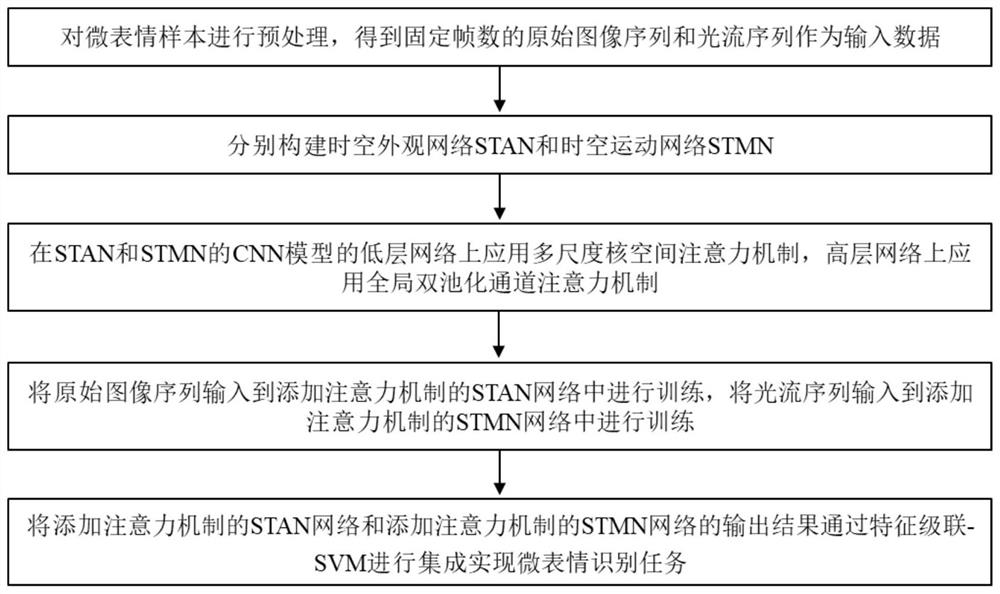

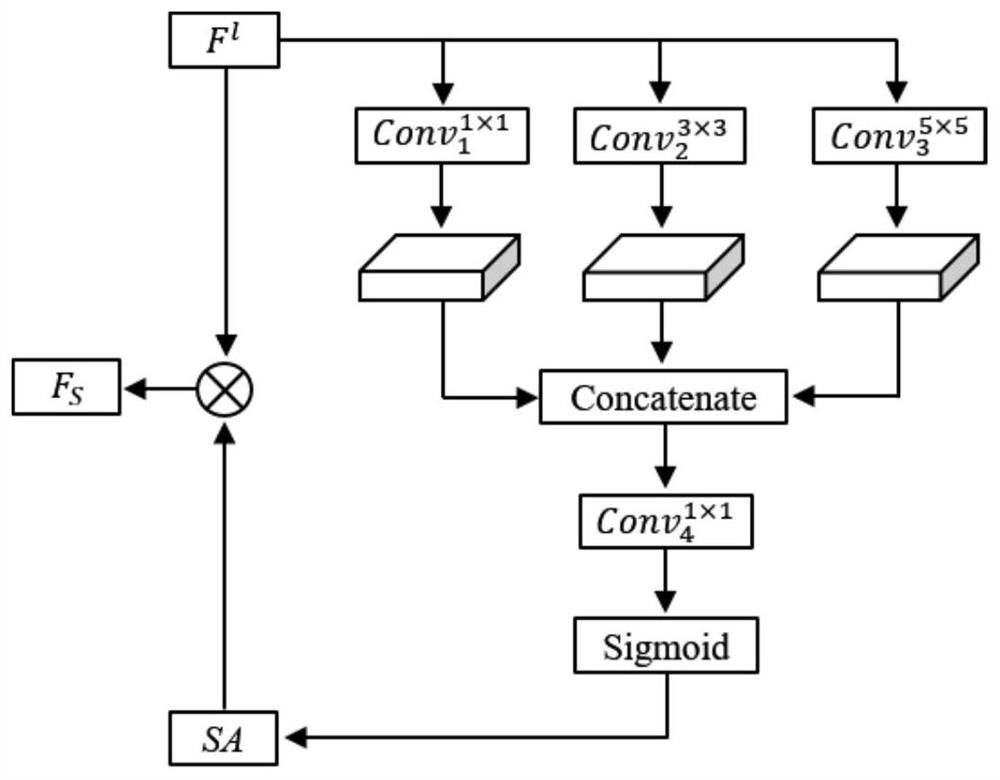

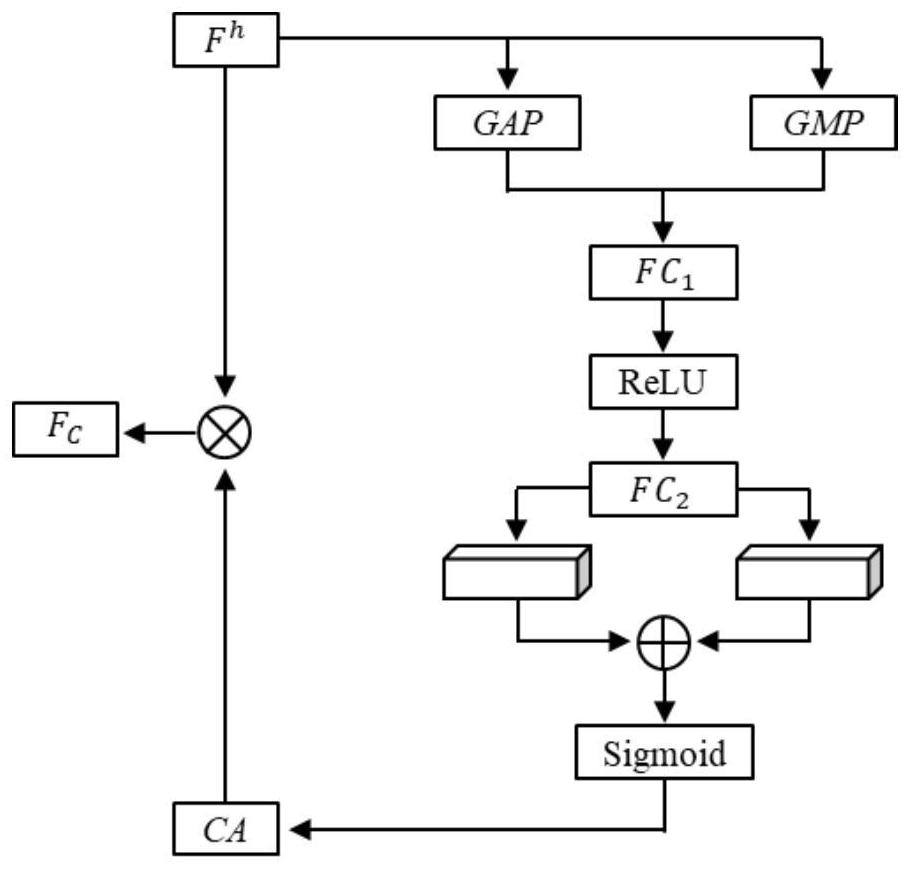

Micro-expression recognition method based on space-time appearance movement attention network

Active | CN112307958A | Suppression identifies features with small contributions | Take full advantage of complementarity | Character and pattern recognition | Neural architectures | Pattern recognition | Network on

The invention relates to a micro-expression recognition method based on a space-time appearance movement attention network, and the method comprises the following steps: preprocessing a micro-expression sample to obtain an original image sequence and an optical flow sequence with a fixed number of frames; constructing a space-time appearance motion network which comprises a space-time appearance network STAN and a space-time motion network STMN, designing the STAN and the STMN by adopting a CNN-LSTM structure, learning spatial features of micro-expressions by using a CNN model, and learning temporal features of the micro-expressions by using an LSTM model; introducing hierarchical convolution attention mechanisms into the CNN models of the STAN and the STMN, applying a multi-scale kernel spatial attention mechanism to the low-level network and a global double-pooling channel attention mechanism to the high-level network, to obtain an STAN and an STMN with the attention mechanism added; inputting the original image sequence into the attention-augmented STAN for training and the optical flow sequence into the attention-augmented STMN for training, and integrating the outputs for the original image sequence and the optical flow sequence through a feature cascade SVM to achieve the micro-expression recognition task and improve the accuracy of micro-expression recognition.

Owner:HEBEI UNIV OF TECH +2

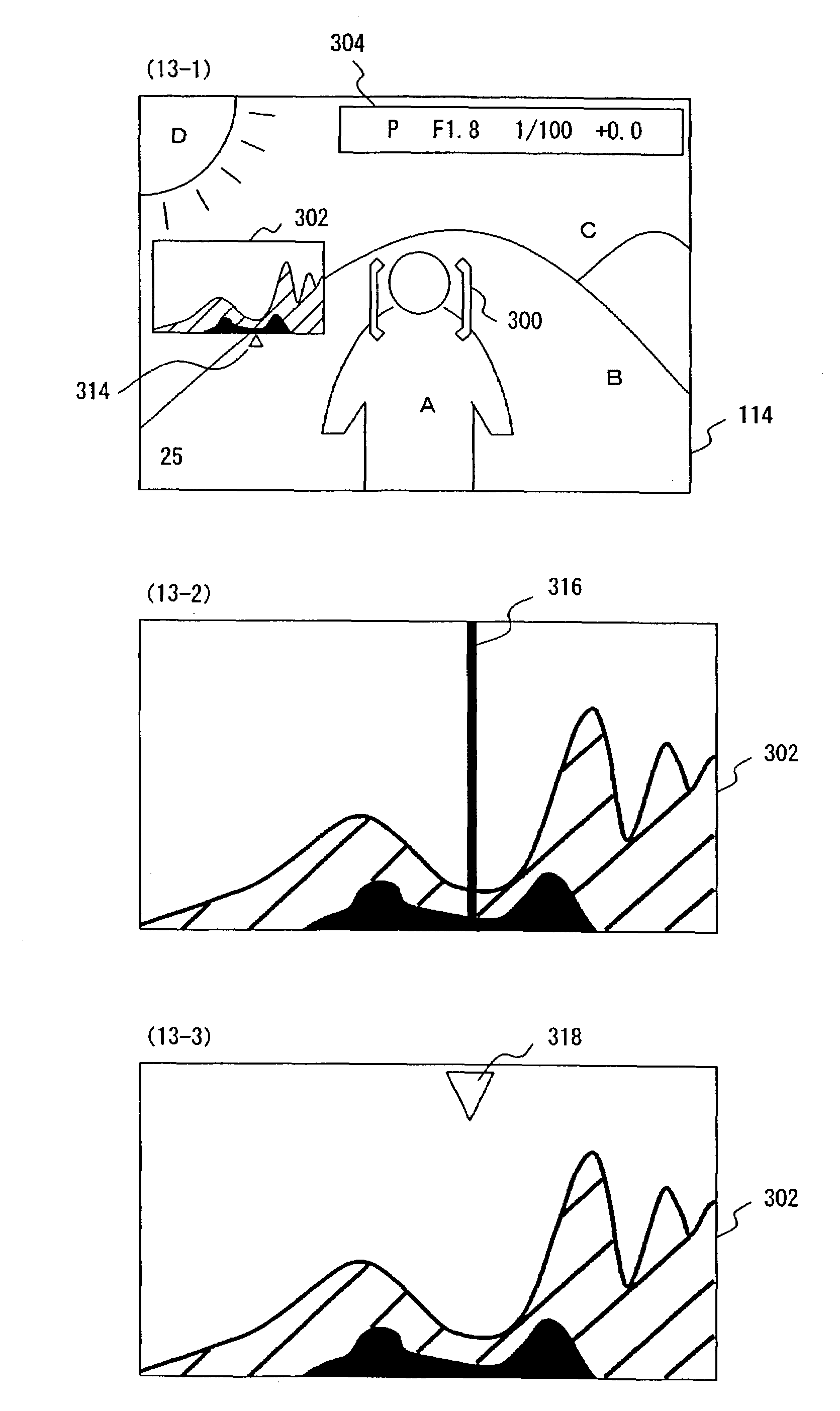

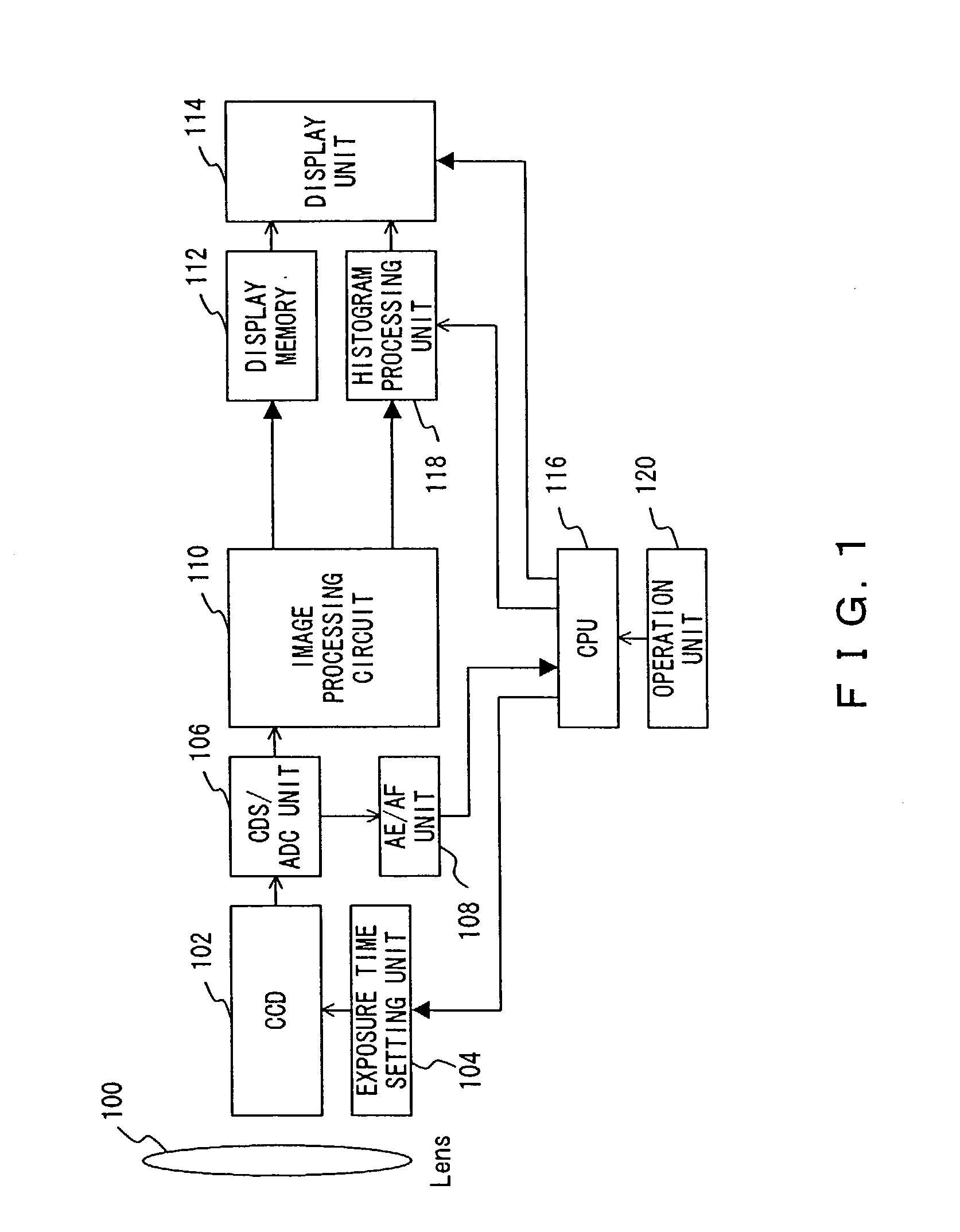

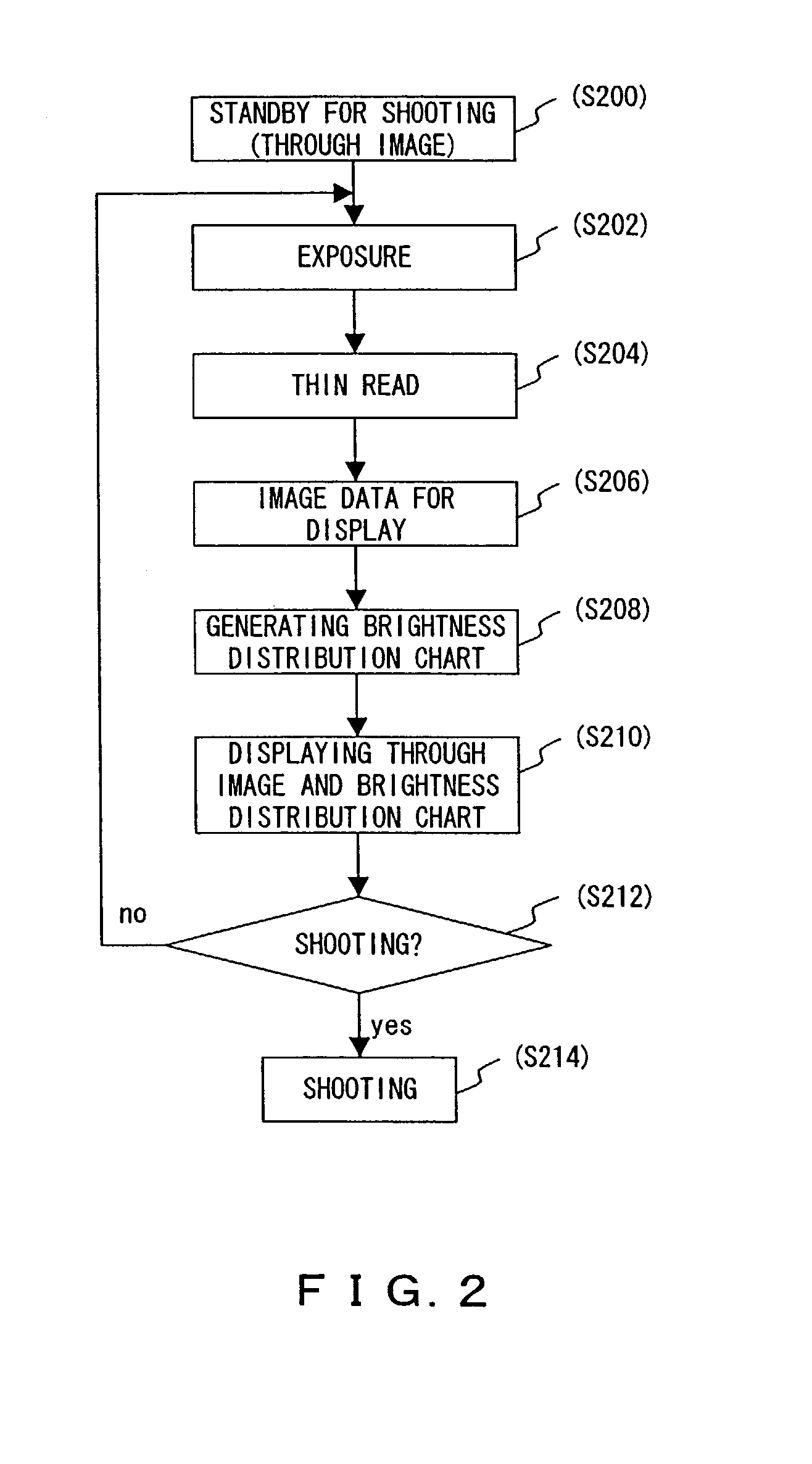

Image pickup apparatus with brightness distribution chart display capability

Active | US7271838B2 | Easy to adjust | Feature valid | Television system details | Color signal processing circuits | Computer graphics (images) | Lightness

An image pickup apparatus includes an image pickup unit for outputting an electronic subject image, a metering unit for computing a metering value from the subject image, a brightness distribution chart generation unit for generating a brightness distribution chart from the subject image, and a display unit for overlaying the brightness distribution chart on the subject image and displaying them, and provides the brightness distribution chart for the display unit in various styles.

Owner:OM DIGITAL SOLUTIONS CORP +1

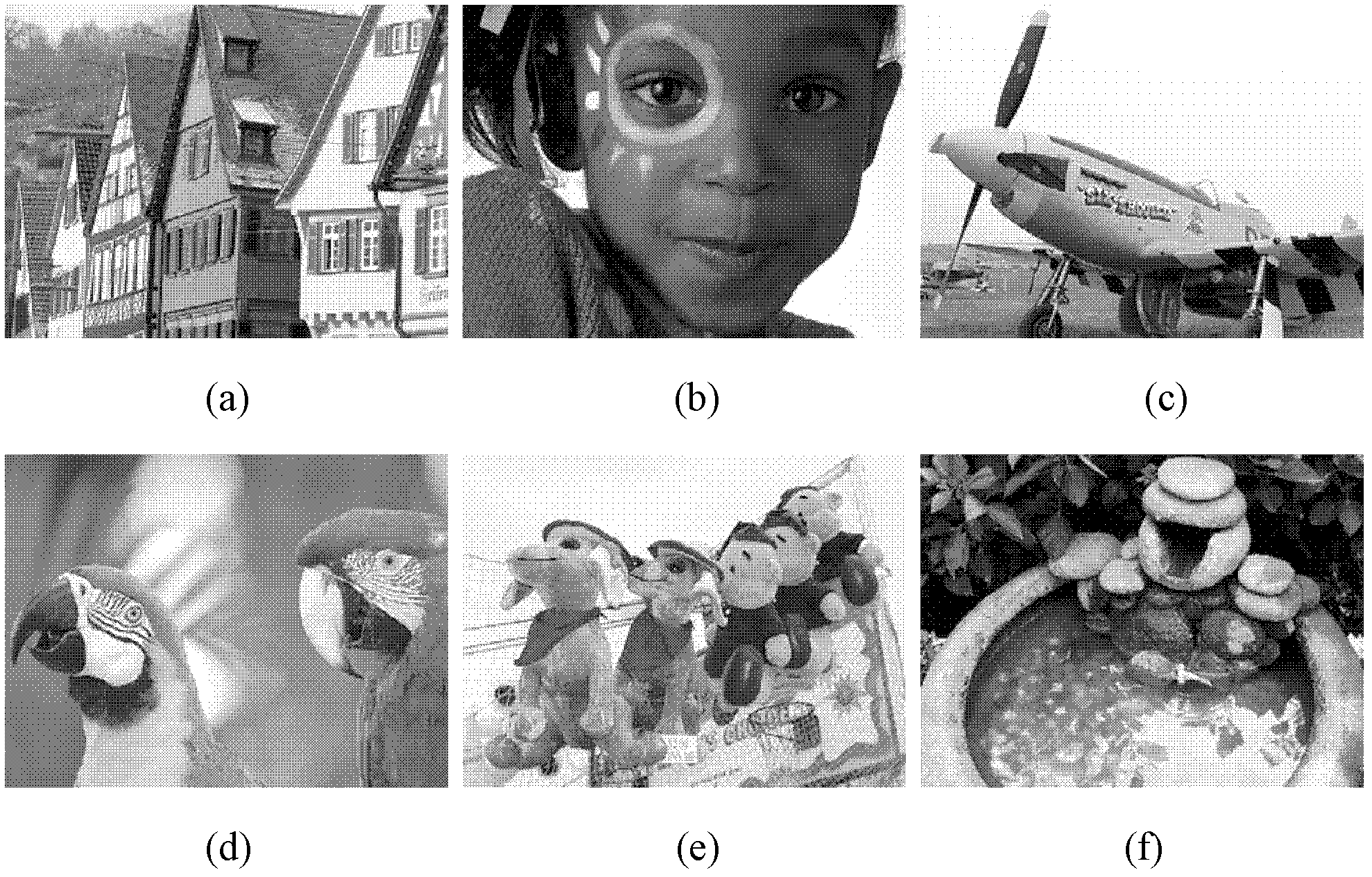

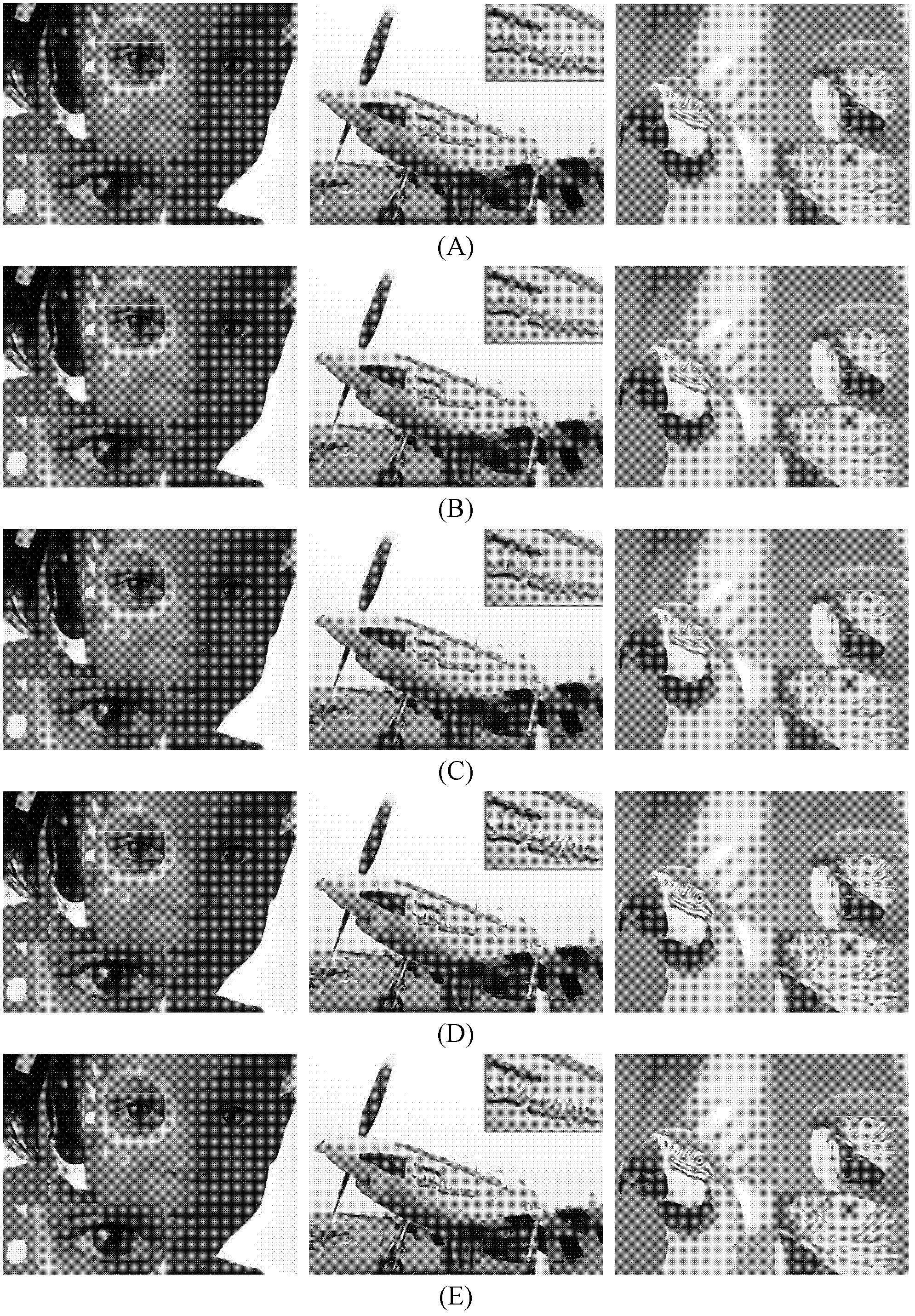

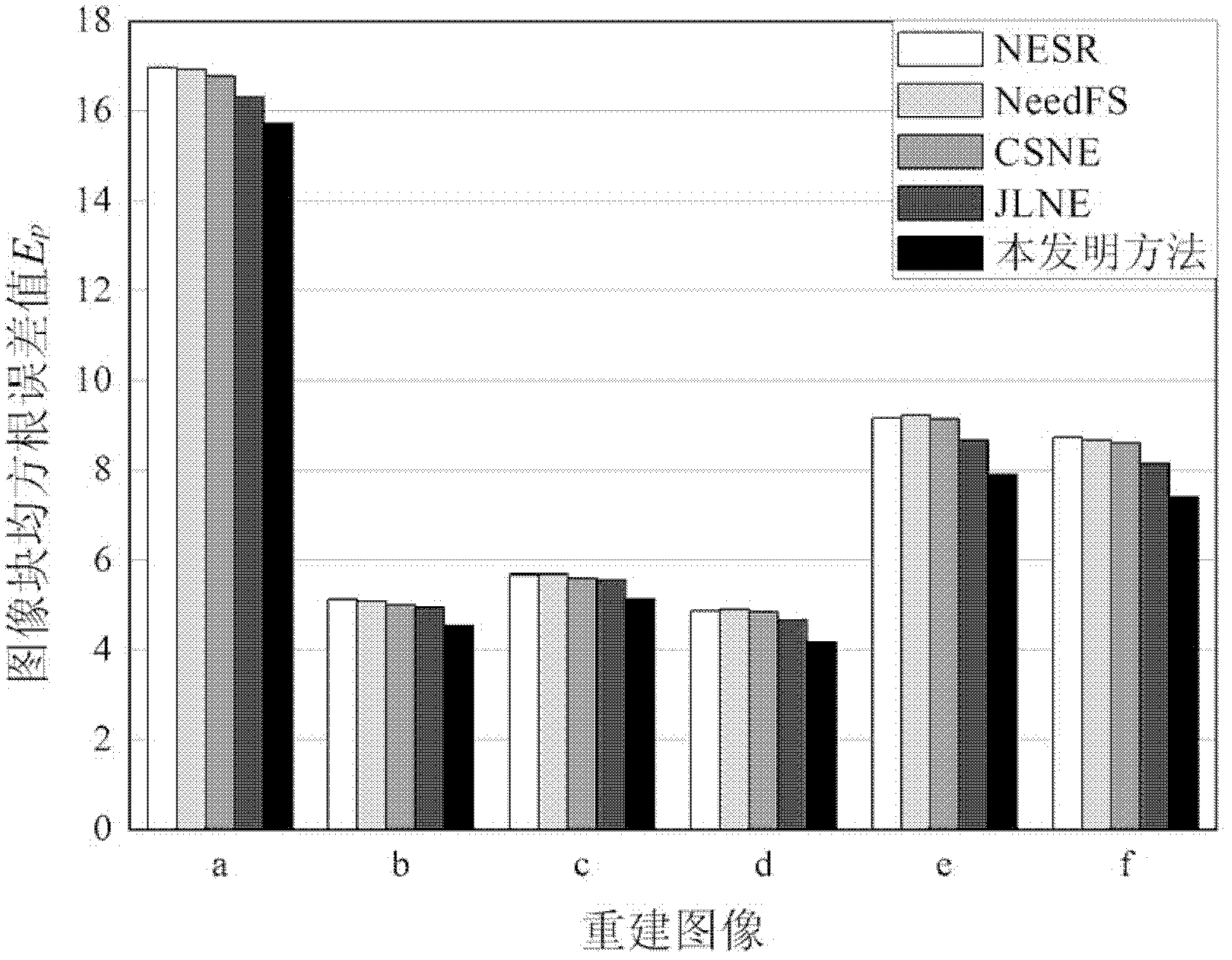

Image super resolution (SR) reconstruction method based on subspace projection and neighborhood embedding

Inactive | CN102629374A | Feature valid | Reduce dimensionality | Image enhancement | Original data | Weight coefficient

The invention discloses an image super resolution (SR) reconstruction method based on subspace projection and neighborhood embedding. The method is characterized by: using first and secondary subspace projection methods to project original high-dimensional data to a low-dimensional space, using dimension reduction feature vectors to show a feature of a low-resolution image block so that global structure information and local structure information of original data can be maintained; comparing a Euclidean distance between the dimension reduction feature vectors in the low-dimensional space, finding a neighborhood block which is most matched with the low-resolution image block to be reconstructed, using a similarity and a scale factor between the feature vectors to construct an accurate embedded weight coefficient so that a searching speed and matching precision can be increased; then constructing the similarity and the scale factor between the feature vectors, calculating the accurate weight coefficient and acquiring more high frequency information from a training database; finally, according to the weight coefficient and the neighborhood block, estimating the high-resolution image block with high precision, reconstructing the image which has the high similarity with a real object, which is good for later-stage real object identification processing.

Owner:SOUTHWEST JIAOTONG UNIV

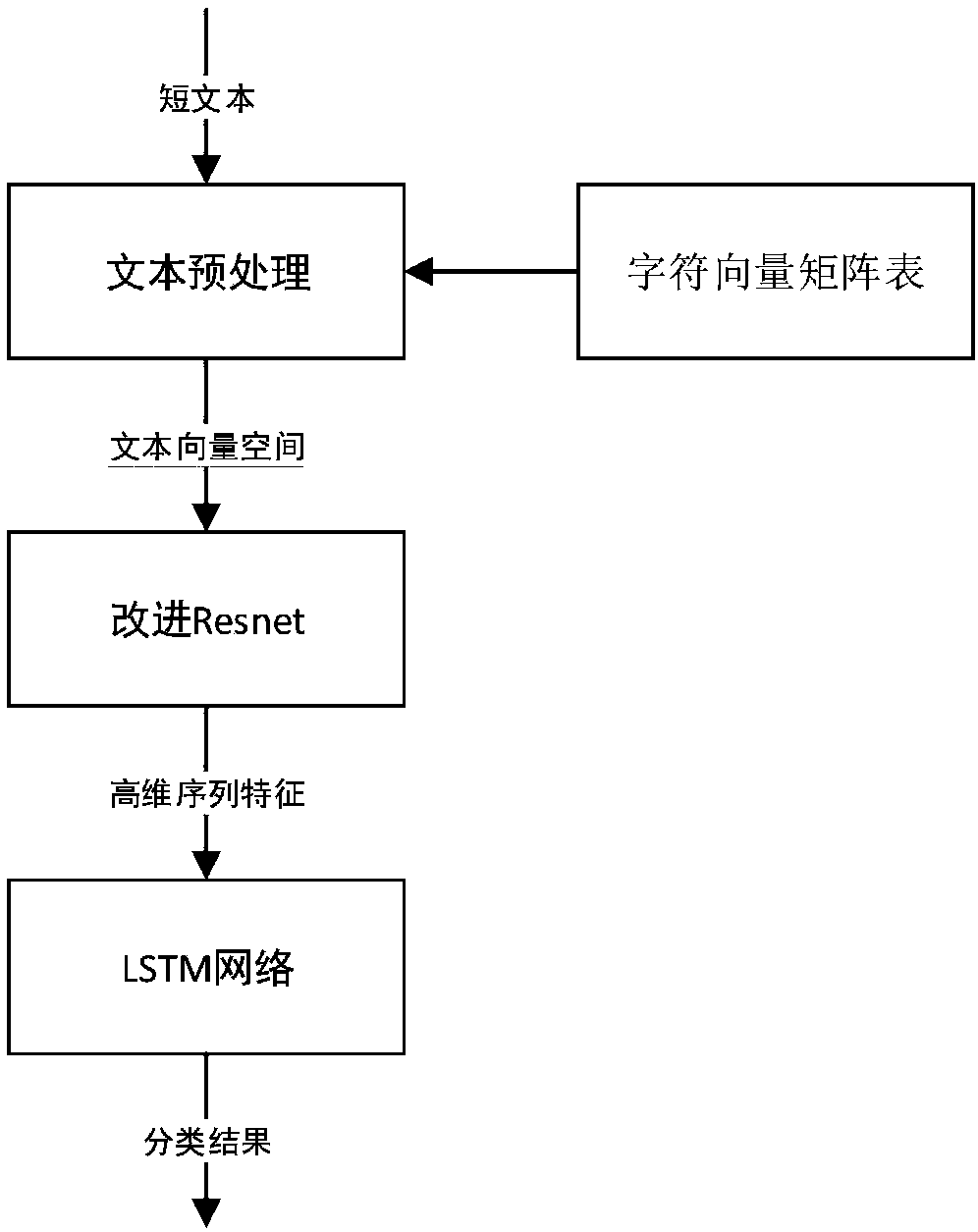

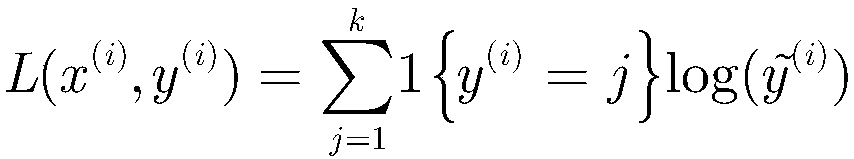

Character-level nested deep network-based text classification method

Active | CN107832458A | Valid conversion | Feature valid | Neural architectures | Special data processing applications | Feature extraction | Text categorization

The invention relates to a character-level nested deep network-based text classification method. The method comprises the following steps: S1, constructing a character vector matrix table; S2, performing short text preprocessing; S3, extracting high-dimensional sequence features by an improved Resnet; and S4, performing LSTM network classification. The character-level text conversion can effectively convert all texts, reduces the dimension remarkably compared with a conventional vector space model, and does not ignore low-frequency words. In addition, the improved Resnet can self-learn a feature extraction method; compared with conventional methods such as the TF-IDF formula, mutual information, information gain and the χ² statistic, the extracted features are more effective and abstract. Finally, the LSTM network classification can consider the sequence relationship between words, so that text classification can be performed more accurately.

Owner:SUN YAT SEN UNIV

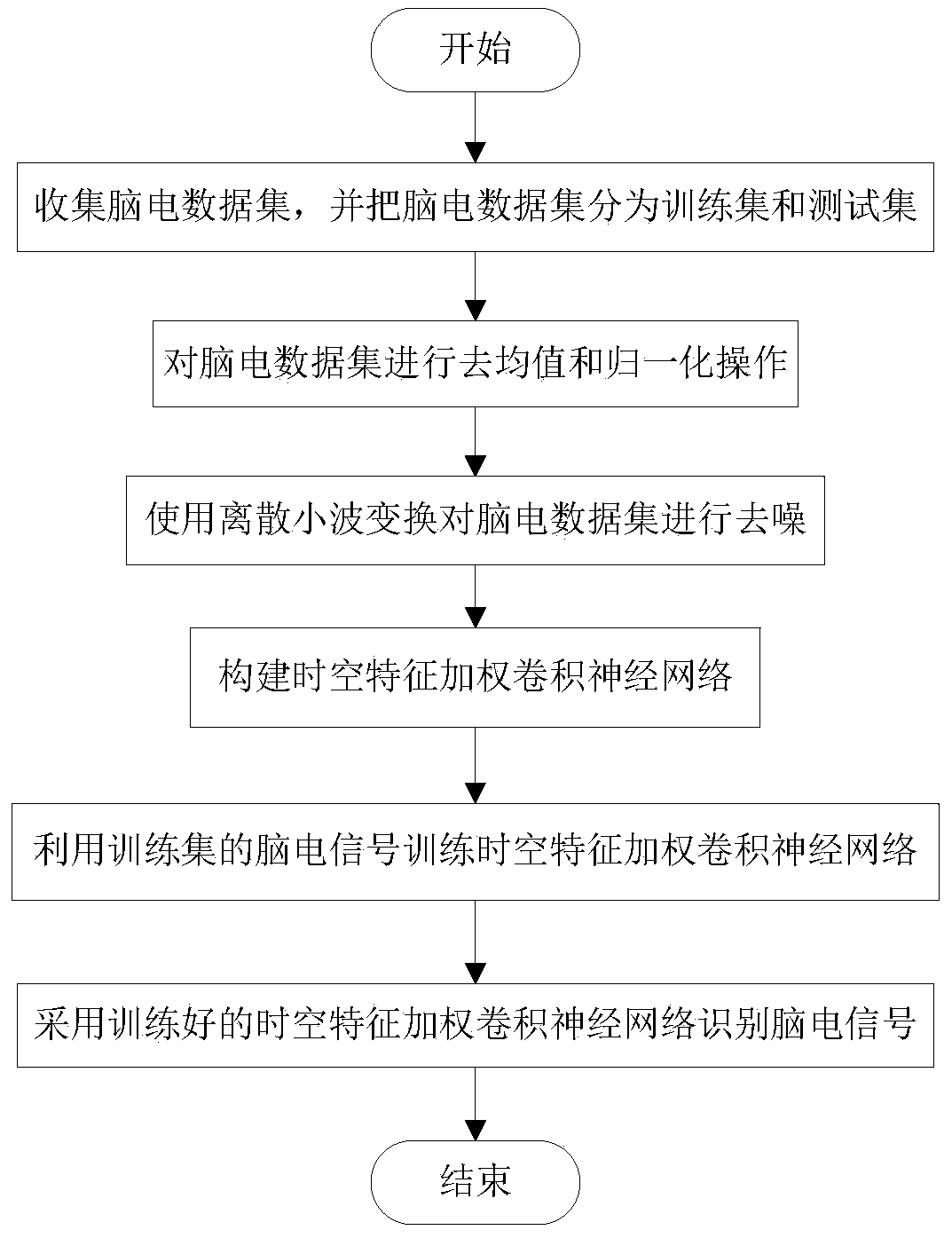

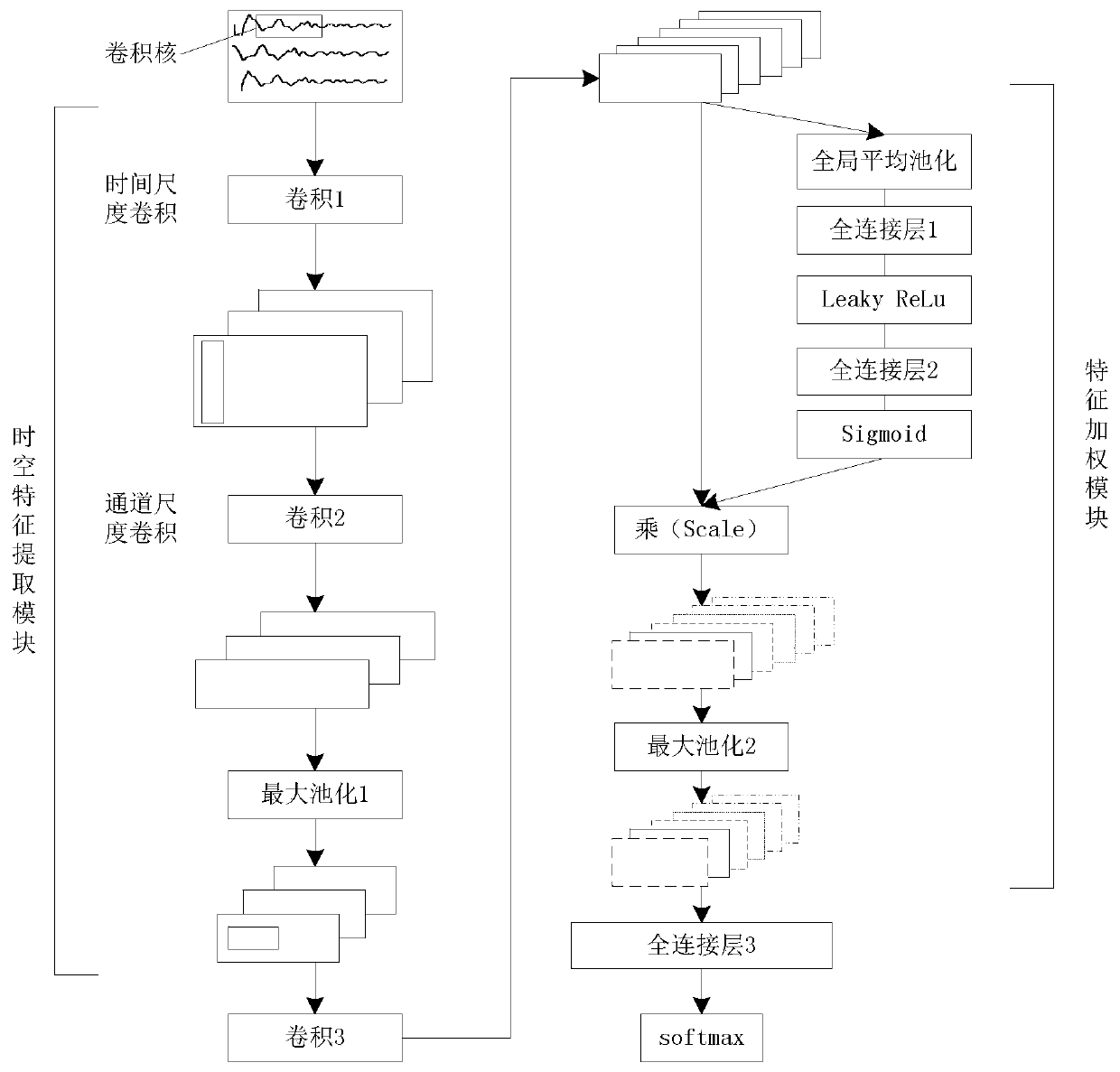

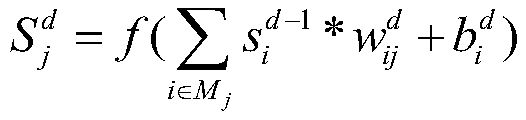

Electroencephalogram signal recognition method based on spatiotemporal feature weighted convolutional neural network

Pending | CN110929581A | Distinctive features | Simple structure | Character and pattern recognition | Neural architectures | Feature extraction | Motor imagery

The invention provides an electroencephalogram signal identification method based on a spatiotemporal feature weighted convolutional neural network. The method comprises the following steps: firstly, de-noising a motor imagery electroencephalogram signal by using discrete wavelet transform; then designing a spatiotemporal feature weighted convolutional neural network to perform feature extraction on the processed electroencephalogram signal, wherein the convolution operation of the first layer is carried out on the time scale of the motor imagery electroencephalogram signal and the convolution operation of the second layer is carried out on the channel scale, so that the extracted features include the space-time characteristics of the signal. Because the extracted features differ in importance, a feature weighting module is added to the network, so that important features are highlighted and unimportant features are weakened. The features extracted by the model reflect the characteristics of the various motor imagery electroencephalogram signals more effectively, and the recognition accuracy of the motor imagery electroencephalogram signals can be improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

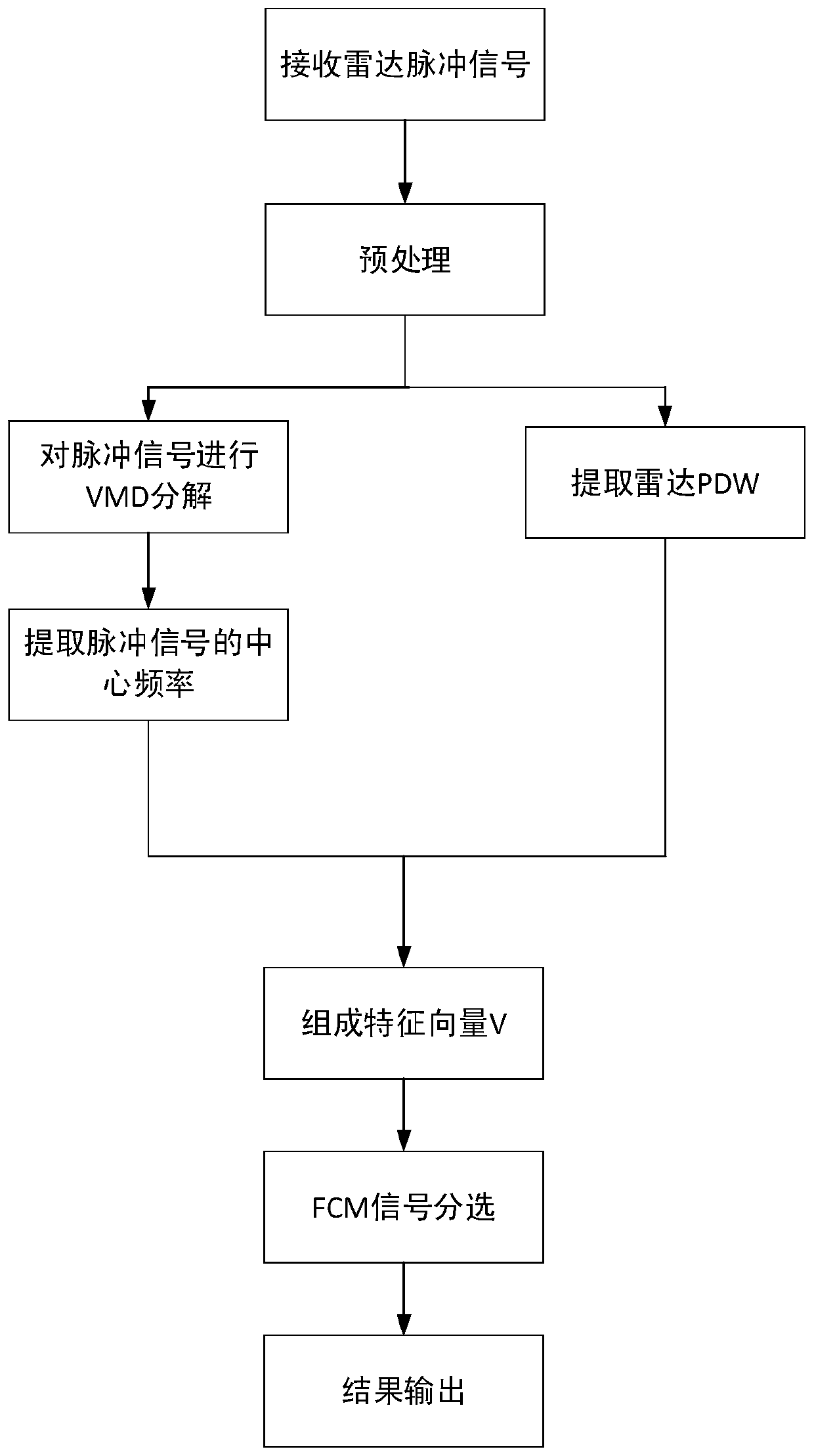

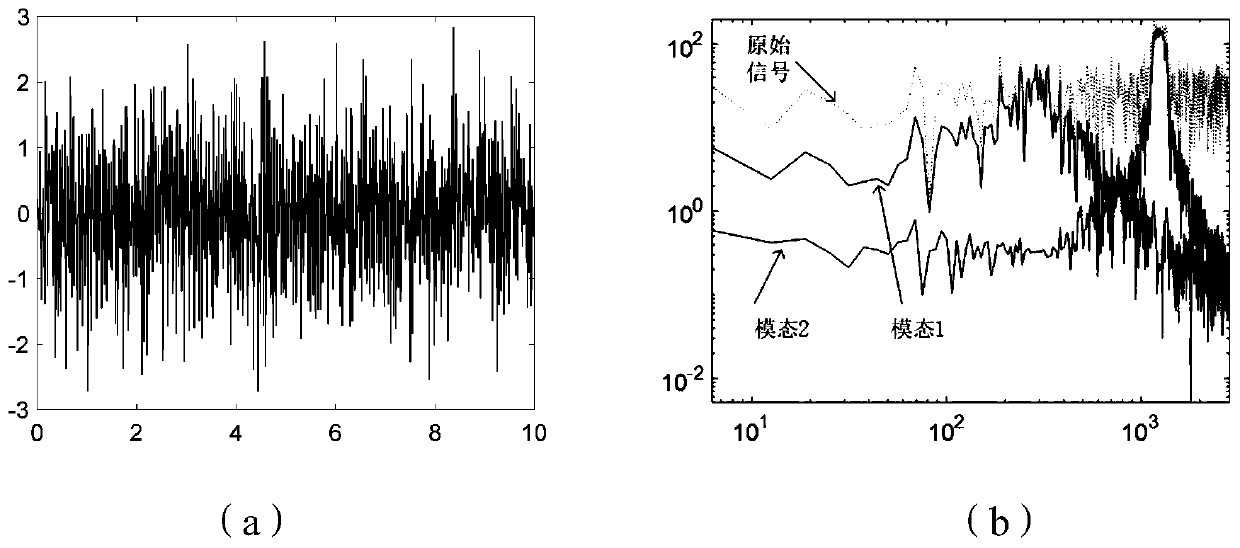

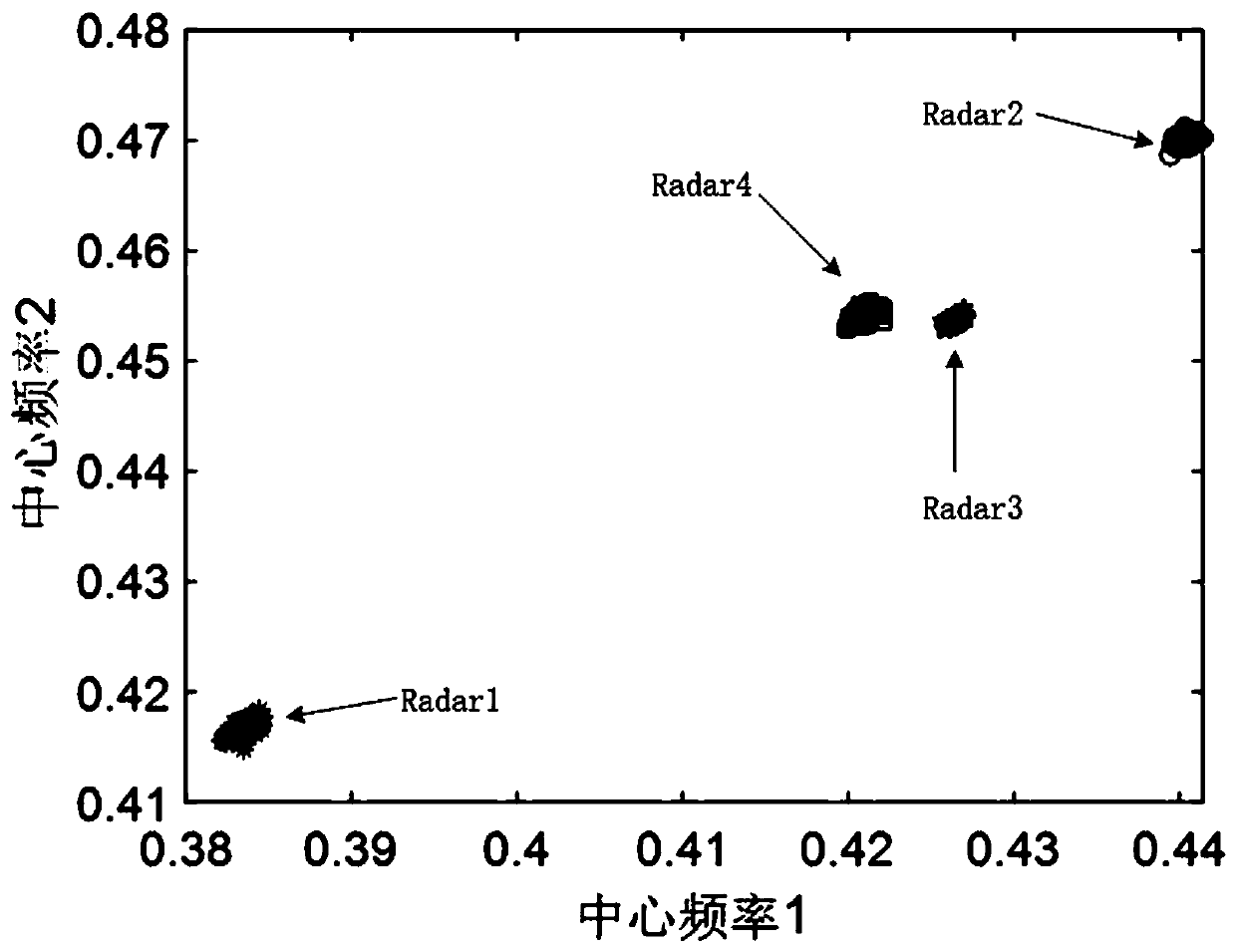

Radar radiation source feature extraction and classification method based on variational mode decomposition

Pending | CN110188647A | Good separation between classes | Feature valid | Character and pattern recognition | Signal-to-noise ratio (imaging) | VIT signals

The invention discloses a radar radiation source feature extraction and classification method based on variational mode decomposition. The method comprises the steps of: firstly, receiving a high-frequency pulse signal of a radar and preprocessing it to obtain a preprocessed signal; using digital channelization processing to generate the pulse description word parameters of the preprocessed signal, and extracting the arrival angle and pulse width from the pulse description word parameters; processing the central frequency intra-pulse characteristics of the signals through a variational mode decomposition method and taking them as a supplement to the conventional parameters, thereby forming the characteristic parameters of a radar radiation source; and finally adopting a fuzzy C-means method to sort the radar radiation sources. The method can be used for classifying frequency modulation type radar radiation sources, has very good noise suppression capability, and can effectively improve the classification of radar radiation sources under a low signal-to-noise ratio.

Owner:XIDIAN UNIV

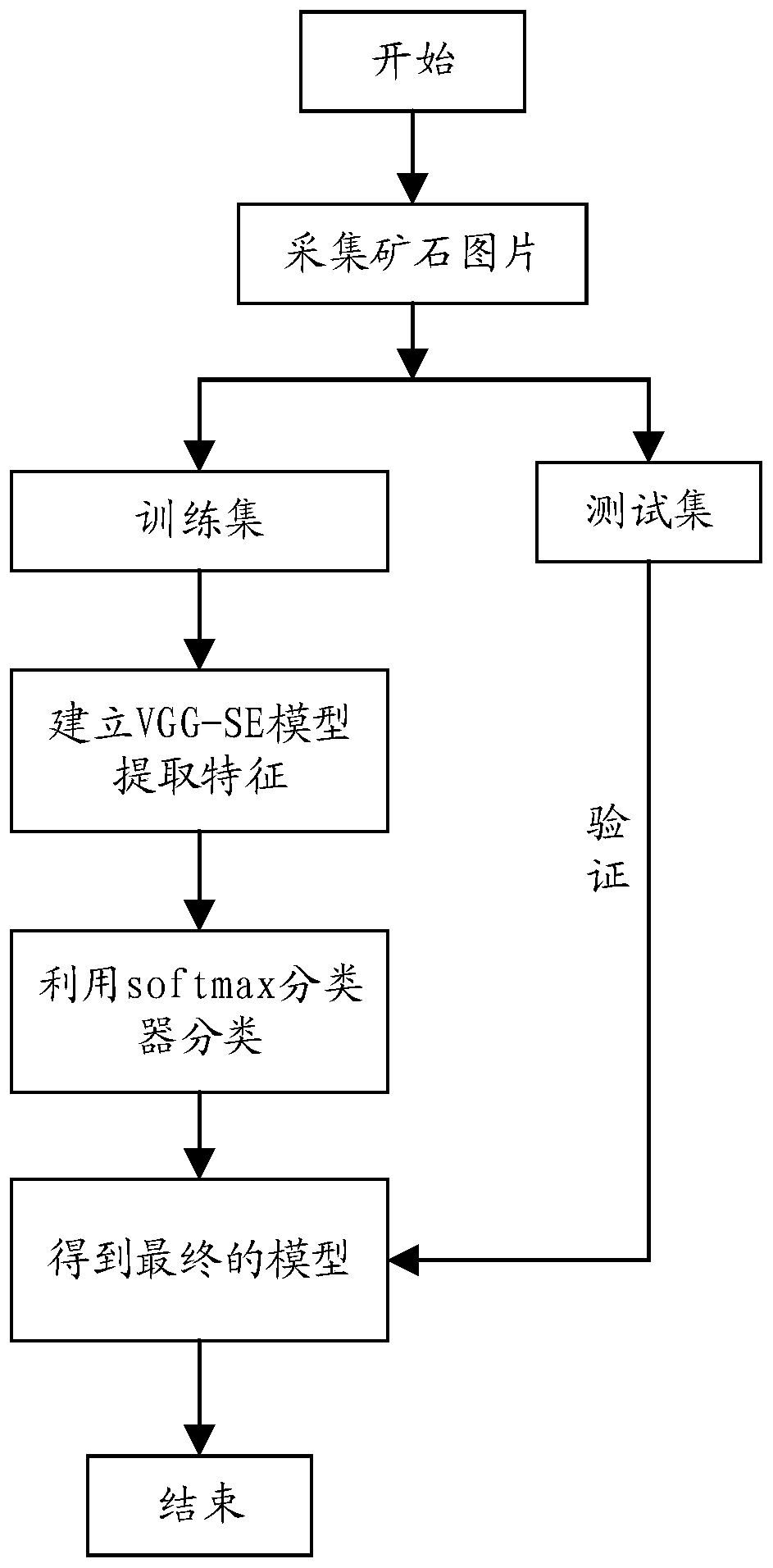

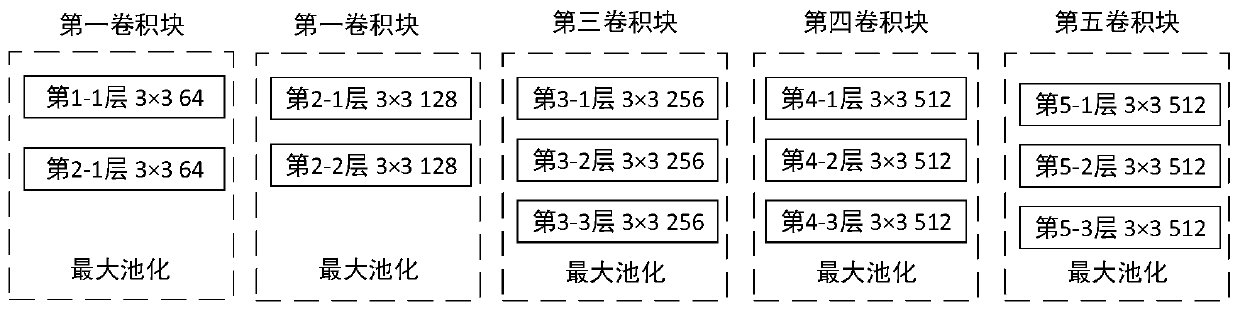

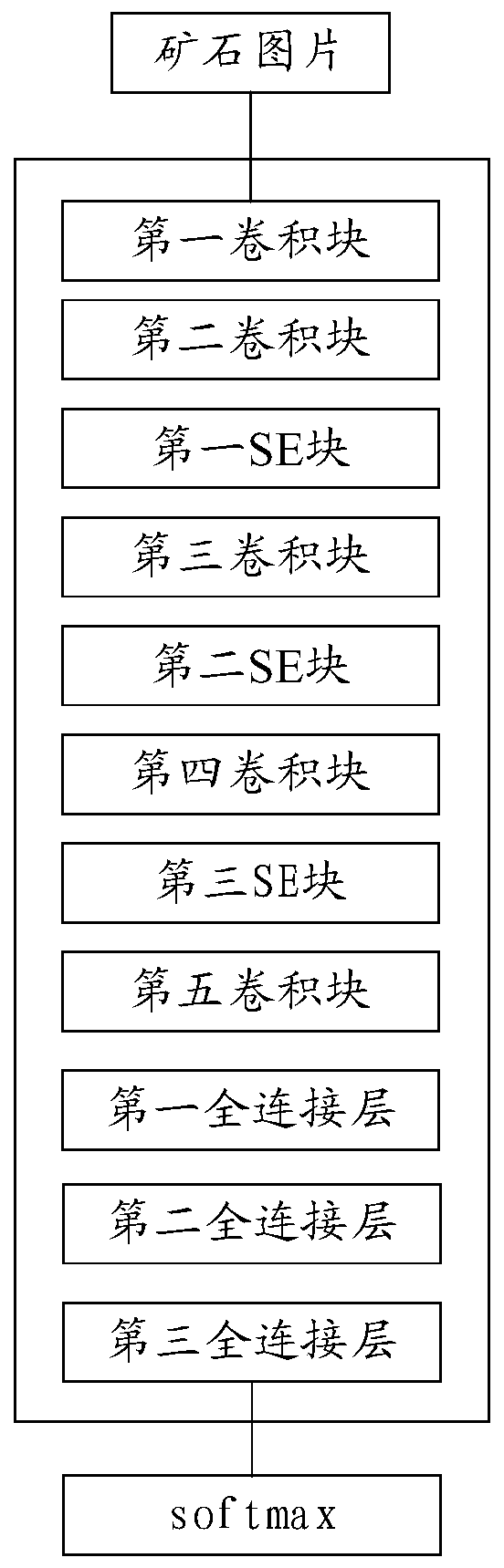

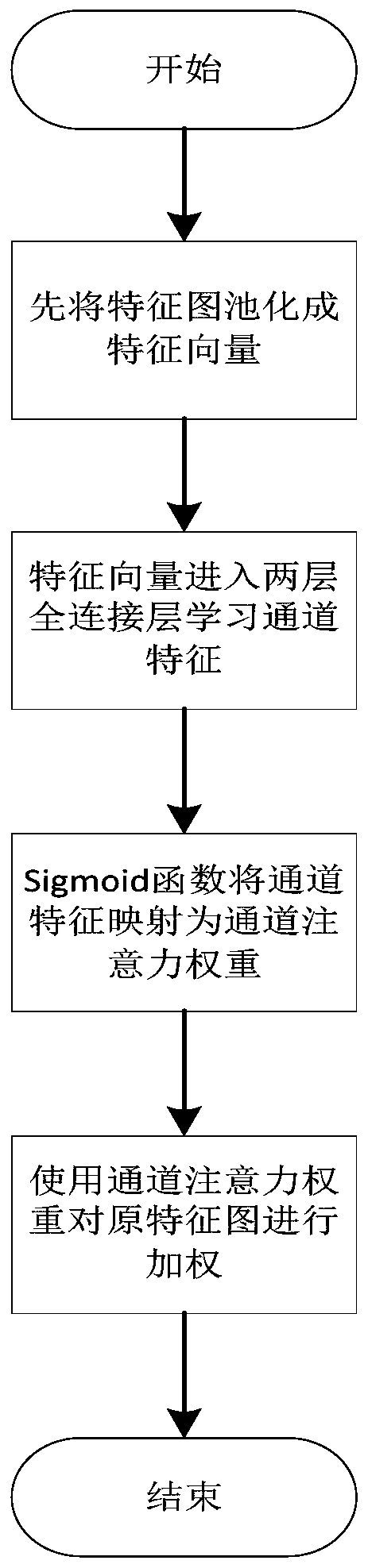

An ore sorting method based on a convolutional neural network

Inactive | CN109740656A | Play the role of feature recalibration | Easy extraction | Character and pattern recognition | Feature vector | Feature extraction

The invention provides an ore sorting method based on a convolutional neural network. The method comprises the following steps: making a training set and a test set of ore pictures; building a VGG-SE model, inputting the ore pictures in the training set into the VGG-SE model, and using the VGG-SE model to carry out feature extraction on the ore pictures to obtain a group of feature vectors; inputting the obtained group of feature vectors into a softmax classifier, and classifying the ore pictures through the softmax classifier to obtain a trained VGG-SE model; and inputting the ore pictures in the test set into the trained VGG-SE model to obtain a prediction result. The method has the advantage that machine classification replaces existing manual classification, so that the classification accuracy is greatly improved and economic benefits are improved at the same time.

Owner:HUAQIAO UNIVERSITY

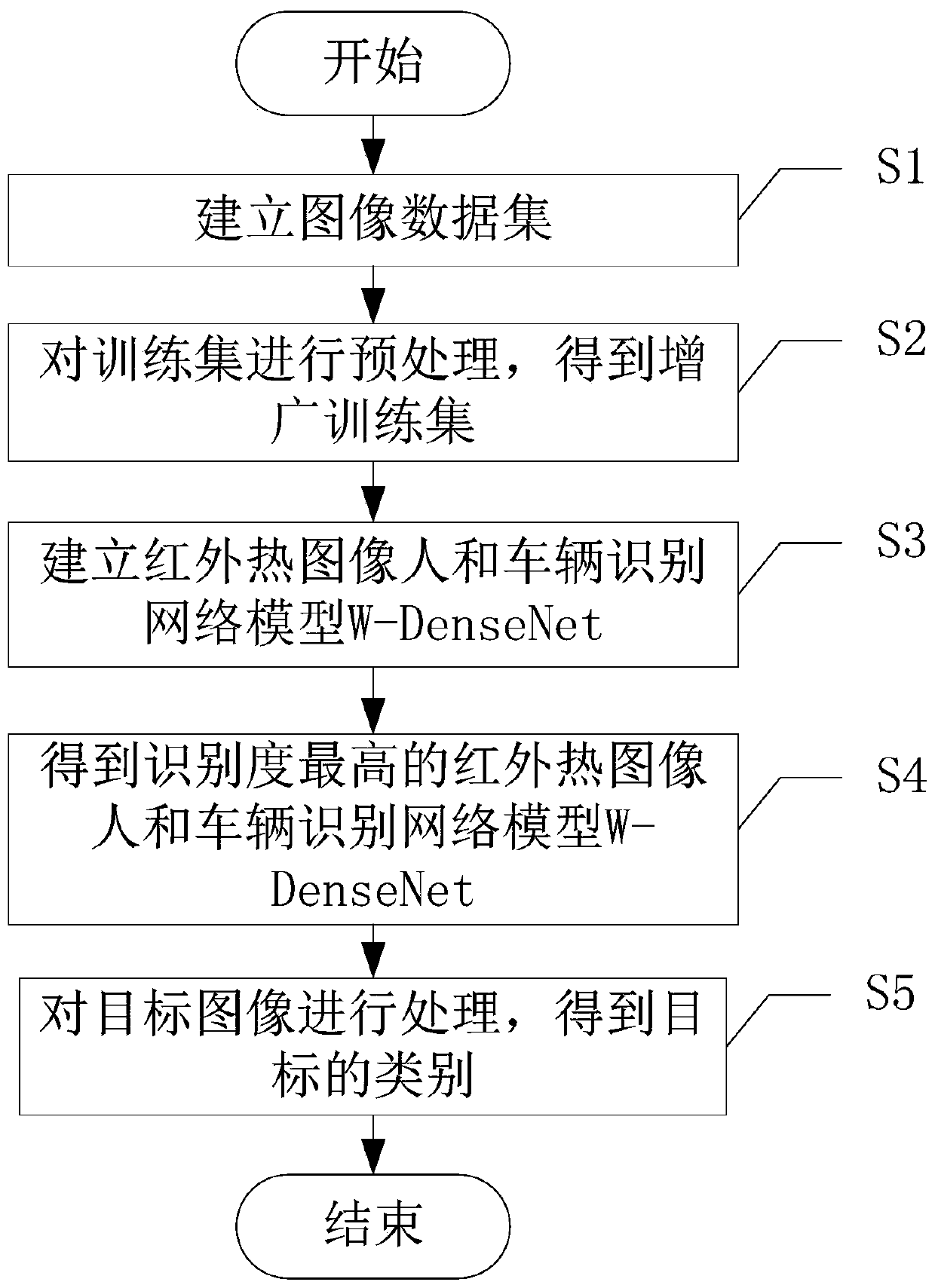

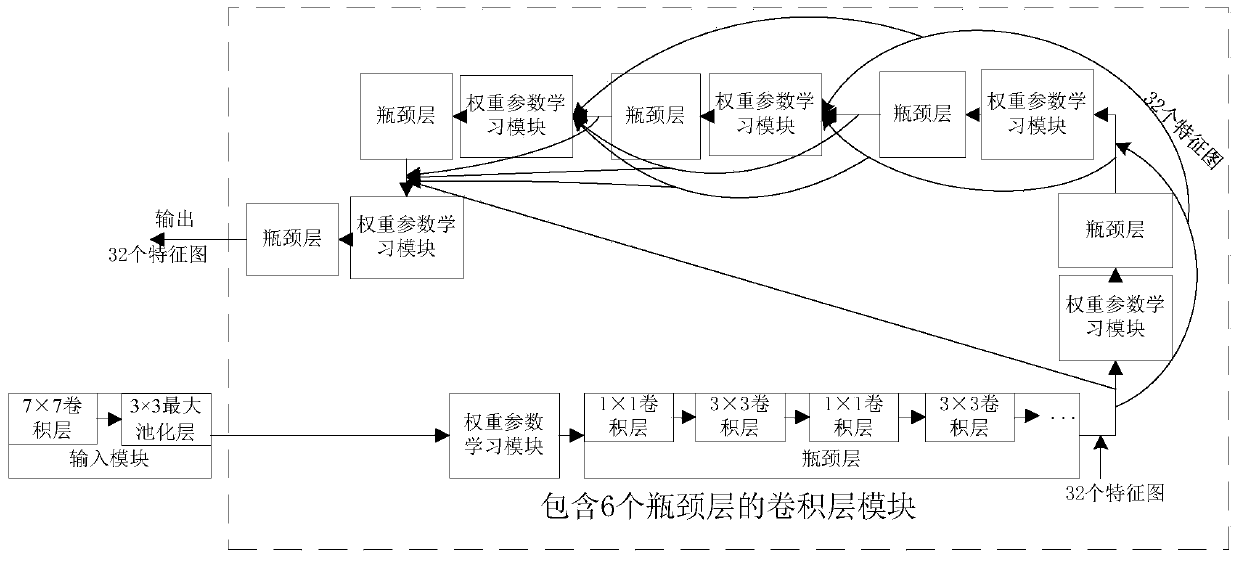

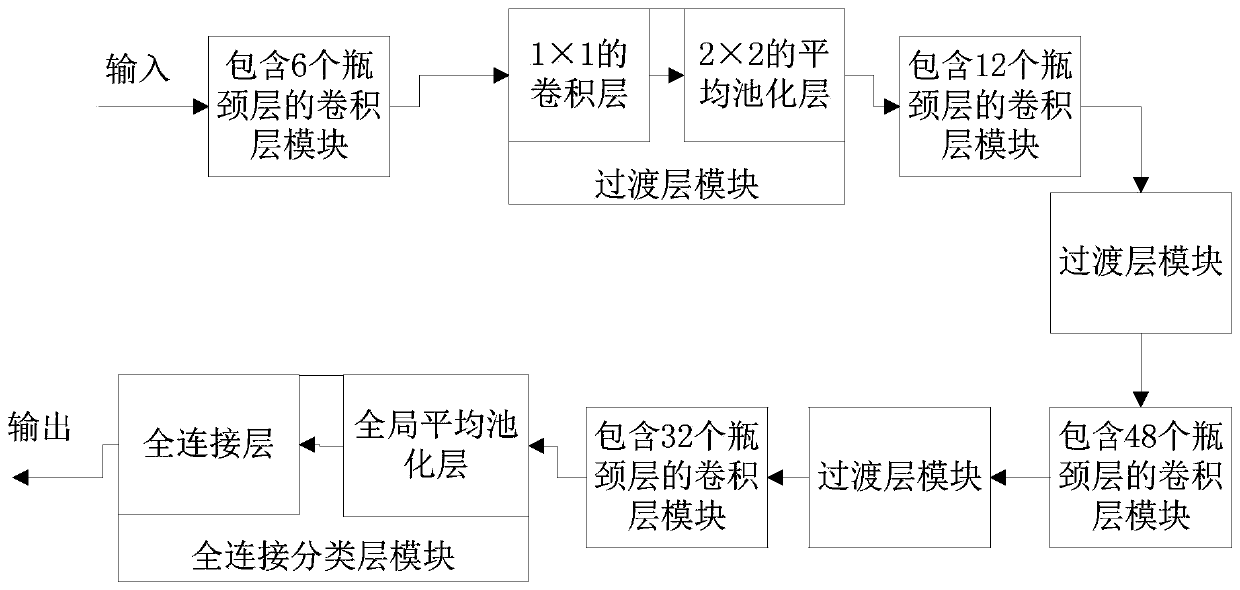

Human and vehicle infrared thermal image recognition method based on convolutional neural network

Active | CN111046964A | Effective resolution | Easy to identify | Biometric pattern recognition | Neural architectures | Network structure | Network model

The invention discloses a human and vehicle infrared thermal image recognition method based on a convolutional neural network. After the training set is augmented, the established infrared thermal image human and vehicle identification network model W-DenseNet is trained, and a test set is used for verification to obtain a W-DenseNet model with a high identification degree. The W-DenseNet model extracts features of different levels of the data layer by layer, so that a machine obtains a higher level of feature expression and understanding ability, the target category is effectively distinguished, and the purpose of identifying people and vehicles is achieved. A weight parameter learning module is added, so that the weight parameters learned during training of the network structure are applied as weights to the input feature map of the corresponding convolution layer; important features are enhanced, invalid features are suppressed, and more effective features are extracted. Therefore, the accuracy of the network model for identifying the gender of men and women in the infrared thermal image can be improved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

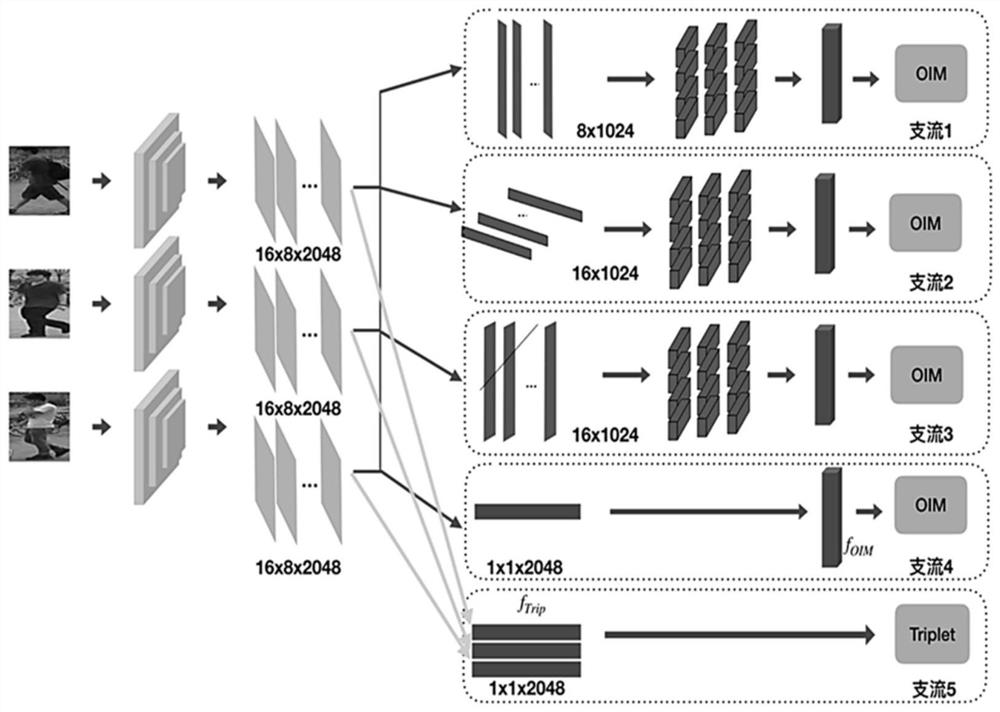

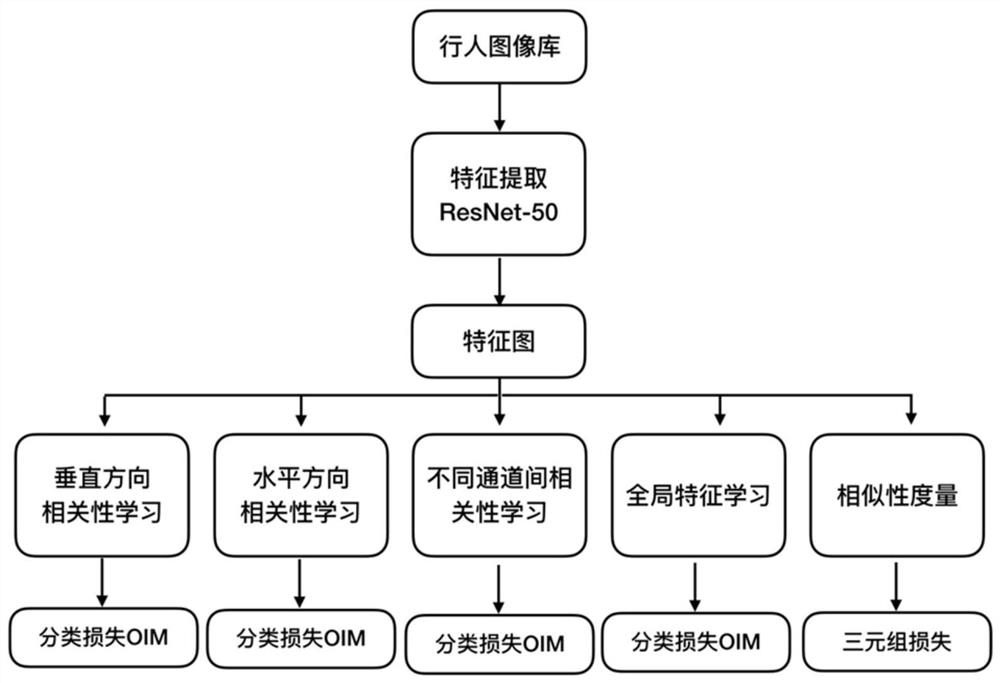

Pedestrian re-identification method based on multi-branch fusion model

Active | CN111814845A | Improve robustness | Improve accuracy | Image enhancement | Image analysis | Feature extraction | Algorithm

The invention relates to a pedestrian re-identification method based on a multi-branch fusion model. The method uses deep learning: preprocessing operations such as flipping, cropping and random erasing are carried out on the training set pictures; feature extraction is carried out through a basic network model; and a plurality of branch loss functions are used for fused joint training of the network. In the first and second branches, a capsule network is used to extract the spatial relations of slices at different positions in the horizontal and vertical directions; the third branch uses a capsule network to learn correlations between different channels of the obtained feature map; the fourth branch learns global features; and the fifth branch carries out the corresponding similarity measurement. By fusing the multiple branch models, the mutual relation between different segmentation areas is considered and the body part features in the horizontal direction can be effectively obtained, so that the features extracted by the network are more effective.

Owner:TONGJI UNIV

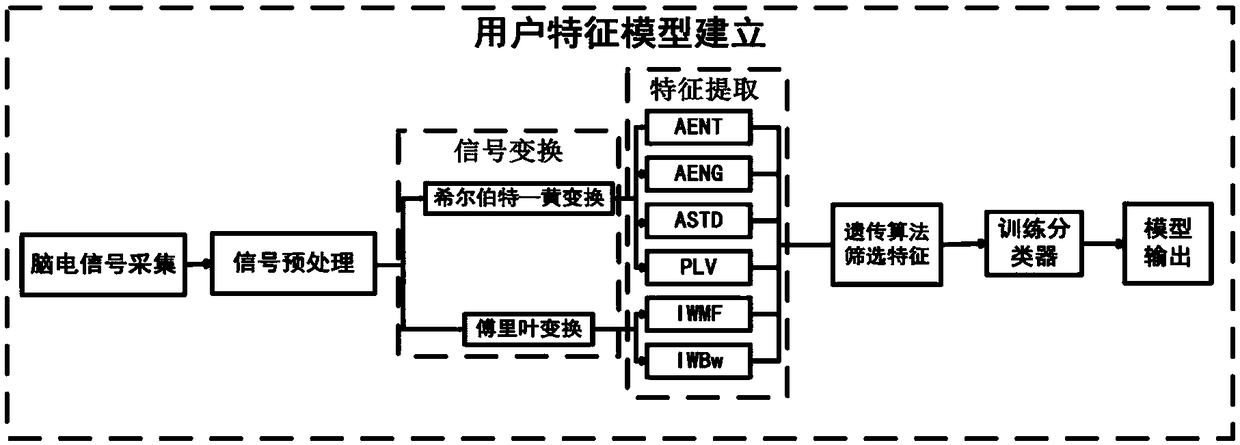

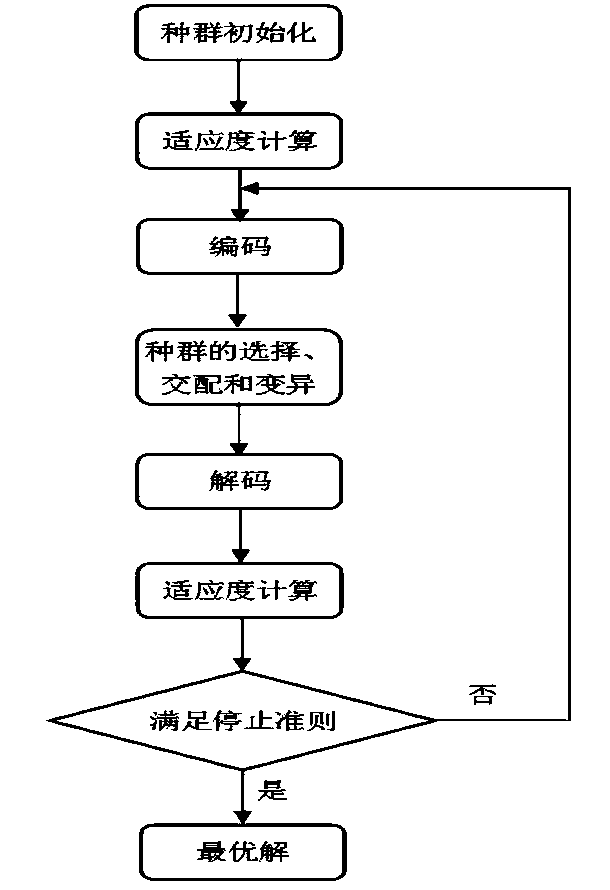

User characteristic model establishment method and system based on brain-computer interface and storage medium

Active | CN108363493A | Improve classification accuracy | Feature valid | Input/output for user-computer interaction | Graph reading | Feature screening | Brain computer interfacing

The invention discloses a user characteristic model establishment method and system based on a brain-computer interface, and a storage medium. The method comprises the steps that motor imagery electroencephalogram signals are acquired and preprocessed; Fourier transformation is conducted on the preprocessed motor imagery electroencephalogram signals to obtain the frequency spectrum, while Hilbert-Huang transformation is conducted on the same preprocessed signals to obtain the instantaneous amplitude and instantaneous phase, and feature extraction is conducted on the frequency spectrum, the instantaneous amplitude and the instantaneous phase; a genetic algorithm is used to conduct feature screening on the extracted features, and the screened features are used to train a classifier; and the trained classifier is output as the user feature model. Accordingly, the accuracy rate and stability of the model are improved. Because electroencephalogram signals differ greatly between users, a model having the optimum electroencephalogram signal features is determined for each specific user.

Owner:SHANDONG JIANZHU UNIV

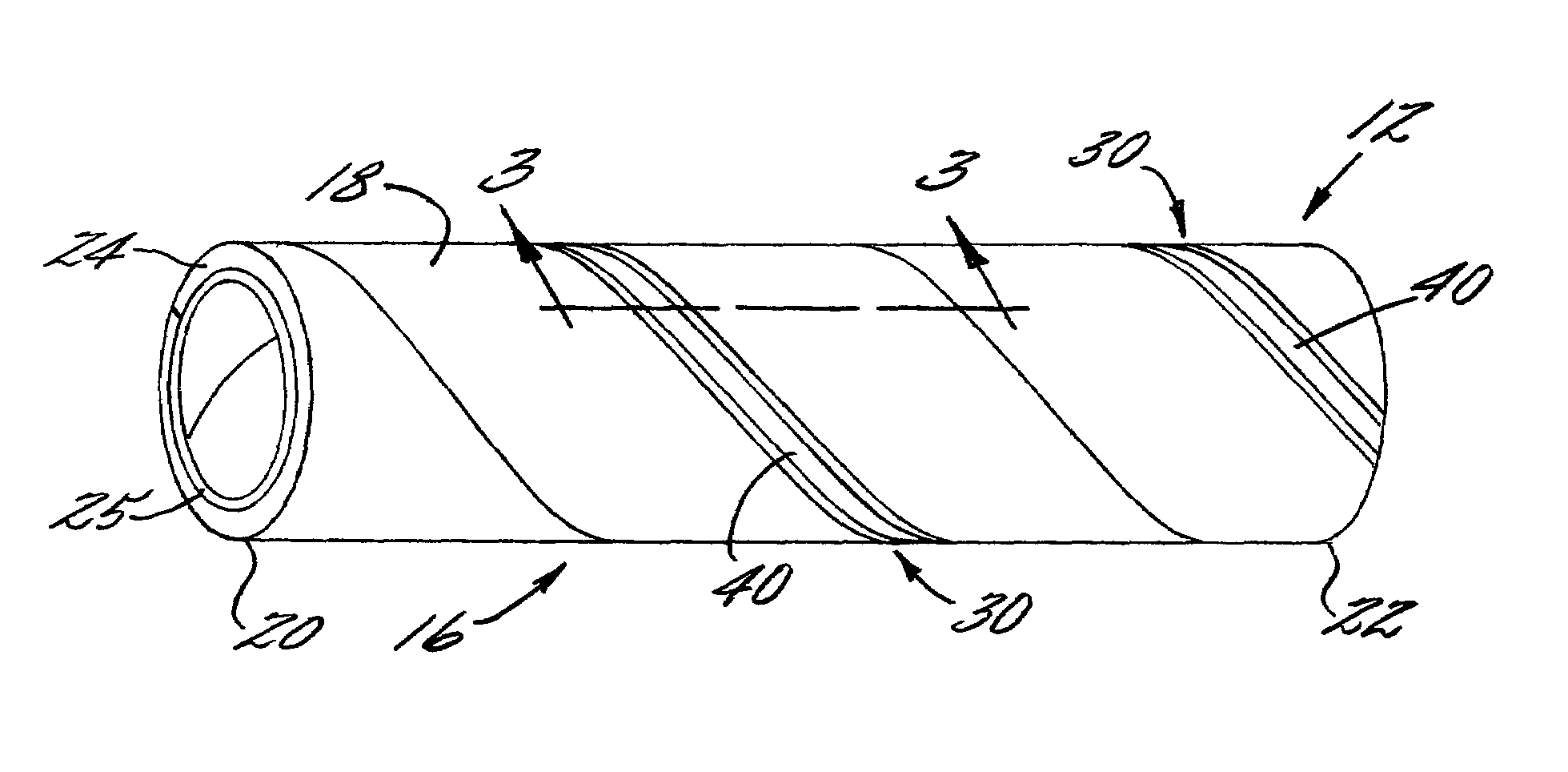

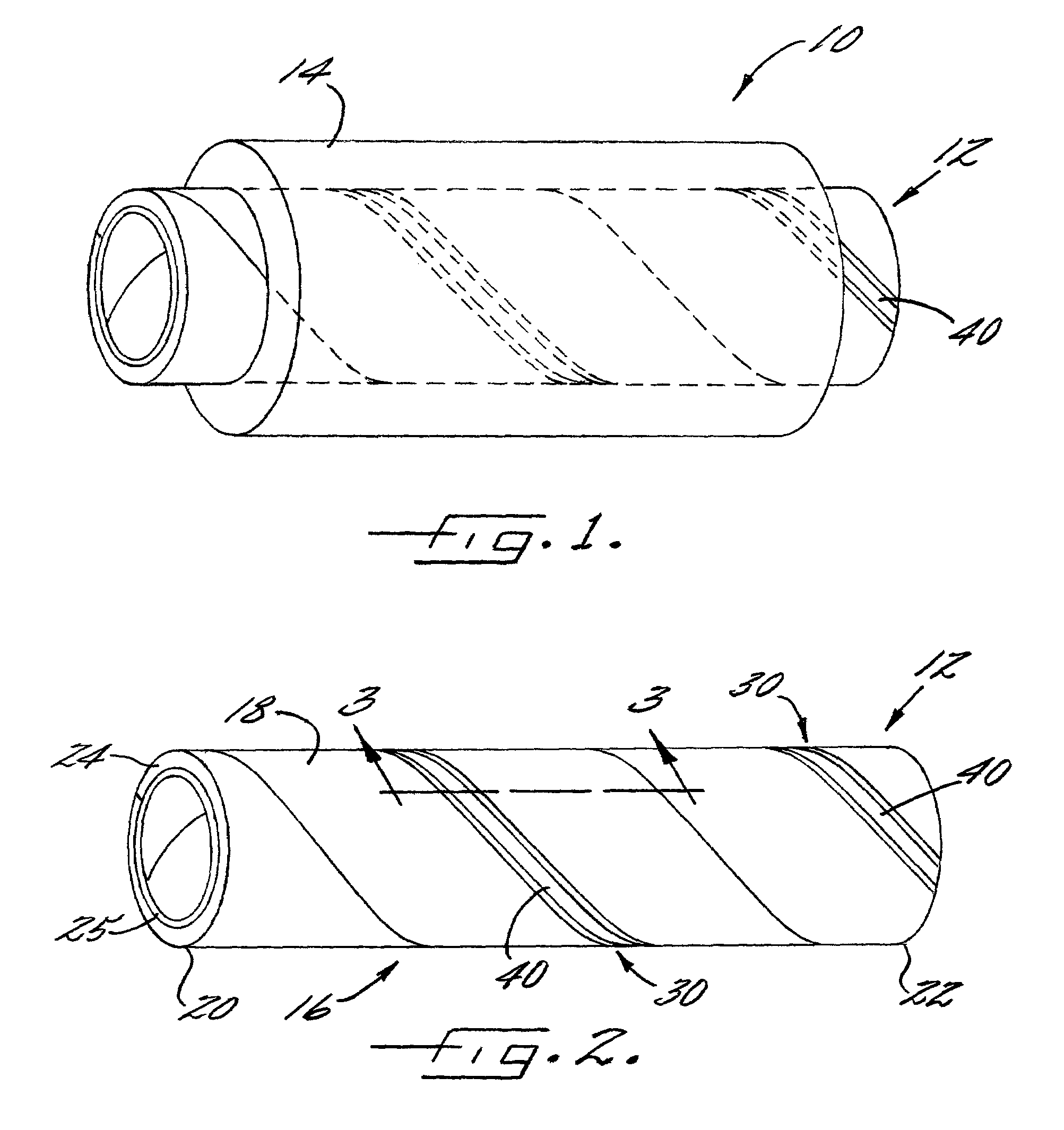

Textile carrier having identification feature and method for manufacturing the same

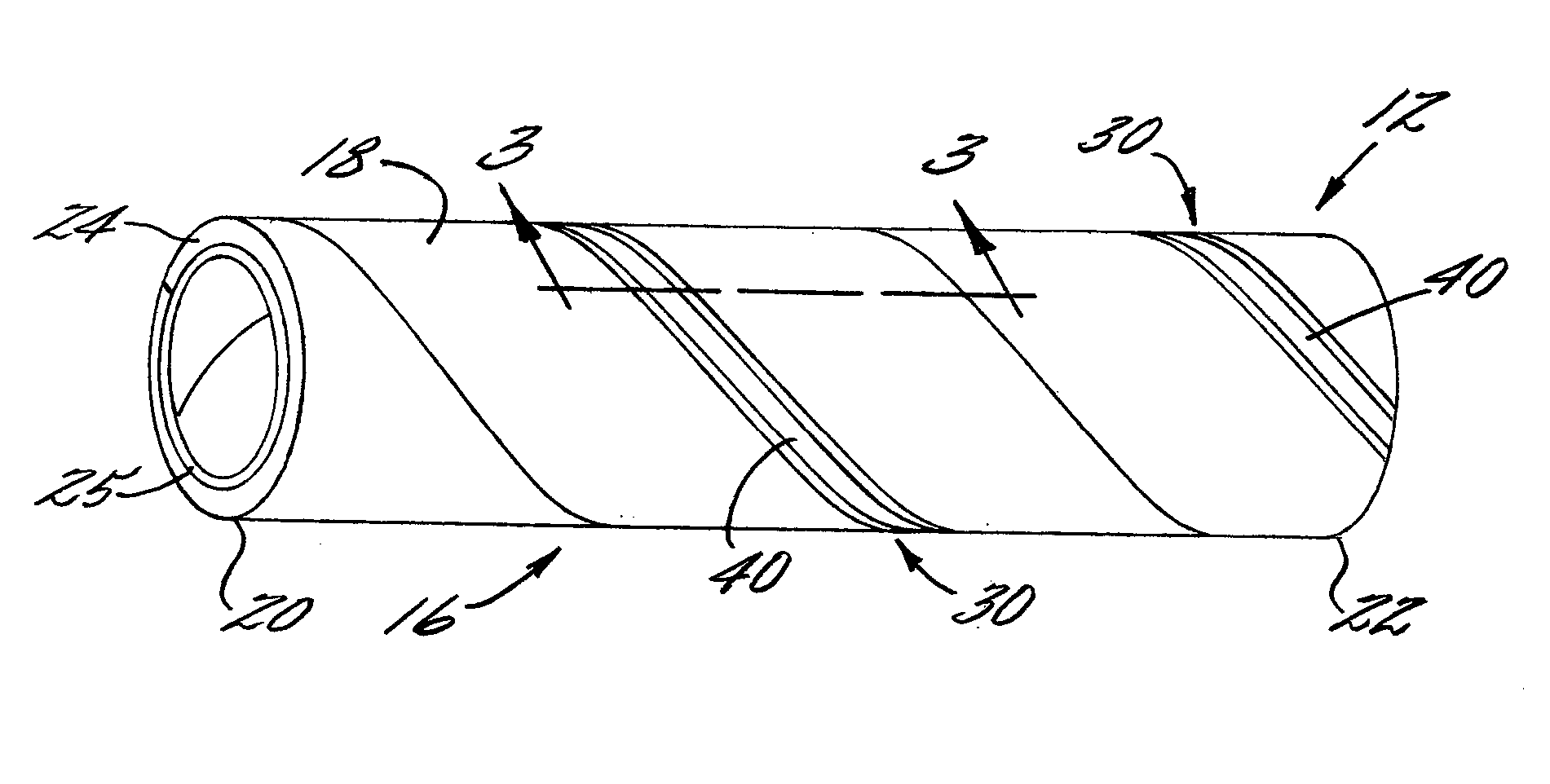

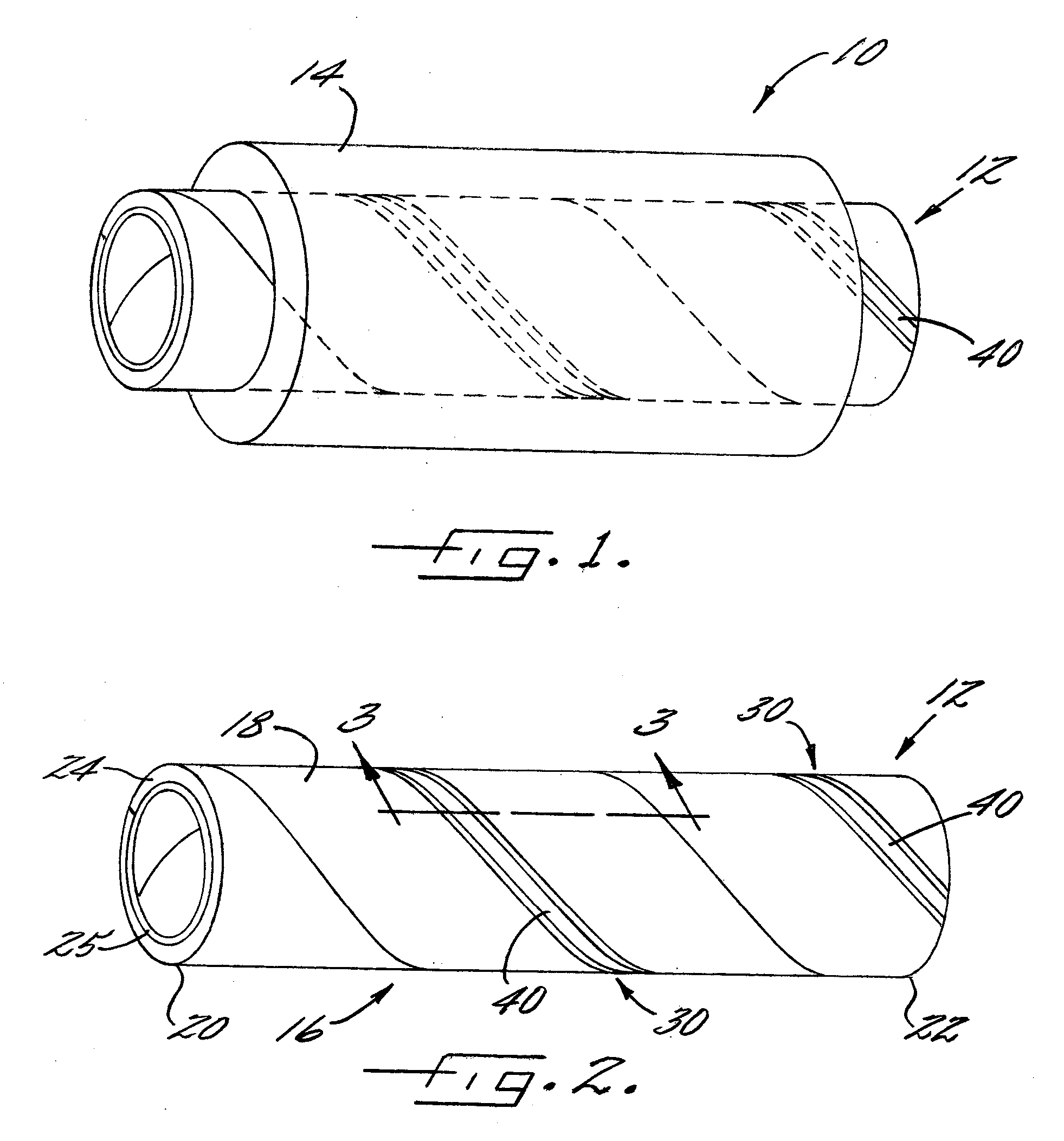

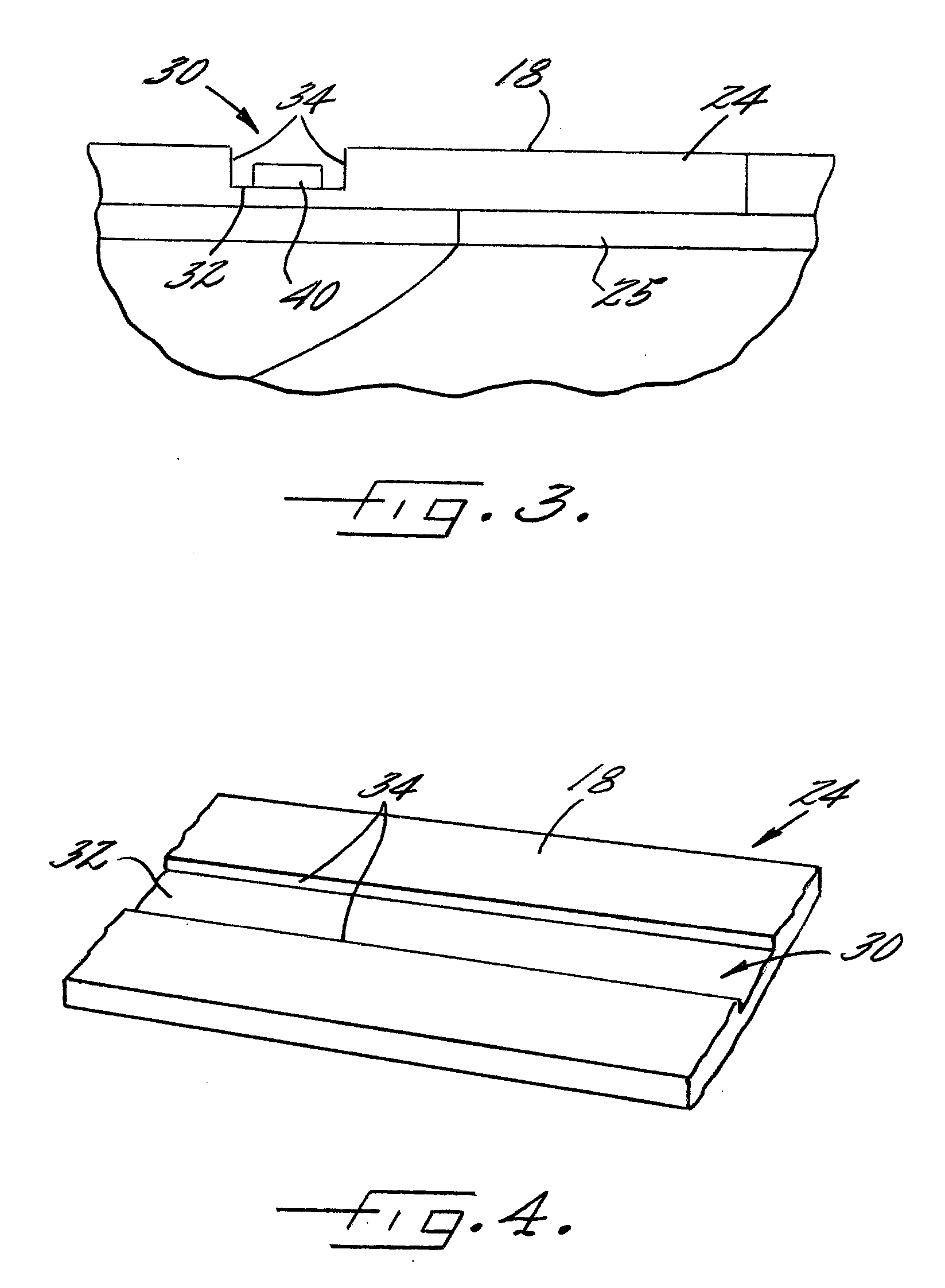

Inactive | US20070012815A1 | Feature valid | Cost effective | Paper/cardboard wound articles | Engineering | Mechanical engineering

A spirally wound tube for wrapping textiles or other materials thereon. The tube has an identification feature. The tube is made by spirally winding a number of plies together. The outermost ply defines a groove that substantially extends spirally along the length of the tube. The groove is for containing an identification marking for identifying the textile or other material wrapped onto the tube. In particular, the tube may include an identification stripe that extends along the groove. The identification stripe contains a marking system or identification markings to indicate the type or nature of the textile material wrapped on the tube. The marking system may include the color or colors of the stripe, or patterns, codes, readable indicia, or any combination of markings on the stripe.

Owner:SONOCO DEV INC

Method for sorting radar two-dimensional images based on multi-dimension geometric analysis

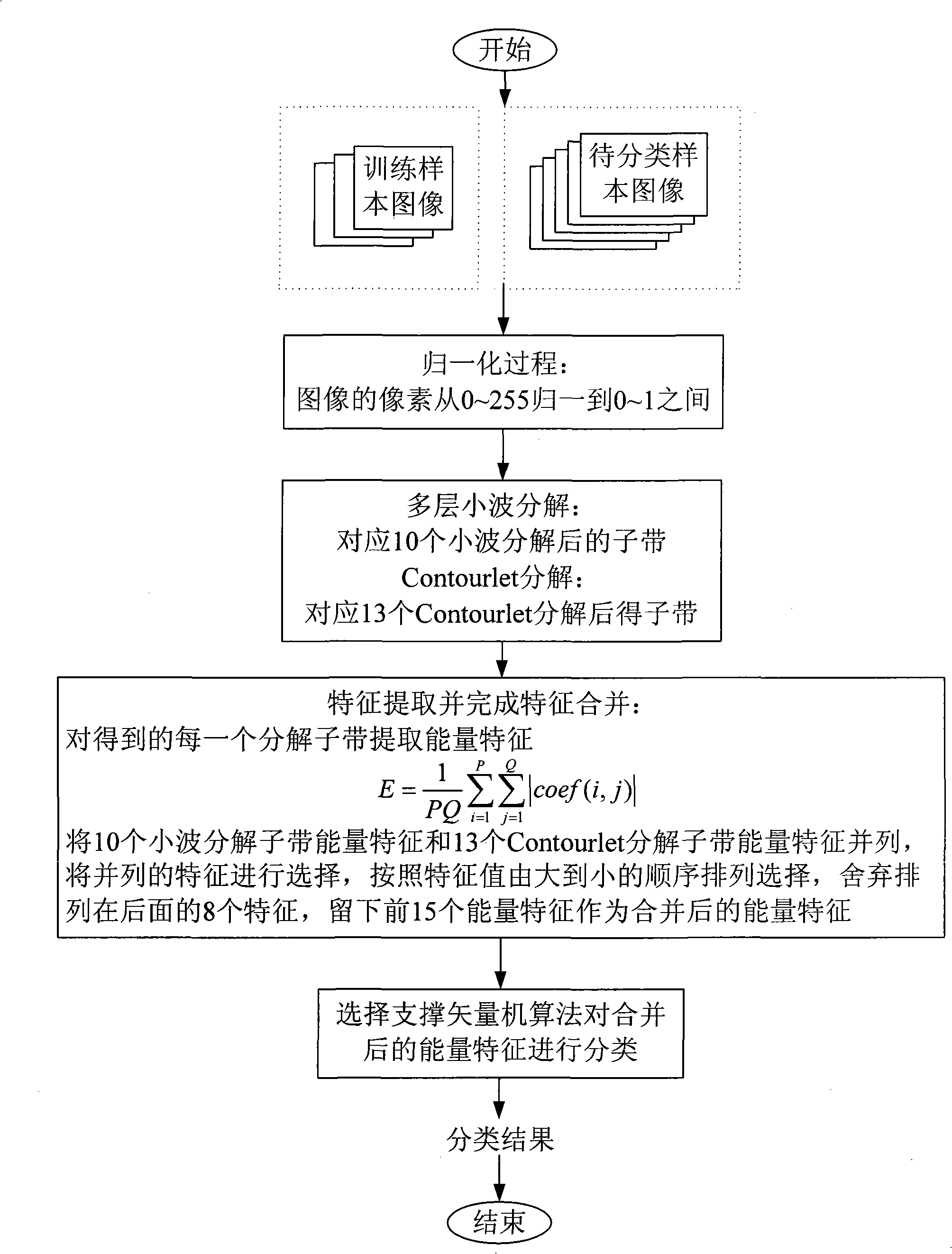

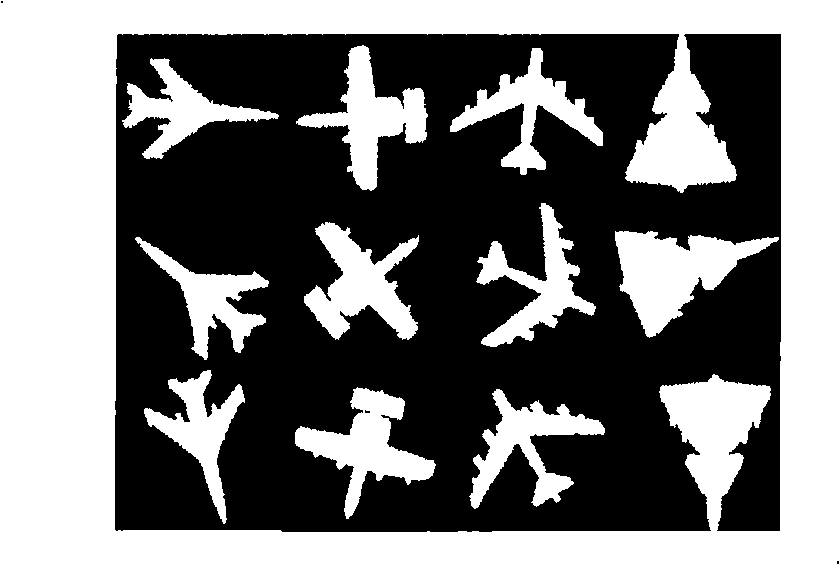

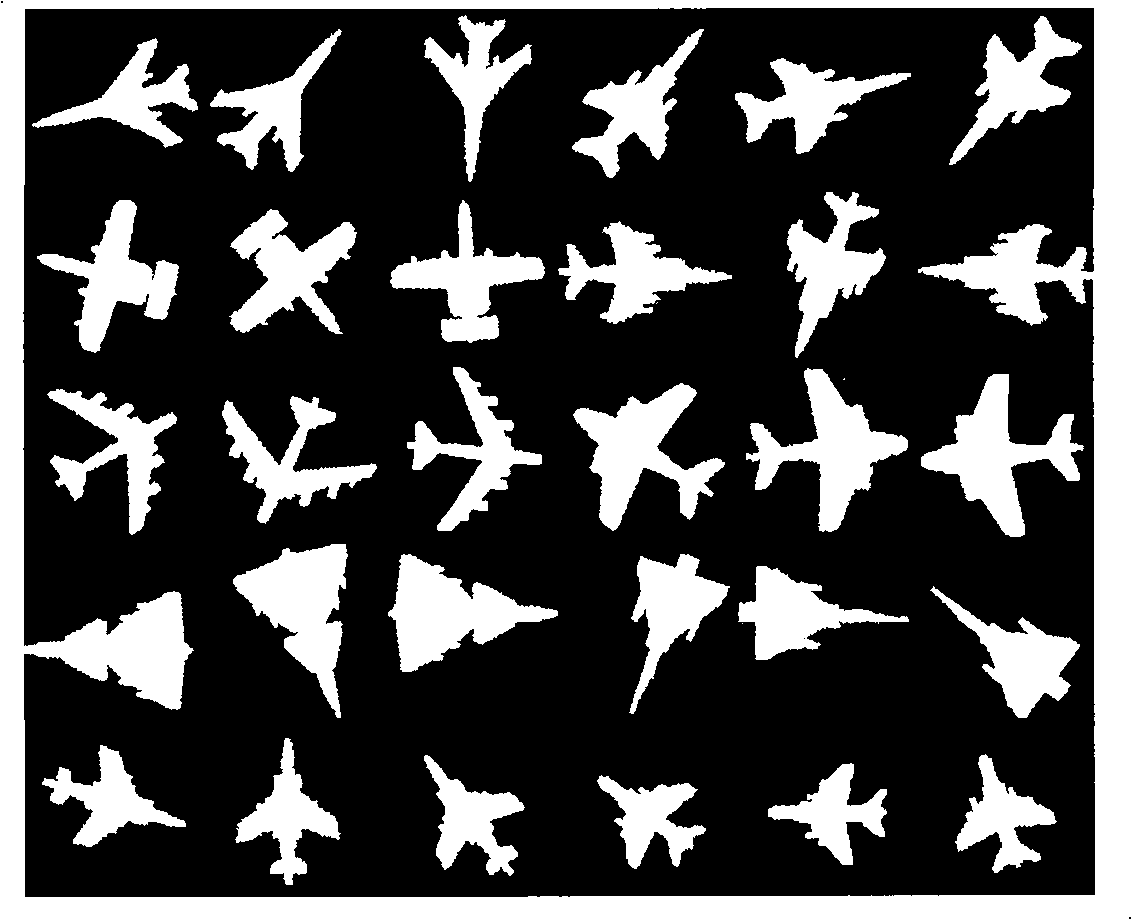

Active | CN101408945A | Feature valid | Energy signature valid | Character and pattern recognition | Radio wave reradiation/reflection | Decomposition | Multiscale geometric analysis

The invention discloses a radar two-dimensional image classifying method based on multi-dimension geometric analysis, belongs to the technical field of image processing, and mainly overcomes the defect that existing methods cannot effectively express a radar two-dimensional image. The method comprises the following steps: firstly, a sample image set is input, and each image in the sample image set is normalized; secondly, three-layer wavelet decomposition and Contourlet decomposition are carried out on each normalized sample image to obtain ten wavelet decomposition subbands and thirteen Contourlet decomposition subbands corresponding to the sample image; thirdly, energy features are extracted from the obtained decomposition subbands and merged by a feature merging method; and fourthly, the merged features are classified by a support vector machine (SVM) algorithm. The invention has a better classification accuracy rate and lower complexity, and can be used for classification of radar two-dimensional images and texture images as well as identification of bridge targets in SAR images.

Owner:探知图灵科技(西安)有限公司

Vehicle model audio feature extracting method based on LMD (local mean decomposition) and energy projection methods

Active | CN104637481A | Enhanced characteristic frequency components | Feature valid | Speech recognition | Feature Dimension | Algorithm

The invention provides a vehicle model audio feature extracting method based on LMD (local mean decomposition) and energy projection methods, and relates to the field of intelligent traffic recognition. Vehicle model audio signals are decomposed by adopting a self-adapting LMD method, then, a new PF component is reconstituted by a relevant weighted analysis method, and the feature frequency component is enhanced by the PF component subjected to weighted optimization, so that the vehicle model feature is more effective, and further, the classification accuracy is improved. The vehicle model audio feature extracting method has the advantages that the energy distribution condition of the vehicle model information can be analyzed and reflected in the energy accumulation feature frequency band, the energy of signals X(t) is projected into several respective frequency bands through molecular frequency band division, the calculation quantity is reduced, the feature dimension is reduced, and the real-time performance of an algorithm is improved.

Owner:沈阳易达讯通科技有限公司

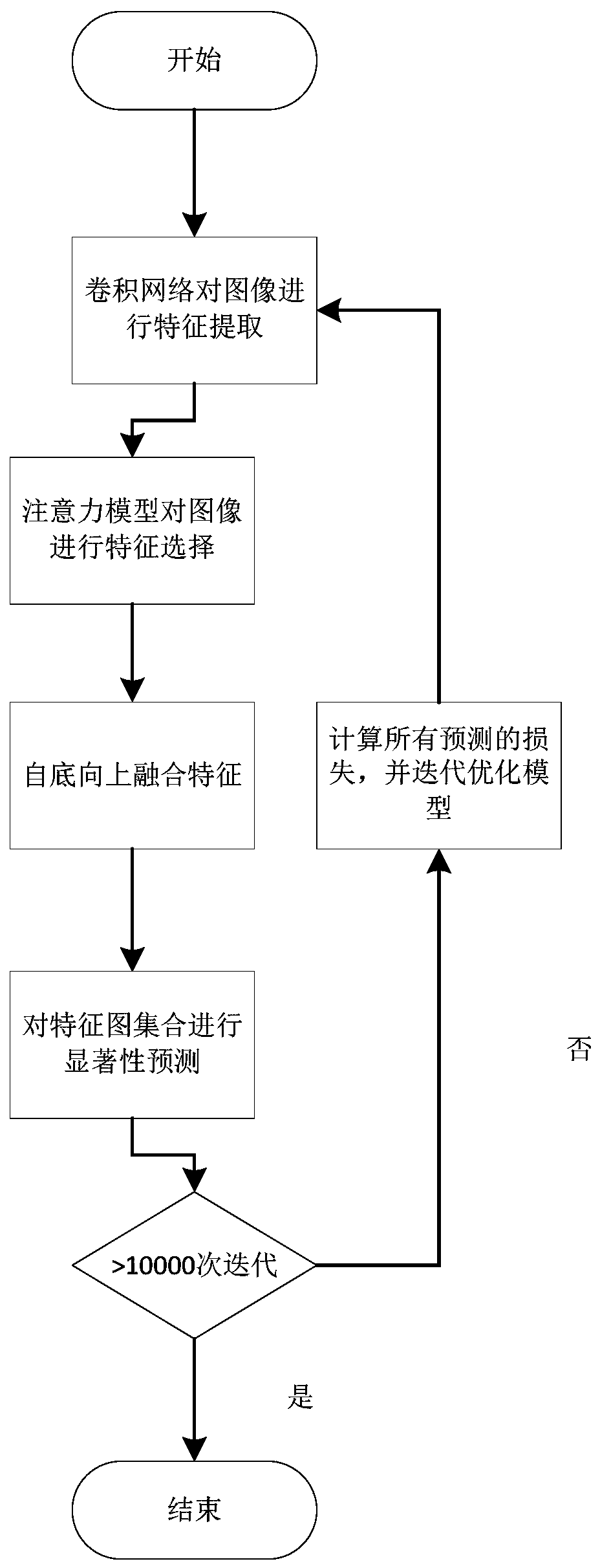

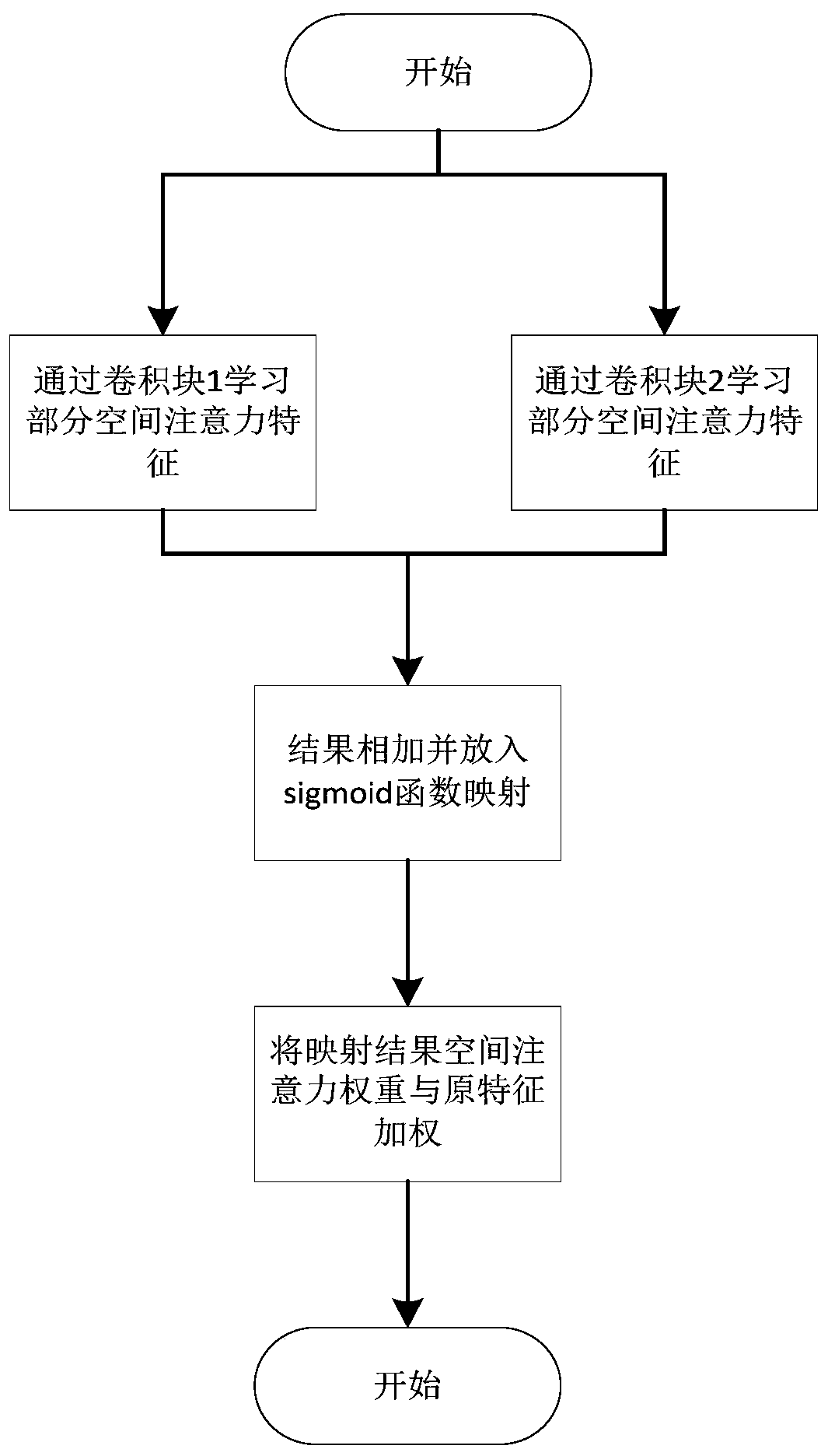

Image saliency detection method based on feature selection and feature fusion

Active | CN111275076A | Improve features | Feature valid | Character and pattern recognition | Neural architectures | Attention model | Model network

The invention discloses an image saliency detection method based on feature selection and feature fusion. The method comprises the following steps: carrying out feature extraction on an input image, and adding the features to a feature pyramid set; performing feature selection on the feature pyramid set to obtain a new feature pyramid set; performing feature fusion on the features in the new feature pyramid set from bottom to top to obtain a mixed feature pyramid set; and training the saliency prediction network model by using the features in the mixed feature pyramid set, and performing saliency detection on a to-be-detected image by using the trained model. Feature selection is carried out on the features of an image by using an attention model, which enhances the features related to the image target so that the features are more effective. A bottom-up feature fusion structure effectively fuses the detail features of the bottom layers with the semantic features of the high layers, so that the characterization capability of the features is greatly improved and the detection accuracy is higher than that of a common saliency model network.

Owner:NANJING UNIV OF SCI & TECH

Textile carrier having identification feature and method for manufacturing the same

Inactive | US7562841B2 | Cost effective | Feature valid | Paper/cardboard wound articles | Engineering | Mechanical engineering

A spirally wound tube for wrapping textiles or other materials thereon. The tube has an identification feature. The tube is made by spirally winding a number of plies together. The outermost ply defines a groove that substantially extends spirally along the length of the tube. The groove is for containing an identification marking for identifying the textile or other material wrapped onto the tube. In particular, the tube may include an identification stripe that extends along the groove. The identification stripe contains a marking system or identification markings to indicate the type or nature of the textile material wrapped on the tube. The marking system may include the color or colors of the stripe, or patterns, codes, readable indicia, or any combination of markings on the stripe.

Owner:SONOCO DEV INC

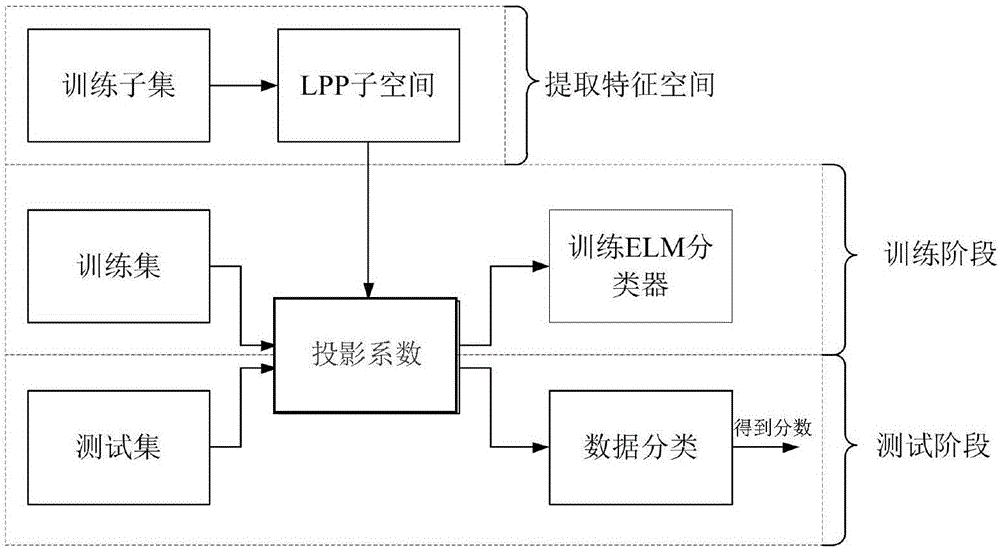

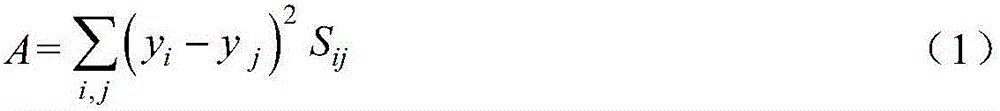

LPP-ELM based objective stereoscopic image quality evaluation method

Inactive | CN106384364A | Reduce image dimensionality | Feature valid | Image enhancement | Image analysis | Test sample | Algorithm

The invention discloses an LPP-ELM based objective stereoscopic image quality evaluation method. The method comprises the steps that (1) a training sample and a test sample are selected; (2) an LPP algorithm is used to carry out feature extraction and dimension reduction on the training and test samples; and (3) an ELM is used to classify the training sample. Compared with the prior art, the method improves the accuracy of an identification system due to use of the LPP-ELM algorithm, manual intervention is little, and effective approaches are provided for real-time environment and system popularization of objective stereoscopic image quality evaluation; and compared with PCA-ELM and PCA-SVM, LPP-ELM is higher in the whole performance of objective stereoscopic image quality evaluation, and is feasible in practicality.

Owner:TIANJIN UNIV

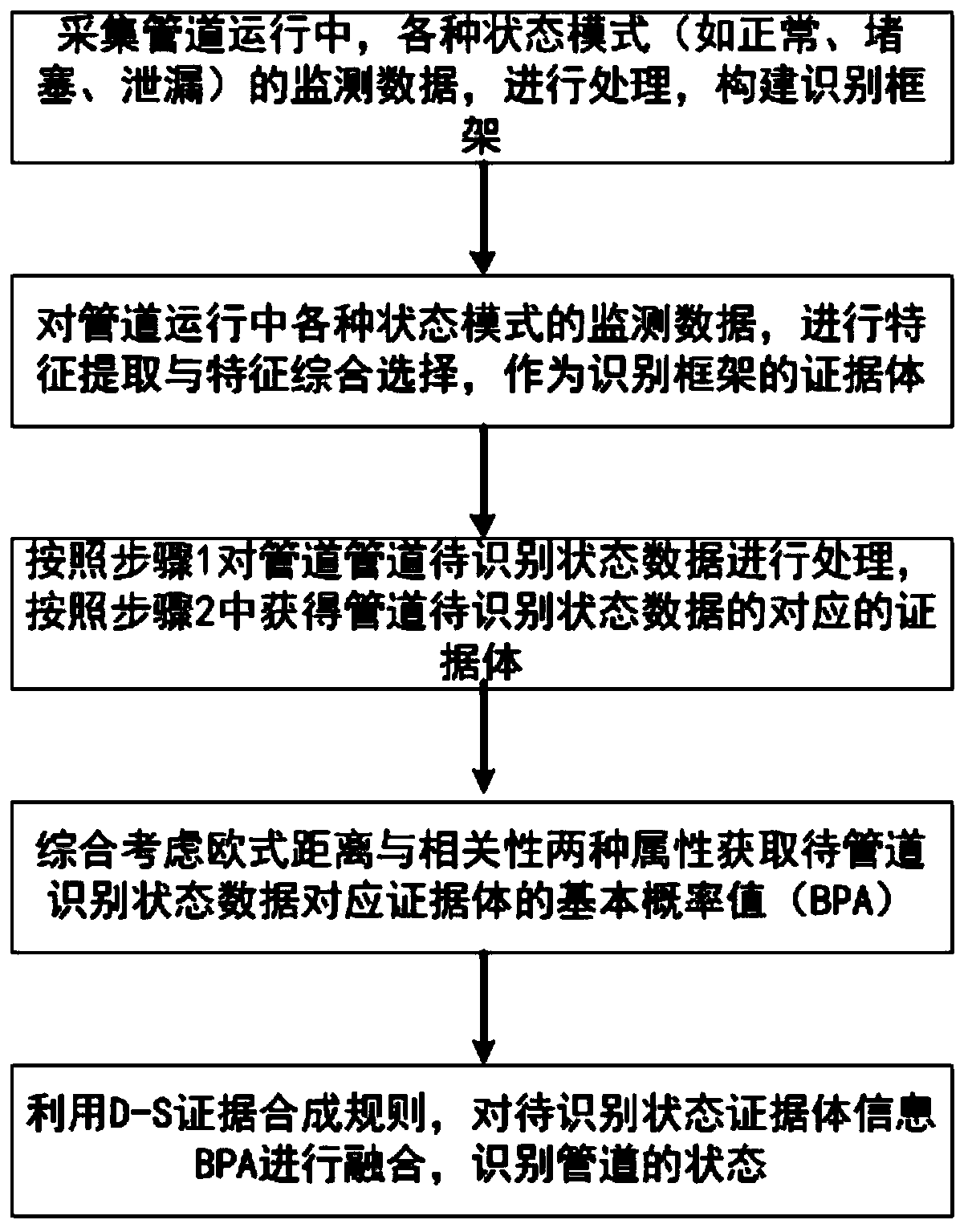

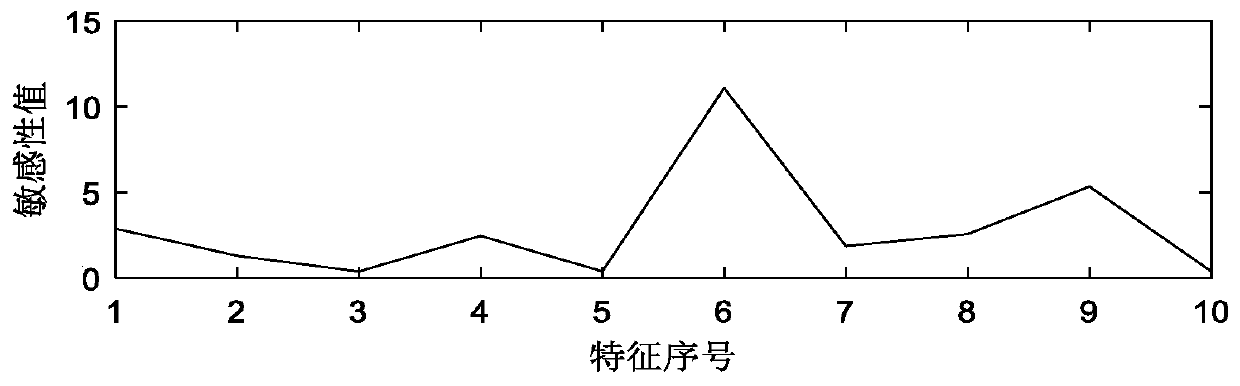

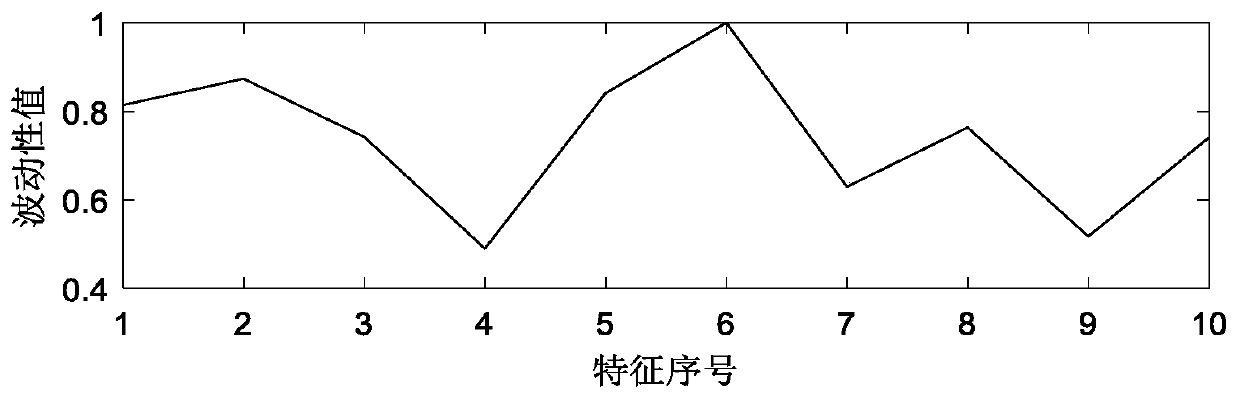

Pipeline state recognition method based on monitoring data multi-attribute feature fusion

Active | CN110046651A | Achieve effective characterization | The basic probability assignment is accurate | Character and pattern recognition | Pattern recognition | Acquired characteristic

The invention discloses a pipeline state recognition method based on monitoring data multi-attribute feature fusion. The method comprises the steps of: building a state recognition framework based on pipeline state monitoring data, the framework comprising normal, blocked and leaked state modes; extracting the data features of the pipeline operation state based on the state modes contained in the framework; selecting the data features by adopting an evaluation index of comprehensive sensitivity and volatility, and taking the obtained features as evidence bodies of the state recognition framework; processing the to-be-recognized state data of the pipeline to obtain its corresponding evidence bodies and their basic probability values; and using the D-S evidence theory synthesis rule to perform fusion analysis on all evidence information of the current state, determining which type in the recognition framework the current state belongs to, and thereby identifying and judging the pipeline operation state. The invention provides a pipeline operation state identification technology with guiding significance, which is of great significance for improving the accuracy with which technical personnel decide and judge the pipeline operation state.

Owner:XI AN JIAOTONG UNIV
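
The fusion step in the abstract above uses the D-S (Dempster-Shafer) synthesis rule. The sketch below shows Dempster's rule of combination for two evidence bodies over the frame {normal, blocked, leaked} named in the abstract. The function name `combine`, the dictionary representation of a basic probability assignment, and the numeric masses are illustrative assumptions; they are not values or code from the patent.

```python
from itertools import product
from typing import Dict, FrozenSet

# A basic probability assignment maps subsets of the frame to masses summing to 1.
Mass = Dict[FrozenSet[str], float]


def combine(m1: Mass, m2: Mass) -> Mass:
    """Fuse two basic probability assignments with Dempster's rule of combination."""
    fused: Dict[FrozenSet[str], float] = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            # Agreeing evidence supports the intersection of the two hypotheses.
            fused[intersection] = fused.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Disjoint hypotheses contribute to the conflict mass K.
            conflict += mass_a * mass_b
    if 1.0 - conflict < 1e-12:
        raise ValueError("evidence bodies are in total conflict and cannot be combined")
    # Normalize by 1 - K so the fused masses sum to 1 again.
    return {subset: mass / (1.0 - conflict) for subset, mass in fused.items()}


# Hypothetical evidence from two features; FRAME expresses "state unknown".
NORMAL, BLOCKED, LEAKED = frozenset({"normal"}), frozenset({"blocked"}), frozenset({"leaked"})
FRAME = NORMAL | BLOCKED | LEAKED
evidence_1 = {NORMAL: 0.6, BLOCKED: 0.3, FRAME: 0.1}
evidence_2 = {NORMAL: 0.7, LEAKED: 0.2, FRAME: 0.1}

fused = combine(evidence_1, evidence_2)
decision = max(fused, key=fused.get)  # hypothesis with the largest fused mass
```

With more than two evidence bodies, the same function can be applied repeatedly, since Dempster's rule is associative and commutative.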

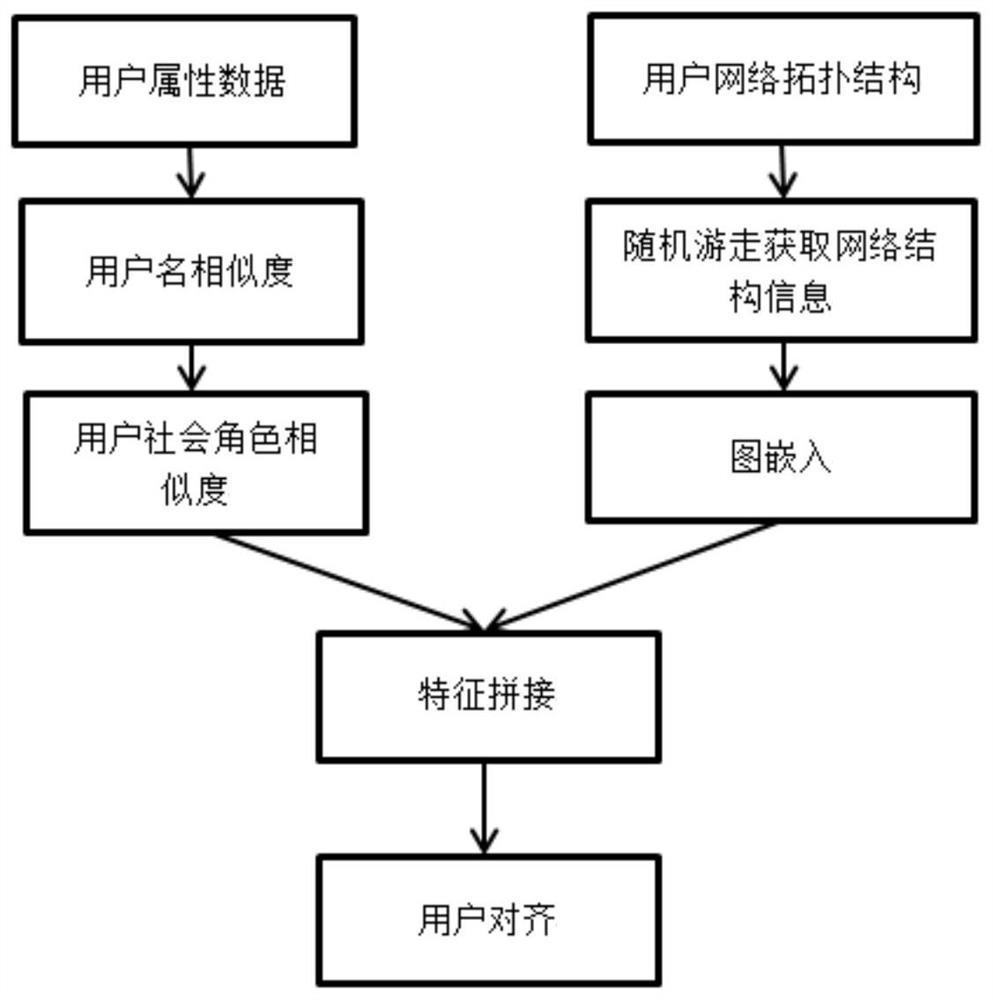

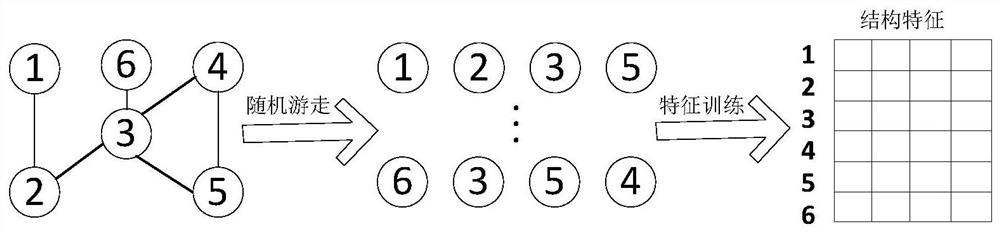

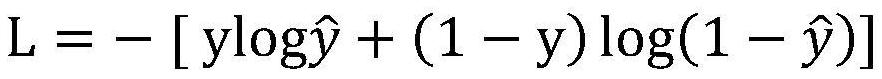

Multi-source heterogeneous network user alignment method based on graph embedding

Active | CN112084373A | Easy access | Feature valid | Data processing applications | Natural language data processing | Graph based | Heterogeneous network

The invention discloses a multi-source heterogeneous network user alignment method based on graph embedding. The method comprises the following steps: 1) calculating the similarity of user attributes through the user name and social role; 2) obtaining a node sequence of the heterogeneous network through a random walk algorithm, and analyzing the mutual relation between nodes; 3) processing the node sequence with an embedding algorithm to obtain an embedded representation of the network; and 4) training a multi-layer neural network to align the users according to the attribute similarity and the structural features of the users. The method can be used for user alignment in online social networks and has important applications in fields such as recommendation systems and user profile completion. The algorithm has low computational complexity, can quickly align the same user across networks, and is highly applicable to real data.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT
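Step 2 of the user alignment abstract above generates node sequences by random walk. A minimal sketch of that idea follows, assuming uniform transition probabilities and a toy graph of users (`u*`) and posts (`p*`). The abstract does not specify the walk strategy, so the function name, parameters and graph are all illustrative.

```python
import random


def random_walks(adjacency, walk_length, walks_per_node, seed=0):
    """Generate uniform random walks starting from every node."""
    rng = random.Random(seed)
    walks = []
    for start in adjacency:
        for _ in range(walks_per_node):
            walk = [start]
            while len(walk) < walk_length:
                neighbours = adjacency[walk[-1]]
                if not neighbours:
                    break  # dead end: stop this walk early
                walk.append(rng.choice(neighbours))
            walks.append(walk)
    return walks


# Toy heterogeneous network (assumed): two users and two posts.
graph = {
    "u1": ["p1", "u2"],
    "u2": ["u1", "p2"],
    "p1": ["u1"],
    "p2": ["u2"],
}
walks = random_walks(graph, walk_length=5, walks_per_node=2, seed=42)
for w in walks[:3]:
    print(" -> ".join(w))
```

The resulting sequences play the role of "sentences" that a skip-gram style embedding algorithm could consume in step 3.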

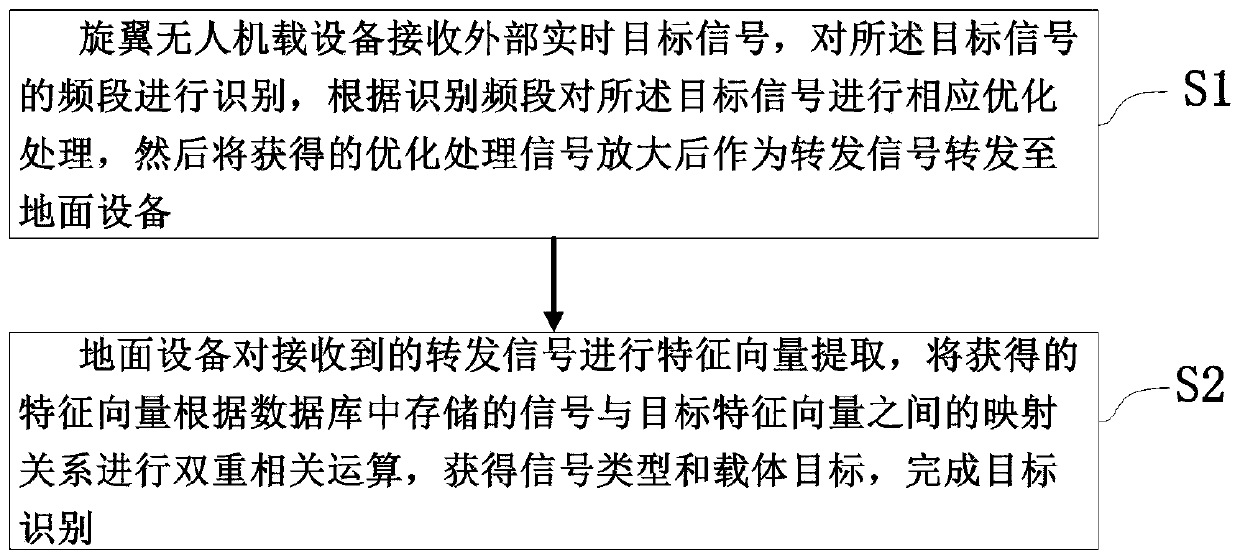

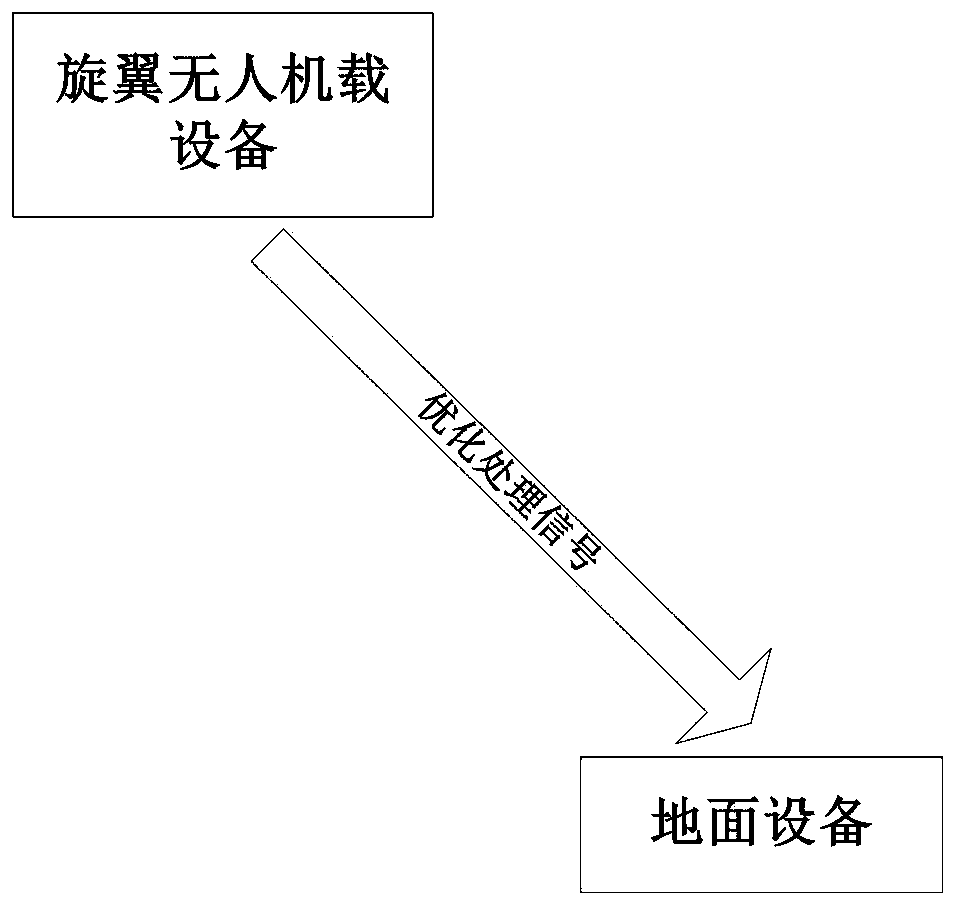

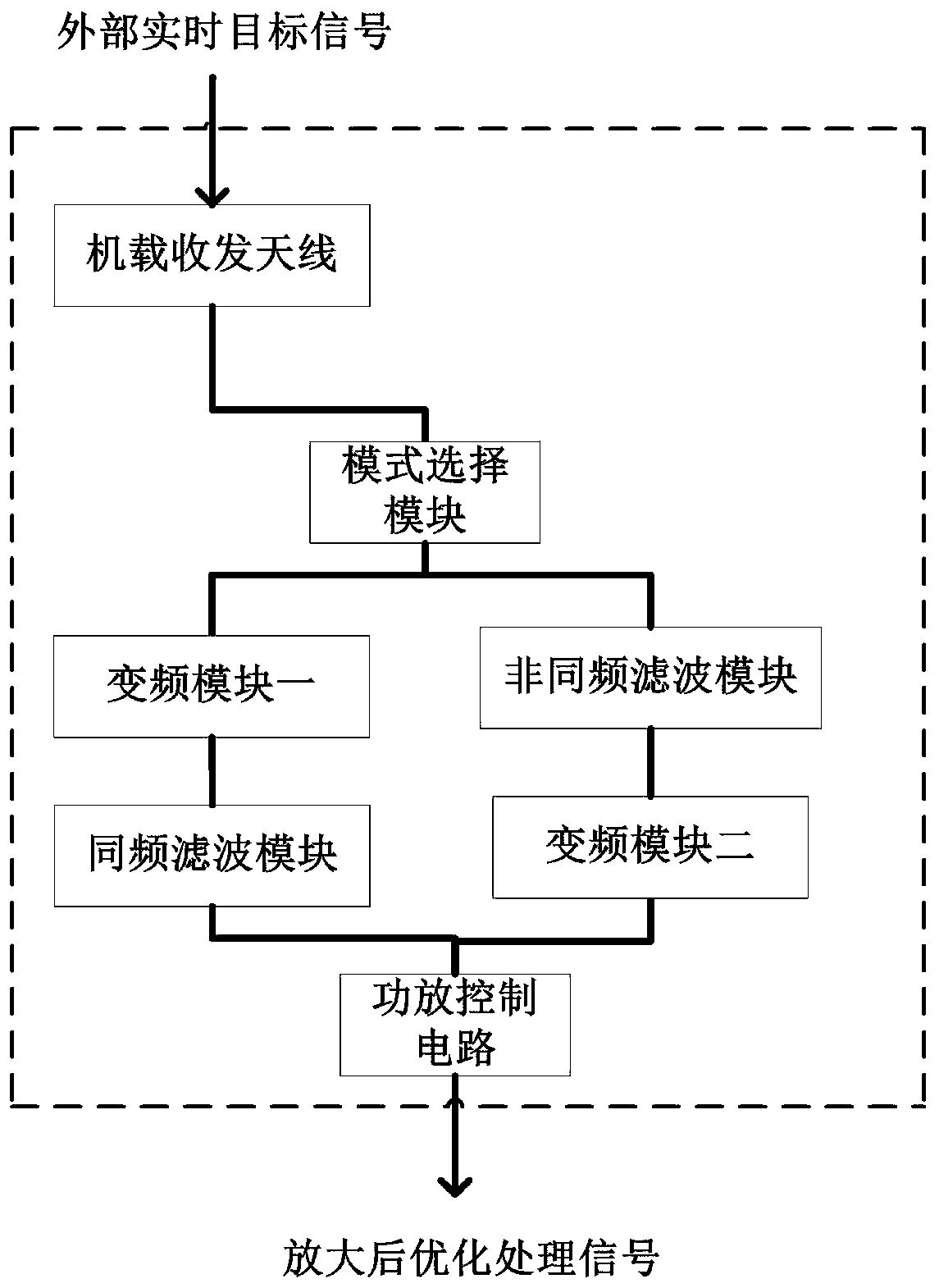

Target signal identification method based on rotor unmanned aerial vehicle-mounted equipment and ground equipment

ActiveCN110401479AAccurate target recognition resultsImprove stabilityRadio transmissionReturn timeFrequency band

The invention relates to a target signal identification method based on rotor unmanned aerial vehicle-mounted equipment and ground equipment, belongs to the technical field of communication reconnaissance, and solves the problems that air communication reconnaissance equipment in the prior art is not suitable for long-time reconnaissance and has a relatively long signal return time, while ground communication reconnaissance equipment has a short reconnaissance distance and a long signal processing time. The method comprises the following steps: the rotor unmanned aerial vehicle equipment receives an external real-time target signal, identifies the frequency band of the target signal, optimizes the target signal according to the identified frequency band, amplifies the optimized signal, and forwards the amplified signal to the ground equipment; the ground equipment extracts a feature vector from the forwarded signal, performs a dual correlation operation on the feature vector according to the mapping relationship, stored in a database, between signals and target feature vectors, and thereby obtains the signal type and carrier target to complete target signal identification. The method applies a dual correlation operation identification technology and offers high, reliable target identification precision.

Owner:36TH RES INST OF CETC

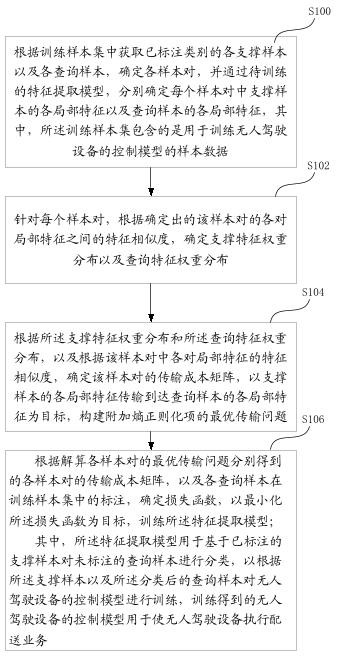

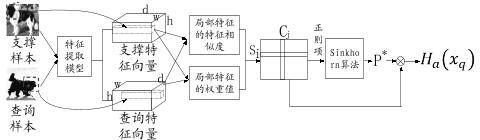

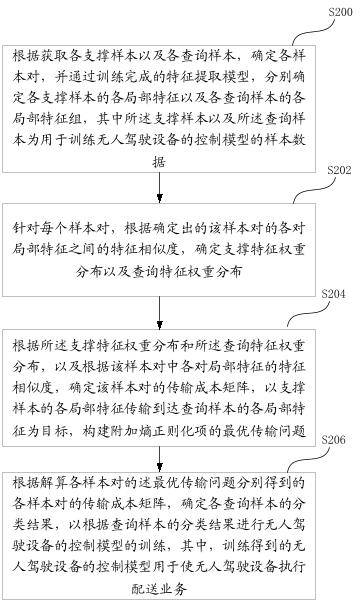

Training model and small sample classification method and device

ActiveCN112598091AFeature validImprove accuracyCharacter and pattern recognitionMachine learningCost matrix

The invention discloses a training model and small sample classification method and device. The similarity of local features between different samples is calculated, so that the subsequently determined weight distribution better reflects the importance of symbiotic information between the samples. When the optimal transmission problem is constructed, it is solved by adding an entropy regularization term, so a transmission cost matrix can be obtained through iterative optimization. Finally, a loss function is determined based on the transmission cost matrix of each sample pair and the annotation of each query sample in the training sample set, so that the feature extraction model learns to extract more effective features for classifying the query samples. The training model therefore does not learn the transmission cost matrix used for weighting but computes it for each sample pair, so the embodiment provided by the specification is not limited by a learned general weighting matrix and can adapt to samples from different types of scenes.

Owner:BEIJING SANKUAI ONLINE TECH CO LTD +1
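The small sample classification abstract above solves an optimal transmission (optimal transport) problem with an entropy regularization term by iterative optimization, without naming a solver. One common choice for that setting is the Sinkhorn iteration, sketched below purely as an illustration. The cost matrix, marginals and `eps` are assumed values, not taken from the patent.

```python
import math


def sinkhorn(cost, a, b, eps=0.5, n_iters=200):
    """Entropy-regularised optimal transport plan via Sinkhorn scaling."""
    n, m = len(a), len(b)
    # Gibbs kernel: a small eps gives a sharper plan but slower convergence.
    K = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the two marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]


# Assumed toy problem: two local features per sample, hand-picked weights.
cost = [[0.0, 1.0], [1.0, 0.0]]
a = [0.5, 0.5]    # weight distribution of the first sample
b = [0.25, 0.75]  # weight distribution of the second sample
plan = sinkhorn(cost, a, b)
transport_cost = sum(cost[i][j] * plan[i][j] for i in range(2) for j in range(2))
print(plan, transport_cost)
```

The rows of `plan` sum to `a` and the columns to `b`; the scalar `transport_cost` is the kind of per-pair quantity a loss function could be built on.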

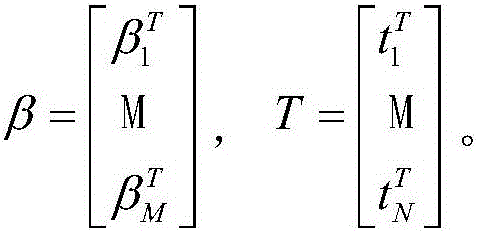

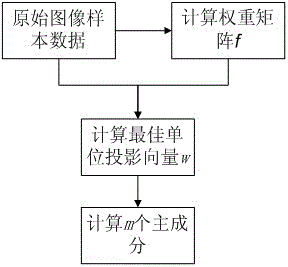

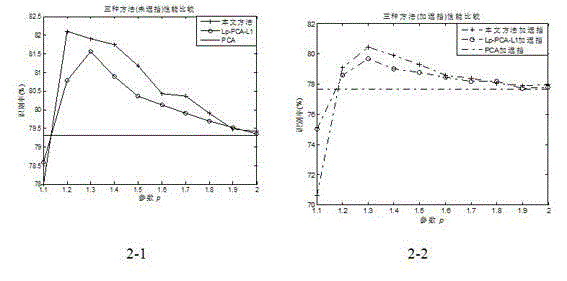

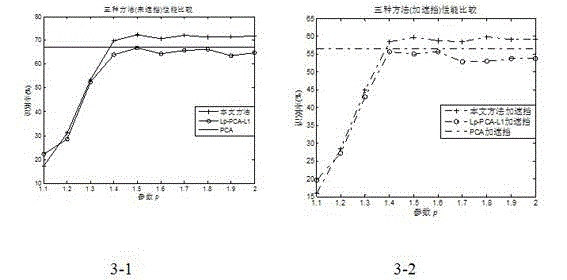

Lp norm-based sample couple-weighting facial feature extraction method

InactiveCN103150570AAvoid mean effectsReduce sensitivityCharacter and pattern recognitionLocal optimumFeature extraction

An Lp norm-based sample couple-weighting facial feature extraction method belongs to the feature extraction methods of pattern recognition. The method includes the following steps: (1) n facial images of size M multiplied by N are each represented as a column vector Xi of dimensionality d, and the column vectors are assembled into a sample matrix; (2) different functions are adopted as weighting functions for facial sample couples of the same kind and facial sample couples of different kinds; (3) a sample couple-weighting optimization model with Lp norm constraints is created, and an iterative optimization algorithm is used to obtain a locally optimal unit projection vector w; and (4) a greedy algorithm is used to reduce the initial d dimensions of the facial image features to m dimensions, thereby achieving dimensionality reduction and the extraction of effective features. The method can flexibly carry out feature extraction on different types of data sets, is less sensitive to abnormal values, and adapts better to the complexity of facial images; since sample couples are weighted, the influence of the sample mean is avoided and the extracted features are more effective. Under blocking (occlusion) conditions, the performance of the method is 2 to 5 percent higher than that of PCA (principal component analysis) and Lp-PCA-L1.

Owner:CHINA UNIV OF MINING & TECH

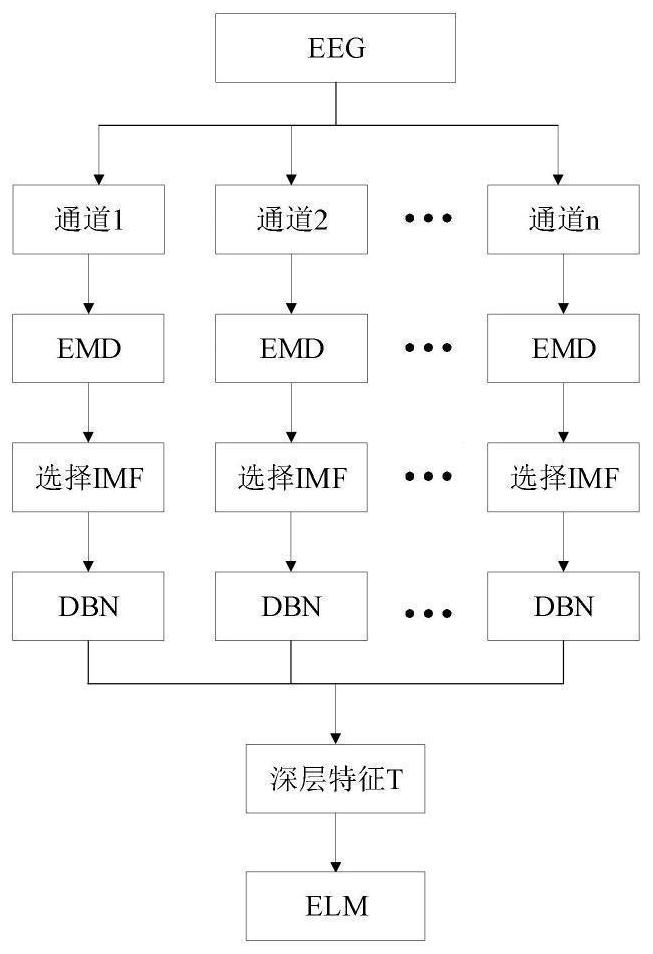

Electroencephalogram emotion recognition method based on DEDBN-ELM

PendingCN112508088AFine divisionRealize automatic extractionCharacter and pattern recognitionNeural architecturesLearning machineData set

The invention relates to an electroencephalogram emotion recognition method based on DEDBN-ELM, and belongs to the field of human-computer interaction. The method comprises the steps of: S1, decomposing the electroencephalogram signals of all channels through EMD to obtain a series of IMF components; S2, selecting IMF components according to the variance contribution rate; S3, constructing a DBN network for each electroencephalogram channel to extract features from the selected IMF components, obtaining the deep features of each channel; and S4, taking the deep features of the plurality of channels as the input of an extreme learning machine (ELM), and carrying out feature learning and classification. The method ensures a high recognition rate and good robustness when feature extraction and classification are carried out on the positive, negative and neutral emotion states in the electroencephalogram data set.

Owner:重庆邮智机器人研究院有限公司 +1
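Step S2 of the electroencephalogram abstract above selects IMF components by variance contribution rate. The sketch below shows one plausible reading: each component's variance divided by the summed variance of all components, with components kept when their share reaches a threshold. The threshold rule, the value 0.1 and the toy components are assumptions; the abstract does not state the selection criterion.

```python
from statistics import pvariance


def variance_contribution(imfs):
    """Share of the total variance carried by each IMF component."""
    variances = [pvariance(component) for component in imfs]
    total = sum(variances)
    if total == 0:
        raise ValueError("all components are constant")
    return [v / total for v in variances]


def select_imfs(imfs, threshold=0.1):
    """Indices of IMFs whose contribution rate reaches the threshold."""
    rates = variance_contribution(imfs)
    return [i for i, rate in enumerate(rates) if rate >= threshold]


# Stand-in "IMFs" (assumed values, not real EMD output).
imfs = [
    [2.0, -2.0, 2.0, -2.0],
    [1.0, -1.0, 1.0, -1.0],
    [0.1, -0.1, 0.1, -0.1],
]
rates = variance_contribution(imfs)
selected = select_imfs(imfs, threshold=0.1)
print([round(r, 3) for r in rates], selected)
```

In a real pipeline the input would come from an EMD implementation run per channel; only the selection logic is shown here.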

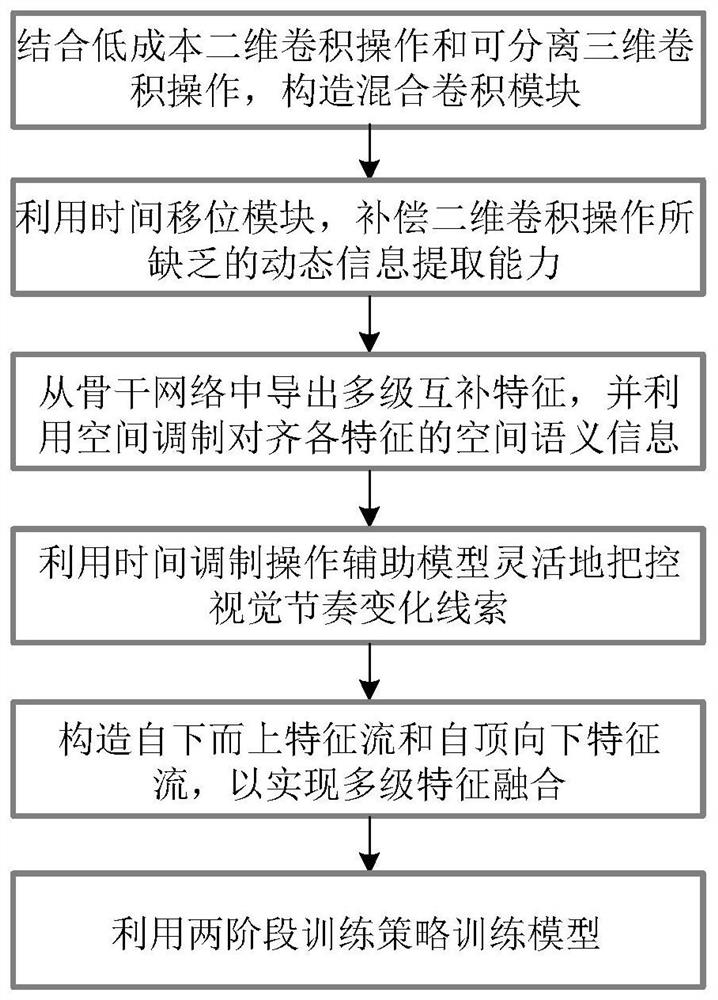

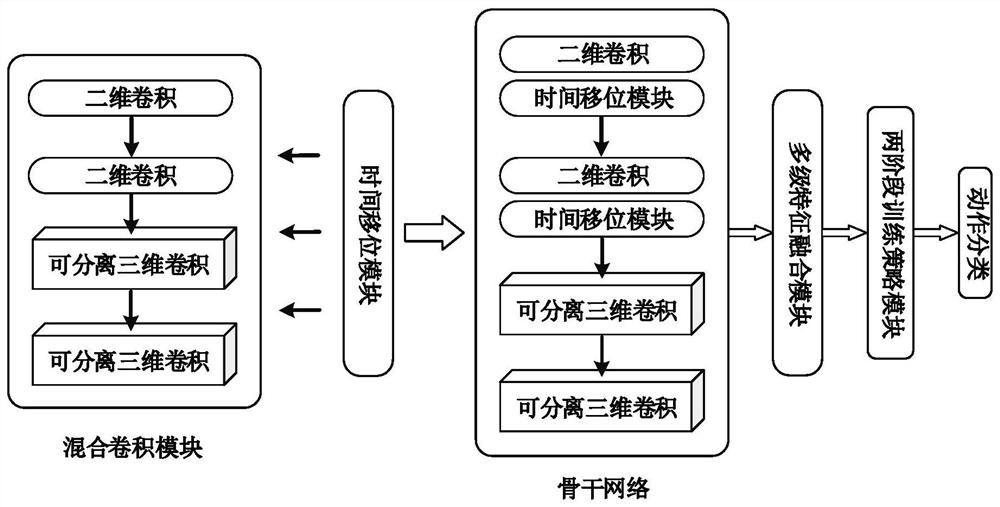

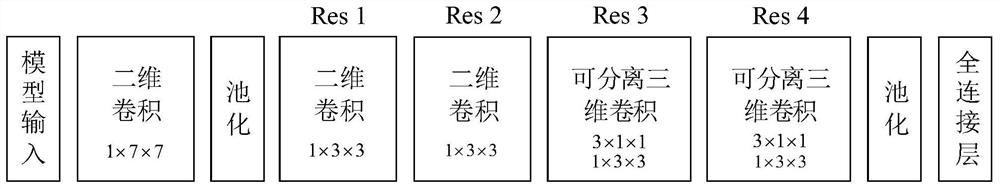

Video action recognition method and system of multi-level feature fusion model based on hybrid convolution

ActiveCN113128395ACompensation for dynamic feature extraction capabilitiesEfficient integrationCharacter and pattern recognitionNeural architecturesVisual technologyEngineering

The invention relates to a video action recognition method and system of a multi-level feature fusion model based on hybrid convolution, and belongs to the technical field of computer vision. The method comprises the steps of: constructing a hybrid convolution module from two-dimensional convolution and separable three-dimensional convolution; performing a channel shift operation on each input feature along the time dimension to construct a time shift module, which promotes information flow between adjacent frames and compensates for the weakness of two-dimensional convolution in capturing dynamic features; exporting multi-level complementary features from different convolutional layers of the backbone network, and performing spatial modulation and time modulation on them, so that the features of each level carry consistent semantic information in the spatial dimension and variable visual rhythm cues in the time dimension; constructing bottom-up and top-down feature flows so that the features supplement each other, and processing the feature flows in parallel to realize multi-level feature fusion; and carrying out model training with a two-stage training strategy.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
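The time shift module in the video action recognition abstract above shifts channels along the time dimension so that adjacent frames exchange information. A minimal sketch of such a shift follows, assuming the common scheme in which one group of channels is taken from the previous frame, a second group from the next frame, and the rest stay in place, with zero padding at the sequence ends. The group size `fold` and the data layout are illustrative assumptions, not details from the patent.

```python
def temporal_shift(frames, fold):
    """Shift channel groups along time; frames[t][c] is channel c at time t."""
    T = len(frames)
    C = len(frames[0])
    out = [[0.0] * C for _ in range(T)]  # zeros act as padding at the ends
    for t in range(T):
        for c in range(C):
            if c < fold:
                src = t - 1  # first group: take the value from the previous frame
            elif c < 2 * fold:
                src = t + 1  # second group: take the value from the next frame
            else:
                src = t      # remaining channels are left unshifted
            if 0 <= src < T:
                out[t][c] = frames[src][c]
    return out


# Three frames with three channels each (assumed toy values).
frames = [
    [1, 10, 100],
    [2, 20, 200],
    [3, 30, 300],
]
shifted = temporal_shift(frames, fold=1)
for row in shifted:
    print(row)
```

After the shift, a purely two-dimensional convolution applied per frame already sees values from its temporal neighbours, which is the intuition behind using the shift to support dynamic feature capture.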

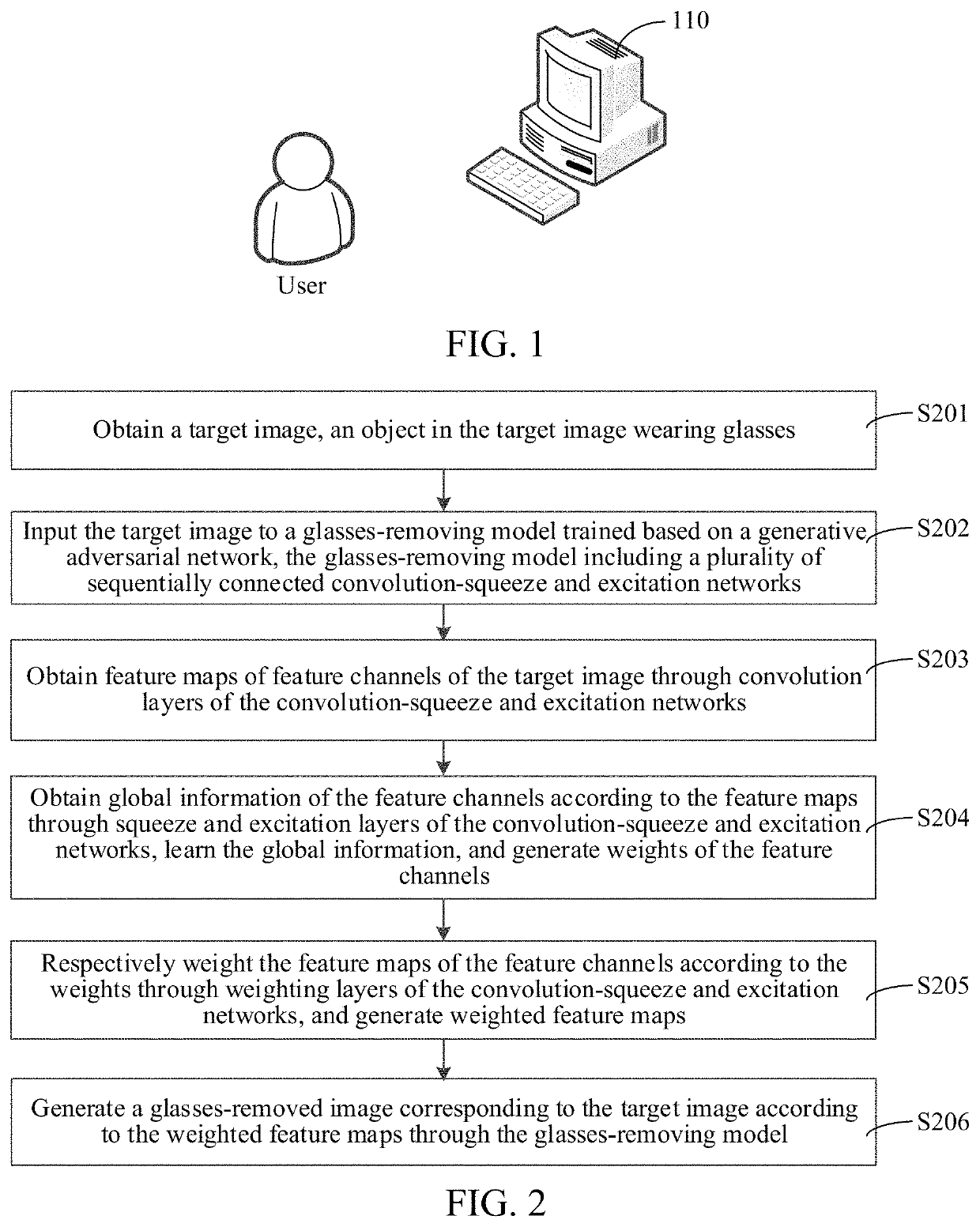

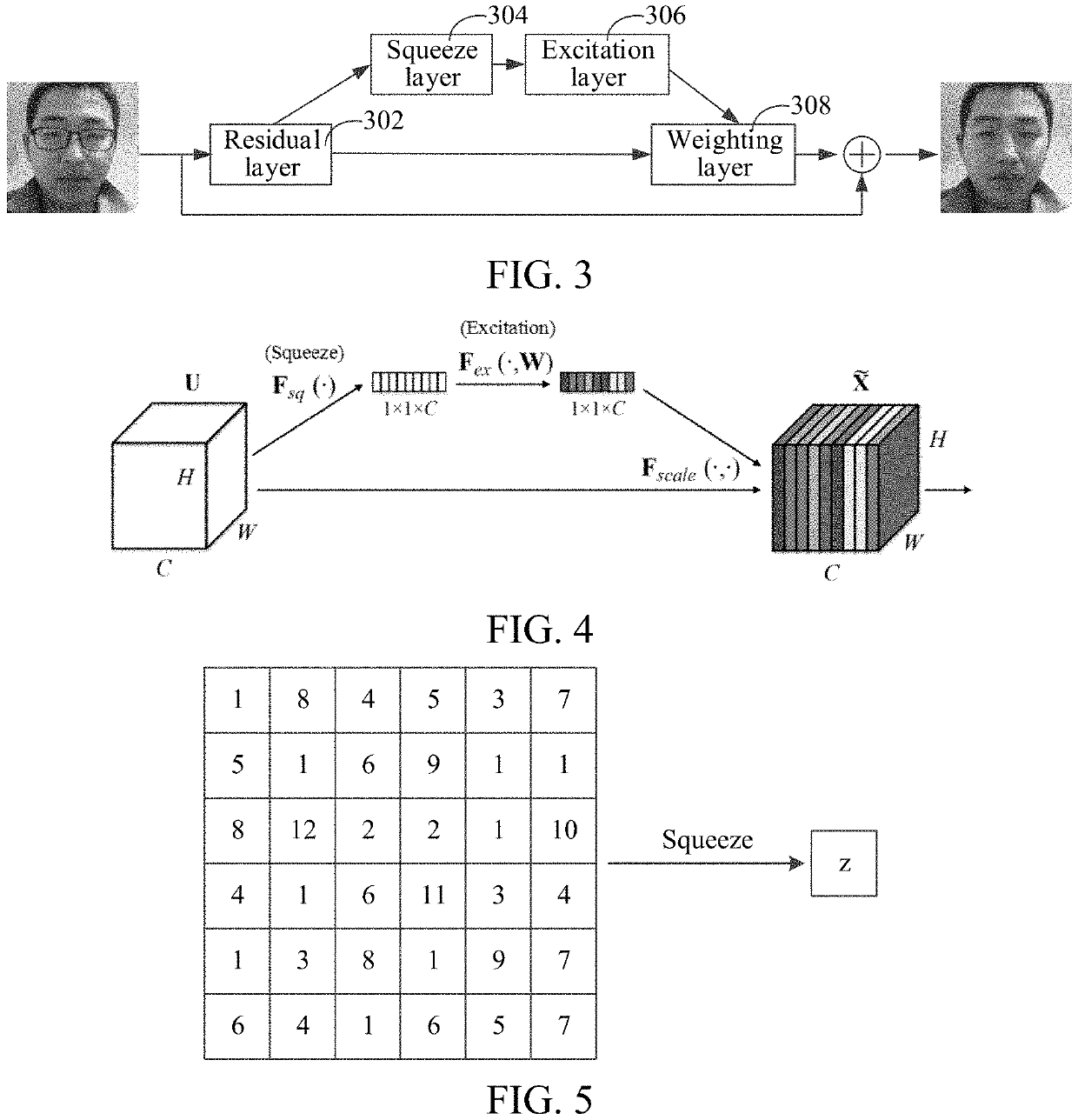

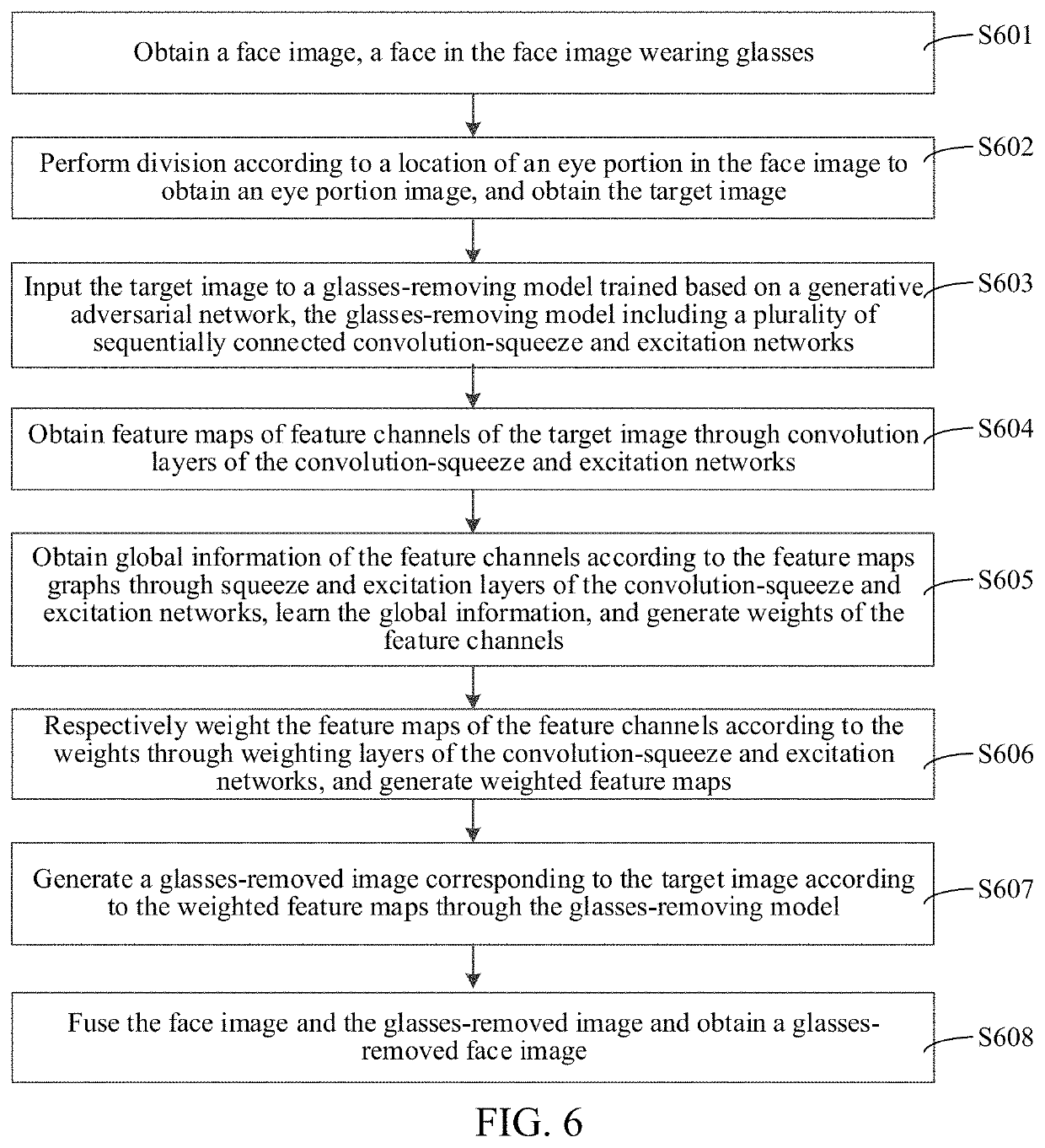

Image processing method and apparatus, facial recognition method and apparatus, and computer device

ActiveUS11403876B2Improve learning effectSuppressing ineffective or slightly effective featuresImage enhancementImage analysisComputer equipmentWears glasses

This application relates to an image processing method and apparatus, a facial recognition method and apparatus, a computer device, and a readable storage medium. The image processing method includes: obtaining a target image comprising an object wearing glasses; inputting the target image to a glasses-removing model comprising a plurality of sequentially connected convolution squeeze and excitation networks; obtaining feature maps of feature channels of the target image through convolution layers of the convolution squeeze and excitation networks; obtaining global information of the feature channels according to the feature maps through squeeze and excitation layers of the convolution squeeze and excitation networks, learning the global information, and generating weights of the feature channels; weighting the feature maps of the feature channels according to the weights through weighting layers of the convolution squeeze and excitation networks, respectively, and generating weighted feature maps; and generating a glasses-removed image corresponding to the target image according to the weighted feature maps through the glasses-removing model. The glasses in the image can be effectively removed using the method.

Owner:TENCENT TECH (SHENZHEN) CO LTD
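The glasses-removal abstract above describes squeeze and excitation layers that turn per-channel global information into channel weights, followed by weighting layers that rescale the feature maps. The sketch below illustrates that flow on plain nested lists, assuming global average pooling for the squeeze and a two-layer ReLU/sigmoid bottleneck for the excitation, as in the usual squeeze-and-excitation design. The weight matrices `w1` and `w2` are arbitrary example numbers, not learned parameters from the patent.

```python
import math


def squeeze(feature_maps):
    """Global average pooling: one scalar per feature channel."""
    return [
        sum(sum(row) for row in fm) / (len(fm) * len(fm[0]))
        for fm in feature_maps
    ]


def excite(z, w1, w2):
    """Bottleneck of two dense layers: ReLU, then sigmoid channel weights."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, z))) for row in w1]
    return [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]


def reweight(feature_maps, weights):
    """Scale every value of a channel by that channel's weight."""
    return [
        [[value * s for value in row] for row in fm]
        for fm, s in zip(feature_maps, weights)
    ]


# Two 2x2 feature channels and assumed (not learned) weights.
maps = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[2.0, 4.0], [6.0, 8.0]],
]
w1 = [[0.5, 0.1]]      # 2 channels -> 1 hidden unit
w2 = [[1.0], [-1.0]]   # 1 hidden unit -> 2 channel weights

z = squeeze(maps)
weights = excite(z, w1, w2)
weighted_maps = reweight(maps, weights)
print(z, [round(w, 3) for w in weights])
```

Channels with weights near zero are suppressed and channels with weights near one pass through almost unchanged.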

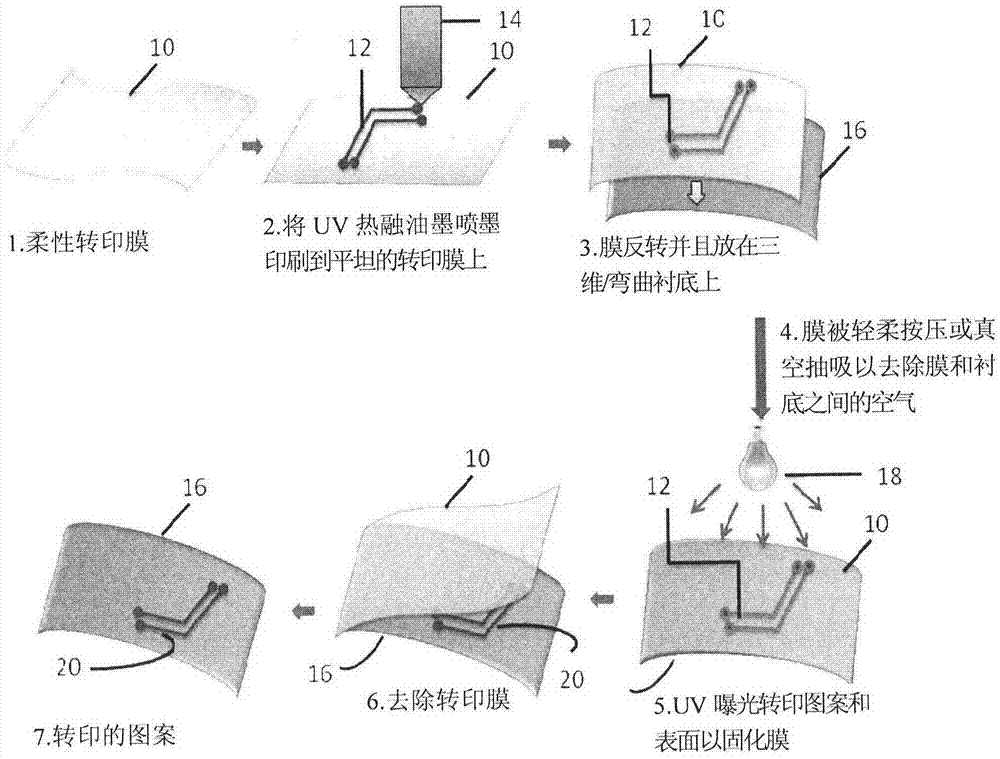

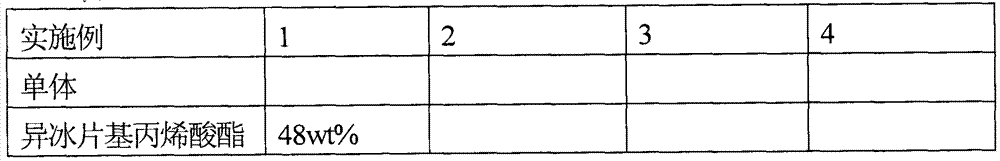

Imaging three dimensional substrates using a transfer film

InactiveCN104749877AFine featuresSmall featuresLiquid surface applicatorsPhotomechanical coating apparatusMonomerPhysics

Actinic radiation curable inks are selectively applied to flexible films which allow the transmission of actinic radiation and then are applied such that the side of the flexible films on which the curable inks are applied contact surfaces of three dimensional substrates. Actinic radiation is applied to the flexible films which cause the ink to cure on the three dimensional substrate. The flexible films are removed leaving the cured ink on the substrates.

Owner:ROHM & HAAS ELECTRONICS MATERIALS LLC

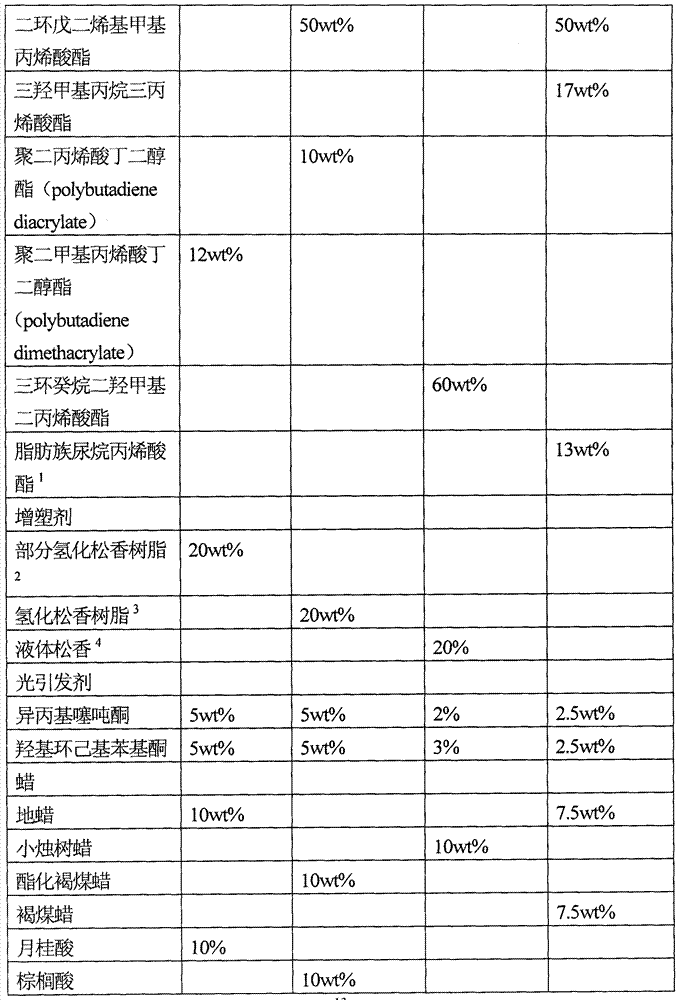

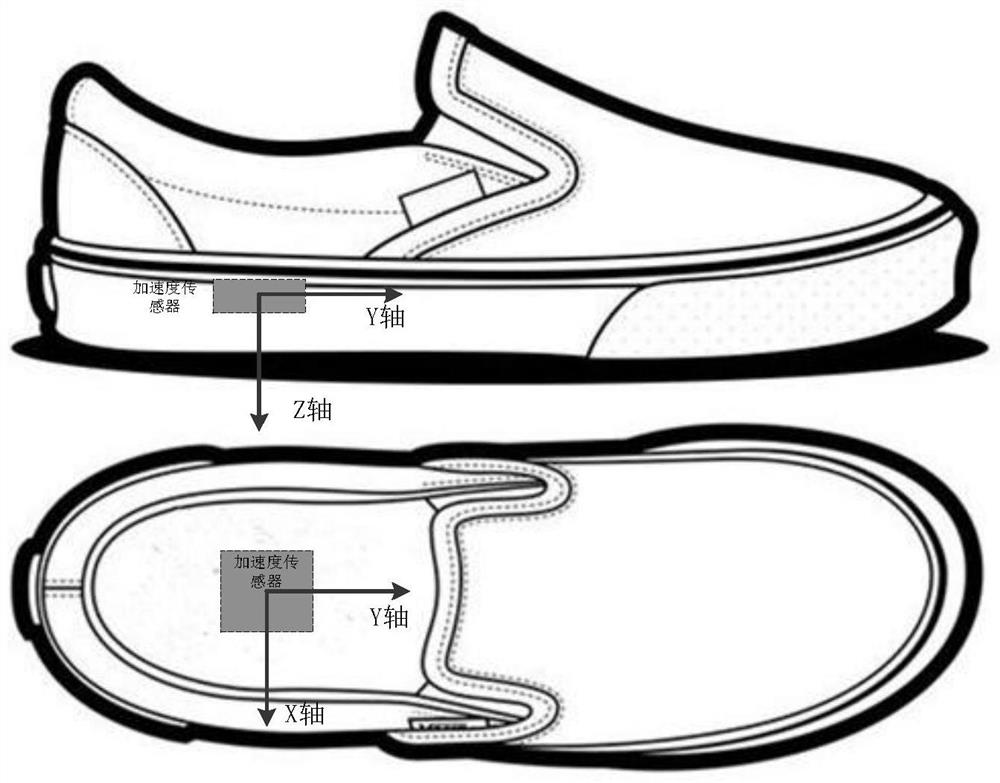

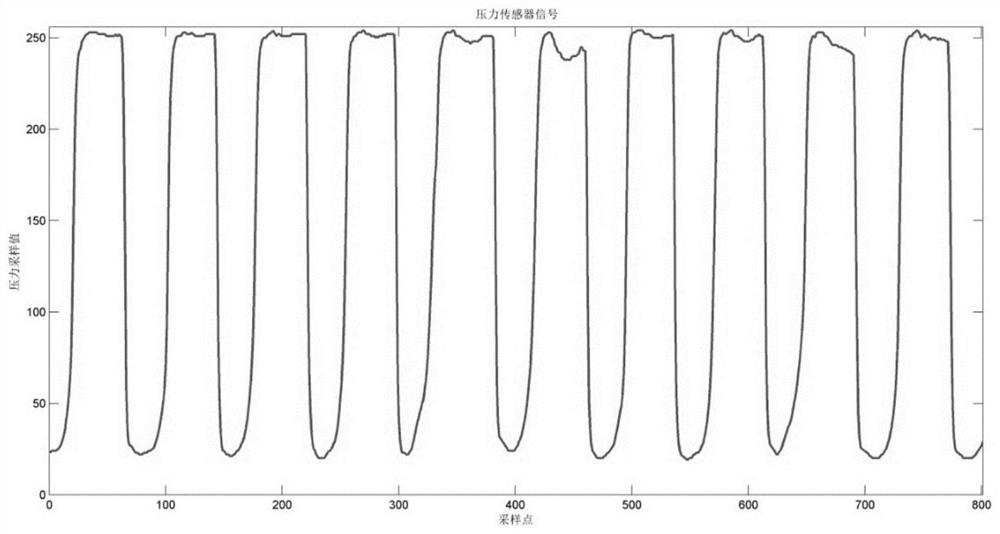

Lower limb action recognition method based on pressure and acceleration sensor

InactiveCN107092861BFeature validEffective Feature ExtractionCharacter and pattern recognitionDiagnostic recording/measuringFeature extractionMedicine

The invention discloses a lower limb movement recognition method based on pressure and acceleration sensors. The method is implemented as follows. First, the pressure sensor signal of the human lower limb movement is collected in real time and preprocessed; the rising and falling edges of the pressure sensor data mark the start and end of a lower limb movement. When a rising edge of the pressure is detected, the three-axis acceleration signal of the acceleration sensor starts to be collected and stored; when the falling edge is detected, collection stops. The three-axis acceleration signal collected between the rising edge and the falling edge is called the acceleration signal segment. Next, frequency-domain features and statistical features are extracted from the acceleration signal segment, and dimensionality reduction is applied to the extracted features. Finally, a trained classifier classifies the dimension-reduced feature data to obtain the classification result of the action pattern.

Owner:SOUTH CHINA UNIV OF TECH +1
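The lower limb abstract above uses rising and falling edges of the pressure signal to cut out acceleration signal segments. A minimal sketch of that segmentation follows, assuming an edge is a crossing of a fixed pressure threshold. The threshold value, the function name and the sample data are illustrative, and the abstract does not say how edges are detected.

```python
def segment_by_pressure(pressure, accel, threshold):
    """Cut accel into segments between rising and falling pressure edges."""
    segments = []
    start = None
    for i in range(1, len(pressure)):
        was_on = pressure[i - 1] >= threshold
        is_on = pressure[i] >= threshold
        if is_on and not was_on:
            start = i  # rising edge: movement begins
        elif was_on and not is_on and start is not None:
            segments.append(accel[start:i])  # falling edge: movement ends
            start = None
    return segments


# Assumed toy recording: pressure samples and matching 3-axis acceleration.
pressure = [0, 0, 5, 6, 5, 0, 0, 7, 7, 0]
accel = [(i, i * 0.1, -i) for i in range(len(pressure))]

segments = segment_by_pressure(pressure, accel, threshold=3)
for seg in segments:
    print(len(seg), seg[0], seg[-1])
```

Each returned segment would then feed the frequency-domain and statistical feature extraction described in the abstract.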
