Standing long jump evaluation method based on deep learning pose estimation

Pose estimation and deep learning technology, applied in neural network learning methods, computing, and computer components, addresses the problem of low judgment accuracy and achieves high accuracy.

Examples

Embodiment 1

[0028] A standing long jump evaluation method based on deep learning pose estimation comprises the following steps:

[0029] A. Capture the image before take-off and scale it, preferably to 640×480.
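The text specifies only the target size. Key points detected on the scaled frame usually have to be mapped back to the original frame before any distance is measured. The minimal sketch below assumes a plain (non-aspect-preserving) resize; the helper names are hypothetical. With OpenCV, the resize itself would be `cv2.resize(frame, (640, 480))`, which takes the target size as (width, height).

```python
# Hypothetical helpers: the source only states that frames are scaled to 640x480.
# Mapping coordinates between resolutions is an illustrative assumption.
TARGET_W, TARGET_H = 640, 480


def to_scaled(point: tuple[float, float], orig_w: int, orig_h: int) -> tuple[float, float]:
    """Map a point from original pixel coordinates onto the 640x480 frame."""
    x, y = point
    return x * TARGET_W / orig_w, y * TARGET_H / orig_h


def to_original(point: tuple[float, float], orig_w: int, orig_h: int) -> tuple[float, float]:
    """Map a point detected on the 640x480 frame back to original pixel coordinates."""
    x, y = point
    # Multiply before dividing to keep exact results for integer-friendly sizes.
    return x * orig_w / TARGET_W, y * orig_h / TARGET_H
```

For a 1920×1080 camera, the centre of the scaled frame `(320, 240)` maps back to `(960.0, 540.0)`.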

[0030] B. Define the long jump detection area. The width of the detection area is the maximum distance from the take-off point of the long jump to the landing point. Its height is not limited, as long as it covers the detection range of the long jump area. The deep convolutional neural network detects the feature points (key points) of the human body and connects them into limbs. These are mainly the key points of the limbs below the head: the neck, left shoulder, right shoulder, left elbow, right elbow, left wrist, right wrist, left thigh, right thigh, left knee, right knee, left ankle and right ankle.
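The detection area and the 13 key points above can be represented as simple data structures. The sketch below is an illustration under stated assumptions. The area is treated as an axis-aligned pixel rectangle whose x range runs from the take-off line to the farthest landing point. The y bounds default to unbounded because the text places no limit on the height. The key point names, their order, and `DetectionArea` itself are hypothetical.

```python
from dataclasses import dataclass

# The 13 key points listed in the text (head points excluded).
# Names and order are an illustrative assumption.
KEYPOINTS = [
    "neck",
    "left_shoulder", "right_shoulder",
    "left_elbow", "right_elbow",
    "left_wrist", "right_wrist",
    "left_thigh", "right_thigh",
    "left_knee", "right_knee",
    "left_ankle", "right_ankle",
]


@dataclass(frozen=True)
class DetectionArea:
    """Hypothetical pixel rectangle covering the long jump detection area."""
    x_start: float                 # take-off line
    x_end: float                   # farthest possible landing point
    y_top: float = float("-inf")   # height unrestricted by default
    y_bottom: float = float("inf")

    def contains(self, x: float, y: float) -> bool:
        lo, hi = min(self.x_start, self.x_end), max(self.x_start, self.x_end)
        return lo <= x <= hi and self.y_top <= y <= self.y_bottom


def points_in_area(pose: dict[str, tuple[float, float]], area: DetectionArea) -> list[str]:
    """Names of detected key points inside the area, in KEYPOINTS order."""
    return [name for name in KEYPOINTS if name in pose and area.contains(*pose[name])]
```

For example, with `DetectionArea(100, 600)` a right ankle at x = 50 lies behind the take-off line and is excluded, while the neck and left ankle at x = 120 and x = 150 are reported.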

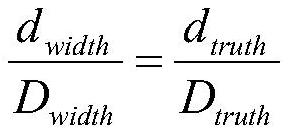

[0031] C. Collect the final landing point of the human body and detect whether the final landing point of th...

Embodiment 2

[0034] Building on the embodiment above, this embodiment discloses the structure and construction method of the deep convolutional neural network used there, described in the context of the standing long jump.

[0035] Deep Convolutional Neural Network Training Dataset:

[0036] The deep convolutional neural network needs a large dataset as the basis for training, and the dataset is a very important factor in evaluation accuracy. The human body key point training data in this embodiment come from two sources: COCO2016 and a self-labeled dataset. COCO2016 is an open-source competition dataset whose Keypoint Evaluation task covers the evaluation of human body key points. For the self-labeled dataset, we mainly collected a large number of posture images, such as human dances, and labeled the human body key points with LabelMe. The purpose o...
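To combine self-labeled images with COCO2016, the LabelMe annotations have to be brought into a common key point format. The sketch below converts a LabelMe JSON file containing `point` shapes into a COCO-style flat list `[x1, y1, v1, ...]`. Here `v = 2` means labeled and visible, and `0, 0, 0` marks a missing point. It assumes, hypothetically, that each LabelMe label equals one of the key point names used here. The actual label names and conversion used in the embodiment are not given in the text.

```python
import json

# Hypothetical label names, assumed to match the LabelMe point labels.
KEYPOINTS = [
    "neck", "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_thigh", "right_thigh",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def labelme_to_keypoints(labelme_json: str) -> list[float]:
    """Convert LabelMe point shapes into a COCO-style [x, y, v, ...] list."""
    data = json.loads(labelme_json)
    found: dict[str, tuple[float, float]] = {}
    for shape in data.get("shapes", []):
        # Only single-point shapes whose label is a known key point are used.
        if shape.get("shape_type") == "point" and shape.get("label") in KEYPOINTS:
            x, y = shape["points"][0]
            found[shape["label"]] = (float(x), float(y))
    flat: list[float] = []
    for name in KEYPOINTS:
        if name in found:
            x, y = found[name]
            flat.extend([x, y, 2])      # labeled and visible
        else:
            flat.extend([0.0, 0.0, 0])  # not labeled
    return flat
```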

Embodiment 3

[0051] Based on the principle of Embodiment 1 and the deep convolutional neural network trained in Embodiment 2, this embodiment discloses a specific example.

[0052] A. Collect the image before take-off and scale the image to 640x480.

[0053] B. Designate the long jump detection area, such as area A in Figure 1. The deep convolutional neural network detects the feature points of the human body and connects the limbs. These are mainly the key points of the limbs below the head: the neck, left shoulder, right shoulder, left elbow, right elbow, left wrist, right wrist, left thigh, right thigh, left knee, right knee, left ankle and right ankle, as shown in Figure 1.
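The limb connection step can be pictured as joining detected key points along a fixed skeleton. The following is a minimal single-person sketch with a hypothetical skeleton. Bottom-up networks such as OpenPose instead group key points into limbs with part affinity fields when several people are in view. The text does not say which approach the embodiment uses.

```python
Point = tuple[float, float]

# Hypothetical skeleton: pairs of key points joined into limbs.
LIMBS = [
    ("neck", "left_shoulder"), ("neck", "right_shoulder"),
    ("left_shoulder", "left_elbow"), ("left_elbow", "left_wrist"),
    ("right_shoulder", "right_elbow"), ("right_elbow", "right_wrist"),
    ("neck", "left_thigh"), ("neck", "right_thigh"),
    ("left_thigh", "left_knee"), ("left_knee", "left_ankle"),
    ("right_thigh", "right_knee"), ("right_knee", "right_ankle"),
]


def connect_limbs(pose: dict[str, Point]) -> list[tuple[Point, Point]]:
    """Line segments for every limb whose two end points were both detected."""
    return [(pose[a], pose[b]) for a, b in LIMBS if a in pose and b in pose]
```

Limbs with a missing end point are skipped rather than guessed. Each returned segment could then be drawn onto the frame, for example with `cv2.line`.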

[0054] C. When the jumper lands, determine whether the landing point is within the detection area. If so, determine which limb feature points of the jumper are within the detection area. Delay for a certain period of time, such as 1 second or 2 seconds, t...


© 2025 PatSnap. All rights reserved.