Video hybrid encoding and decoding method and device based on deep learning, and medium

A deep-learning-based hybrid video coding technology in the field of video coding. It addresses the difficulty of further improving the compression rate and improves video coding and compression performance.


Examples

Embodiment 1

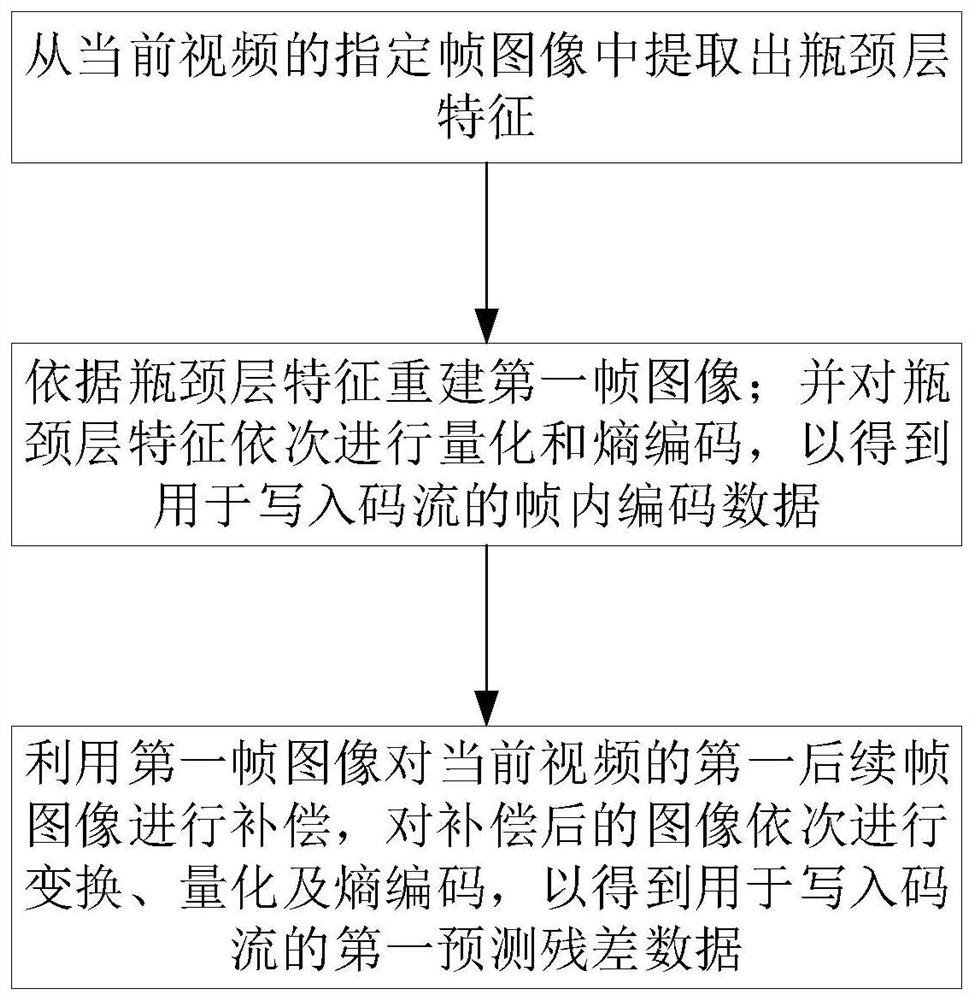

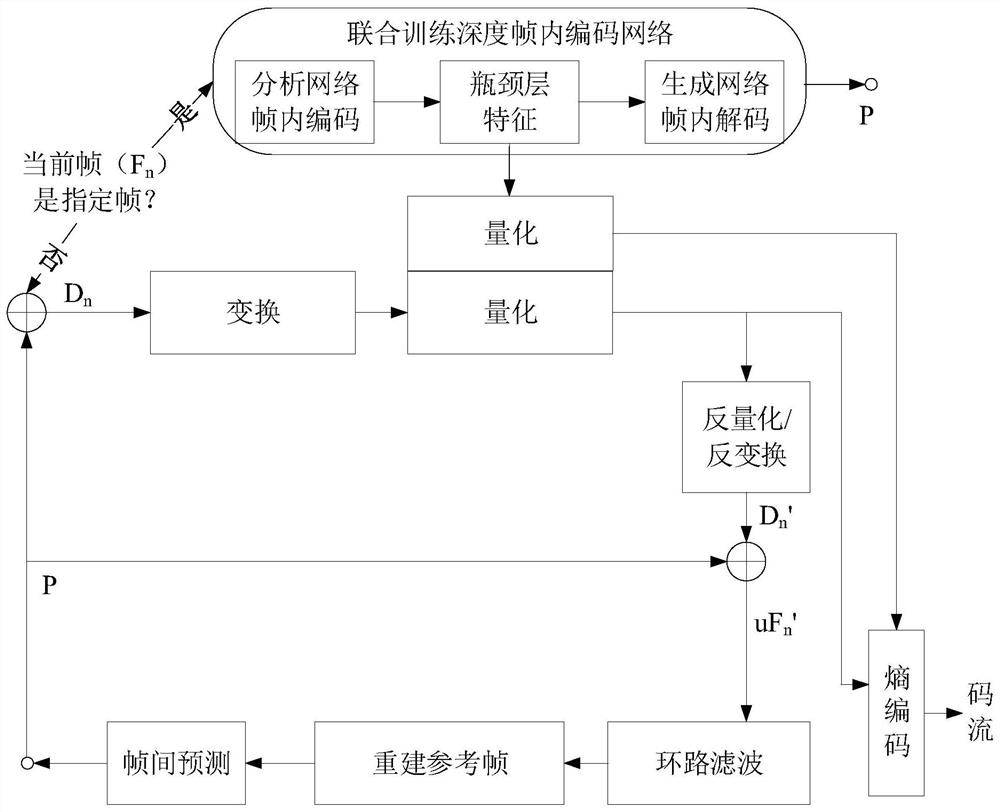

[0051] As shown in Figures 1 and 2, this embodiment provides a deep-learning-based hybrid video coding method that performs intra-frame coding with a deep learning autoencoder. Specifically, the hybrid video encoding method may include, but is not limited to, the following steps.

[0052] First, intra-frame coding is performed to extract bottleneck layer features from the specified frame image of the current video. In some preferred embodiments of the present invention, extracting the bottleneck layer features from the specified frame image includes: grouping all frame images of the current video in order from front to back to obtain multiple groups of images, and taking the first frame image of each group as the specified frame image. For example, a current video with 1600 frames can be divided into 100 groups of 16 frames each; each group of images includes the first subsequent frame image and ...
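The grouping step can be sketched in Python as below. This is a minimal illustration, assuming a fixed group size of 16 (taken from the 1600-frame example) and 0-based frame indices. The function names are hypothetical, and letting the last group be shorter when the frame count is not a multiple of the group size is an assumption, since the excerpt does not say how that case is handled.

```python
from typing import List


def group_frames(num_frames: int, group_size: int) -> List[List[int]]:
    """Split frame indices 0..num_frames-1 into consecutive groups, front to back."""
    if group_size <= 0:
        raise ValueError("group_size must be positive")
    # Assumption: the final group may be shorter than group_size.
    return [list(range(start, min(start + group_size, num_frames)))
            for start in range(0, num_frames, group_size)]


def specified_frames(groups: List[List[int]]) -> List[int]:
    """The first frame of each group is the specified (intra-coded) frame."""
    return [g[0] for g in groups]


groups = group_frames(1600, 16)
print(len(groups))                   # 100
print(specified_frames(groups)[:3])  # [0, 16, 32]
```

In this sketch only the specified frames would go through the autoencoder; the remaining 15 frames of each group would be coded with inter-frame prediction.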

Embodiment 2

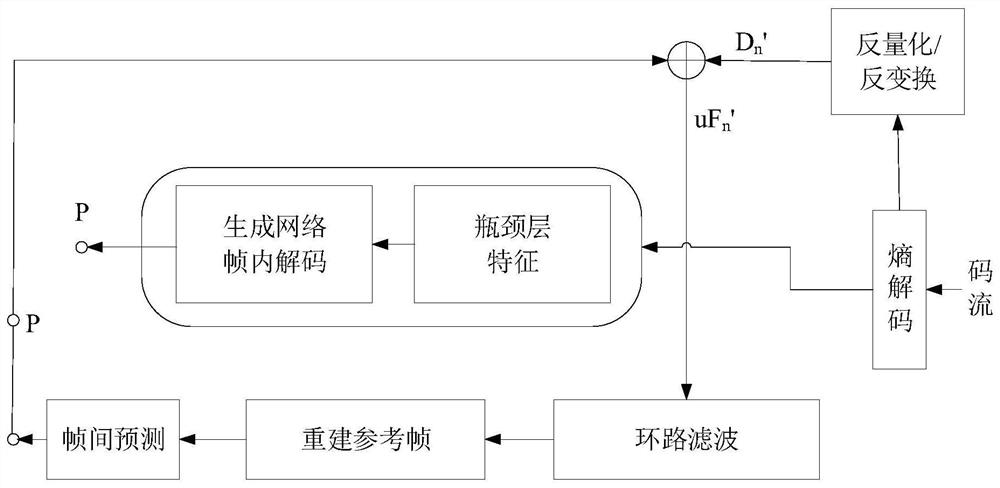

[0059] As shown in Figures 3 and 4, this embodiment provides a deep-learning-based video decoding method corresponding to the video encoding method of any embodiment of the present invention; it decodes the data generated by the hybrid video encoding method of any embodiment of the present invention.

[0060] Specifically, the deep-learning-based video decoding method of this embodiment includes, but is not limited to, the following steps.

[0061] First, entropy decoding is performed on the intra-frame coded data in the received bitstream to obtain the bottleneck layer features, and the specified frame image is then decoded from those bottleneck layer features.
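The excerpt does not name the entropy coder used for the bottleneck features; learned image codecs commonly use arithmetic coding driven by a learned probability model. Purely as a simple, runnable stand-in, the sketch below uses signed order-0 Exp-Golomb codes (the se(v) mapping known from H.264) to show the round trip from bits back to quantized bottleneck values. The bit-string representation, the function names, and the latent values are hypothetical, and the synthesis network that turns the decoded features into the frame image is not shown.

```python
from typing import List


def _to_unsigned(v: int) -> int:
    # Signed-to-unsigned mapping used by H.264 se(v): 0, 1, -1, 2, -2 -> 0, 1, 2, 3, 4
    return 2 * v - 1 if v > 0 else -2 * v


def _to_signed(n: int) -> int:
    # Inverse of _to_unsigned
    return (n + 1) // 2 if n % 2 == 1 else -(n // 2)


def exp_golomb_encode(values: List[int]) -> str:
    """Encode signed integers as a string of '0'/'1' bits (order-0 Exp-Golomb)."""
    bits = []
    for v in values:
        code = bin(_to_unsigned(v) + 1)[2:]        # binary of n + 1, M + 1 bits
        bits.append("0" * (len(code) - 1) + code)  # M leading zeros as the prefix
    return "".join(bits)


def exp_golomb_decode(bits: str) -> List[int]:
    """Entropy-decode the bit string back into signed integers."""
    values, i = [], 0
    while i < len(bits):
        zeros = 0
        while bits[i] == "0":                   # count the zero prefix
            zeros += 1
            i += 1
        n = int(bits[i:i + zeros + 1], 2) - 1   # read the M + 1 info bits
        i += zeros + 1
        values.append(_to_signed(n))
    return values


# Hypothetical quantized bottleneck values, flattened in a fixed scan order
latent = [0, 3, -1, 0, 2, -2]
stream = exp_golomb_encode(latent)
decoded = exp_golomb_decode(stream)
print(decoded == latent)  # True
```

After decoding, the flat list would be reshaped to the bottleneck tensor shape agreed between encoder and decoder before being passed to the synthesis network.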

[0062] Next, entropy decoding, inverse quantization, inverse transformation, and compensation using the specified frame image are performed on the first prediction residual data in the received bitstream, and loop filtering is then performed on the compensated ...
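The residual path can be illustrated for a single block as below. The excerpt does not specify the transform, quantizer, or block size; this sketch assumes a uniform scalar quantizer and an orthonormal floating-point 2-D DCT-II (standard codecs such as H.264/HEVC use integer approximations), and treats the prediction as already derived from the specified frame by motion compensation. Entropy decoding and the loop filter are omitted, and all names and values are hypothetical.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C (rows are basis vectors), so C @ C.T == I."""
    k = np.arange(n)[:, None]
    x = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)  # DC row scaled to sqrt(1/n)
    return c


def reconstruct_block(levels: np.ndarray, qstep: float,
                      prediction: np.ndarray) -> np.ndarray:
    """Inverse quantization, inverse 2-D DCT and compensation for one square block."""
    coeffs = levels * qstep              # uniform scalar inverse quantization
    c = dct_matrix(levels.shape[0])
    residual = c.T @ coeffs @ c          # inverse of the forward transform C @ X @ C.T
    recon = prediction + residual        # add the motion-compensated prediction
    return np.clip(np.rint(recon), 0, 255).astype(np.uint8)


# Hypothetical example: flat prediction of 100 and a single DC level of 4 at qstep 8.
prediction = np.full((4, 4), 100.0)
levels = np.zeros((4, 4))
levels[0, 0] = 4
block = reconstruct_block(levels, 8.0, prediction)
print(block[0])  # [108 108 108 108]
```

Here the DC level 4 dequantizes to 32, which the orthonormal inverse DCT spreads as a residual of 8 over all 16 samples of the 4x4 block.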

Embodiment 3

[0065] Based on the same inventive concept as Embodiment 1, this embodiment provides a deep-learning-based hybrid video encoding device. The device provides a video coding framework that integrates a deep intra-frame autoencoder with inter-frame motion-compensated prediction, and can select intra-frame mode or non-intra-frame mode at the encoder to achieve efficient video coding and further compression. Specifically, the hybrid video encoding device includes, but is not limited to, the following modules.
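The excerpt states that the encoder can choose between intra-frame and non-intra-frame mode but does not say how the choice is made. One common approach in hybrid codecs is a Lagrangian rate-distortion decision that minimizes J = D + λR; the sketch below assumes that criterion. The class, the mode names, and the distortion and rate numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModeTrial:
    name: str          # e.g. "intra" (autoencoder) or "inter" (motion-compensated)
    distortion: float  # e.g. sum of squared errors against the original frame
    rate_bits: float   # estimated bits needed to code the frame in this mode


def choose_mode(trials: List[ModeTrial], lam: float) -> str:
    """Pick the mode with the smallest Lagrangian cost J = D + lam * R."""
    return min(trials, key=lambda t: t.distortion + lam * t.rate_bits).name


trials = [ModeTrial("intra", 1200.0, 5000.0), ModeTrial("inter", 1500.0, 1800.0)]
print(choose_mode(trials, 0.5))   # inter (J: 3700 vs 2400)
print(choose_mode(trials, 0.05))  # intra (J: 1450 vs 1590)
```

A larger λ weights rate more heavily, so the cheaper inter mode wins; a small λ favors the lower-distortion intra mode.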

[0066] Analysis network module: its input is the original signal and its output is the bottleneck layer features; that is, the analysis network module extracts the bottleneck layer features from the specified frame image of the current video. In this embodiment, all frame images of the current video are grouped in order from front to back to obtain multiple groups of images, and the first frame image of each grou...
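The analysis network architecture is not given in this excerpt. The NumPy sketch below only illustrates the shape of the idea (original signal in, downsampled multi-channel bottleneck features out), assuming two stride-2 convolutions with 2x2 kernels, a ReLU between them (learned codecs often use GDN instead), untrained random weights, and rounding as the quantizer. Every function name, layer size, and weight here is hypothetical.

```python
from typing import List, Tuple

import numpy as np


def conv2x2_stride2(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """2x2 convolution with stride 2 (non-overlapping patches).

    x: (H, W, C_in) with even H and W; w: (4 * C_in, C_out); b: (C_out,).
    """
    h, wd, c = x.shape
    patches = x.reshape(h // 2, 2, wd // 2, 2, c).transpose(0, 2, 1, 3, 4)
    patches = patches.reshape(h // 2, wd // 2, 4 * c)
    return patches @ w + b


def make_params(channels: List[int], seed: int = 0) -> List[Tuple[np.ndarray, np.ndarray]]:
    """Random (untrained) weights for each layer; a real codec would learn these."""
    rng = np.random.default_rng(seed)
    params, c_in = [], 1
    for c_out in channels:
        params.append((rng.normal(0.0, 0.5, (4 * c_in, c_out)), np.zeros(c_out)))
        c_in = c_out
    return params


def analysis_network(frame: np.ndarray, params) -> np.ndarray:
    """Map a grayscale frame (H, W) to bottleneck features (H/4, W/4, C)."""
    x = frame[..., None].astype(np.float64) / 255.0  # (H, W) -> (H, W, 1) in [0, 1]
    for i, (w, b) in enumerate(params):
        x = conv2x2_stride2(x, w, b)
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)  # ReLU between layers
    return x


frame = np.random.default_rng(1).integers(0, 256, (64, 64))
features = analysis_network(frame, make_params([32, 8]))
bottleneck = np.rint(features)  # quantized features to be entropy coded
print(features.shape)  # (16, 16, 8)
```

With these assumed sizes, a 64x64 frame (4096 samples) becomes a 16x16x8 bottleneck (2048 values) before quantization and entropy coding.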

