Intelligent vehicle target real-time detection and positioning method based on bionic vision

Real-time detection technology for smart cars, applied in image data processing, instruments, character and pattern recognition, and related fields. It addresses two problems: the amount of visual data computation in unmanned driving cannot be reduced, and a semantic SLAM map cannot be built online in real time. The claimed effects are high positioning accuracy, fast processing speed, and a wider observation angle.

Examples

Embodiment 1

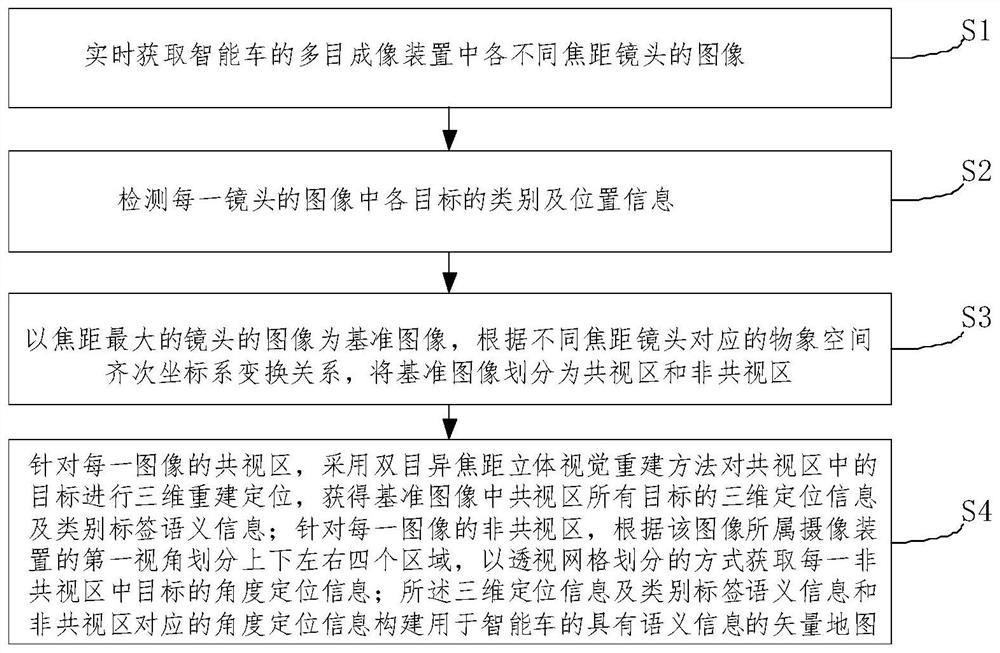

[0074] As shown in Figure 1, this embodiment provides a bionic-vision-based method for real-time detection and positioning of smart car targets, which includes the following steps:

[0075] Step S1, acquiring, in real time, the images from the lenses of different focal lengths in the multi-eye imaging device of the smart car;

[0076] Step S2, detecting the category and position information of each target in the image from each lens.

[0077] For example, the YOLOv5 real-time target detection algorithm can be used to detect the category and position information of each target in the image from each lens.

[0078] The targets in this embodiment may include traffic lights, speed limit signs, pedestrians, small animals, vehicles, lane markings, and so on. This list is illustrative rather than limiting; the actual targets depend on the images captured.

[0079] The above category and position information includes: position information, size information and cate...
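As a minimal sketch of how the per-lens detection result described above might be held in memory, the record below stores a category together with a position (box centre) and a size (box width and height). The class name `Detection`, the field names, the `lens_id` label, and the helper `from_corner_box` are all hypothetical and do not come from the patent; the corner-format input `(x1, y1, x2, y2)` in pixels is likewise an assumption about what a detector hands back.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One detected target in the image from one lens (hypothetical layout)."""
    lens_id: str     # assumed label for the lens that produced the image
    category: str    # e.g. "pedestrian" or "traffic light"
    cx: float        # box centre x, in pixels (position information)
    cy: float        # box centre y, in pixels (position information)
    width: float     # box width, in pixels (size information)
    height: float    # box height, in pixels (size information)


def from_corner_box(lens_id, category, x1, y1, x2, y2):
    """Convert a corner-format box (x1, y1, x2, y2) to centre plus size."""
    if x2 < x1 or y2 < y1:
        raise ValueError("box corners must satisfy x1 <= x2 and y1 <= y2")
    return Detection(
        lens_id=lens_id,
        category=category,
        cx=(x1 + x2) / 2.0,
        cy=(y1 + y2) / 2.0,
        width=float(x2 - x1),
        height=float(y2 - y1),
    )


# Example: a pedestrian box reported for an assumed short focal length lens.
det = from_corner_box("short_focal_0", "pedestrian", 100, 50, 180, 250)
print(det)
```

Keeping the lens label next to each box is one way to let later steps tell which sub-field of view a detection came from; the patent text shown here does not specify a storage format.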

Embodiment 2

[0120] This embodiment of the present invention addresses the engineering requirements of real-time detection and positioning for unmanned driving on an embedded platform. To greatly improve the target detection and positioning efficiency of the unmanned visual information processing system, it provides a bionic-vision-based method for real-time detection and positioning of smart car targets, as shown in Figure 9.

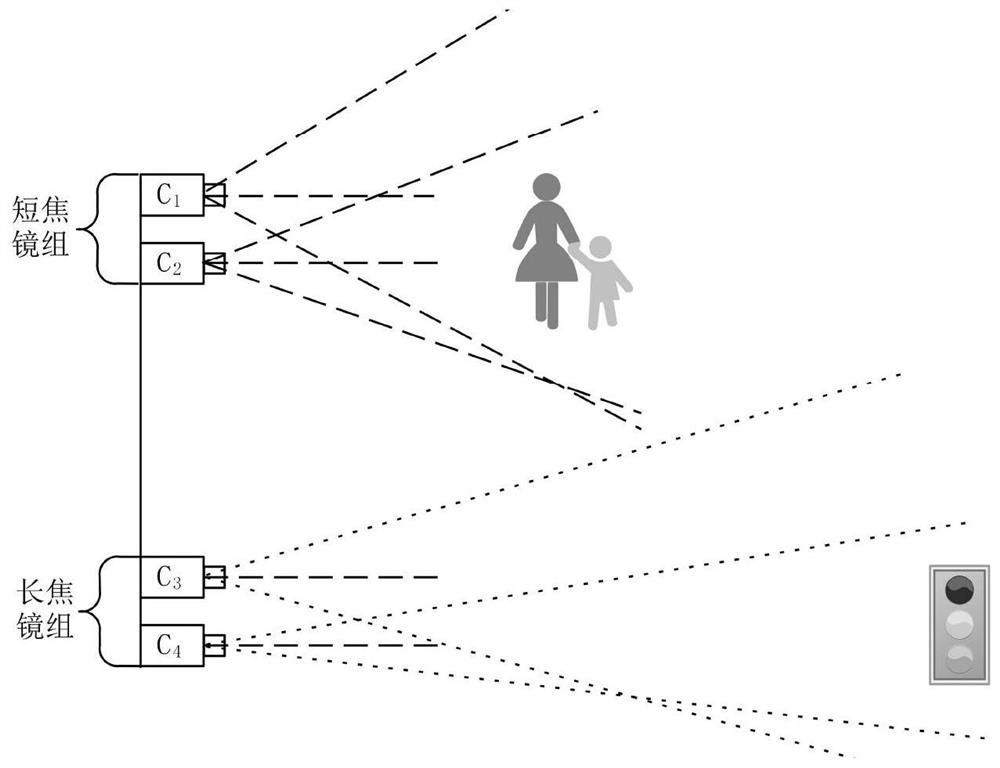

[0121] The method of this embodiment imitates the insect compound-eye imaging system by forming a multi-eye imaging system that enlarges the field of view. It uses the YOLOv5 deep learning target detection method to classify and identify multiple targets and to segment the targets of interest in the entire image one by one. Then the sub-fields of view of the multi-eye imaging system are divided into common-view areas, where they overlap, and non-common-view areas, where they do not, and data coordinate conversion is performed on the common-...
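The split into common-view and non-common-view areas can be illustrated with a simplified sketch. It assumes two lenses that share an optical axis, use sensors of identical size and resolution, follow the pinhole model, and sit close enough together that parallax can be ignored; none of these assumptions is stated in the patent. Under them, the region of the short focal length image that the long focal length lens also sees is a centred rectangle scaled by `f_short / f_long`. The function names `common_view_rect` and `classify` are hypothetical.

```python
def common_view_rect(img_w, img_h, f_short, f_long):
    """Rectangle (x1, y1, x2, y2) of the short-focal image also seen by the long-focal lens.

    Assumes coaxial pinhole lenses with identical sensors and no parallax.
    """
    if not 0 < f_short <= f_long:
        raise ValueError("expected 0 < f_short <= f_long")
    scale = f_short / f_long          # narrower view covers this fraction of the wide image
    w, h = img_w * scale, img_h * scale
    x1 = (img_w - w) / 2.0
    y1 = (img_h - h) / 2.0
    return (x1, y1, x1 + w, y1 + h)


def classify(cx, cy, rect):
    """Label a detection centre as lying in the common-view or non-common-view area."""
    x1, y1, x2, y2 = rect
    inside = x1 <= cx <= x2 and y1 <= cy <= y2
    return "common-view" if inside else "non-common-view"


# Example: a 1920x1080 image, focal lengths in a 1:2 ratio.
rect = common_view_rect(1920, 1080, f_short=8.0, f_long=16.0)
print(rect)                        # (480.0, 270.0, 1440.0, 810.0)
print(classify(960, 540, rect))    # common-view
print(classify(100, 540, rect))    # non-common-view
```

A real multi-eye rig would need calibrated intrinsics and extrinsics for each lens rather than this centred-rectangle shortcut.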

Embodiment 3

[0163] According to another aspect, an embodiment of the present invention also provides an intelligent vehicle driving system. The system includes a control device and a multi-eye imaging device connected to the control device, and the multi-eye imaging device includes at least one short focal length lens and at least one long focal length lens.

[0164] After the control device receives the images collected by the short focal length lens and the long focal length lens, it uses the smart car real-time detection and positioning method described in either Embodiment 1 or Embodiment 2 above to build a three-dimensional semantic map of the smart car online in real time.

[0165] In practical applications, the short focal length lenses of the multi-eye imaging device in this embodiment are an 8cm focal length lens and a 12cm focal length lens, and the long focal length (telephoto) lenses are a 16cm focal length lens and a 25cm focal length...
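To see why mixing short and long focal lengths widens the overall observation angle, the standard pinhole relation FOV = 2·atan(d / 2f) can be evaluated for the focal lengths quoted above. This is a sketch only: the sensor width `SENSOR_WIDTH_CM` is a hypothetical value chosen for illustration (the patent text shown here does not give one), and the focal lengths are used in the units the text states.

```python
import math


def horizontal_fov_deg(focal_length, sensor_width):
    """Horizontal field of view in degrees for a pinhole lens.

    Both arguments must use the same length unit.
    """
    if focal_length <= 0 or sensor_width <= 0:
        raise ValueError("focal_length and sensor_width must be positive")
    return math.degrees(2.0 * math.atan(sensor_width / (2.0 * focal_length)))


SENSOR_WIDTH_CM = 3.6                        # hypothetical sensor width, not from the patent
focal_lengths_cm = [8.0, 12.0, 16.0, 25.0]   # values as quoted in the text

fovs = [horizontal_fov_deg(f, SENSOR_WIDTH_CM) for f in focal_lengths_cm]
for f, fov in zip(focal_lengths_cm, fovs):
    print(f"focal length {f:g} cm -> horizontal FOV {fov:.1f} degrees")
```

Whatever sensor width is assumed, the field of view shrinks as the focal length grows, so the short focal length lenses supply the wide coverage and the long focal length lenses supply a narrower, more magnified view.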
