Lightweight convolutional neural network reconfigurable deployment method based on FPGA

A lightweight convolutional neural network technique, applied in the field of artificial intelligence. It addresses the high computational and storage complexity of neural network algorithms, which makes them difficult to deploy and makes high computing performance and energy efficiency difficult to achieve.


Embodiment

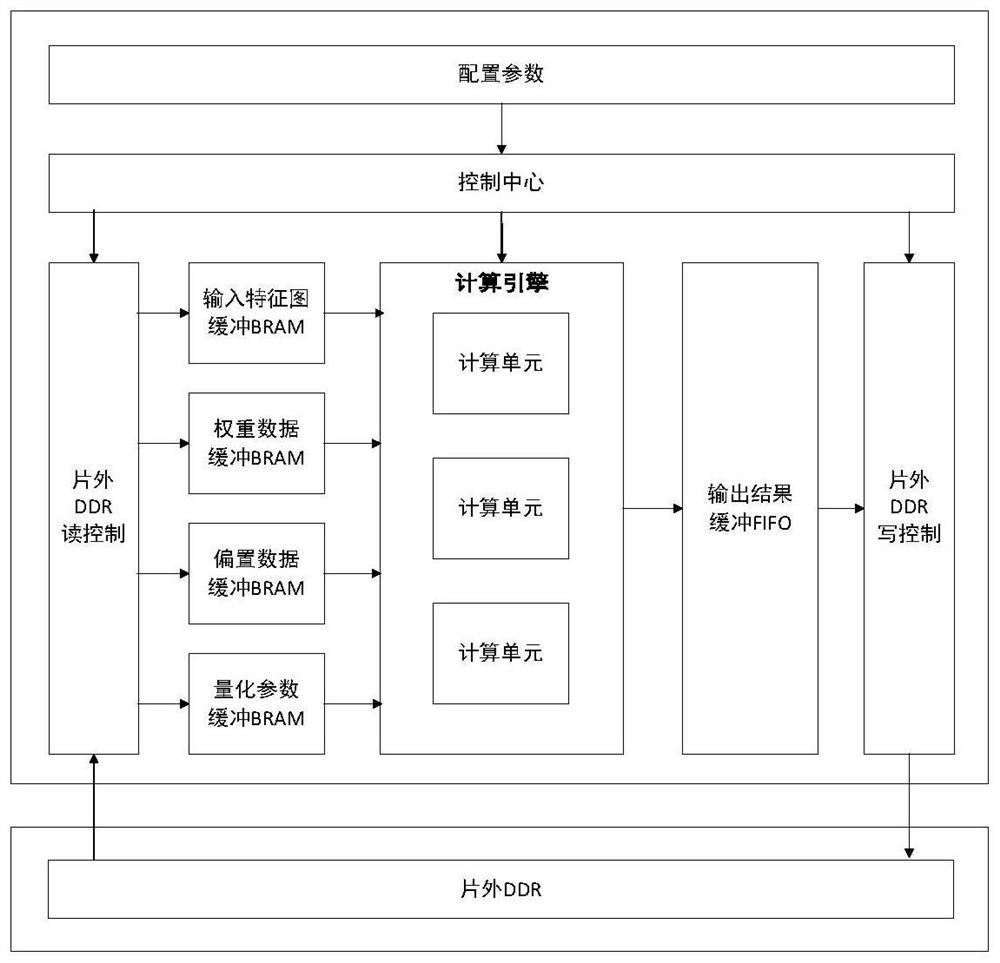

[0081] As shown in Figure 1, the FPGA-based lightweight convolutional neural network accelerator is designed and implemented on the Xilinx ZCU102 heterogeneous computing platform, with the convolution acceleration part deployed mainly on the FPGA. The ZCU102 platform provides 2520 available DSPs and 912 available 36K BRAMs. Current high-end FPGA platforms integrate hard-core DSPs for high-speed computation; here, DSP refers to the FPGA's on-chip computing resources. The number of available DSPs is the number of hard-core DSPs on the FPGA platform that are not yet occupied, and it is used to evaluate how far the FPGA's on-chip computing capacity can be increased.
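The resource figures above can be turned into a rough budget. The following is a minimal sketch, not the patent's method: it assumes each DSP completes one multiply-accumulate per cycle, counts one MAC as two operations, and uses a hypothetical 200 MHz clock that the text does not state. The helper names `bram_capacity_bytes` and `peak_gops` are illustrative only.

```python
# Figures quoted in the text for the Xilinx ZCU102 platform.
AVAILABLE_DSPS = 2520
AVAILABLE_BRAM36 = 912

# A "36K" block RAM holds 36 Kib, i.e. 36 * 1024 bits.
BITS_PER_BRAM36 = 36 * 1024


def bram_capacity_bytes(num_bram36: int) -> int:
    """Total on-chip block RAM capacity in bytes (an upper bound on usable storage)."""
    return num_bram36 * BITS_PER_BRAM36 // 8


def peak_gops(num_dsps: int, clock_mhz: float, macs_per_dsp: int = 1) -> float:
    """Theoretical peak throughput in GOP/s.

    Assumption: every DSP performs `macs_per_dsp` multiply-accumulates per
    cycle, and one MAC counts as two operations (multiply + add).
    """
    return 2 * num_dsps * macs_per_dsp * clock_mhz * 1e6 / 1e9


if __name__ == "__main__":
    # The 200 MHz clock is a hypothetical value chosen for illustration.
    print("BRAM capacity (bytes):", bram_capacity_bytes(AVAILABLE_BRAM36))
    print("Peak GOP/s at 200 MHz:", peak_gops(AVAILABLE_DSPS, clock_mhz=200.0))
```

Both numbers are ceilings: a real design rarely keeps every DSP busy on every cycle, and block RAM is consumed in fixed-size blocks rather than as a flat pool of bytes.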

[0082] In this embodiment, the Yolo-Tiny backbone network is used as the target network. It comprises 9 convolutional layers, 9 ReLU layers and 6 max-pooling layers. The input feature map size of the first layer is 416×416×3. The output feature map size of the last lay...
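To give a sense of scale for the 416×416×3 input, the sketch below estimates its storage footprint and compares it with the block RAM capacity implied by 912 36K BRAMs. The bit widths (8 and 16) are assumptions for illustration; this excerpt does not state the accelerator's data precision, and `feature_map_bytes` is a hypothetical helper.

```python
# Input feature map shape of the first layer, as given in the text.
INPUT_SHAPE = (416, 416, 3)

# Block RAM capacity implied by 912 blocks of 36 Kib each, in bytes.
BRAM_BYTES = 912 * 36 * 1024 // 8


def feature_map_bytes(height: int, width: int, channels: int, bits_per_value: int) -> int:
    """Bytes needed to store one feature map, rounded up to a whole byte."""
    total_bits = height * width * channels * bits_per_value
    return (total_bits + 7) // 8


if __name__ == "__main__":
    # 8-bit and 16-bit are assumed precisions, not values from the source.
    for bits in (8, 16):
        size = feature_map_bytes(*INPUT_SHAPE, bits_per_value=bits)
        share = size / BRAM_BYTES
        print(f"{bits}-bit input: {size} bytes, {share:.1%} of block RAM")
```

Under either assumed precision the input map alone fits on chip, but weights and intermediate feature maps compete for the same block RAM, so this says nothing about whether a whole layer can be buffered.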
