Underwater environment three-dimensional reconstruction method based on binocular vision

A technology for three-dimensional reconstruction of underwater environments, applied in the fields of underwater 3D reconstruction and computer vision, which addresses problems such as existing methods failing to achieve ideal results.


Specific Embodiment

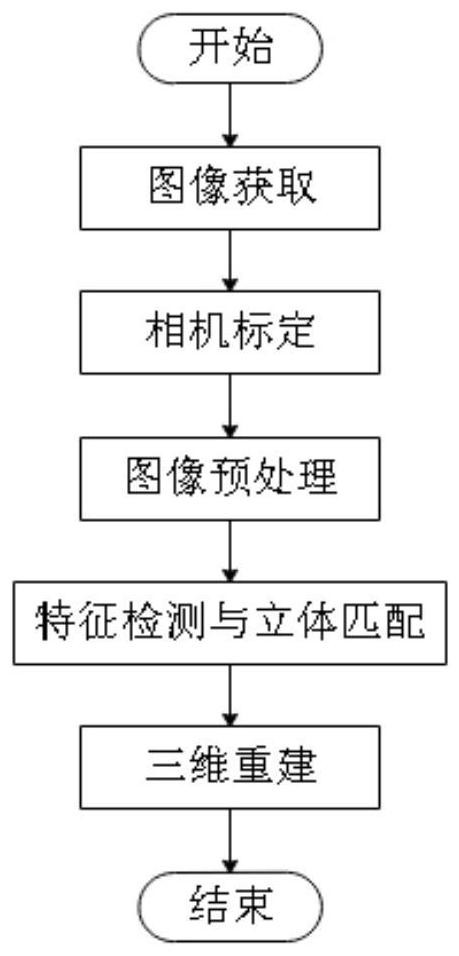

[0175] Step 1: Collect underwater images and calibrate the binocular camera underwater using Zhang Zhengyou's calibration method to obtain the required parameters of the binocular camera. The main theoretical basis is formulas (3) and (4).
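For reference, the closed-form intrinsic step of Zhang Zhengyou's method can be sketched with NumPy as below. This is an illustrative sketch, not the patent's implementation: formulas (3) and (4) are not reproduced in this excerpt, and the function names and the `scale` conditioning parameter are hypothetical. It recovers one camera's intrinsic matrix K from the plane-to-image homographies of a calibration target. A complete binocular calibration would additionally estimate lens distortion, refine all parameters by nonlinear optimization, and solve for the rotation and translation between the two cameras (for example with OpenCV's `cv2.stereoCalibrate`).

```python
import numpy as np


def _v(H, i, j):
    """Zhang's v_ij vector, so that h_i^T B h_j = v_ij . b for b = [B11, B12, B22, B13, B23, B33]."""
    hi, hj = H[:, i], H[:, j]
    return np.array([
        hi[0] * hj[0],
        hi[0] * hj[1] + hi[1] * hj[0],
        hi[1] * hj[1],
        hi[2] * hj[0] + hi[0] * hj[2],
        hi[2] * hj[1] + hi[1] * hj[2],
        hi[2] * hj[2],
    ])


def intrinsics_from_homographies(Hs, scale=1000.0):
    """Closed-form intrinsics from >= 3 homographies H = K [r1 r2 t] (up to scale)."""
    # Hypothetical conditioning: work with N @ H = (N @ K) [r1 r2 t], N = diag(1/s, 1/s, 1).
    N = np.diag([1.0 / scale, 1.0 / scale, 1.0])
    rows = []
    for H in Hs:
        Hn = N @ H
        rows.append(_v(Hn, 0, 1))                   # r1 . r2 = 0
        rows.append(_v(Hn, 0, 0) - _v(Hn, 1, 1))    # |r1| = |r2|
    # b spans the null space of V: take the right singular vector of the smallest singular value.
    B11, B12, B22, B13, B23, B33 = np.linalg.svd(np.vstack(rows))[2][-1]
    v0 = (B12 * B13 - B11 * B23) / (B11 * B22 - B12 ** 2)
    lam = B33 - (B13 ** 2 + v0 * (B12 * B13 - B11 * B23)) / B11
    alpha = np.sqrt(lam / B11)
    beta = np.sqrt(lam * B11 / (B11 * B22 - B12 ** 2))
    gamma = -B12 * alpha ** 2 * beta / lam
    u0 = gamma * v0 / beta - B13 * alpha ** 2 / lam
    K_norm = np.array([[alpha, gamma, u0], [0.0, beta, v0], [0.0, 0.0, 1.0]])
    return np.diag([scale, scale, 1.0]) @ K_norm   # undo the conditioning
```

Each view contributes two linear constraints on the symmetric matrix B = K^-T K^-1, so at least three views in general position are needed when the skew term is also estimated. The pre-multiplication by diag(1/scale, 1/scale, 1) only improves numerical conditioning; the result is rescaled afterwards.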

[0176] Step 2: Preprocess the collected underwater images, including image denoising, image enhancement, image sharpening, image restoration, and underwater image defogging, based on formulas (5), (7), (8), (12), and (14).
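Formulas (5), (7), (8), (12), and (14) are not reproduced in this excerpt, so the defogging operation cannot be reconstructed exactly. As a stand-in, the dark channel prior of He et al. is a widely used single-image dehazing technique that is often adapted to underwater images; a minimal NumPy sketch follows, assuming RGB input scaled to [0, 1]. The function names and defaults (`r`, `omega`, `t0`, `top`) are illustrative assumptions, and refinement of the transmission map (e.g. guided filtering) is omitted.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img, r=7):
    """Minimum over colour channels, then over a (2r+1)x(2r+1) patch (edge-padded)."""
    m = img.min(axis=2)
    padded = np.pad(m, r, mode="edge")
    return sliding_window_view(padded, (2 * r + 1, 2 * r + 1)).min(axis=(-2, -1))


def estimate_airlight(img, dark, top=0.001):
    """Brightest input pixel among the top fraction of dark-channel pixels."""
    n = max(1, int(dark.size * top))
    idx = np.argsort(dark.ravel())[-n:]
    candidates = img.reshape(-1, 3)[idx]
    return candidates[candidates.sum(axis=1).argmax()]


def dehaze(img, r=7, omega=0.95, t0=0.1, airlight=None):
    """Invert the haze model I = J t + A (1 - t); returns (J, t)."""
    img = np.asarray(img, dtype=np.float64)
    if airlight is None:
        A = estimate_airlight(img, dark_channel(img, r))
    else:
        A = np.asarray(airlight, dtype=np.float64)
    # Transmission estimate; omega < 1 keeps a little haze for depth perception.
    t = 1.0 - omega * dark_channel(img / A, r)
    t = np.clip(t, t0, 1.0)[:, :, None]   # t0 avoids amplifying noise where t is small
    return (img - A) / t + A, t[:, :, 0]
```

Because red light is absorbed quickly in water, the red channel tends to dominate the dark channel of underwater images; underwater variants of this prior therefore often compute the dark channel from the green and blue channels only.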

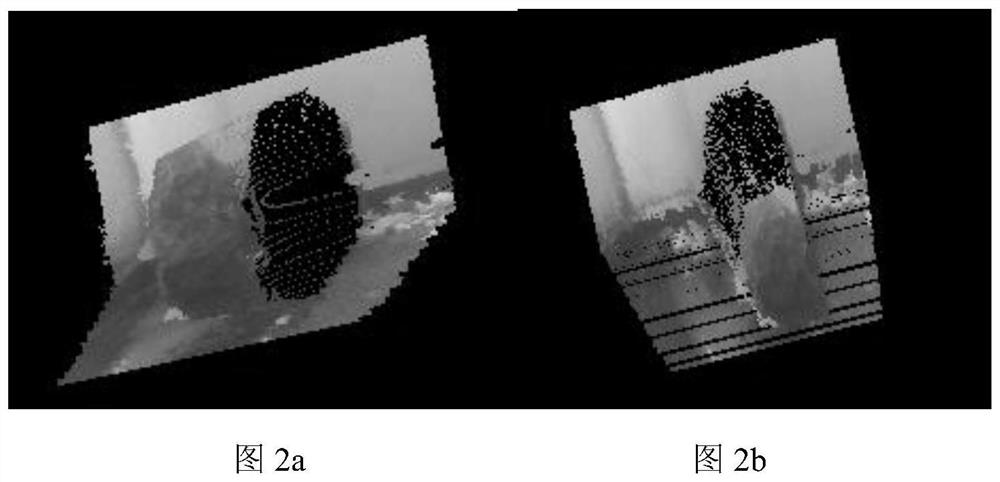

[0177] Step 3: Perform feature detection on the binocular images preprocessed in Step 2, then perform stereo matching with an improved stereo matching algorithm that fuses Census and NCC to obtain a disparity map containing depth information, based on formula (15).
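Formula (15) is not given in this excerpt, so the exact fusion rule is unknown. The sketch below shows one common way to combine the two measures, modelled on the exponential normalization used in AD-Census: each per-pixel cost C is mapped through rho(C, lambda) = 1 - exp(-C / lambda) and the two results are summed, with the Census cost taken as the Hamming distance between Census bit strings and the NCC cost as 1 - NCC. The weights `lam_census` and `lam_ncc`, the window radius `r`, and the winner-take-all selection are assumptions; no cost aggregation or left-right consistency check is included, and the images are assumed to be rectified grayscale arrays.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows(img, r):
    """Flattened (2r+1)x(2r+1) neighbourhood of every pixel, edge-padded: shape (H, W, K)."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    return sliding_window_view(padded, (k, k)).reshape(img.shape[0], img.shape[1], k * k)


def census(img, r=2):
    """Census transform: one boolean bit per neighbour, True where neighbour < centre."""
    return _windows(img, r) < img[:, :, None]


def ncc(a_img, b_img, r=2):
    """Zero-mean normalized cross-correlation of co-located windows, in [-1, 1]."""
    a = _windows(a_img, r)
    b = _windows(b_img, r)
    a = a - a.mean(axis=-1, keepdims=True)
    b = b - b.mean(axis=-1, keepdims=True)
    denom = np.sqrt((a * a).sum(-1) * (b * b).sum(-1)) + 1e-12
    return (a * b).sum(-1) / denom


def fused_disparity(left, right, max_disp, r=2, lam_census=10.0, lam_ncc=0.5):
    """Winner-take-all disparity from a hypothetical Census + NCC fused cost."""
    left = np.asarray(left, dtype=np.float64)
    right = np.asarray(right, dtype=np.float64)
    census_left = census(left, r)
    costs = np.empty((max_disp + 1,) + left.shape)
    for d in range(max_disp + 1):
        # shifted[:, x] = right[:, x - d]; np.roll wraps around at the image border
        shifted = np.roll(right, d, axis=1)
        c_census = (census_left != census(shifted, r)).sum(axis=-1)  # Hamming distance
        c_ncc = 1.0 - ncc(left, shifted, r)  # 0 for a perfect match, up to 2
        costs[d] = (1.0 - np.exp(-c_census / lam_census)) + (1.0 - np.exp(-c_ncc / lam_ncc))
    return costs.argmin(axis=0)
```

Census is robust to the radiometric differences between the two underwater views, while NCC keeps intensity-level detail, which is the usual motivation for fusing them. Because `np.roll` wraps columns around the border, disparities within roughly `max_disp` columns of the left edge are unreliable.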

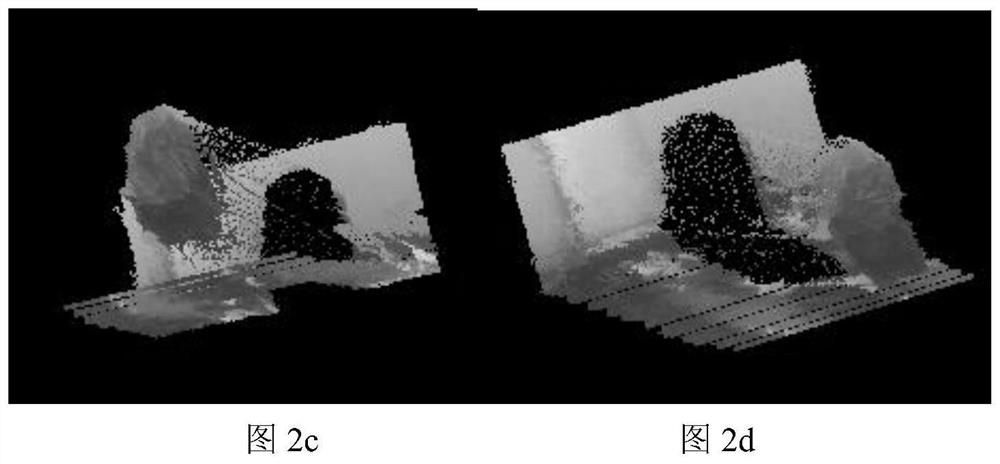

[0178] Step 4: Using a PCL-based 3D reconstruction method that incorporates the moving least squares method, perform 3D reconstruction from the disparity map obtained in Step 3 to recover the underwater 3D environment captured in the images.
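The reconstruction step can be sketched as two parts: reprojecting the disparity map to 3D points with the rectified-stereo relation Z = f * B / d, and smoothing the points with moving least squares. PCL provides MLS through its C++ class `pcl::MovingLeastSquares`; the NumPy sketch below is only a simplified first-order (local plane) variant with Gaussian weights and brute-force neighbour search, not the patent's implementation. The focal length `f`, baseline, and principal point `cx`, `cy` are assumed to come from the Step 1 calibration after rectification, and the function names are hypothetical.

```python
import numpy as np


def disparity_to_points(disp, f, baseline, cx, cy):
    """Reproject a disparity map (pixels) to 3D points, assuming rectified pinhole cameras."""
    v, u = np.indices(disp.shape)
    valid = disp > 0  # zero or negative disparity has no finite depth
    d = disp[valid].astype(np.float64)
    z = f * baseline / d
    x = (u[valid] - cx) * z / f
    y = (v[valid] - cy) * z / f
    return np.column_stack([x, y, z])


def mls_project(points, radius, sigma=None):
    """First-order MLS: project each point onto a Gaussian-weighted local plane fit."""
    sigma = radius if sigma is None else sigma
    out = points.copy()
    for i, p in enumerate(points):
        d2 = ((points - p) ** 2).sum(axis=1)
        mask = d2 <= radius ** 2
        if mask.sum() < 3:
            continue  # too few neighbours to fit a plane; keep the point unchanged
        nb = points[mask]
        w = np.exp(-d2[mask] / sigma ** 2)
        centroid = (w[:, None] * nb).sum(axis=0) / w.sum()
        diff = nb - centroid
        cov = (w[:, None] * diff).T @ diff  # weighted scatter matrix
        normal = np.linalg.eigh(cov)[1][:, 0]  # eigenvector of the smallest eigenvalue
        out[i] = p - np.dot(p - centroid, normal) * normal
    return out
```

For example, a constant disparity of 10 pixels with f = 500 pixels and B = 0.12 m gives Z = 500 × 0.12 / 10 = 6 m for every pixel. PCL's MLS defaults to a second-order polynomial fit and uses a k-d tree for neighbour search, so it scales far better than this O(N²) loop.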
