Cross-view gait recognition method combining LSTM and CNN

A gait recognition technology combining LSTM and CNN, applied in the field of cross-view gait recognition, which addresses problems such as restrictions on application scenes

Embodiment Construction

[0042] The present invention is described in further detail below with reference to the accompanying drawings:

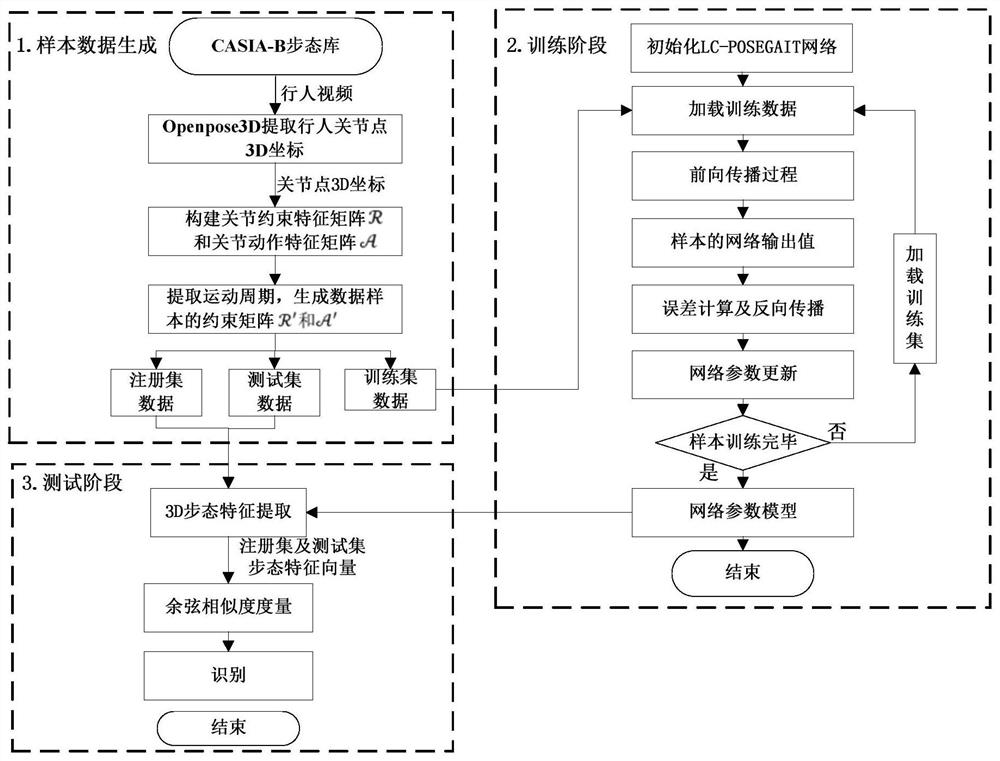

[0043] Referring to Figure 1, the cross-view gait recognition method of the present invention, which combines LSTM with CNN, comprises the following steps:

[0044] 1) Use OpenPose3D to extract the 3D pose data of the pedestrians in the videos of the CASIA-B gait video dataset;

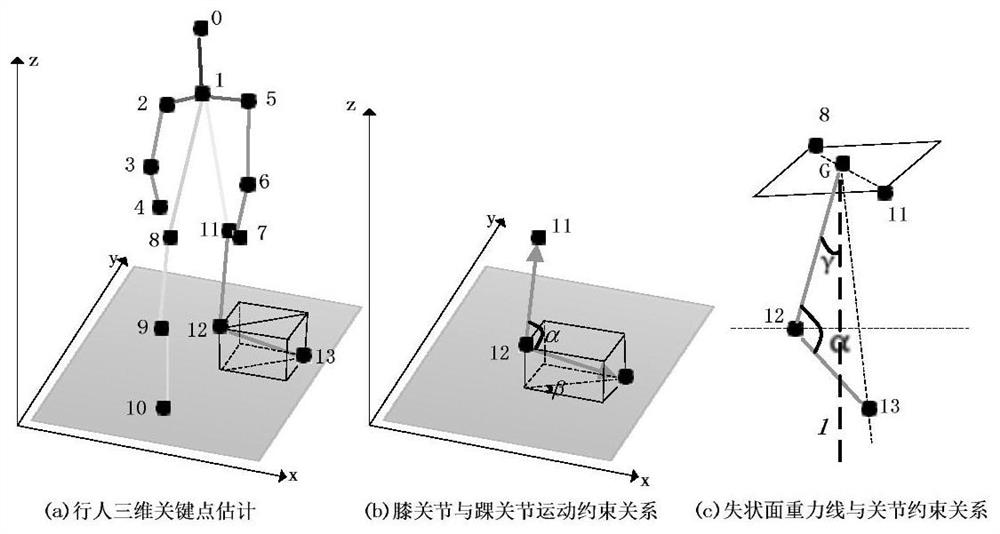

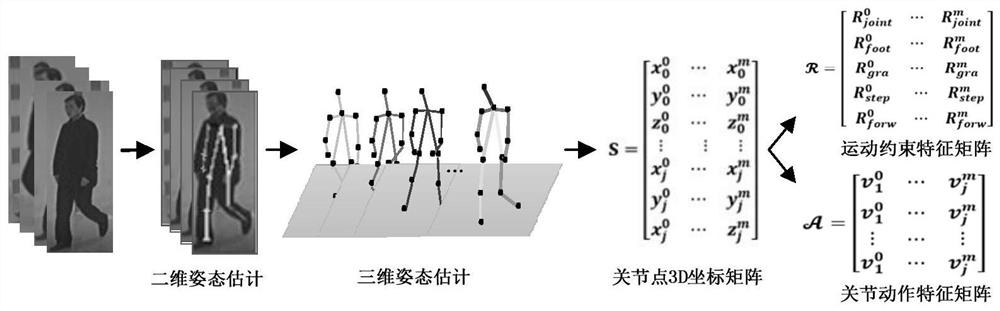

[0045] OpenPose3D is used to extract the 3D pose data of the 124 pedestrians recorded in the CASIA-B gait video dataset released by the Institute of Automation, Chinese Academy of Sciences. Each element of the pose data represents the three-dimensional coordinates of the i-th joint point in the m-th frame of a pedestrian's video. For each pedestrian, the dataset contains 10 walking sequences covering 3 states (carrying a backpack, wearing a coat, normal walking), each captured from 11 viewing angles, for a total of 124×10×11 = 13640 videos; refer to Figure 2;
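The dataset size and pose layout described in step 1) can be illustrated with a minimal Python sketch. It is not taken from the patent. The sequence labels (`nm`, `bg`, `cl`), the 6/2/2 split across the three states, and the 0° to 180° viewing angles in 18° steps follow the public description of CASIA-B and are assumptions relative to the text above. The helper names `enumerate_videos` and `empty_pose_sequence` are hypothetical, and the number of joints is left as a parameter because the text does not state it.

```python
from itertools import product

NUM_SUBJECTS = 124  # pedestrians, as stated in the text

# Assumed CASIA-B naming: 6 normal, 2 backpack, 2 coat sequences (10 in total).
SEQUENCES = (
    [f"nm-{k:02d}" for k in range(1, 7)]
    + [f"bg-{k:02d}" for k in (1, 2)]
    + [f"cl-{k:02d}" for k in (1, 2)]
)

# Assumed viewing angles: 0, 18, ..., 180 degrees (11 views).
VIEWS = list(range(0, 181, 18))


def enumerate_videos():
    """Yield one (subject_id, sequence, view_angle) triple per video."""
    subjects = range(1, NUM_SUBJECTS + 1)
    for subject, sequence, view in product(subjects, SEQUENCES, VIEWS):
        yield subject, sequence, view


def empty_pose_sequence(num_frames, num_joints):
    """Return a placeholder pose container.

    pose[m][i] holds the (x, y, z) coordinates of joint i in frame m,
    matching the indexing used in the text (m-th frame, i-th joint).
    """
    return [
        [(0.0, 0.0, 0.0) for _ in range(num_joints)]
        for _ in range(num_frames)
    ]


videos = list(enumerate_videos())
print(len(videos))  # 124 * 10 * 11 = 13640
```

A real pipeline would fill each `pose[m][i]` entry with the coordinates produced by the pose estimator instead of zeros; the nested-list container here only shows the indexing.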

[0046] 2) Extract the motion constraint data and joint action data of video pedestrian joint points from the 3D pose data obtained in...
