Dynamic vision sensor and laser radar data fusion method

A dynamic vision sensor and lidar data fusion technology, applied in instrumentation, surveying and navigation, photogrammetry/video surveying, and related fields. It addresses the problems of failing to reach pixel-level accuracy and requiring a large amount of computation, with the effects of reducing the amount of computation, improving accuracy, and avoiding adverse influences.

Embodiment

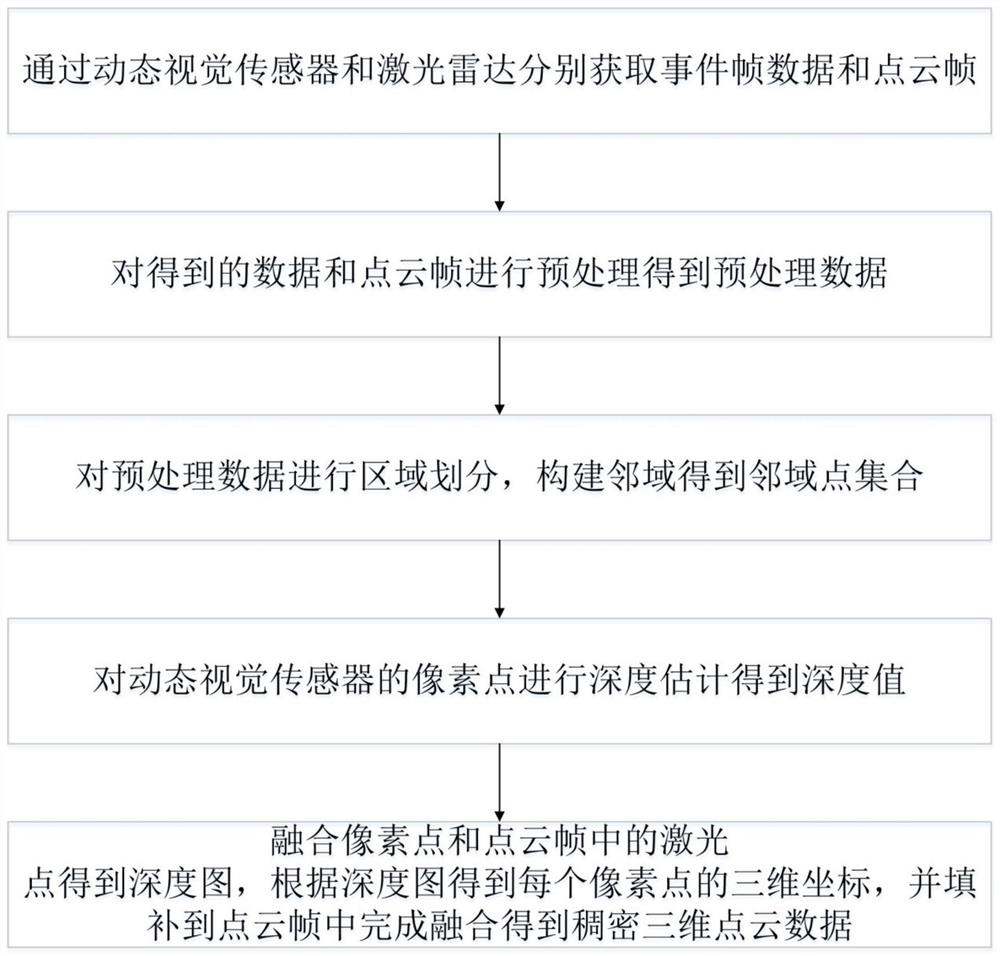

[0063] As shown in Figure 1, an embodiment of the dynamic vision sensor and lidar data fusion method of the present invention comprises the following steps:

[0064] S1: Acquire event frame data from the dynamic vision sensor and point cloud frames from the lidar;

[0065] S2: Preprocess the acquired event frame data and point cloud frames to obtain preprocessed data;

[0066] S3: Divide the preprocessed data into regions and construct neighborhoods to obtain a set of neighborhood points;

[0067] S4: Perform depth estimation on the pixels of the dynamic vision sensor to obtain their depth values;

[0068] S5: Fuse the pixels with the points in the point cloud frame to obtain a depth map, obtain the three-dimensional coordinates of each pixel from the depth map, and fill them into the point cloud frame to complete the fusion and obtain dense three-dimensional point cloud data.
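Steps S3 to S5 can be illustrated with a minimal pure-Python sketch. It is not the patented implementation: the excerpt does not say how regions are divided, how neighborhoods are built, or which depth estimator is used. The sketch assumes that the lidar points are already expressed in the dynamic vision sensor's camera frame (extrinsic calibration done), a pinhole model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, a square pixel window of hypothetical half-width `radius` as the neighborhood, and inverse-distance weighting as a stand-in depth estimator. All function names are illustrative.

```python
import math
from typing import Dict, List, Optional, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[int, int]


def project_points(points: List[Point3D], fx: float, fy: float,
                   cx: float, cy: float) -> Dict[Pixel, float]:
    """Project lidar points (assumed already in the DVS camera frame) onto the
    image plane with a pinhole model; keep the nearest depth per pixel."""
    sparse: Dict[Pixel, float] = {}
    for x, y, z in points:
        if z <= 0:
            continue  # point is behind the sensor
        u = round(fx * x / z + cx)
        v = round(fy * y / z + cy)
        if (u, v) not in sparse or z < sparse[(u, v)]:
            sparse[(u, v)] = z
    return sparse


def neighborhood(sparse: Dict[Pixel, float], pixel: Pixel,
                 radius: int) -> List[Tuple[Pixel, float]]:
    """Collect projected lidar points inside a square window around `pixel`
    (an assumed stand-in for the region division / neighborhoods of S3)."""
    u0, v0 = pixel
    found = []
    for du in range(-radius, radius + 1):
        for dv in range(-radius, radius + 1):
            key = (u0 + du, v0 + dv)
            if key in sparse:
                found.append((key, sparse[key]))
    return found


def estimate_depth(sparse: Dict[Pixel, float], pixel: Pixel,
                   radius: int = 2) -> Optional[float]:
    """Inverse-distance-weighted depth from the neighborhood (assumed S4)."""
    num = den = 0.0
    for (u, v), z in neighborhood(sparse, pixel, radius):
        d = math.hypot(u - pixel[0], v - pixel[1])
        if d == 0:
            return z  # a lidar point projects exactly onto this pixel
        num += z / d
        den += 1.0 / d
    return num / den if den > 0 else None  # None: no lidar support nearby


def back_project(pixel: Pixel, z: float, fx: float, fy: float,
                 cx: float, cy: float) -> Point3D:
    """Invert the pinhole projection to get the 3-D coordinates of a pixel."""
    u, v = pixel
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def densify(points: List[Point3D], event_pixels: List[Pixel], fx: float,
            fy: float, cx: float, cy: float, radius: int = 2) -> List[Point3D]:
    """Fill back-projected event pixels into the point cloud (assumed S5)."""
    sparse = project_points(points, fx, fy, cx, cy)
    dense = list(points)
    for pixel in event_pixels:
        if pixel in sparse:
            continue  # pixel already has a lidar measurement
        z = estimate_depth(sparse, pixel, radius)
        if z is not None:
            dense.append(back_project(pixel, z, fx, fy, cx, cy))
    return dense
```

For example, `densify([(0.0, 0.0, 2.0), (0.1, 0.0, 2.0)], [(52, 50)], 100.0, 100.0, 50.0, 50.0)` returns the two input points plus one new point at approximately `(0.04, 0.0, 2.0)`, because the only projected lidar point inside the window around pixel (52, 50) lies at a depth of 2.0. Inverse-distance weighting is used here only because it is short; any neighborhood-based estimator could take its place.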

[0069] The data produced by dynamic vision sensors is a stream of events. A...
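Because the sensor emits an asynchronous event stream rather than images, the events have to be grouped into event frames before steps S1 to S5 can treat them per pixel. The paragraph is truncated here, so the grouping rule is an assumption: the sketch below counts, for each pixel, the events whose timestamps fall in one fixed time window. The `Event` layout (x, y, t, p), the window length, and the function names are hypothetical.

```python
from typing import Iterable, List, NamedTuple, Tuple


class Event(NamedTuple):
    x: int    # pixel column
    y: int    # pixel row
    t: float  # timestamp in seconds (assumed unit)
    p: int    # polarity, +1 (brighter) or -1 (darker)


def accumulate_event_frame(events: Iterable[Event], width: int, height: int,
                           t_start: float, window: float) -> List[List[int]]:
    """Count the events per pixel whose timestamp lies in
    [t_start, t_start + window); polarity is ignored in this sketch."""
    frame = [[0] * width for _ in range(height)]
    t_end = t_start + window
    for e in events:
        if t_start <= e.t < t_end and 0 <= e.x < width and 0 <= e.y < height:
            frame[e.y][e.x] += 1
    return frame


def active_pixels(frame: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (x, y) of every pixel that received at least one event."""
    return [(x, y) for y, row in enumerate(frame)
            for x, count in enumerate(row) if count > 0]
```

Counting events instead of summing polarities keeps a pixel that saw one ON and one OFF event from cancelling to zero; a signed-polarity frame is an equally plausible alternative.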
