Knowledge distillation method, device and system for pre-trained language model BERT

A technology of language model and distillation method, which is applied in the field of knowledge distillation for the pre-trained language model BERT, can solve the problems of BERT with many parameters, difficult engineering deployment, complex structure, etc., and achieve small parameters, simple structure, and easy to obtain in large quantities Effect

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

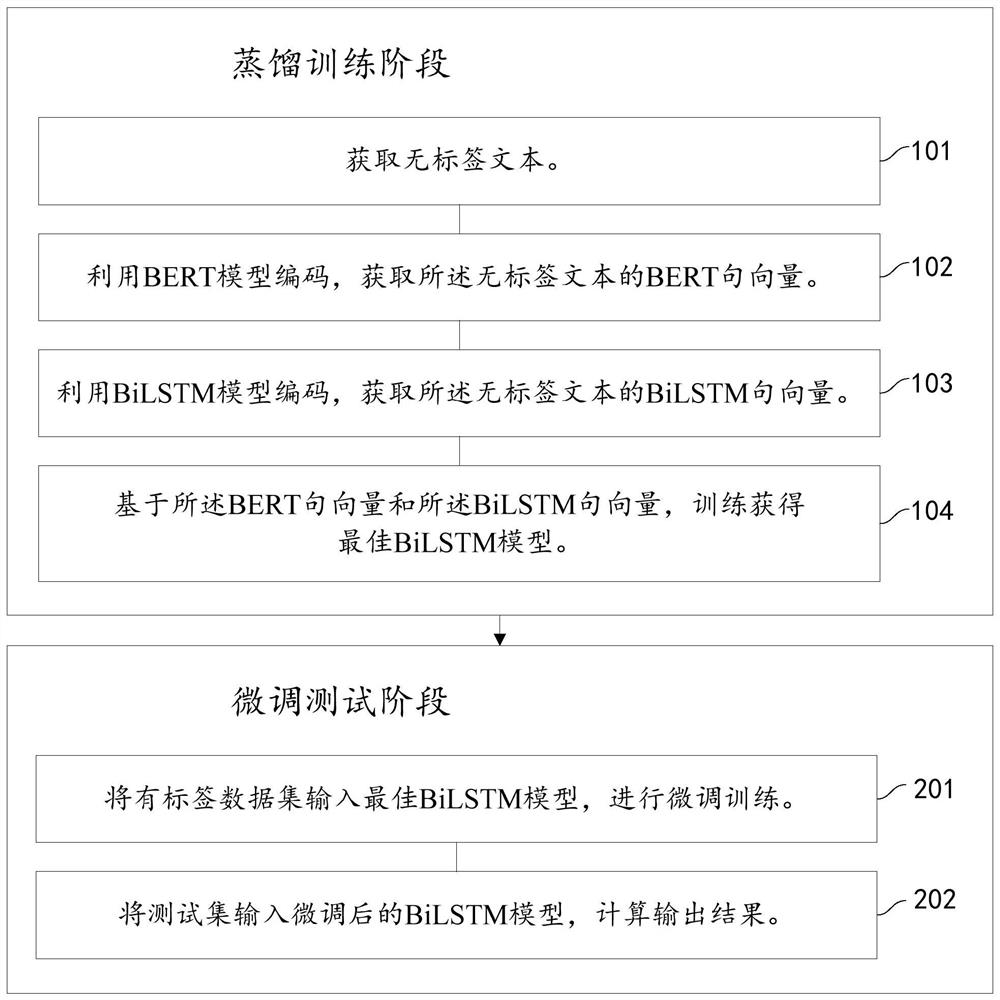

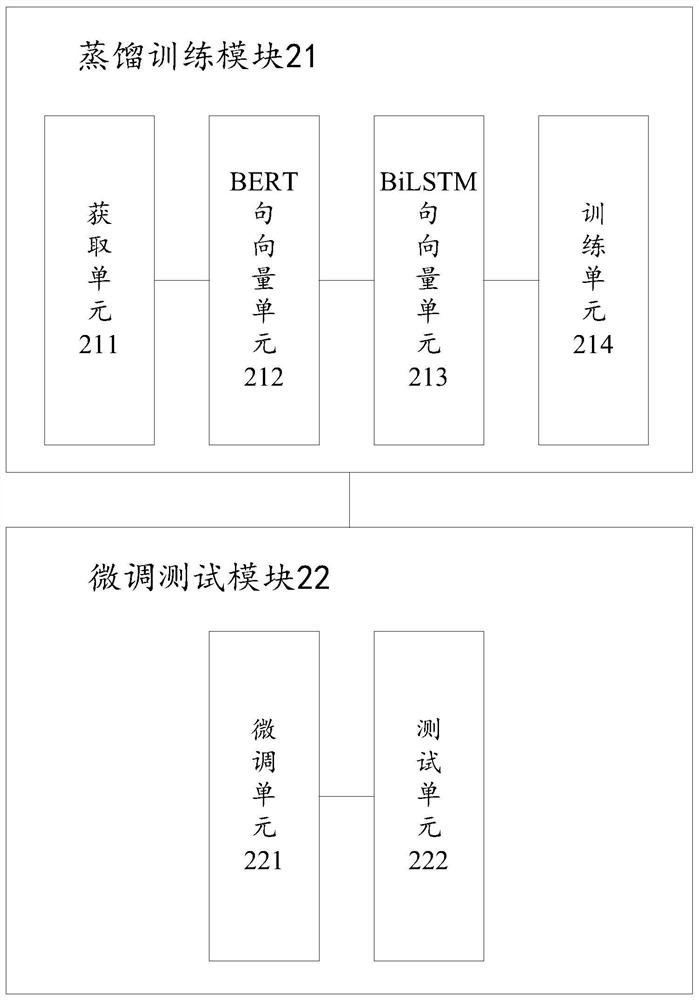

[0044] The application will be further described in detail below in conjunction with the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are only used to explain related inventions, rather than to limit the invention. It should also be noted that, for the convenience of description, only the parts related to the related invention are shown in the drawings.

[0045] It should be noted that in other embodiments, the steps of the corresponding methods may not necessarily be performed in the order shown and described in this specification. In some other embodiments, the method may include more or less steps than those described in this specification. In addition, a single step described in this specification may be decomposed into multiple steps for description in other embodiments; multiple steps described in this specification may also be combined into a single step in other embodiments describe.

[0046] In the case...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com