Low-illumination image target detection method based on image fusion and target detection network

A target detection and image fusion technology, applied in the fields of image processing and machine vision, that addresses problems such as difficulty in accurately identifying targets, reduced accuracy, and poor distinguishability, and achieves improved detection accuracy.

Embodiment Construction

[0046] The present invention is further described below with reference to the embodiments and the accompanying drawings:

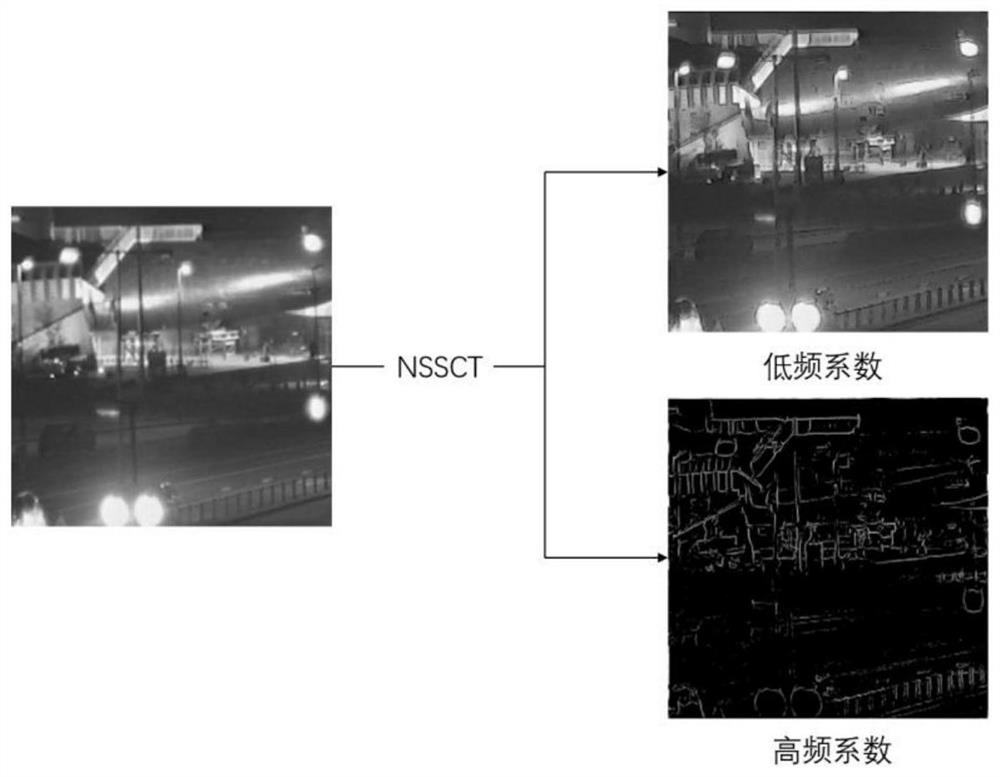

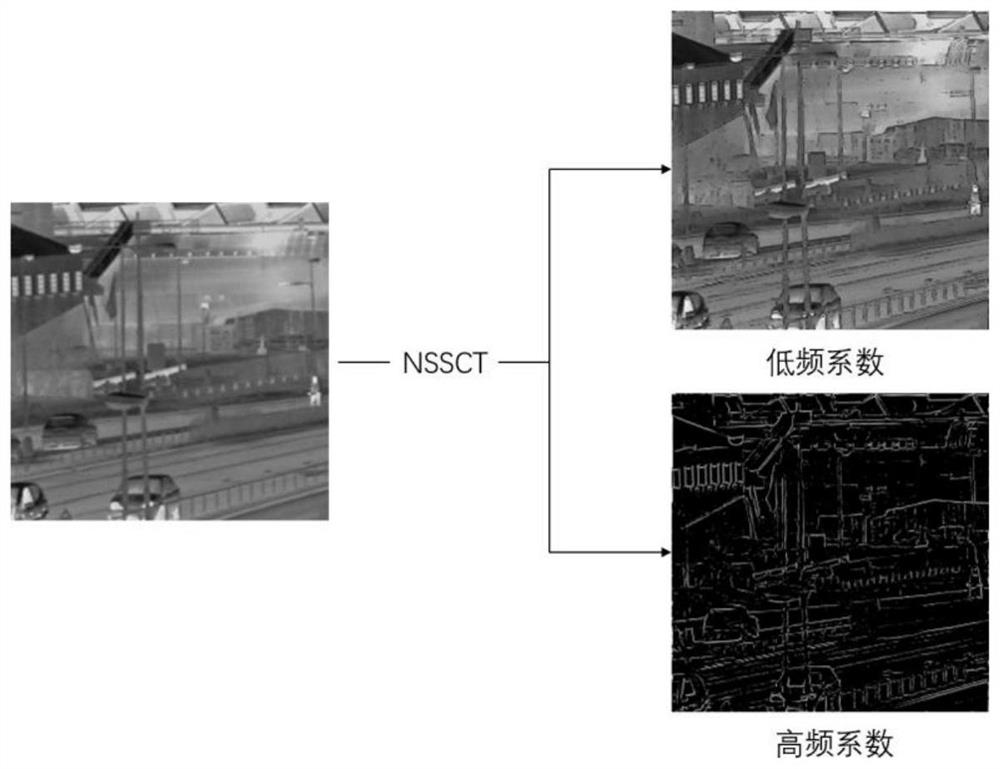

[0047] 1. Apply the NSSCT transform to the visible light image and the infrared image separately

[0048] Compared with visible light images, the gray values of infrared images are generally higher. Relying only on image intensity transformations and other spatial-domain processing methods will generally cause the infrared image components to weigh more heavily in the fused image. Therefore, in order to preserve edge details while improving the contrast of the fused image, the present invention adopts the NSSCT transform, which is introduced as follows:

[0049] The NSSCT transform builds on the shearlet transform by using a non-subsampled scale transformation and non-subsampled directional filters, and it has good translation invariance. Therefore, the NSSCT transform is an optimal approximation to sparse representations of ima...
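The translation invariance that [0049] attributes to non-subsampled processing can be illustrated with a much simpler stand-in. The sketch below is not the NSSCT: it has no shearing or directional filters and works on a 1-D signal. It only shows a non-subsampled ("à trous") scale decomposition, in which every band keeps the full input length, so shifting the input simply shifts each band. The `[1, 2, 1] / 4` kernel, the circular boundary handling, and all function names are assumptions made for illustration and do not come from the patent.

```python
def atrous_smooth(signal, level):
    """Low-pass filter with the [1, 2, 1] / 4 kernel dilated by 2**level.

    The kernel and the circular (wrap-around) boundary are illustrative
    assumptions, not values taken from the patent.
    """
    n = len(signal)
    step = 2 ** level
    return [
        (signal[(i - step) % n] + 2 * signal[i] + signal[(i + step) % n]) / 4
        for i in range(n)
    ]


def nonsubsampled_decompose(signal, levels):
    """Return (detail_bands, approximation) without any downsampling.

    Each detail band is the difference between successive smoothings,
    so every band has the same length as the input.
    """
    approx = list(signal)
    details = []
    for level in range(levels):
        smooth = atrous_smooth(approx, level)
        details.append([a - s for a, s in zip(approx, smooth)])
        approx = smooth
    return details, approx


def reconstruct(details, approx):
    """Invert the decomposition by adding the detail bands back."""
    out = list(approx)
    for band in details:
        out = [o + d for o, d in zip(out, band)]
    return out


def circular_shift(signal, k):
    """Shift a sequence to the right by k samples with wrap-around."""
    k %= len(signal)
    return signal[-k:] + signal[:-k] if k else list(signal)
```

Under these assumptions, decomposing a shifted signal gives the same bands as shifting the bands of the original signal, and summing the bands recovers the input. A decimated (subsampled) decomposition generally loses the first property, which is the usual motivation for non-subsampled transforms in image fusion.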
