A method for flexible scheduling of GPU resources based on heterogeneous application platforms

A scheduling method for application platforms, applied in the fields of resource allocation, multiprogramming arrangements, and inter-program communication. It addresses the problems of inconsistent GPU resource scheduling information, scheduling that is confined to the inside of a single platform, and resource occupation conflicts, with the aim of maximizing GPU resource utilization.

Embodiment Construction

[0038] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

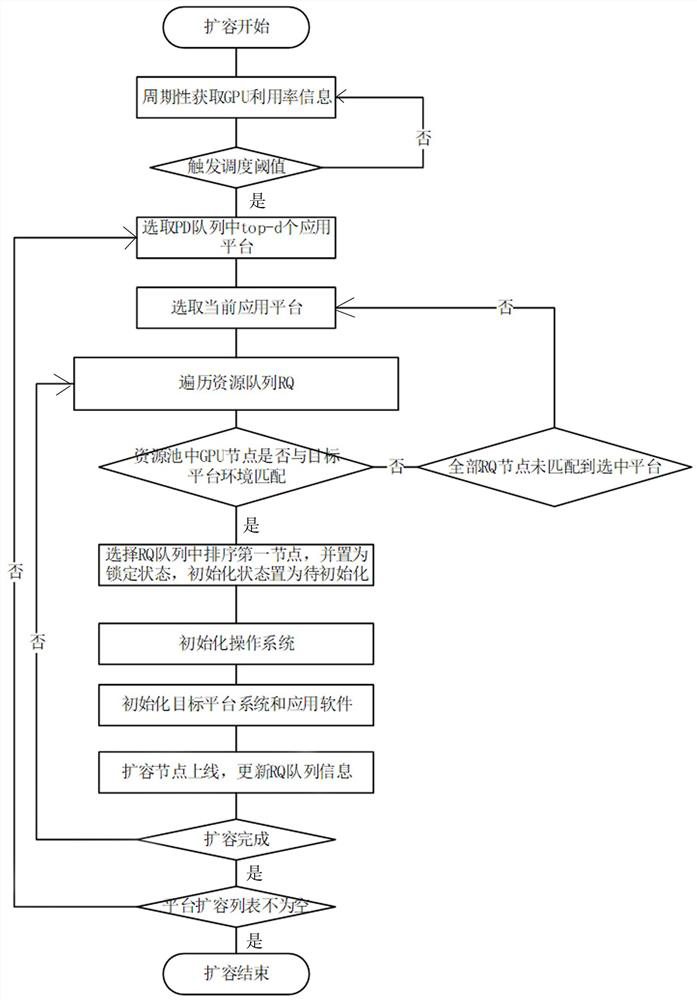

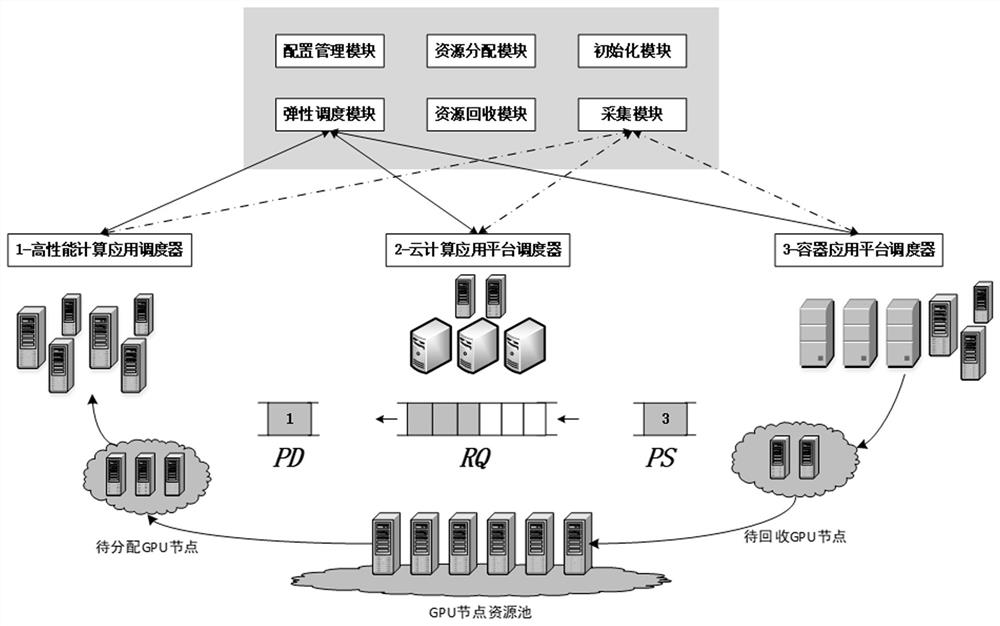

[0039] Figure 1 is a schematic diagram of the general flexible scheduling of GPU resources in the present invention. There are three application platforms: a high-performance computing application platform, a cloud computing application platform, and a container application platform, with identification IDs 1, 2, and 3 respectively. In addition, there is a public GPU node resource pool used for flexible scheduling and dynamic scaling. The core platform for elastic resource scaling is mainly composed of a configuration management module, an elastic scheduling module, a resource allocation module, a resource recycling module, an initialization module, and a collection module. The specific functions of each module are as follows:

[0040] The configuration management module is used to configure the management platform scheduling i...
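The layout described in [0039] (three platforms with IDs 1, 2, and 3 sharing one public GPU node pool) can be pictured with a minimal Python sketch. This is an illustration under stated assumptions, not the patented implementation: the names `PlatformId`, `GpuPool`, `allocate`, and `recycle`, the node names, and the rule of granting nodes in sorted name order are all hypothetical. The text names resource allocation and resource recycling modules, but their actual behavior is not given in this excerpt.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict, List, Set


class PlatformId(IntEnum):
    """Platform identification IDs as listed in [0039]."""
    HPC = 1        # high-performance computing application platform
    CLOUD = 2      # cloud computing application platform
    CONTAINER = 3  # container application platform


@dataclass
class GpuPool:
    """Hypothetical model of the public GPU node resource pool."""
    free_nodes: Set[str] = field(default_factory=set)
    assigned: Dict[str, PlatformId] = field(default_factory=dict)

    def allocate(self, platform: PlatformId, count: int) -> List[str]:
        """Move `count` free nodes to `platform` (assumed policy: sorted by name)."""
        if count > len(self.free_nodes):
            raise ValueError("not enough free GPU nodes in the public pool")
        granted = sorted(self.free_nodes)[:count]
        for node in granted:
            self.free_nodes.remove(node)
            self.assigned[node] = platform
        return granted

    def recycle(self, node: str) -> PlatformId:
        """Return an assigned node to the public pool; report its previous owner."""
        platform = self.assigned.pop(node)  # raises KeyError if node is not assigned
        self.free_nodes.add(node)
        return platform


# Usage: scale the cloud platform out by two nodes, then reclaim one of them.
pool = GpuPool(free_nodes={"gpu-a", "gpu-b", "gpu-c"})
granted = pool.allocate(PlatformId.CLOUD, 2)
previous_owner = pool.recycle(granted[0])
```

Keeping a single record of which platform holds each node is one way to avoid the inconsistent scheduling information and occupation conflicts that the method sets out to solve; whether the invention tracks ownership this way is an assumption.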
