A method for automatic robotic workpiece grabbing based on RGB-D images and a CAD model

An RGB image and robotics technique, applied in the field of automatic robotic workpiece grabbing based on RGB-D images and CAD models, which addresses the problem of low grabbing accuracy and achieves fast computation, high accuracy, and good matching results.

Embodiment Construction

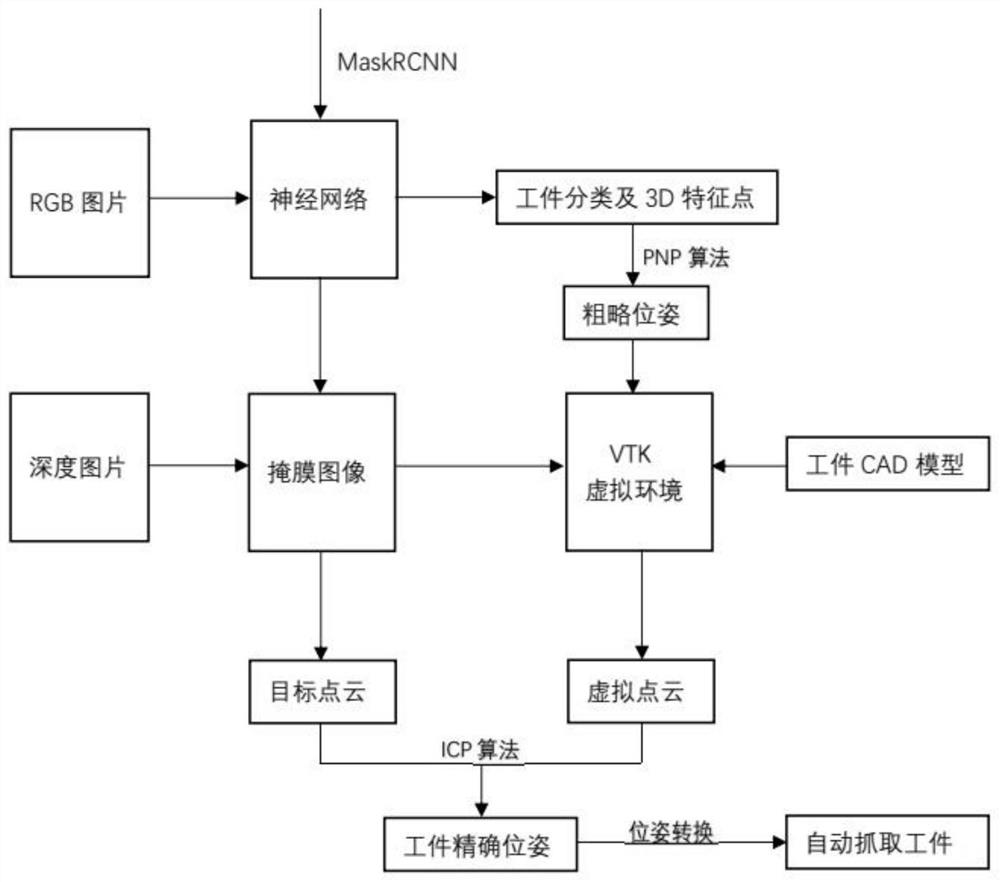

[0014] The method of the present invention is divided into three stages: workpiece image instance segmentation, point cloud matching, and robot grabbing. The specific steps are as follows.

[0015] 1. Data collection:

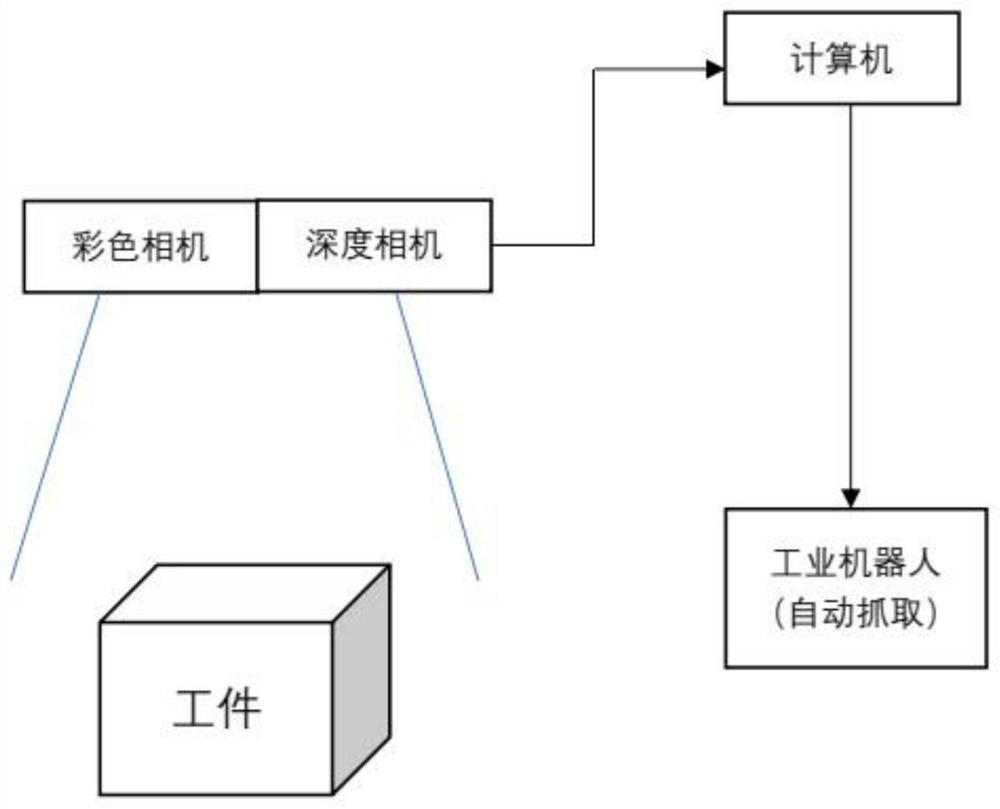

[0016] 1-1. Capture the workpiece with the color camera and the depth camera, taking multiple images to build a data set, and obtain RGB image I and depth image I of the workpiece (each a set of multiple images).

[0017] 1-2. From the relative position of the two cameras, calculate the homography matrix of the perspective transformation between the two cameras. RGB image I and depth image I are transformed through this homography matrix to obtain the aligned RGB image I and depth image I.
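As an illustration of step 1-2, the following is a minimal sketch of warping a depth image into the RGB camera's pixel frame with a known 3x3 homography. The function name `warp_with_homography`, the example matrix `H`, and the toy image are hypothetical and not taken from the patent. The sketch assumes the homography has already been computed from the camera geometry, and it uses nearest-neighbour sampling so that depth values are not blended across object edges. Note that a single homography aligns two views exactly only for points on one plane, so this should be read as an approximation under that assumption.

```python
import numpy as np

def warp_with_homography(image, H, out_shape, fill=0):
    """Warp `image` into a target frame of size `out_shape` (rows, cols).

    H maps source pixel coordinates (x, y, 1) to target pixel coordinates.
    Each target pixel is mapped back through H^-1 and filled with the
    nearest source pixel; pixels that fall outside the source get `fill`.
    """
    out_h, out_w = out_shape
    H_inv = np.linalg.inv(H)

    # Homogeneous coordinates (x, y, 1) of every target pixel.
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    target = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])

    # Back-project to the source image and dehomogenise.
    src = H_inv @ target
    src_x = np.rint(src[0] / src[2]).astype(int)
    src_y = np.rint(src[1] / src[2]).astype(int)

    # Keep only target pixels whose source location lies inside the image.
    valid = (
        (src_x >= 0) & (src_x < image.shape[1])
        & (src_y >= 0) & (src_y < image.shape[0])
    )

    out = np.full((out_h * out_w,) + image.shape[2:], fill, dtype=image.dtype)
    out[valid] = image[src_y[valid], src_x[valid]]
    return out.reshape((out_h, out_w) + image.shape[2:])

# Toy example: a hypothetical homography that shifts the depth image
# 2 pixels right and 1 pixel down to line it up with the RGB frame.
H = np.array([[1.0, 0.0, 2.0],
              [0.0, 1.0, 1.0],
              [0.0, 0.0, 1.0]])
depth = np.zeros((4, 5), dtype=np.uint16)
depth[1, 1] = 7  # a single depth reading at (x=1, y=1)

aligned_depth = warp_with_homography(depth, H, out_shape=(4, 5))
```

In this toy case the reading at (x=1, y=1) lands at (x=3, y=2) in the aligned image. The same function can be applied to a colour image, since any trailing channel axis is carried through unchanged.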

[0018] 1-3. Adjust the aligned RGB image I and depth image I to the same size to obtain RGB image II and depth image II.
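Step 1-3 can be sketched in the same spirit. The patent does not state the target size or the interpolation method, so both are assumptions here: the helper `resize_nearest` and the 2x3 target size are hypothetical, and nearest-neighbour sampling is chosen because it keeps every output depth value equal to a value that was actually measured.

```python
import numpy as np

def resize_nearest(image, out_h, out_w):
    """Resize `image` to (out_h, out_w) by nearest-neighbour sampling.

    Works for single-channel (H, W) and multi-channel (H, W, C) arrays.
    """
    in_h, in_w = image.shape[:2]
    # For each output row/column, pick the source row/column it falls in.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols]

# Toy stand-ins for the aligned RGB image I and depth image I.
rgb_1 = np.arange(4 * 6 * 3, dtype=np.uint8).reshape(4, 6, 3)
depth_1 = np.arange(4 * 6, dtype=np.uint16).reshape(4, 6)

# Bring both to one common (hypothetical) size to get image II.
rgb_2 = resize_nearest(rgb_1, 2, 3)
depth_2 = resize_nearest(depth_1, 2, 3)
```

Using the same index arithmetic for both images keeps the pixel correspondence established by the alignment step, which a later point cloud stage would rely on.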

[0019] 2. Send the obtained RGB im...
