Cache elimination method and system

A caching and cached-data technology, applied in the field of data processing, with the effect of improving accuracy

Examples

Embodiment 1

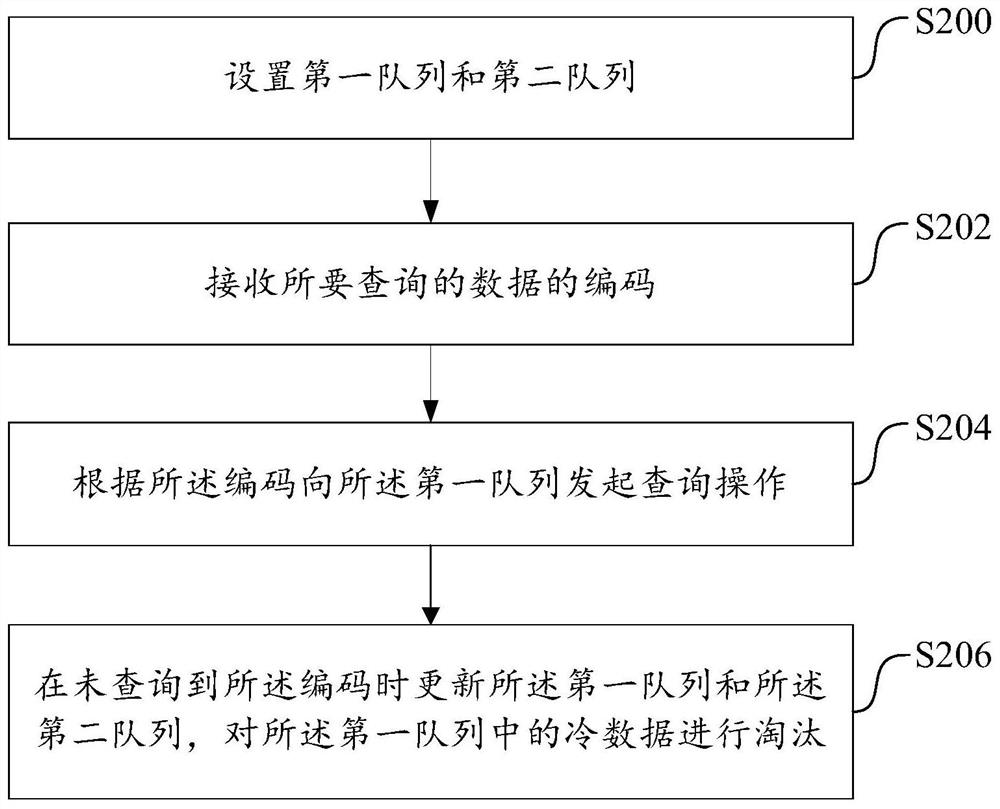

[0049] Figure 2 is a flowchart of the cache elimination method proposed in the first embodiment of the present application. It should be understood that the flowchart in this method embodiment does not limit the order in which the steps are executed. Steps may also be added to or removed from the flowchart as needed.

[0050] The method includes the following steps:

[0051] S200, set a first queue and a second queue.

[0052] In this embodiment, the first queue maintains the cached data and the unique code (Key) corresponding to each data item, and the second queue maintains the code and the queries-per-second rate (QPS) of each data item. Caching data locally or in the service (the first queue) allows requests to be answered quickly.
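The text states that the second queue holds a per-key query rate but does not say how that rate is measured. The following is a minimal sketch of one possible approach, a sliding one-second window of timestamps per key. The class name `QpsTracker`, its methods, and the windowing scheme are all assumptions made for illustration and are not taken from the application.

```python
from collections import defaultdict, deque


class QpsTracker:
    """Per-key query rate over a sliding window (hypothetical helper)."""

    def __init__(self, window_seconds=1.0):
        self.window_seconds = window_seconds
        # key -> timestamps (in seconds) of recent queries, oldest first
        self._hits = defaultdict(deque)

    def record(self, key, now):
        """Register one query for `key` at time `now` (in seconds)."""
        self._hits[key].append(now)

    def qps(self, key, now):
        """Return queries per second for `key` in the window ending at `now`."""
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the window.
        while hits and hits[0] <= now - self.window_seconds:
            hits.popleft()
        return len(hits) / self.window_seconds
```

Timestamps are passed in explicitly rather than read from a clock, which keeps the sketch deterministic; a caller could supply `time.monotonic()` in practice.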

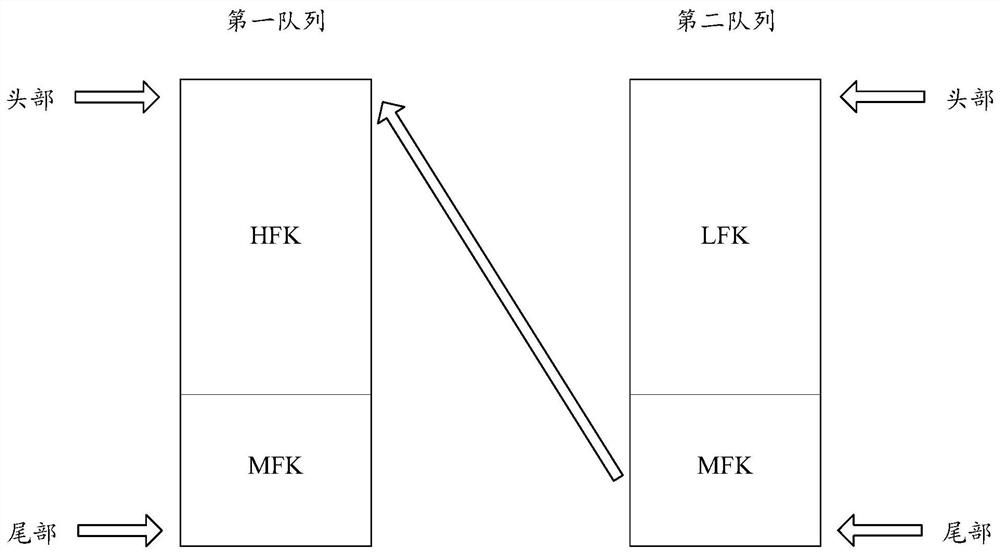

[0053] Figure 3 is a schematic diagram of the first queue and the second queue. The first queue and the second queue are both LRU queues. When inserting (Insert), data will be pl...
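To make the two-queue arrangement concrete, here is a minimal sketch of a generic LRU queue built on Python's `collections.OrderedDict`, instantiated once for each queue. The class `LruQueue`, its method names, the capacities, and the choice to keep the most recently used entry at the end of the ordering are assumptions for illustration. The application's own insertion and eviction rules are cut off in the text above and are not reproduced here.

```python
from collections import OrderedDict


class LruQueue:
    """Fixed-capacity LRU queue (illustrative, not the patented structure)."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        # Ordered oldest -> most recently used (an assumed convention).
        self._items = OrderedDict()

    def insert(self, key, value):
        """Insert or update `key`; return the evicted (key, value) or None."""
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            # Evict the least recently used entry.
            return self._items.popitem(last=False)
        return None

    def get(self, key, default=None):
        """Return the value for `key` and mark it as most recently used."""
        if key not in self._items:
            return default
        self._items.move_to_end(key)
        return self._items[key]

    def keys(self):
        """Keys ordered from least to most recently used."""
        return list(self._items)


# Assumed roles, following the description of the two queues:
first_queue = LruQueue(capacity=2)   # key -> cached data
second_queue = LruQueue(capacity=2)  # key -> queries-per-second rate
```

Both queues share the same key space, so a key evicted from one can be looked up in the other when deciding what to do next; how the application combines them is not shown in the available text.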

Embodiment 2

[0072] Figure 5 is a schematic diagram of the hardware architecture of an electronic device 2 provided for the third embodiment of the present application. In this embodiment, the electronic device 2 may include, but is not limited to, a memory 21, a processor 22, and a network interface 23 that can be communicatively connected to each other through a system bus. It should be noted that Figure 5 shows only the electronic device 2 with components 21-23; not all of the shown components are required, and more or fewer components may be implemented instead.

[0073] The memory 21 includes at least one type of readable storage medium, and the readable storage medium includes flash memory, hard disk, multimedia card, card-type memory (for example, SD or DX memory, etc.), random access memory (RAM), static random access memory (SRAM), read-only memory (ROM), Electrically Erasable Programmable Read Only M...

Embodiment 3

[0077] Figure 6 is a schematic block diagram of a cache elimination system 60 provided for the third embodiment of the present application. The cache elimination system 60 may be divided into one or more program modules, which are stored in a storage medium and executed by one or more processors to carry out the embodiments of the present application. A program module, as used in the embodiments of the present application, is a series of computer program instruction segments capable of performing a specific function. The following description introduces the function of each program module in this embodiment.

[0078] In this embodiment, the cache elimination system 60 includes:

[0079] The setting module 600 is used for setting the first queue and the second queue.

[0080] In this embodiment, the first queue maintains the cached data and the unique code corresponding to each data item, a...
