Multi-modal medical image fusion method

A medical image fusion technology, applied in the field of image fusion, that enhances self-learning ability and avoids information loss


Embodiment Construction

[0032] The present invention is further described below with reference to the drawings and embodiments of the specification.

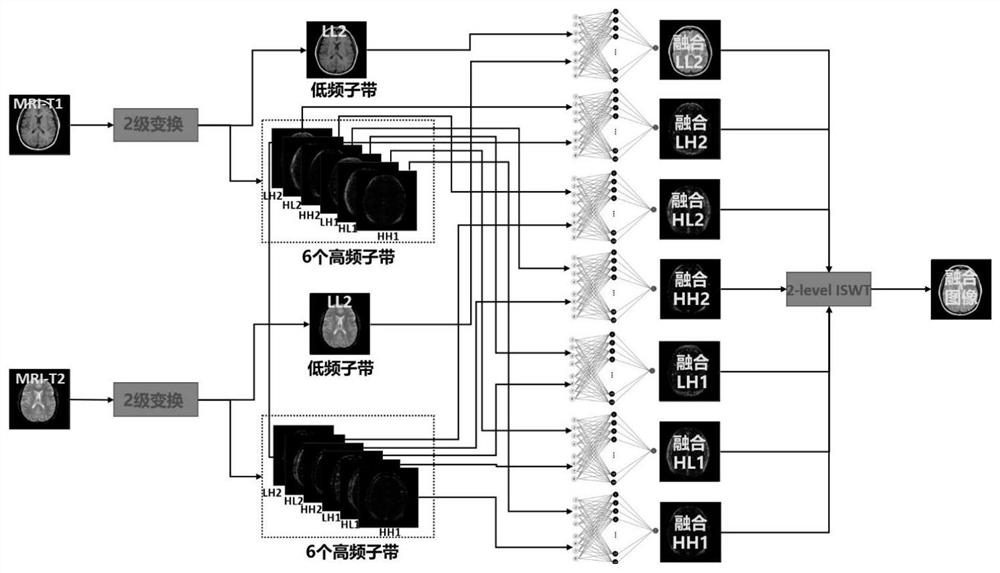

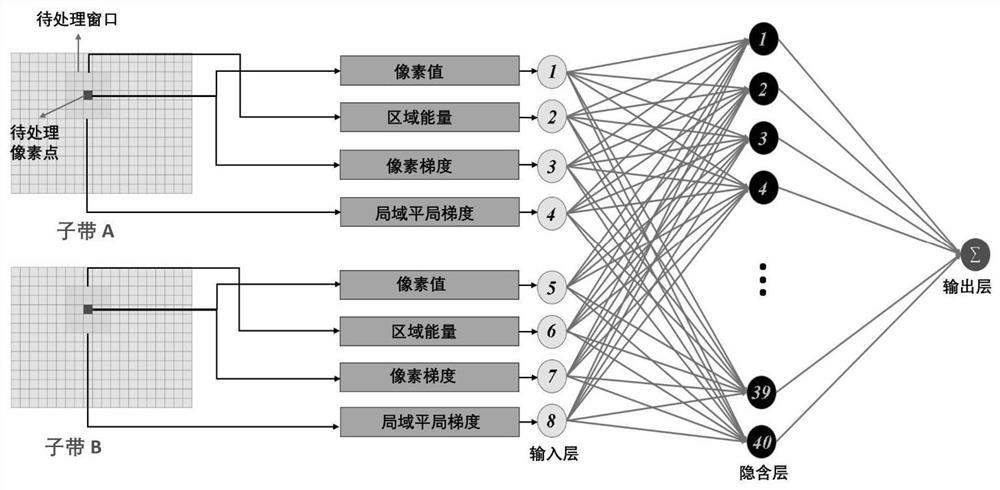

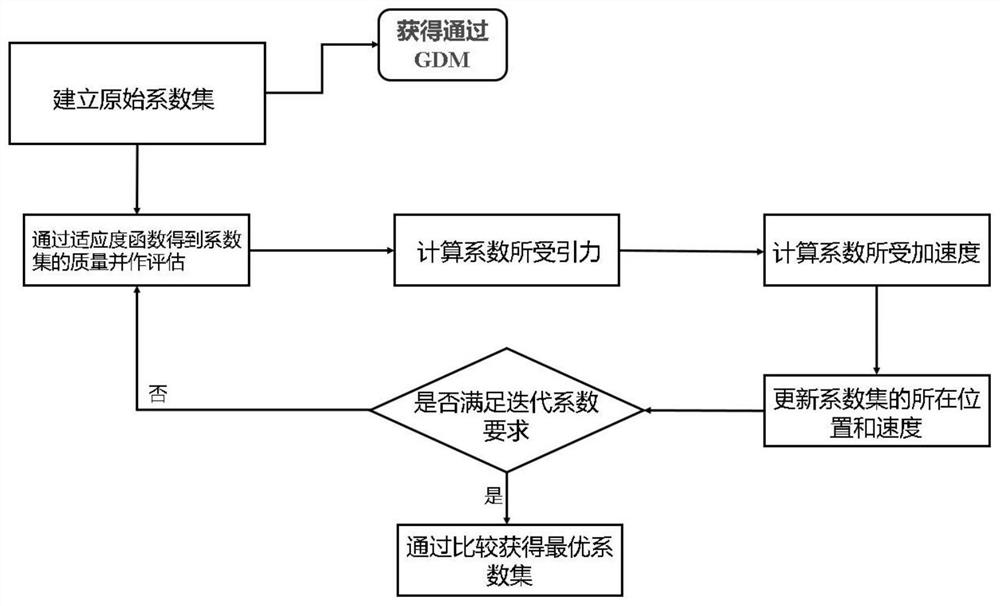

[0033] As shown in Figures 1-3, the present invention is a new framework (D-ERBFNN) for medical image fusion based on the discrete stationary wavelet transform and an enhanced radial basis function neural network. First, taking into account translation invariance and computational cost, the discrete stationary wavelet transform is used as the multi-scale transform operator. A two-level wavelet decomposition yields 14 subbands (seven per source image: one approximation subband plus three detail subbands at each of the two levels), which represent different information features of the two source images to be fused. Because no up-sampling or down-sampling is involved, information loss is avoided as far as possible. Then, for each corresponding pair of subbands, taking full account of both the pixel characteristics and the contextual features between pixels, the feature information accurate to the point level is substituted i...
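The two-level stationary wavelet decomposition described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: it assumes an orthonormal Haar wavelet with periodic boundary handling (the excerpt names neither the wavelet nor the boundary mode), and the function names `swt2_haar`, `iswt2_haar` and `subbands` are hypothetical. It shows why two levels give seven full-size subbands per image (14 for a pair of source images) and that the undecimated transform can be inverted exactly, which is the sense in which information loss is avoided.

```python
import numpy as np

# Assumption: orthonormal Haar taps; the source does not specify the wavelet.
_S = 1.0 / np.sqrt(2.0)


def _analyze(x, step, axis, sign):
    """Undecimated Haar filtering along one axis with periodic extension.

    sign=+1 gives the low-pass output (x[n] + x[n+step]) / sqrt(2);
    sign=-1 gives the high-pass output (x[n] - x[n+step]) / sqrt(2).
    """
    return _S * (x + sign * np.roll(x, -step, axis=axis))


def swt2_haar(image, levels=2):
    """Hypothetical stationary (undecimated) 2-D Haar transform, a trous style.

    Returns (approx, details), where details[j] = (LH, HL, HH) for level j+1.
    Every subband keeps the input shape because nothing is down-sampled;
    instead the filter taps are spread 2**j samples apart at level j+1.
    """
    approx = np.asarray(image, dtype=float)
    details = []
    for j in range(levels):
        step = 2 ** j
        lo = _analyze(approx, step, axis=1, sign=+1)  # low-pass within each row
        hi = _analyze(approx, step, axis=1, sign=-1)  # high-pass within each row
        ll = _analyze(lo, step, axis=0, sign=+1)      # then filter each column
        lh = _analyze(lo, step, axis=0, sign=-1)
        hl = _analyze(hi, step, axis=0, sign=+1)
        hh = _analyze(hi, step, axis=0, sign=-1)
        details.append((lh, hl, hh))
        approx = ll  # the next level decomposes the approximation further
    return approx, details


def iswt2_haar(approx, details):
    """Exact inverse of swt2_haar: per axis, x[n] = (lo[n] + hi[n]) / sqrt(2)."""
    a = approx
    for lh, hl, hh in reversed(details):
        lo = _S * (a + lh)   # undo the column filtering of the row low-pass part
        hi = _S * (hl + hh)  # undo the column filtering of the row high-pass part
        a = _S * (lo + hi)   # undo the row filtering
    return a


def subbands(image, levels=2):
    """Flatten the decomposition: 1 approximation + 3 details per level."""
    approx, details = swt2_haar(image, levels)
    return [approx] + [band for level in details for band in level]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mri = rng.random((64, 64))  # random stand-ins for two registered source images
    pet = rng.random((64, 64))
    bands = subbands(mri) + subbands(pet)
    print(len(bands))                # 14
    print({b.shape for b in bands})  # {(64, 64)}
```

Each corresponding pair of subbands (for example, the level-1 HH bands of the two images) has identical shape, so a fusion rule can be applied pixel by pixel. In practice, a library routine such as PyWavelets' `pywt.swt2` would typically replace this hand-written Haar version.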

