Human body action mapping method and device, computer equipment and storage medium

A human body motion mapping method in the field of automation technology. It addresses the complex and time-consuming calculations that a robot needs in order to follow human motion, and achieves lower computational complexity, faster processing, and a higher calculation frequency.

Examples

Embodiment 1

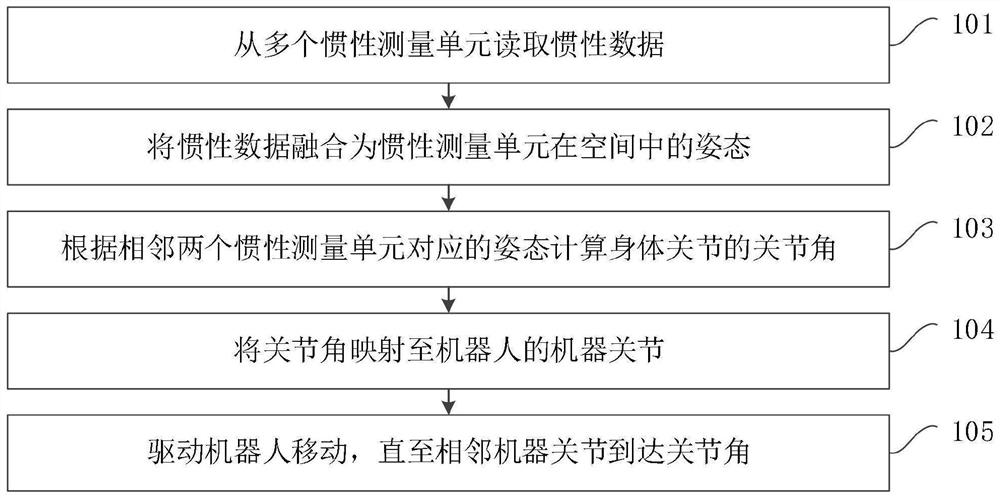

[0035] Figure 1 is a flowchart of a human motion mapping method provided by Embodiment 1 of the present invention. This embodiment applies where IMUs capture the motion of joints specified by the user, the postures of those joints are calculated, and the postures are mapped to the machine joints of a robot in real time. The method can be performed by a human body motion mapping device, which can be implemented in software and/or hardware and configured in a computer device such as a personal computer, server, or workstation. The method specifically includes the following steps:

[0036] Step 101, read inertial data from multiple inertial measurement units.

[0037] In this embodiment, a plurality of inertial measurement units can be pre-configured. An inertial measurement unit is a sensor, or a collection of sensors, that measures the data generated when its carrier moves (i.e., inertial data). Inertial measurement units include the following types:

[0038] 1. Six-axi...
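
Step 101 only requires that raw readings be collected from each unit. The following is a minimal sketch of one possible data structure for six-axis readings (3-axis accelerometer plus 3-axis gyroscope). The raw tuple layout, the `InertialSample` type, and `read_inertial_data` are hypothetical illustrations, not taken from the patent; real IMUs define their own packet formats.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]

@dataclass(frozen=True)
class InertialSample:
    """One reading from a six-axis IMU (3-axis accelerometer + 3-axis gyroscope)."""
    imu_id: int          # hypothetical identifier of the IMU on the body
    timestamp_s: float   # sample time in seconds
    accel_mps2: Vec3     # linear acceleration, m/s^2
    gyro_rads: Vec3      # angular velocity, rad/s

def read_inertial_data(raw_frames):
    """Convert raw tuples (imu_id, t, ax, ay, az, gx, gy, gz) into the newest sample per IMU.

    The raw tuple layout is an assumption made for this sketch.
    """
    latest = {}
    for imu_id, t, ax, ay, az, gx, gy, gz in raw_frames:
        sample = InertialSample(imu_id, t, (ax, ay, az), (gx, gy, gz))
        prev = latest.get(imu_id)
        # Keep only the most recent reading for each IMU, even if frames arrive out of order.
        if prev is None or sample.timestamp_s >= prev.timestamp_s:
            latest[imu_id] = sample
    return latest
```

Keying the result by IMU identifier lets later steps look up the two units on either side of a joint directly.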

Embodiment 2

[0137] Figure 3 is a flowchart of a human body motion mapping method provided by Embodiment 2 of the present invention. This embodiment builds on the foregoing embodiment and further adds operations for reproducing human body motions in virtual reality. The method specifically includes the following steps:

[0138] Step 301, read inertial data from multiple inertial measurement units.

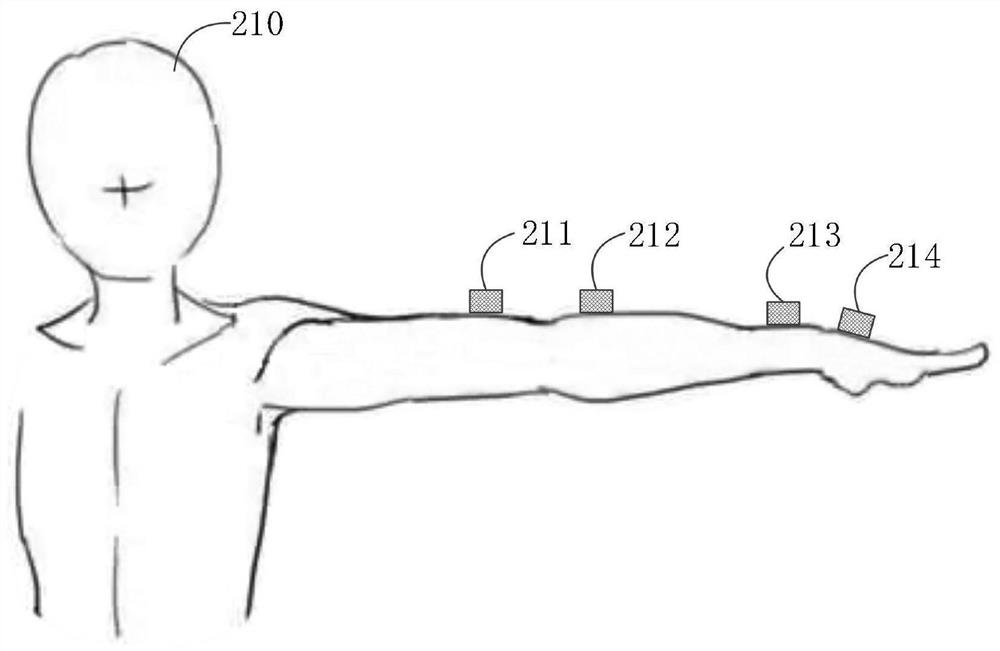

[0139] The plurality of inertial measurement units are mounted on body parts of the user, and each pair of adjacent inertial measurement units is separated by one of the user's body joints.
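
One way to represent this mounting rule, assuming the IMUs are listed in order along a limb chain, is to derive each joint's (parent IMU, child IMU) pair from adjacent entries. The segment and joint names below are hypothetical examples, not taken from the patent.

```python
# Hypothetical example: IMUs listed in order along one arm, from the torso outward.
ARM_IMUS = ["upper_arm", "forearm", "hand"]
# Hypothetical joints, each lying between two consecutive IMUs.
ARM_JOINTS = ["elbow", "wrist"]

def joint_pairs(imus, joints):
    """Return (joint, parent_imu, child_imu) for every joint between two adjacent IMUs."""
    if len(joints) != len(imus) - 1:
        raise ValueError("each joint must lie between exactly two adjacent IMUs")
    return [(joint, imus[i], imus[i + 1]) for i, joint in enumerate(joints)]

print(joint_pairs(ARM_IMUS, ARM_JOINTS))
# [('elbow', 'upper_arm', 'forearm'), ('wrist', 'forearm', 'hand')]
```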

[0140] Step 302, fuse the inertial data into the attitude of each inertial measurement unit in space.
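
The text here does not specify the fusion algorithm. Purely as an illustration, a complementary filter is one common way to fuse gyroscope and accelerometer data into roll and pitch; it is swapped in here as an assumption and is not presented as the patent's method. The sketch uses the small-angle approximation that the body rates gx and gy equal the roll and pitch rates, and it cannot observe yaw, which would need a magnetometer or another heading reference.

```python
import math

def accel_tilt(ax, ay, az):
    """Roll and pitch (rad) implied by the gravity vector measured by the accelerometer."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch

def complementary_update(roll, pitch, gyro, accel, dt, alpha=0.98):
    """One complementary-filter step fusing gyroscope and accelerometer data.

    gyro = (gx, gy, gz) in rad/s, accel = (ax, ay, az) in m/s^2, dt in seconds.
    alpha weights the integrated gyroscope estimate; (1 - alpha) weights the
    accelerometer tilt, which slowly corrects gyroscope drift.
    Assumes small angles, so body rates gx, gy approximate roll/pitch rates.
    """
    gx, gy, _ = gyro
    acc_roll, acc_pitch = accel_tilt(*accel)
    roll = alpha * (roll + gx * dt) + (1 - alpha) * acc_roll
    pitch = alpha * (pitch + gy * dt) + (1 - alpha) * acc_pitch
    return roll, pitch
```

A larger `alpha` trusts the gyroscope more between samples. Quaternion-based filters such as the Madgwick or Mahony filters avoid the Euler-angle approximation and are common in practice.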

[0141] Step 303, load a business scenario.

[0142] Step 304, in the business scenario, generate a virtual part matching the body part according to the pose.

[0143] In some business scenarios, a 3D engine can be started, such as the Unity engine, Unreal Engine, the Cocos2dx engine, or a self-developed engine, ...
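
Whatever engine is used, generating a virtual part from a pose reduces to rotating the part's rest-pose bone vector by the fused orientation. The engine-agnostic sketch below assumes orientations are unit quaternions (w, x, y, z) and that each virtual part is a rigid segment; `place_virtual_part` and the bone vector are hypothetical. 3D engines provide their own quaternion types for the same operation.

```python
import math

def quat_rotate(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * (q_vec x v)
    tx = 2 * (y * vz - z * vy)
    ty = 2 * (z * vx - x * vz)
    tz = 2 * (x * vy - y * vx)
    # v' = v + w * t + q_vec x t
    return (vx + w * tx + (y * tz - z * ty),
            vy + w * ty + (z * tx - x * tz),
            vz + w * tz + (x * ty - y * tx))

def place_virtual_part(origin, q, rest_vector):
    """Endpoint of a virtual limb segment attached at origin and posed by orientation q."""
    d = quat_rotate(q, rest_vector)
    return tuple(o + c for o, c in zip(origin, d))

# Usage: a hypothetical 0.3 m forearm rotated 90 degrees about the z axis.
half = math.radians(90) / 2
q_z90 = (math.cos(half), 0.0, 0.0, math.sin(half))
print(place_virtual_part((0.0, 0.0, 1.0), q_z90, (0.3, 0.0, 0.0)))
```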

Embodiment 3

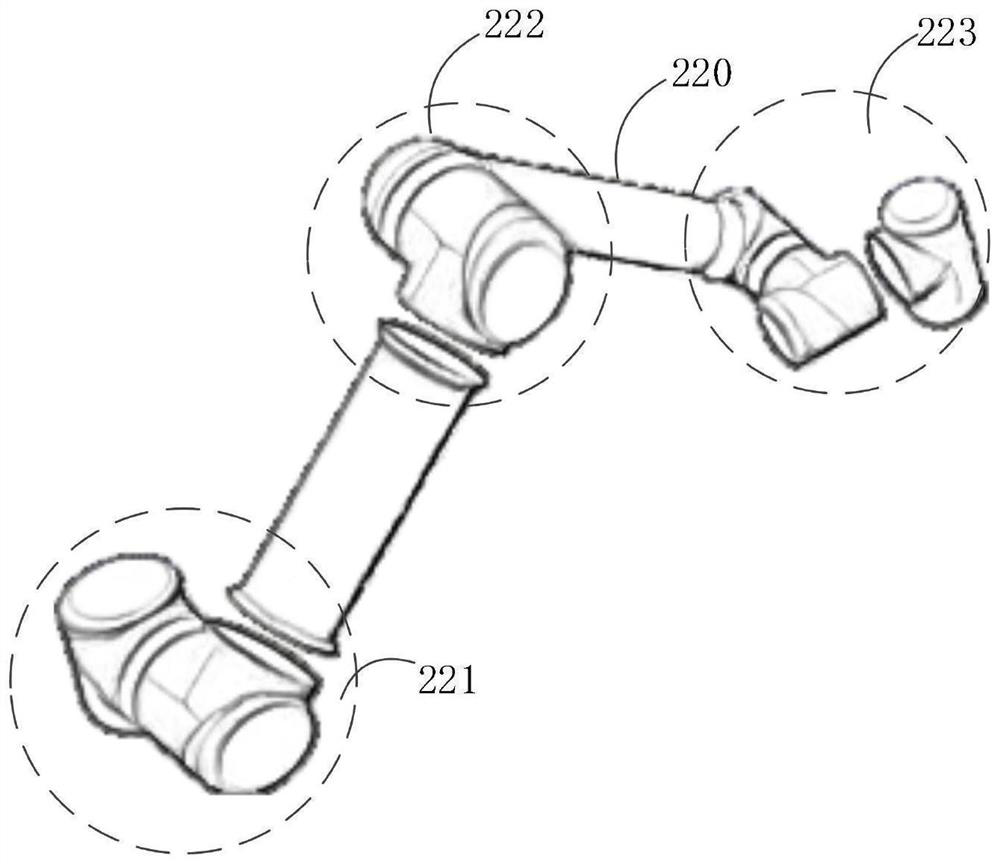

[0170] Figure 4 is a structural block diagram of a human body action mapping device provided in Embodiment 3 of the present invention. The device may specifically include the following modules:

[0171] The inertial data reading module 401 is configured to read inertial data from a plurality of inertial measurement units. The inertial measurement units are mounted on body parts of the user, and each pair of adjacent inertial measurement units is separated by one of the user's body joints;

[0172] An attitude fusion module 402, configured to fuse the inertial data into the attitude of each inertial measurement unit in space;

[0173] A joint angle calculation module 403, configured to calculate the joint angles of the body joints according to the postures corresponding to two adjacent inertial measurement units;
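
A minimal sketch of the joint angle calculation, assuming each IMU's attitude is a unit quaternion (w, x, y, z) in a common world frame and that sensor-to-segment alignment has already been calibrated. The relative rotation from the parent segment to the child segment is conj(q_parent) * q_child; its total angle is 2 * acos(|w|), and for a single-axis joint the signed angle about a known hinge axis can be taken from the twist component. The function names and the hinge-axis input are assumptions, not details from the patent.

```python
import math

def quat_conj(q):
    """Conjugate of q = (w, x, y, z); equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def quat_mul(a, b):
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)

def relative_rotation(q_parent, q_child):
    """Child segment orientation expressed in the parent segment's frame."""
    return quat_mul(quat_conj(q_parent), q_child)

def joint_angle(q_parent, q_child):
    """Total rotation angle (rad, 0..pi) between two adjacent IMU attitudes."""
    w = abs(relative_rotation(q_parent, q_child)[0])  # |w| picks the shorter rotation
    return 2.0 * math.acos(min(1.0, w))               # clamp guards against rounding above 1

def hinge_angle(q_parent, q_child, axis):
    """Signed angle (rad) about a unit hinge axis, from the twist part of the relative rotation."""
    w, x, y, z = relative_rotation(q_parent, q_child)
    p = x * axis[0] + y * axis[1] + z * axis[2]
    angle = 2.0 * math.atan2(p, w)
    # Wrap into (-pi, pi]; q and -q describe the same rotation.
    if angle > math.pi:
        angle -= 2.0 * math.pi
    elif angle <= -math.pi:
        angle += 2.0 * math.pi
    return angle
```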

[0174] A joint angle mapping module 404, configured to map the joint angles to machine joints of the robot;
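
Mapping usually has to account for differences between the human joint and the robot's machine joint. The sketch below assumes a per-joint linear mapping (a direction sign plus a zero offset) followed by clamping to the robot's mechanical limits; `RobotJointSpec` and its fields are hypothetical calibration parameters, not part of the patent.

```python
from dataclasses import dataclass

@dataclass
class RobotJointSpec:
    """Hypothetical calibration for one machine joint of the robot."""
    name: str
    lower_rad: float          # mechanical lower limit
    upper_rad: float          # mechanical upper limit
    offset_rad: float = 0.0   # zero-position difference between human and robot joint
    direction: int = 1        # -1 if the robot axis turns opposite to the human joint

def map_joint_angle(human_rad, spec):
    """Map a human joint angle to a robot joint command, clamped to the joint limits."""
    target = spec.direction * human_rad + spec.offset_rad
    return max(spec.lower_rad, min(spec.upper_rad, target))
```

Clamping keeps a command inside the robot's range even when the human joint moves beyond it.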

[0175] The robot driving module 405 is co...
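
The way modules 401 to 405 could be chained in one update cycle is sketched below. Each stage is injected as a callable so that the IMU driver, fusion filter, and robot interface can be swapped independently. The class, its parameters, and the `drive` callback (standing in for the robot driving module 405, whose description is truncated here) are hypothetical.

```python
class MappingPipeline:
    """Hypothetical wiring of modules 401-405; each stage is an injected callable."""

    def __init__(self, read, fuse, joint_angle, map_angle, drive, joints):
        self.read = read                # 401: returns {imu_id: inertial data}
        self.fuse = fuse                # 402: inertial data -> attitude
        self.joint_angle = joint_angle  # 403: (parent_attitude, child_attitude) -> angle
        self.map_angle = map_angle      # 404: (joint_name, angle) -> robot joint command
        self.drive = drive              # 405 (assumed): sends {joint_name: command} to the robot
        self.joints = joints            # [(joint_name, parent_imu, child_imu), ...]

    def step(self):
        """Run one read -> fuse -> joint angle -> map -> drive cycle."""
        raw = self.read()
        poses = {imu: self.fuse(data) for imu, data in raw.items()}
        commands = {}
        for name, parent, child in self.joints:
            angle = self.joint_angle(poses[parent], poses[child])
            commands[name] = self.map_angle(name, angle)
        self.drive(commands)
        return commands
```

Calling `step()` once per IMU sampling period yields one set of robot joint commands per cycle.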
