Limb movement and language factor matching method and device for virtual image

The technology relates to avatars (virtual images) and their body movements and is applied in the field of data processing. It addresses problems such as mismatch between movement and language, inconsistent expression, and poor synchronization between language and movement, so that the avatar's language and movement are synchronized and its expression is consistent.

Examples

Embodiment 1

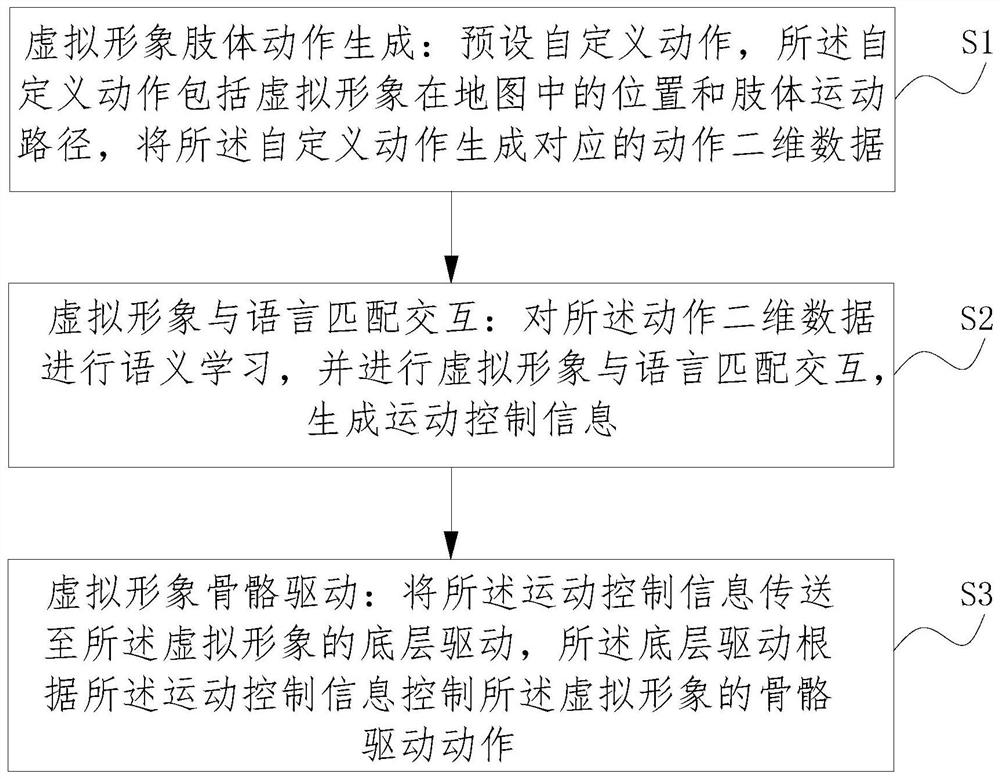

[0036] Referring to Figure 1, a body movement and language factor matching method for an avatar is provided, comprising the following steps:

[0037] S1. Generation of body movements of the avatar: preset custom actions, where each custom action includes the position of the avatar on the map and the movement path of the body, and generate the corresponding two-dimensional action data for the custom actions;

[0038] S2. Avatar and language matching interaction: perform semantic learning on the two-dimensional action data, and perform avatar and language matching interaction to generate motion control information;

[0039] S3. Avatar skeletal drive: transmit the motion control information to the underlying driver of the avatar, and the underlying driver controls the skeletal drive action of the avatar according to the motion control information.
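Steps S1 to S3 can be pictured as a small pipeline. The following is a minimal sketch under stated assumptions, not the implementation described in the patent: the names `CustomAction`, `generate_action_data`, `match_language`, and `SkeletonDriver` are hypothetical, and the semantic learning of step S2 is replaced by a plain keyword lookup purely for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CustomAction:
    """S1 input: a preset custom action (hypothetical structure)."""
    name: str
    map_position: tuple[float, float]      # avatar position on the map
    limb_path: list[tuple[float, float]]   # 2D movement path, relative to the position


def generate_action_data(action: CustomAction) -> list[tuple[float, float]]:
    """S1: turn a custom action into two-dimensional action data (map-space points)."""
    ox, oy = action.map_position
    return [(ox + dx, oy + dy) for dx, dy in action.limb_path]


def match_language(utterance: str, library: dict[str, CustomAction]) -> Optional[dict]:
    """S2 stand-in: keyword lookup instead of learned semantic matching (assumption)."""
    text = utterance.lower()
    for keyword, action in library.items():
        if keyword in text:
            # The returned dict plays the role of the motion control information.
            return {"action": action.name, "points": generate_action_data(action)}
    return None


@dataclass
class SkeletonDriver:
    """S3 stand-in for the underlying driver; it only records what it receives."""
    played: list[dict] = field(default_factory=list)

    def drive(self, control: dict) -> None:
        self.played.append(control)


library = {"hello": CustomAction("wave", (10.0, 5.0), [(0.0, 0.0), (1.0, 2.0), (0.0, 0.0)])}
driver = SkeletonDriver()
control = match_language("Hello there!", library)
if control is not None:
    driver.drive(control)
```

In this sketch the motion control information is just a dictionary holding the action name and its map-space points; a real system would presumably carry timing and skeletal targets as well, which the source does not specify.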

[0040] Specifically, the skeleton driving includes data storage, image display and frame animation processing. According to the received differe...

Embodiment 2

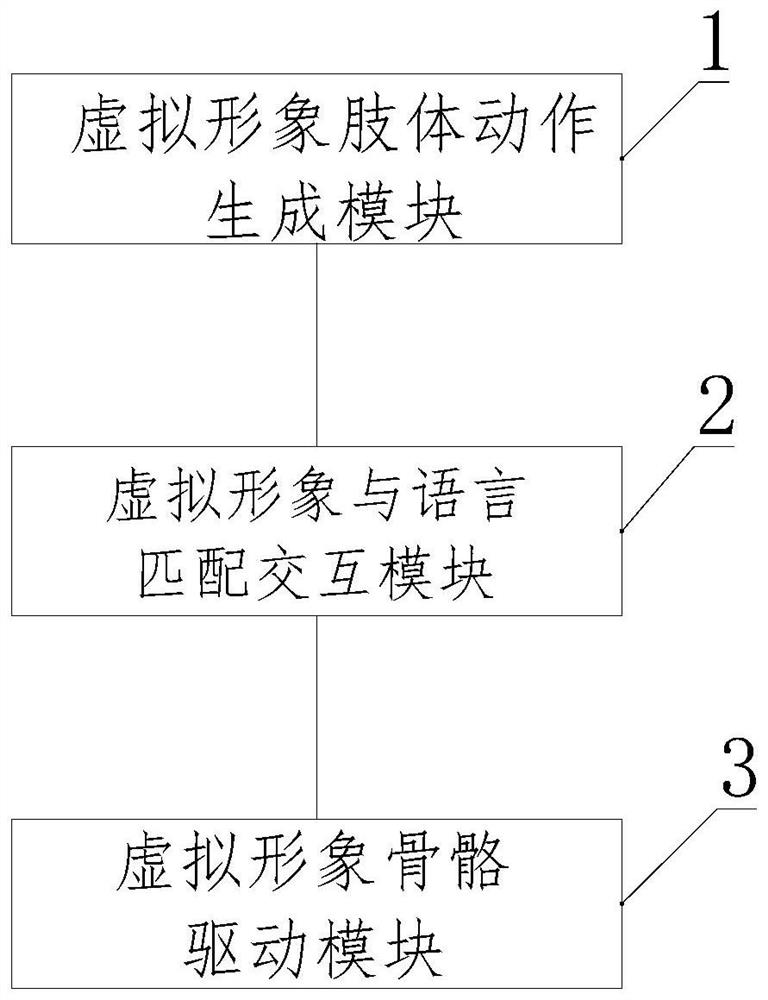

[0062] Referring to Figure 2, the present invention also provides a body movement and language factor matching device for an avatar, which uses the body movement and language factor matching method for an avatar of Embodiment 1 and includes:

[0063] The avatar body movement generation module 1 is used to preset custom actions, where each custom action includes the position of the avatar on the map and the movement path of the limbs, and to generate the corresponding two-dimensional action data for the custom actions;

[0064] The avatar and language matching interaction module 2 is used for performing semantic learning on the two-dimensional action data, and performing avatar and language matching interaction to generate motion control information;

[0065] The avatar skeleton driving module 3 is configured to transmit the motion control information to the underlying driver of the avatar, and the underlying driver controls the skeleton driving action of the avatar according to the m...
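One way to picture the device is as three modules wired in sequence, each with a single responsibility. The sketch below is an illustration under assumptions only: the class name `MatchingDevice`, its fields, and the toy callables passed to it are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class MatchingDevice:
    # Module 1: custom action -> two-dimensional action data
    generate: Callable[[Any], Any]
    # Module 2: (utterance, action data) -> motion control information
    match: Callable[[str, Any], Any]
    # Module 3: forwards motion control information to the underlying driver
    drive: Callable[[Any], None]

    def run(self, utterance: str, custom_action: Any) -> Any:
        """Pass data through modules 1, 2, and 3 in order."""
        data = self.generate(custom_action)
        control = self.match(utterance, data)
        self.drive(control)
        return control


sent = []  # stands in for the avatar's underlying driver
device = MatchingDevice(
    generate=lambda action: [(x, y) for x, y in action["path"]],
    match=lambda text, data: {"utterance": text, "frames": data},
    drive=sent.append,
)
result = device.run("hello", {"path": [(0, 0), (1, 2)]})
```

Injecting the modules as callables keeps each one replaceable, for example swapping the toy `match` for a learned model, without changing how the device chains them.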

Embodiment 3

[0076] Embodiment 3 of the present invention provides a computer-readable storage medium. The computer-readable storage medium stores program code for the body movement and language factor matching method for an avatar, and the program code includes instructions for implementing the method of Embodiment 1 or any possible implementation thereof.

[0077] The computer-readable storage medium may be any available medium that can be accessed by a computer, or a data storage device, such as a server or a data center, that integrates one or more available media. The available medium may be a magnetic medium (for example, a floppy disk, a hard disk, or a magnetic tape), an optical medium (for example, a DVD), or a semiconductor medium (for example, a solid state disk (SSD)).

