Task configuration method for heterogeneous distributed machine learning cluster

A machine learning and task configuration technology, applied in the field of physics, that addresses the high time overhead of configuring tasks and the low utilization of node resources, achieving strong adaptability and high model accuracy.


Embodiment Construction

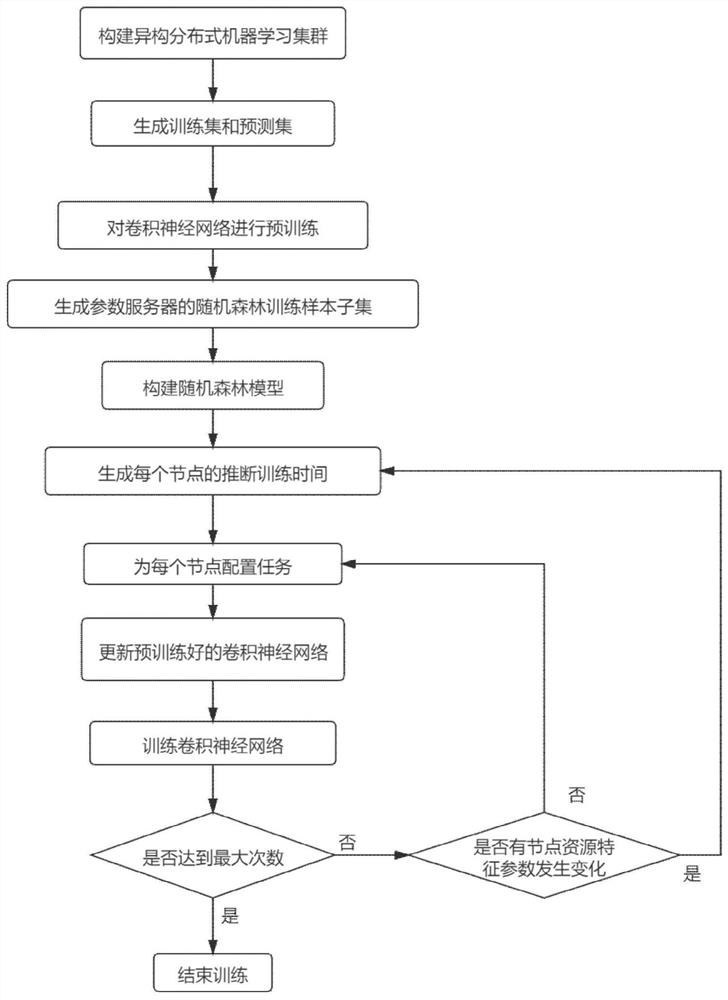

[0042] The invention is further described below with reference to Figure 1.

[0043] Referring to Figure 1, the specific steps for implementing the present invention are described further.

[0044] Step 1: build a heterogeneous distributed machine learning cluster.

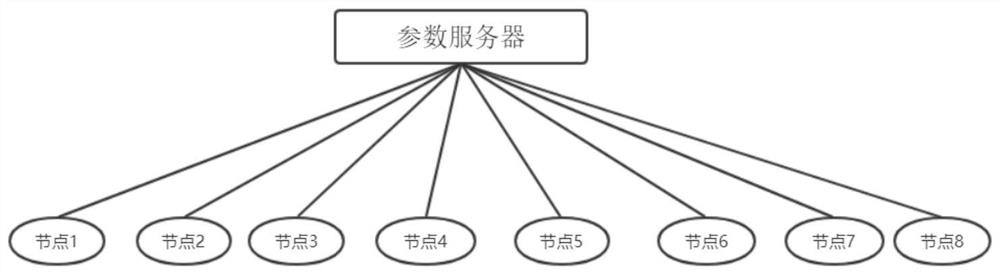

[0045] A heterogeneous distributed machine learning cluster is formed from one parameter server and at least four nodes.

[0046] Referring to Figure 2, the heterogeneous distributed machine learning cluster constructed in the embodiment of the present invention, which consists of one parameter server and eight nodes, is further described.
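The cluster structure of Step 1 can be modelled as a small data structure. This is a minimal sketch, not the patented method: it only encodes the two constraints stated in the text (one parameter server, at least four nodes) and builds the eight-node embodiment. The `Node.relative_speed` field, the node and server names, and the speed values are hypothetical, introduced only to make the heterogeneity of the nodes explicit.

```python
from dataclasses import dataclass, field
from typing import List

MIN_NODES = 4  # [0045]: the cluster has at least four nodes


@dataclass(frozen=True)
class Node:
    """A worker node. Both fields are hypothetical, for illustration only."""
    name: str
    relative_speed: float  # hypothetical measure of a node's compute capability


@dataclass
class Cluster:
    """One parameter server plus a list of (possibly heterogeneous) nodes."""
    parameter_server: str
    nodes: List[Node] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Enforce the minimum node count stated in [0045].
        if len(self.nodes) < MIN_NODES:
            raise ValueError(
                f"cluster needs at least {MIN_NODES} nodes, got {len(self.nodes)}"
            )

    def is_heterogeneous(self) -> bool:
        # Heterogeneous here simply means: not all nodes have the same speed.
        return len({n.relative_speed for n in self.nodes}) > 1


def build_embodiment_cluster() -> Cluster:
    """Build the eight-node embodiment of [0046]; speed values are made up."""
    speeds = [1.0, 1.0, 1.5, 1.5, 2.0, 2.0, 3.0, 3.0]
    nodes = [Node(f"node-{i}", s) for i, s in enumerate(speeds, start=1)]
    return Cluster(parameter_server="ps-0", nodes=nodes)


if __name__ == "__main__":
    cluster = build_embodiment_cluster()
    print(len(cluster.nodes), cluster.is_heterogeneous())  # prints "8 True"
```

Validating the node count in `__post_init__` means a cluster that violates the "at least four nodes" condition cannot be constructed at all.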

[0047] Step 2: generate a training set and a prediction set.

[0048] The parameter server selects at least 10,000 images to form an image set, each image containing at least one target.

[0049] In the embodiment of the present invention, 20,000 images were selected from the open-source CIFAR-10 data set, each of which contains an airplane.
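The selection rule in [0048] and [0049] can be sketched as a filter followed by a split. The paragraph that describes how the training and prediction sets are actually formed is truncated, so the 80/20 split, the fixed shuffle seed, and the `ImageRecord` fields below are all assumptions for illustration; only the "at least 10,000 images" and "at least one target per image" conditions come from the text.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple

MIN_IMAGES = 10_000  # [0048]: the image set has at least 10,000 images


@dataclass(frozen=True)
class ImageRecord:
    """Hypothetical image record: an id and the number of annotated targets."""
    image_id: int
    num_targets: int


def select_image_set(candidates: Sequence[ImageRecord], count: int) -> List[ImageRecord]:
    """Keep only images with at least one target, then take `count` of them."""
    if count < MIN_IMAGES:
        raise ValueError(f"image set must have at least {MIN_IMAGES} images")
    eligible = [img for img in candidates if img.num_targets >= 1]
    if len(eligible) < count:
        raise ValueError(f"only {len(eligible)} eligible images, need {count}")
    return eligible[:count]


def split_sets(
    images: Sequence[ImageRecord], train_fraction: float = 0.8, seed: int = 0
) -> Tuple[List[ImageRecord], List[ImageRecord]]:
    """Shuffle and split into (training set, prediction set).

    The 0.8 default is an assumed ratio, not one given in the source.
    """
    shuffled = list(images)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    # Every third synthetic image has zero targets and is filtered out,
    # leaving exactly 20,000 eligible images, as in the embodiment.
    candidates = [ImageRecord(i, i % 3) for i in range(30_000)]
    selected = select_image_set(candidates, 20_000)
    train, pred = split_sets(selected)
    print(len(selected), len(train), len(pred))  # prints "20000 16000 4000"
```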

[0050] Each airplane image is marked, and the labels and gene...
