Human body posture recognition method based on convolutional neural network of covariance matrix transformation

The method combines a convolutional neural network with a covariance matrix transformation and is applied to human body posture recognition. It addresses the problems of degraded recognition accuracy and operation time, with the effect of widening the scope of use and reducing the decline in recognition accuracy.


Embodiment Construction

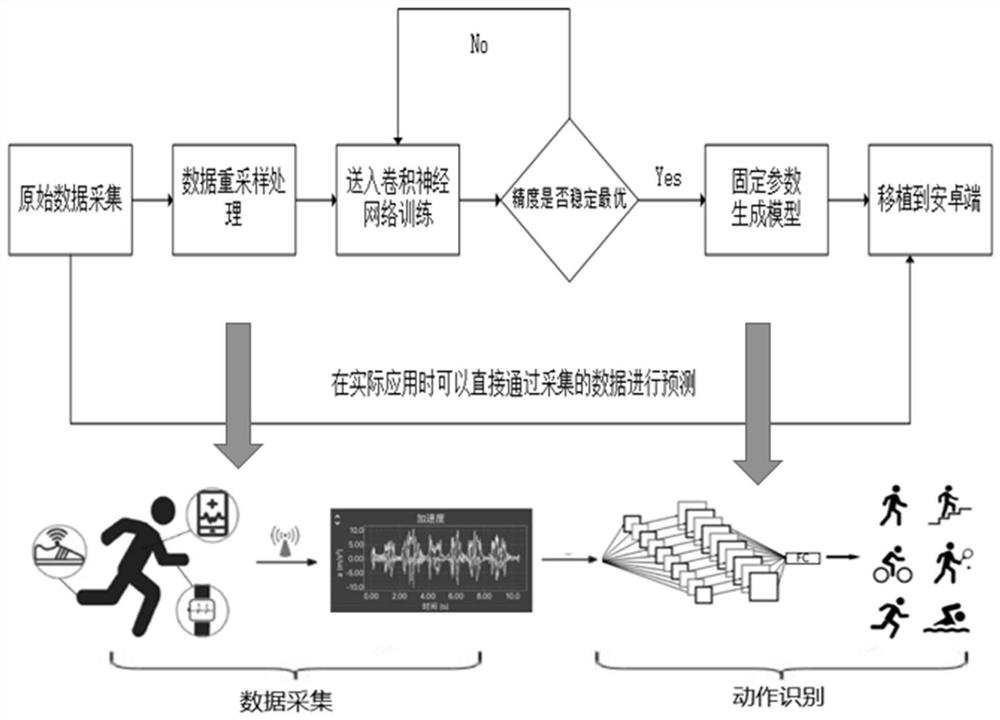

[0040] The data collection process and network construction of the present invention are described in further detail below with reference to the accompanying drawings and specific embodiments.

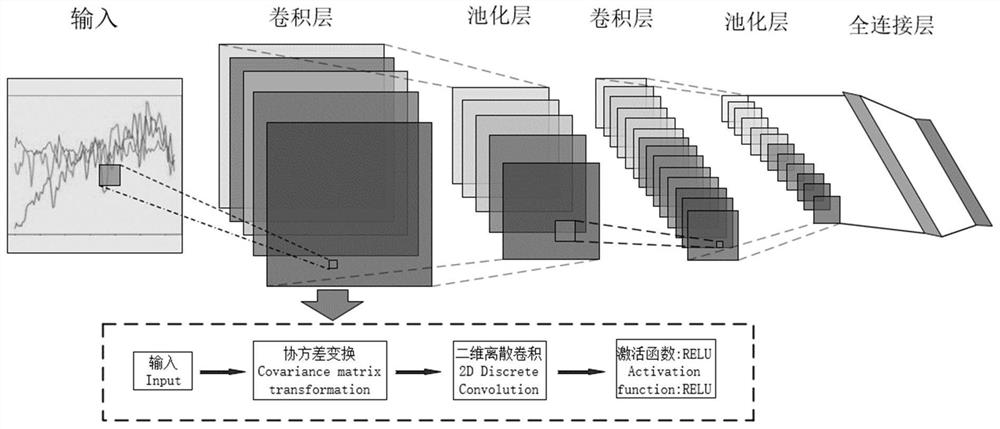

[0041] As shown in the flow chart, the present invention proposes a human body posture recognition method based on a convolutional neural network with covariance matrix transformation, comprising the following steps:

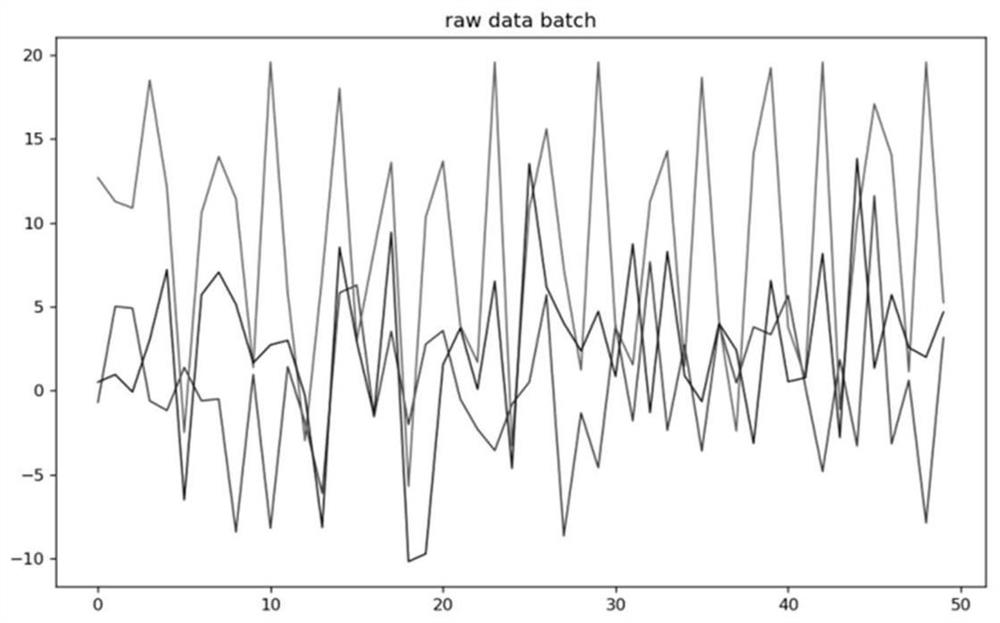

[0042] Step 1: All subjects use the same model of smartphone (iPhone 11). A smartphone is fixed above each of the left and right wrists in a phone pouch, and the subjects then perform daily actions (walking, running, going up and down stairs, jumping, etc.). The accelerometer and gyroscope in each smartphone record three-axis sensor data while the subject moves, and each data set comprises 300 movements collected in advance.
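The title names a covariance matrix transformation of the sensor data, but this excerpt does not spell out how it is computed. As a rough illustration only, the sketch below computes a sample covariance matrix over one window of six-channel readings (three accelerometer axes and three gyroscope axes from a single phone). The channel names, the window length of 128 samples, and the synthetic data are all assumptions made for the example, not values from the patent; with NumPy, `numpy.cov(window, rowvar=False)` would produce the same kind of matrix.

```python
import random

# Assumed channel layout for one wrist-worn phone (hypothetical naming):
# three accelerometer axes followed by three gyroscope axes.
CHANNELS = ["acc_x", "acc_y", "acc_z", "gyro_x", "gyro_y", "gyro_z"]


def covariance_matrix(window):
    """Return the sample covariance matrix of a window of sensor readings.

    `window` is a list of samples; each sample is a list with one value
    per channel. The result is a square list of lists (channels x channels).
    """
    n = len(window)
    if n < 2:
        raise ValueError("need at least two samples to estimate covariance")
    d = len(window[0])
    # Per-channel mean over the window.
    means = [sum(sample[c] for sample in window) / n for c in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i, d):
            s = sum(
                (sample[i] - means[i]) * (sample[j] - means[j])
                for sample in window
            )
            # Unbiased estimate (divide by n - 1); the matrix is symmetric.
            cov[i][j] = cov[j][i] = s / (n - 1)
    return cov


# Synthetic stand-in for one recorded movement (not real sensor data).
rng = random.Random(0)
window = [[rng.gauss(0.0, 1.0) for _ in CHANNELS] for _ in range(128)]

cov = covariance_matrix(window)
print(len(cov), len(cov[0]))  # 6 6
```

A fixed-size matrix like this could in principle be fed to a convolutional network as a small single-channel image regardless of how many samples the window holds; whether the patented method does exactly this is not stated in the excerpt.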

[0043] The data collection scheme is shown in the attached figure. The same subject has a smartphone in both left...
