Video natural language text retrieval method based on space time sequence characteristics

A video natural language text retrieval technology. It addresses the difficulty of accurately modeling the spatiotemporal semantic features of videos, which reduces the accuracy of retrieving videos with natural language text.

Embodiment Construction

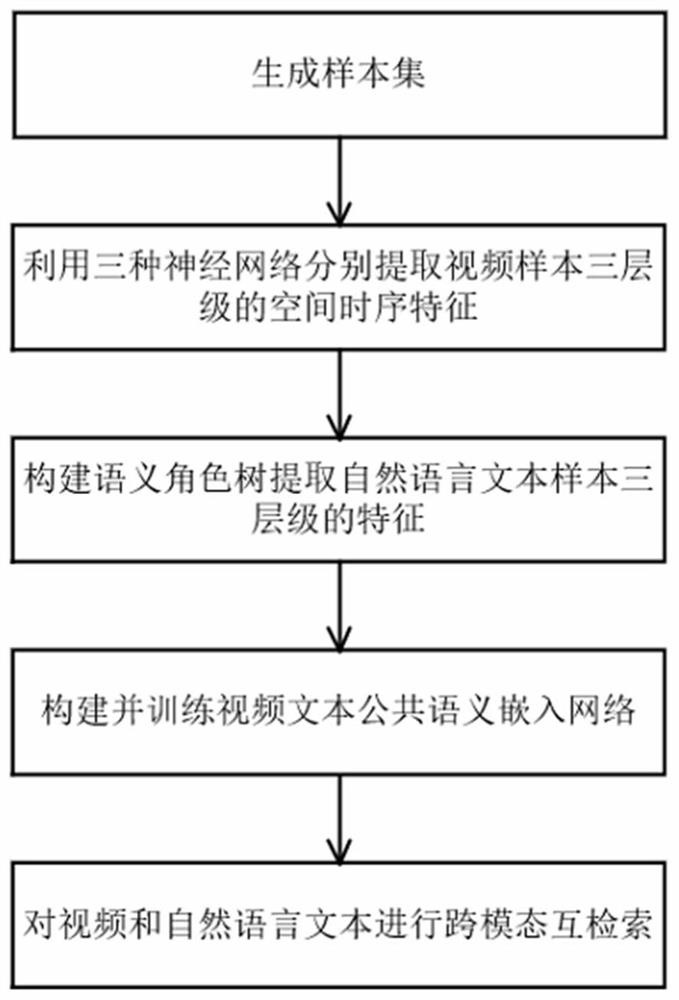

[0043] The present invention is described in further detail below with reference to Figure 1 and an embodiment.

[0044] Step 1, generate a sample set.

[0045] Select at least 6,000 multi-category dynamic behavior videos to be retrieved, together with their corresponding natural language text annotations, to form a sample set. Each video has at least 20 human-labeled natural language text annotations, and each annotation is no longer than 30 characters. The sample set therefore contains at least 6,000 × 20 = 120,000 video and natural language text pairs.
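The constraints in [0045] can be checked mechanically before training. The following is a minimal sketch, not the patent's implementation: the `VideoSample` type, the `count_pairs` function, and the constant names are hypothetical and introduced only for illustration, and annotation length is measured with Python's `len`, which is an assumption about how the 30-character limit is counted.

```python
from dataclasses import dataclass, field
from typing import List

# Thresholds taken from paragraph [0045]; the constant names are hypothetical.
MIN_VIDEOS = 6000
MIN_ANNOTATIONS_PER_VIDEO = 20
MAX_ANNOTATION_LENGTH = 30


@dataclass
class VideoSample:
    """One video and its human-written text annotations (hypothetical type)."""
    video_id: str
    annotations: List[str] = field(default_factory=list)


def count_pairs(samples: List[VideoSample]) -> int:
    """Validate the sample set and return the number of video-text pairs.

    Raises ValueError if any constraint from [0045] is violated.
    """
    if len(samples) < MIN_VIDEOS:
        raise ValueError(f"need at least {MIN_VIDEOS} videos, got {len(samples)}")

    pairs = 0
    for sample in samples:
        if len(sample.annotations) < MIN_ANNOTATIONS_PER_VIDEO:
            raise ValueError(f"{sample.video_id}: too few annotations")
        for text in sample.annotations:
            # Assumption: length is counted with len(), i.e. per code point.
            if len(text) > MAX_ANNOTATION_LENGTH:
                raise ValueError(f"{sample.video_id}: annotation too long")
        # Every (video, annotation) combination is one training pair.
        pairs += len(sample.annotations)
    return pairs
```

With exactly 6,000 videos and 20 annotations each, `count_pairs` returns the lower bound of 120,000 pairs stated in the text.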

[0046] Step 2, use three neural networks to extract three levels of spatiotemporal features from the video samples.

[0047] Input the videos in the sample set into the trained deep residual neural network ResNet-152 and extract the features of each frame image in each video. Average the image features over all frames of each video, and output the resulting 2048-dimensional frame-level feature as the first-level feature of the video.
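The averaging step in [0047] can be sketched as follows. This is an illustration only: it assumes the per-frame 2048-dimensional vectors have already been produced by ResNet-152 and are available as plain lists of floats, and the function name `mean_pool_frames` and its parameters are hypothetical.

```python
from typing import List, Sequence

# Dimension of the per-frame ResNet-152 feature, as stated in [0047].
FRAME_FEATURE_DIM = 2048


def mean_pool_frames(
    frame_features: Sequence[Sequence[float]],
    expected_dim: int = FRAME_FEATURE_DIM,
) -> List[float]:
    """Average per-frame feature vectors into one video-level vector.

    frame_features holds one vector per frame; each vector is assumed to be
    the output of the image network for that frame (not computed here).
    """
    if not frame_features:
        raise ValueError("a video must contain at least one frame")

    totals = [0.0] * expected_dim
    for vector in frame_features:
        if len(vector) != expected_dim:
            raise ValueError("frame feature has unexpected dimension")
        # Accumulate each component across frames.
        for i, value in enumerate(vector):
            totals[i] += value

    frame_count = len(frame_features)
    return [total / frame_count for total in totals]
```

For a video with three frames, `mean_pool_frames(frames)` returns a single 2048-element list regardless of the frame count, which is what lets videos of different lengths share one first-level feature size.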

[0048] Use the trained 3D conv...
