Mining area target identification method based on fusion of fisheye camera and laser radar

This technology combines a laser radar (lidar) with a fisheye camera and is applied in the field of environmental perception. It addresses incomplete sensor fusion, a large degree of information loss, and poor distortion-correction results. It reduces hardware cost, extracts features more completely, and improves recognition effectiveness.

Embodiment Construction

[0073] Specific embodiments of the present invention are described in detail below with reference to the accompanying drawings. It should be understood, however, that the scope of protection of the present invention is not limited to these embodiments.

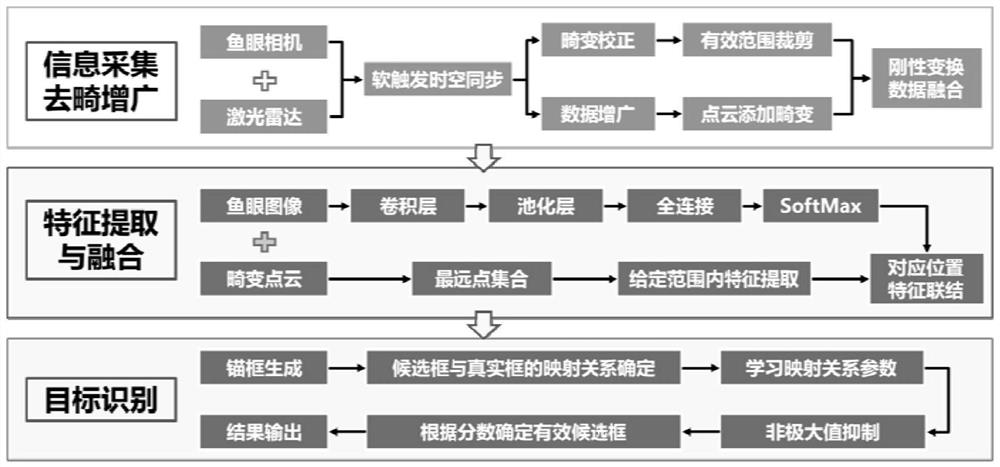

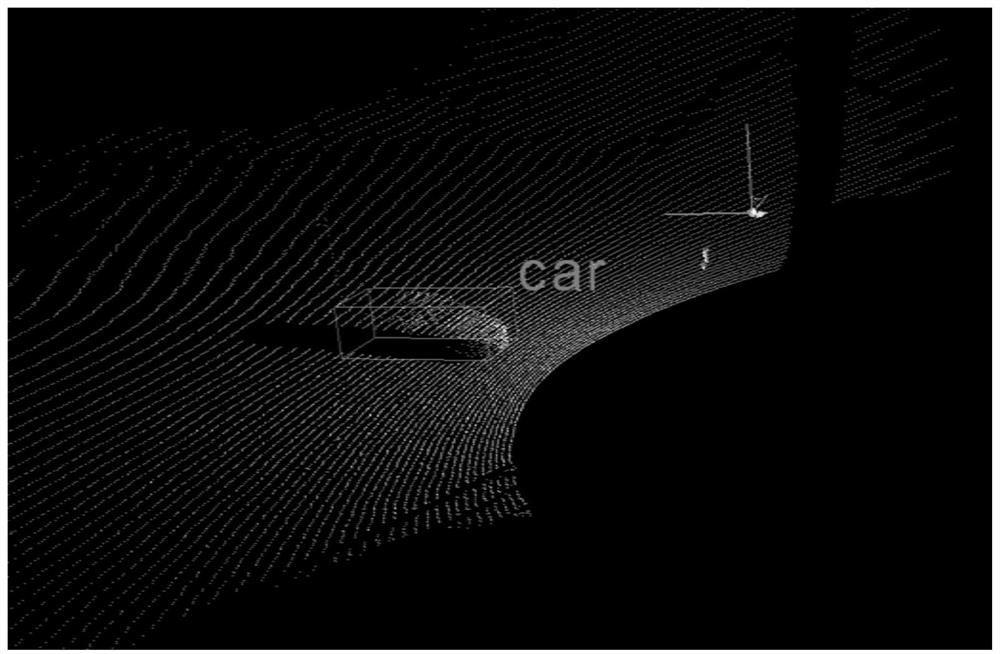

[0074] As shown in Figure 1, the present invention proposes a mining-area target recognition method based on the fusion of a fisheye camera and a lidar. The method comprises three steps: 1) information collection, distortion correction, and data augmentation; 2) feature extraction and fusion; 3) construction of a two-stage object recognition network. The steps run in series, and each step lays the foundation for the one that follows.
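Fusing the two sensors in step 2 first requires relating lidar points to fisheye pixels. The passage above does not say how this alignment is done, so the following is only a minimal sketch under stated assumptions: a calibrated lidar-to-camera extrinsic (`R`, `t`) and the Kannala-Brandt (equidistant polynomial) fisheye model used by OpenCV's `cv2.fisheye` module. The function name and parameters are hypothetical illustrations, not the patent's own method.

```python
import numpy as np


def project_lidar_to_fisheye(points_lidar, R, t, K, D):
    """Project N x 3 lidar points into fisheye pixel coordinates.

    Hypothetical sketch. Assumes:
      R (3x3), t (3,): lidar-to-camera extrinsic calibration,
      K (3x3): camera intrinsics (skew ignored),
      D (4,): Kannala-Brandt distortion coefficients k1..k4.
    Returns (uv, mask): pixel coordinates of the points in front of the
    camera, and the boolean mask selecting those points from the input.
    """
    pts_cam = points_lidar @ R.T + t        # lidar frame -> camera frame
    mask = pts_cam[:, 2] > 0                # keep only points in front of the lens
    X, Y, Z = pts_cam[mask].T
    r = np.hypot(X, Y)
    theta = np.arctan2(r, Z)                # angle between ray and optical axis
    k1, k2, k3, k4 = D
    t2 = theta ** 2
    theta_d = theta * (1 + k1 * t2 + k2 * t2**2 + k3 * t2**3 + k4 * t2**4)
    # theta_d / r tends to 1 / Z on the optical axis (r = 0); avoid dividing by zero
    scale = np.where(r > 1e-12, theta_d / np.maximum(r, 1e-12), 1.0 / Z)
    x, y = scale * X, scale * Y             # distorted normalized coordinates
    u = K[0, 0] * x + K[0, 2]
    v = K[1, 1] * y + K[1, 2]
    return np.stack([u, v], axis=1), mask
```

Points selected by `mask` can then be paired with the image features at `uv`, which is one common way to attach lidar depth to camera pixels before feature-level fusion.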

[0075] 1) Information collection, distortion correction and data augmentation:

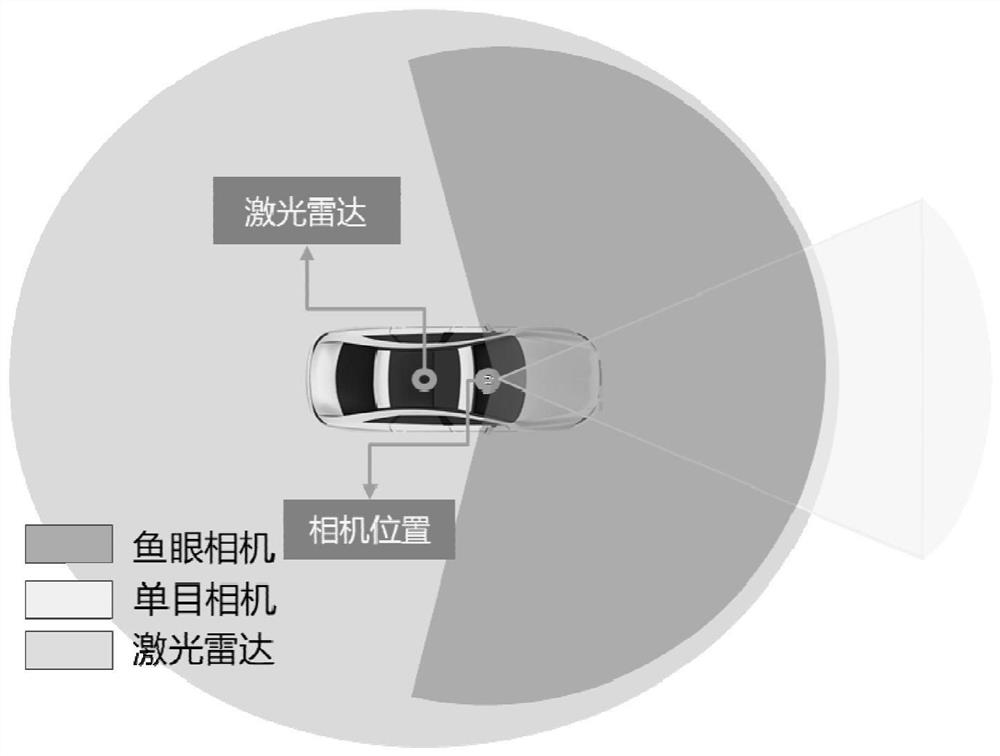

[0076] This step is divided into two branches, image acquisition with the fisheye camera and point-cloud acquisition with the lidar, which together obtain real-time environmental information during driving in the mining...
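The fisheye branch must be de-distorted before its pixels can be treated like ordinary pinhole-camera pixels. The text above does not specify the correction model, so the following is a minimal sketch assuming the Kannala-Brandt model (as in OpenCV's `cv2.fisheye.undistortPoints`). It inverts the distortion polynomial with Newton's method. The function name, the iteration count, and the choice to reuse `K` for the rectified image are all hypothetical.

```python
import numpy as np


def undistort_fisheye_points(uv, K, D, iters=10):
    """Map distorted fisheye pixels (N x 2) to undistorted pinhole pixels.

    Hypothetical sketch. Assumes the Kannala-Brandt model
        theta_d = theta * (1 + k1 theta^2 + k2 theta^4 + k3 theta^6 + k4 theta^8)
    and that the rectified image reuses the intrinsics K (skew ignored).
    Only valid for rays with theta < pi/2 (tan(theta) diverges at 90 degrees).
    """
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    xd = (uv[:, 0] - cx) / fx               # distorted normalized coordinates
    yd = (uv[:, 1] - cy) / fy
    theta_d = np.hypot(xd, yd)
    k1, k2, k3, k4 = D
    theta = theta_d.copy()                  # initial guess: no distortion
    for _ in range(iters):                  # Newton iterations solving for theta
        t2 = theta ** 2
        f = theta * (1 + k1 * t2 + k2 * t2**2 + k3 * t2**3 + k4 * t2**4) - theta_d
        df = 1 + 3 * k1 * t2 + 5 * k2 * t2**2 + 7 * k3 * t2**3 + 9 * k4 * t2**4
        theta = theta - f / df
    # Pinhole radius is tan(theta); the principal point maps to itself
    scale = np.where(theta_d > 1e-12, np.tan(theta) / np.maximum(theta_d, 1e-12), 1.0)
    xu, yu = xd * scale, yd * scale
    return np.stack([fx * xu + cx, fy * yu + cy], axis=1)
```

In practice, rays near 90 degrees from the optical axis are usually cropped from the rectified view or mapped to a cylindrical or spherical projection instead, because their pinhole coordinates grow without bound.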
