Data compression method, data compression system and operation method of deep learning acceleration chip

A deep learning acceleration chip technology, applied in neural learning methods, electrical digital data processing, digital data information retrieval, etc., that can solve problems with acceleration chips

Embodiment Construction

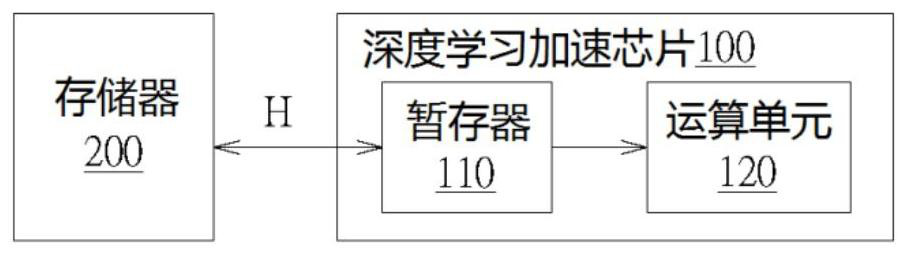

[0037] Refer to FIG. 1, which is a schematic diagram of the deep learning acceleration chip 100 according to an embodiment. During operation of the deep learning acceleration chip 100, an external memory 200 (e.g., DRAM) is required to store the trained filter coefficient tensor matrix H of the deep learning model. After the filter coefficient tensor matrix H is transferred to the temporary register 110, the operation unit 120 performs the operation.

[0038] The researchers found that, during operation of the deep learning acceleration chip 100, the most time-consuming and power-consuming step is transferring the filter coefficient tensor matrix H from the memory 200. Researchers are therefore working to reduce the amount of data transferred between the memory 200 and the deep learning acceleration chip 100, in order to increase the processing speed of the deep learning acceleration chip 100 and reduce its power consumption.
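
To make the transfer cost concrete, the following is a minimal back-of-the-envelope sketch, not the method of this application. The tensor shape, the 32-bit coefficient width, and the 1-byte compressed width are all assumed values chosen for illustration, and `transfer_bytes` is a hypothetical helper. It only shows how the volume moved from the memory 200 scales with the stored size of each coefficient of H.

```python
from math import prod


def transfer_bytes(shape, bytes_per_coeff):
    """Bytes moved from external memory for one full load of a tensor."""
    return prod(shape) * bytes_per_coeff


# Hypothetical filter coefficient tensor H: 64 filters, 3 channels, 3x3 kernels.
h_shape = (64, 3, 3, 3)

# Assumed: uncompressed coefficients are stored as 32-bit values (4 bytes each).
full = transfer_bytes(h_shape, bytes_per_coeff=4)

# Assumed: some compressed representation needs 1 byte per coefficient.
compressed = transfer_bytes(h_shape, bytes_per_coeff=1)

# Fraction of memory traffic avoided under these assumptions.
saved_fraction = 1 - compressed / full

print(full, compressed, saved_fraction)
```

Under these assumed numbers, any scheme that shrinks the stored form of H reduces the traffic between the memory 200 and the chip proportionally, which is the quantity paragraph [0038] identifies as the bottleneck.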

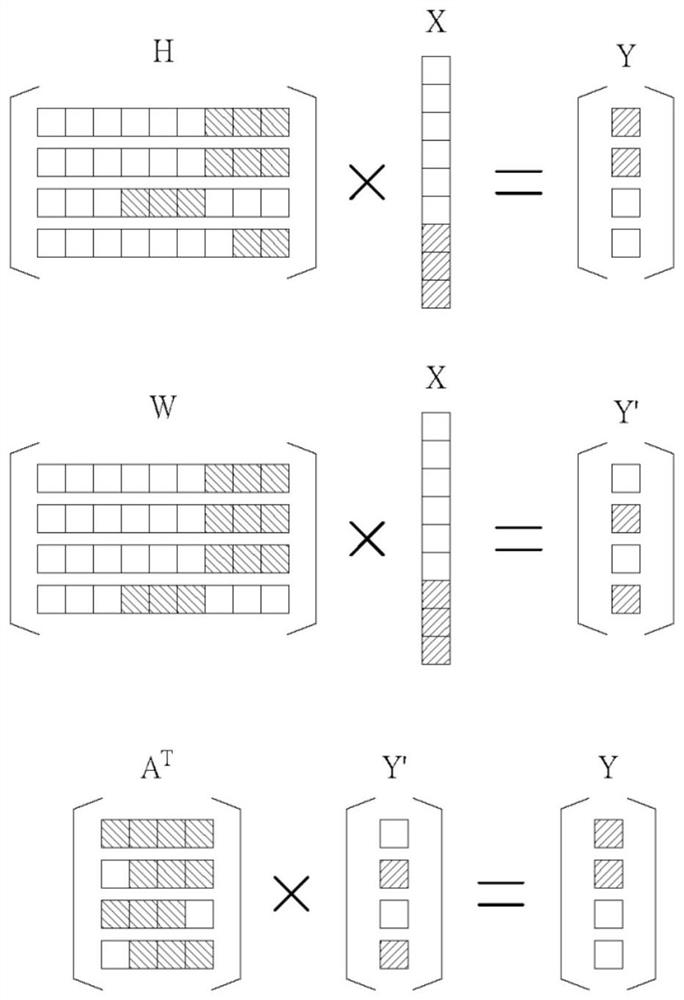

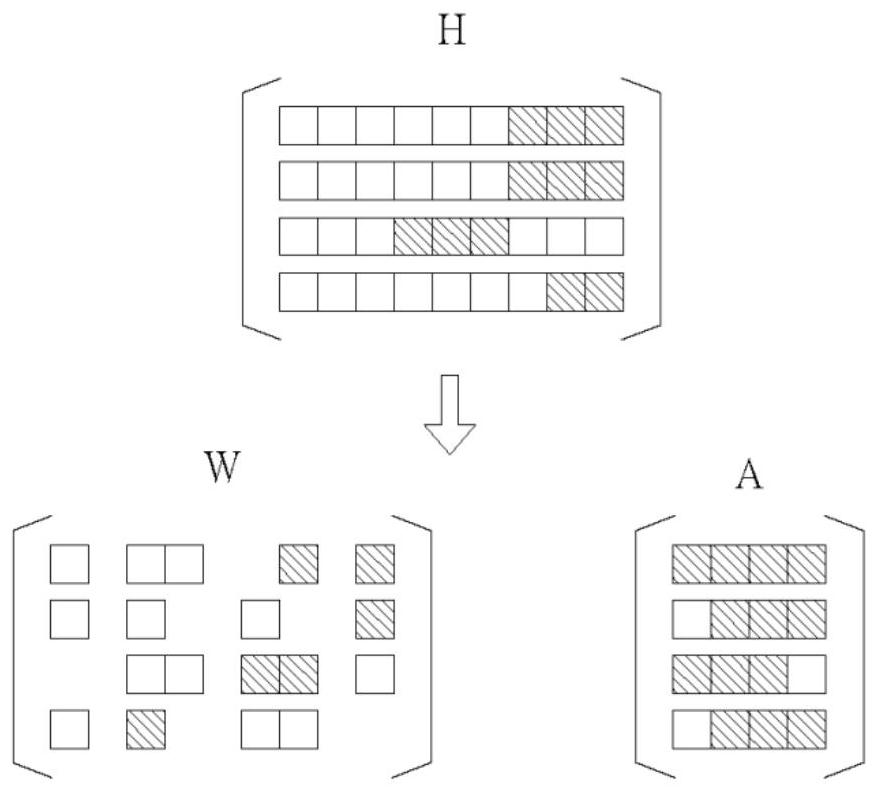

[0039] Pl...
