Embedded DRAM cache

A memory structure and cache technology, applied to cache memory structures for processor-based systems. It addresses the increasing latency, or number of cycles, needed to transfer data, so as to reduce average memory latency and increase system bandwidth and overall performance.

Embodiment Construction

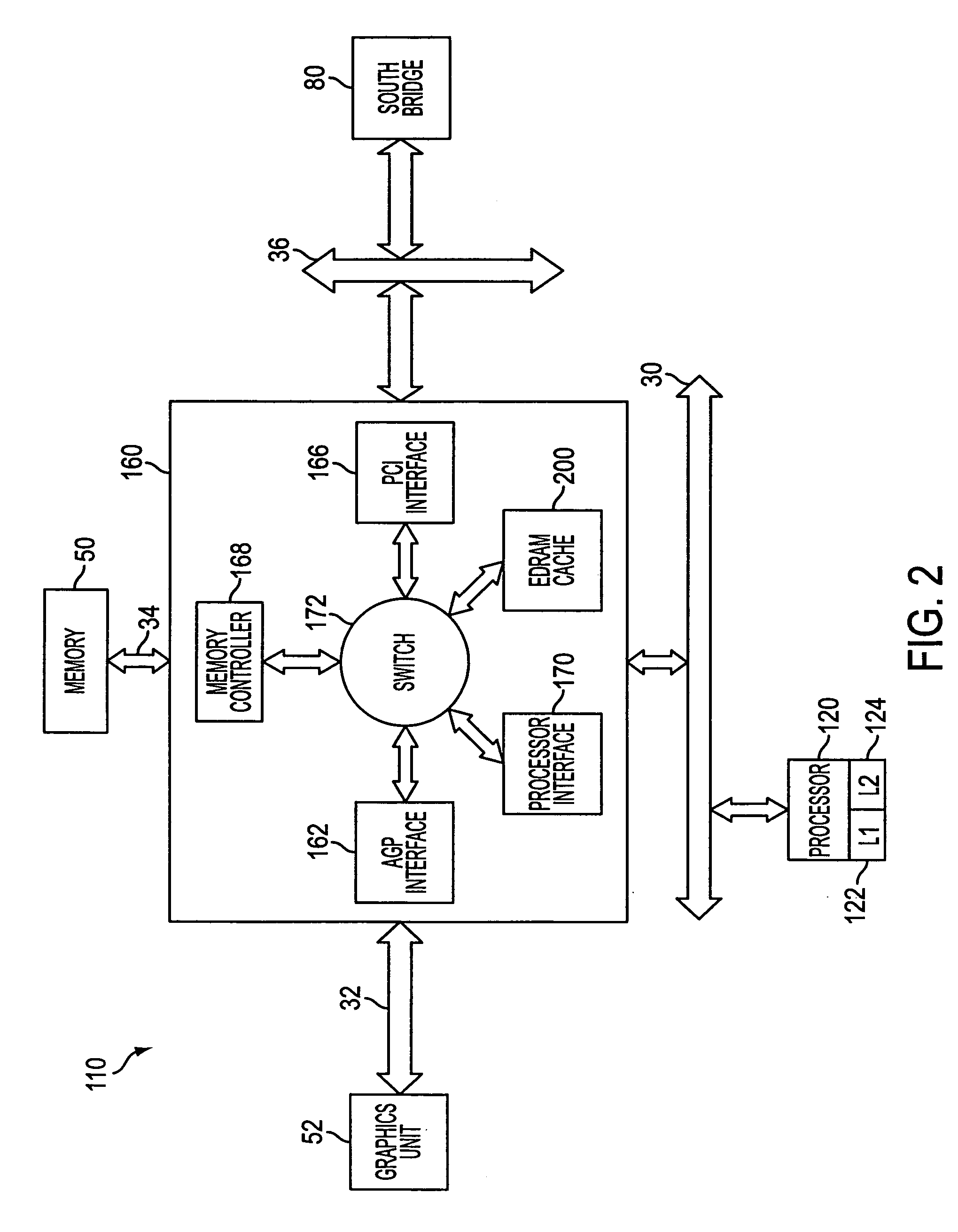

[0022] FIG. 2 illustrates a portion of a processor based system 110 having an eDRAM L3 cache 200 integrated on the system chipset, constructed in accordance with an exemplary embodiment of the present invention. The system 110 includes a south bridge 80 and a north bridge 160. The south bridge 80 is connected to the north bridge 160 via a bus such as a PCI bus 36. The north and south bridges comprise the system chipset for the system 110. Although not illustrated, the system 110 also includes the typical components connected to the south bridge 80 as illustrated in FIG. 1. The south bridge components are omitted from FIG. 2 solely for clarity.

[0023] In the illustrated embodiment, the L3 cache 200 is integrated on the north bridge 160 of the system chipset. As such, the L3 cache is positioned closer to the processor 120 in comparison to the system memory 50. For example, the processor 120 can access the L3 cache 200 without having to send or receive information over the m...
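The latency benefit of placing an L3 cache closer to the processor than the system memory can be illustrated with a simple average-latency calculation. The following is a minimal sketch, not taken from the patent: it assumes a sequential lookup model in which each cache level is consulted only after the previous one misses, and every latency and hit rate shown is a hypothetical placeholder chosen for illustration. The function name `average_latency` and its parameters are likewise assumptions.

```python
def average_latency(levels, memory_latency):
    """Average access latency in cycles for a chain of caches.

    levels: list of (hit_latency_cycles, hit_rate) tuples, ordered from the
            cache nearest the processor outward.
    memory_latency: cycles to reach system memory after every cache misses.

    Assumes a sequential lookup model (an illustrative simplification).
    """
    total = 0.0
    reach_prob = 1.0  # probability that an access gets as far as this level
    for hit_latency, hit_rate in levels:
        total += reach_prob * hit_latency  # accesses reaching this level pay its lookup cost
        reach_prob *= 1.0 - hit_rate       # only misses continue outward
    return total + reach_prob * memory_latency


# Hypothetical figures, for illustration only (not from the patent).
L1 = (2, 0.90)         # (cycles, hit rate)
L2 = (10, 0.60)
L3_EDRAM = (30, 0.50)  # L3 on the north bridge, nearer than system memory
SYSTEM_MEMORY = 200    # cycles

without_l3 = average_latency([L1, L2], SYSTEM_MEMORY)
with_l3 = average_latency([L1, L2, L3_EDRAM], SYSTEM_MEMORY)
print(f"without L3: {without_l3:.2f} cycles, with L3: {with_l3:.2f} cycles")
```

With these assumed numbers the average falls from roughly 11 cycles to roughly 8.2 cycles, because half of the accesses that would otherwise travel to system memory are served by the nearer L3 cache. Actual gains depend on the real hit rates and latencies of a given system.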
