Technique for enhancing effectiveness of cache server

A cache server and efficiency technology, applied in the field of network systems and cache servers, that can solve the problems of ineffective operations, wasted network resources, and the inability to carry out operations (1), (2) and (3), and achieve the effects of increasing the efficiency of the cache server, shortening the time taken to obtain data, and increasing probabilities



Examples

First embodiment

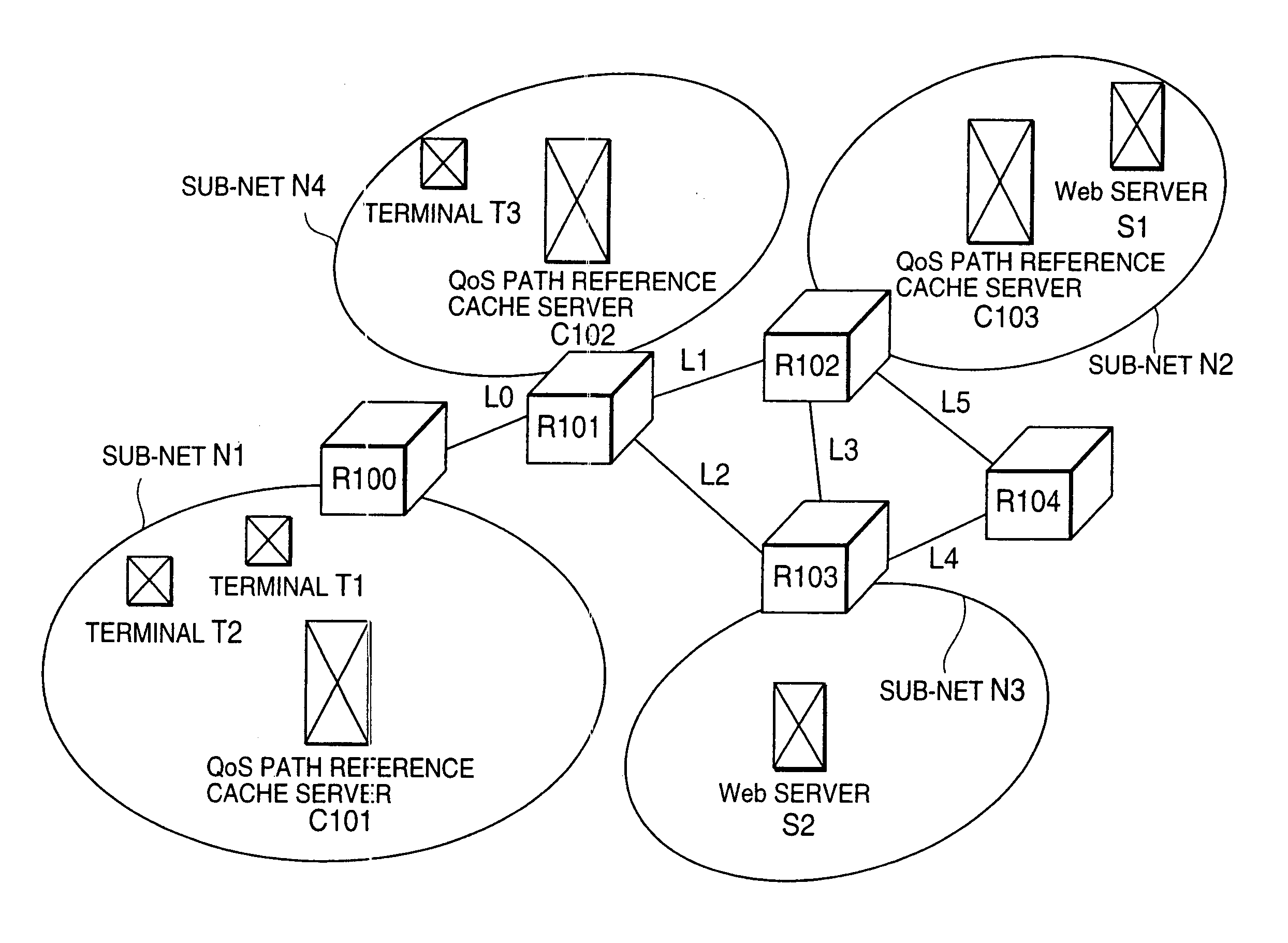

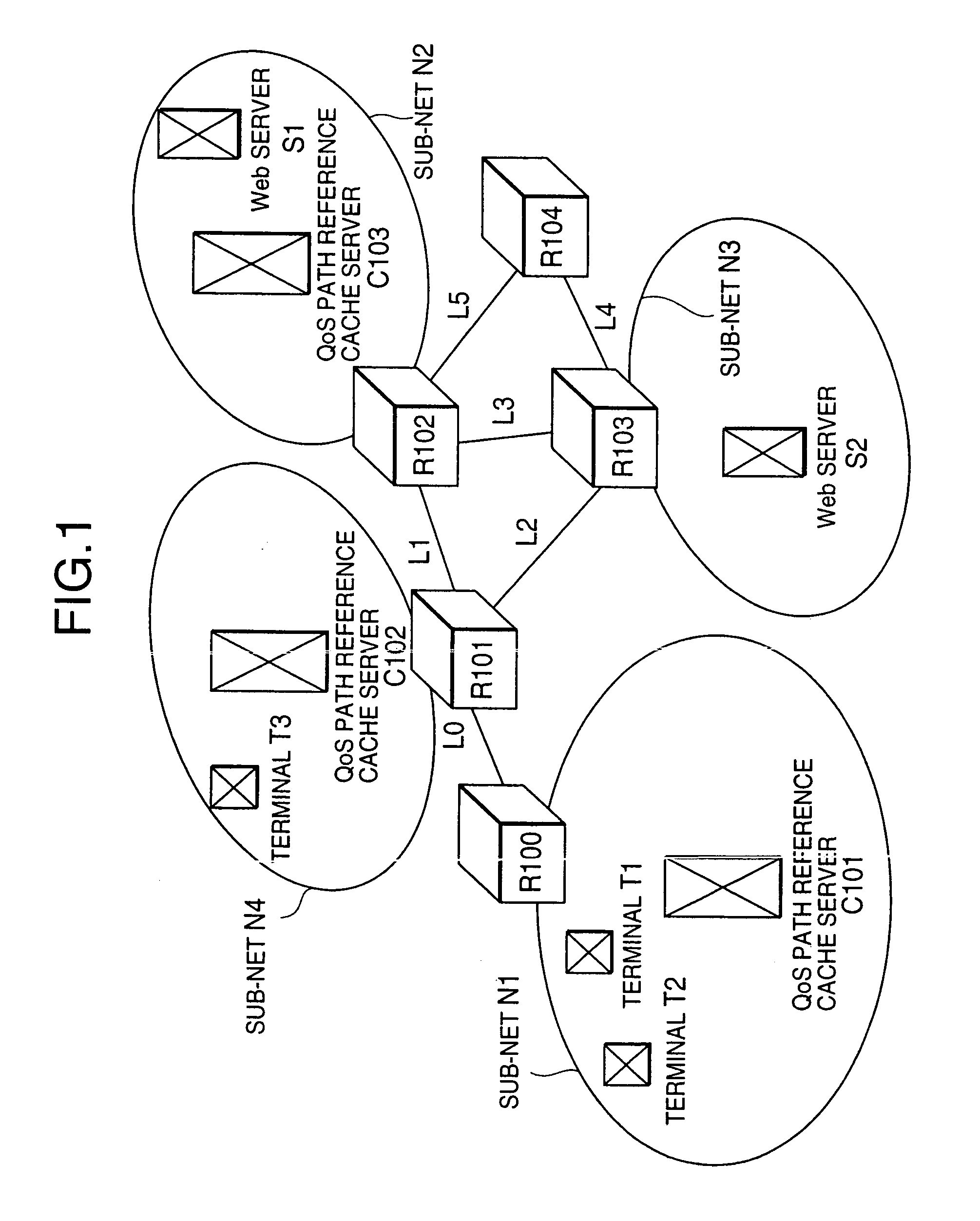

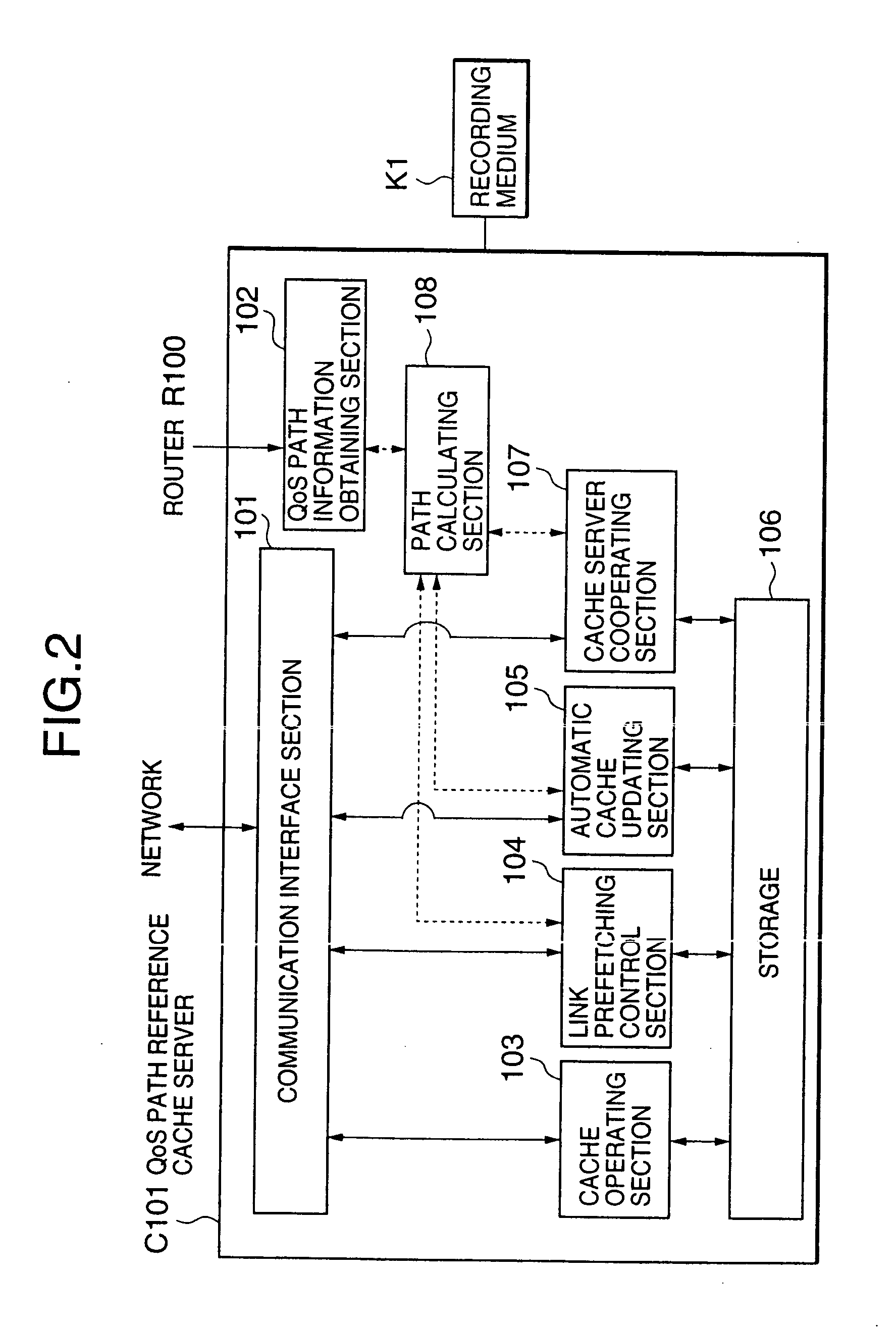

[0079]FIG. 1 shows the configuration of a network system according to a first embodiment of the present invention. Web servers S1 and S2 exist within sub-nets N2 and N3 respectively, and hold various Web content information. Terminals T1, T2 and T3 for accessing the Web servers S1 and S2 exist within sub-nets N1 and N4. QoS (Quality of Service) path reference cache servers C101 to C103 are also disposed on the network. The QoS path reference cache servers C101 to C103 hold copies of various content on the Web servers S1 and S2 that have been accessed from the terminals T1 to T3 and other cache servers (QoS path cache servers or conventional cache servers are not shown). In addition, the QoS path reference cache servers C101 to C103 are designed to obtain QoS path information that includes pairs of names of links and routers that are connected with each other, bandwidth of each link, and remaining bandwidth of each link.
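The QoS path information described above (each link, the routers it connects, its bandwidth, and its remaining bandwidth) can be pictured as a small lookup table. The following is a minimal Python sketch under stated assumptions: the class and method names, the Mbit/s unit, the link names and bandwidth figures, and the "widest route" selection rule are all hypothetical. This excerpt does not say how the cache servers actually use the information. Only the router names R100 to R103 are reused from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkInfo:
    """One QoS path information entry: a link and the pair of routers it connects."""
    name: str
    routers: frozenset   # the two router names joined by this link
    bandwidth: float     # total link bandwidth (unit assumed: Mbit/s)
    remaining: float     # bandwidth not yet in use (same unit)


class QoSPathInfo:
    """Hypothetical lookup table over the QoS path information."""

    def __init__(self, links):
        # Index each link by the unordered pair of routers it connects.
        self._by_pair = {link.routers: link for link in links}

    def link_between(self, a, b):
        return self._by_pair[frozenset((a, b))]

    def bottleneck(self, route):
        """Smallest remaining bandwidth over consecutive hops of a router route."""
        if len(route) < 2:
            raise ValueError("a route needs at least two routers")
        return min(self.link_between(a, b).remaining for a, b in zip(route, route[1:]))

    def widest(self, routes):
        """Hypothetical rule: pick the candidate route with the largest bottleneck."""
        return max(routes, key=self.bottleneck)


# Usage with an invented topology and invented bandwidth figures.
info = QoSPathInfo([
    LinkInfo("a", frozenset({"R100", "R101"}), 100.0, 40.0),
    LinkInfo("b", frozenset({"R101", "R102"}), 100.0, 10.0),
    LinkInfo("c", frozenset({"R100", "R103"}), 50.0, 30.0),
    LinkInfo("d", frozenset({"R103", "R102"}), 50.0, 25.0),
])
print(info.bottleneck(["R100", "R101", "R102"]))  # 10.0
print(info.widest([["R100", "R101", "R102"], ["R100", "R103", "R102"]]))  # ['R100', 'R103', 'R102']
```

Taking the minimum over hops reflects that a transfer along a route cannot use more than the remaining bandwidth of its most loaded link. The shorter route through R101 therefore loses to the route through R103 in this made-up example.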

[0080] The QoS path information may be obtained by communicating...

Second embodiment

[0107]FIG. 6 is a block diagram showing an example of a network system according to a second embodiment of the present invention. The second embodiment shown in FIG. 6 is different from the first embodiment shown in FIG. 1 in that the routers R100 to R104 are replaced with path-settable routers R200 to R204, and that the QoS path reference cache servers C101 to C103 are replaced with QoS path reference cache servers C201 to C203.

[0108] In addition to the functions of the routers R100 to R104, the path-settable routers R200 to R204 have functions provided by running the MPLS protocol. According to the path information received from the QoS path reference cache servers C201 to C203, the path-settable routers R200 to R204 set up on the network the path specified by that path information. The path information is composed of the network addresses of two servers that communicate with each other, identifiers (port numbers and the like in a TCP/IP network) for identif...
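The path information passed to the path-settable routers can likewise be sketched as a record. In the minimal Python sketch below, only the two server addresses and the port identifiers come from the text, whose field list is cut off. The `route` field, the class names, the addresses and ports, and the next-hop lookup are hypothetical. This is a simplified flow-keyed forwarding table, not an implementation of MPLS label distribution or label switching.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address
from typing import Dict, Optional, Tuple

FlowKey = Tuple[IPv4Address, IPv4Address, int, int]


@dataclass(frozen=True)
class PathInfo:
    # From the text: addresses of the two communicating servers and
    # identifiers such as TCP port numbers (the remaining fields are elided).
    src_addr: IPv4Address
    dst_addr: IPv4Address
    src_port: int
    dst_port: int
    # Assumption: the path to set is an ordered tuple of router names.
    route: Tuple[str, ...]

    def flow_key(self) -> FlowKey:
        return (self.src_addr, self.dst_addr, self.src_port, self.dst_port)


class PathSettableRouter:
    """Hypothetical stand-in for a path-settable router (no real MPLS involved)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._next_hop: Dict[FlowKey, str] = {}

    def set_path(self, info: PathInfo) -> None:
        # Only routers on the requested route record a next hop for the flow;
        # the last router on the route has no next hop to record.
        if self.name not in info.route:
            return
        i = info.route.index(self.name)
        if i + 1 < len(info.route):
            self._next_hop[info.flow_key()] = info.route[i + 1]

    def next_hop(self, key: FlowKey) -> Optional[str]:
        return self._next_hop.get(key)


# Usage with made-up addresses and ports.
info = PathInfo(IPv4Address("10.0.1.5"), IPv4Address("10.0.2.7"), 40000, 80,
                ("R200", "R201", "R203"))
routers = {name: PathSettableRouter(name) for name in ("R200", "R201", "R202", "R203")}
for router in routers.values():
    router.set_path(info)
print(routers["R201"].next_hop(info.flow_key()))  # R203
```

Keying the table on addresses plus ports is one way that identifiers in the path information could select which traffic follows the configured path, while other flows between the same routers are left alone.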

Third embodiment

[0117]FIG. 9 is a block diagram showing an example of a network system according to a third embodiment of the present invention. The network system of the third embodiment shown in FIG. 9 is the same as the conventional network system (see FIG. 36) except for the following. The cache servers C1 to C3 are replaced with QoS path reference relay control cache servers C301 to C303, and relay servers M301 and M302 are provided. Further, the routers R0 to R4 are replaced with routers R100 to R104, which have the same functions as the routers R0 to R4. The QoS path reference relay control cache servers may also act as the relay servers; in other words, the functions of the QoS path reference relay control cache server and the relay server may be built into one casing. Further, it may also be so designed that the functions of router, QoS path reference relay control cache server, and relay serve...


© 2025 PatSnap. All rights reserved.