Stereo vision three-dimensional face modeling method based on virtual images

The invention is a virtual-image-based 3D face modeling technology, applied in the fields of image processing and computer vision. It addresses the problems of increased modeling workload, large volumes of 3D face data, and high computational complexity. The effects achieved are overcoming the orthogonality requirement on input images, overcoming data redundancy, and obtaining reliable disparity information.

Embodiment 1

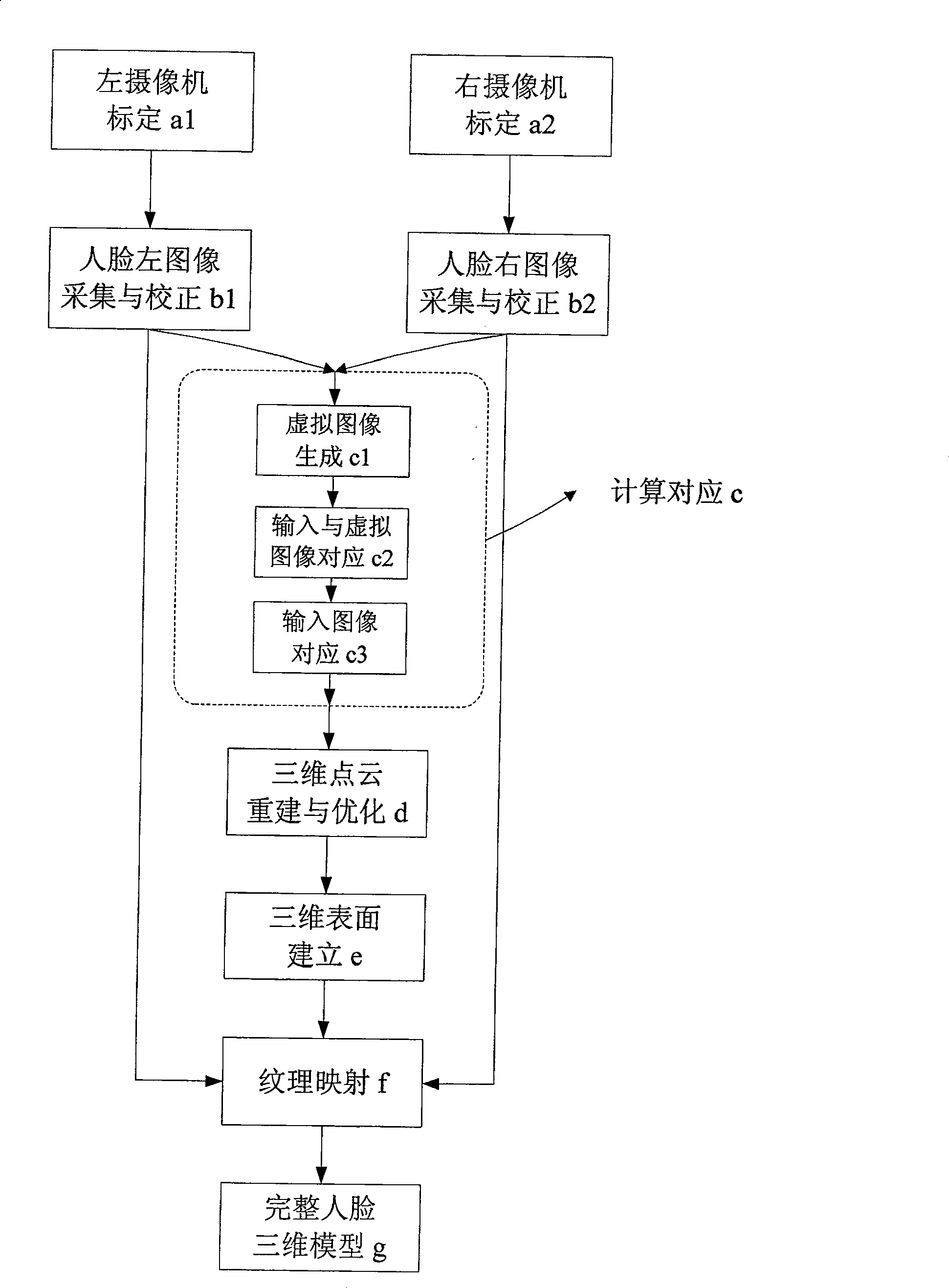

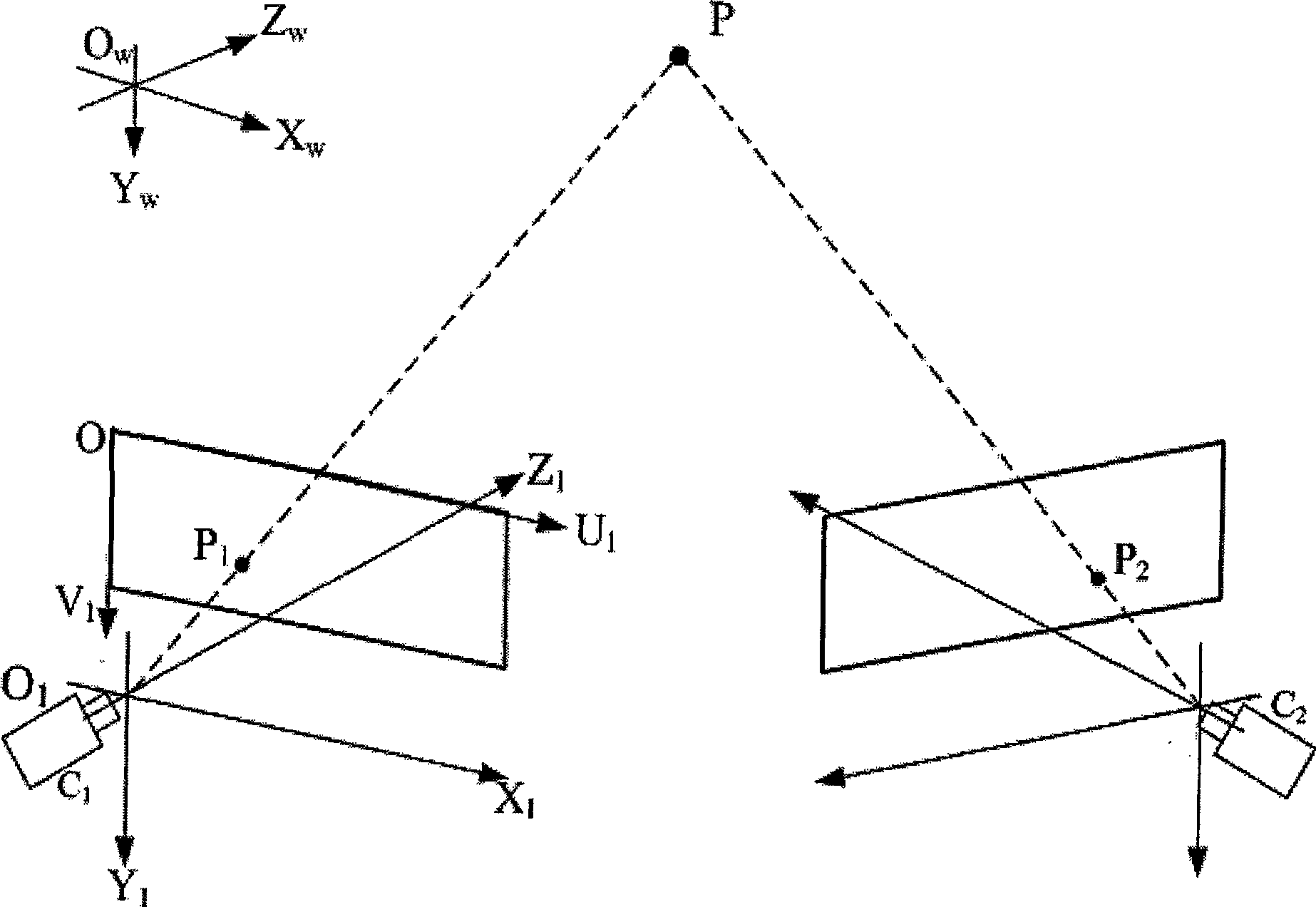

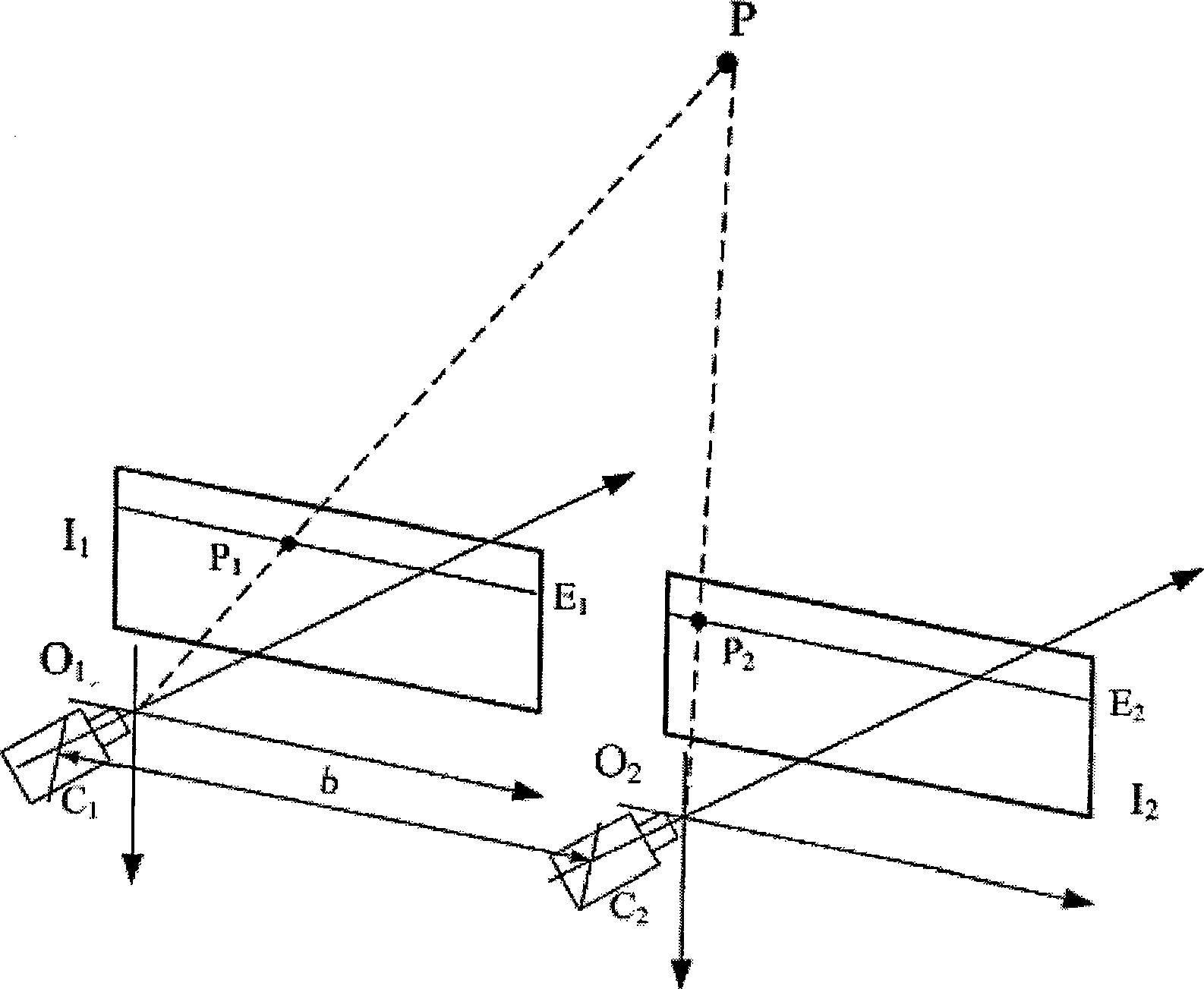

[0030] Figure 1 shows the specific flow of the stereo vision three-dimensional face modeling method based on virtual image correspondence of the present invention. First, the left and right cameras are calibrated (a1 and a2, respectively). The left and right images of the tested face are then captured and corrected using the camera calibration results (b1 and b2, respectively), after which the correspondence between the left and right images of the stereo pair is computed (c). The correspondence computation c proceeds as follows: first, a reference three-dimensional face model is used to estimate the pose, size and position of the tested face in the input left and right images, and a virtual image pair of the reference face is generated with a pose similar to that of the tested face in the input stereo pair (c1); then, the correspondence between the input stereo pair and the virtual image pair is computed (c2); finally, ...
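As a rough illustration of step c1 only, the sketch below projects the points of a reference 3D model into two ideal, already-rectified pinhole cameras to form a virtual image pair. Because both projections come from the same model point, the correspondence between the two virtual images is known by construction. This is a minimal sketch under stated assumptions, not the patent's rendering procedure: the functions `project`, `virtual_pair` and `disparities`, the shared intrinsics (`focal`, `cx`, `cy`), the horizontal `baseline`, and the sample points are all hypothetical, and pose, size and position estimation is omitted.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(point: Point3D, focal: float, cx: float, cy: float,
            cam_x: float) -> Pixel:
    """Pinhole projection for a camera at (cam_x, 0, 0) looking along +Z.

    Assumes an ideal rectified camera with no lens distortion.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    u = focal * (x - cam_x) / z + cx
    v = focal * y / z + cy
    return (u, v)


def virtual_pair(model_points: List[Point3D], focal: float, cx: float,
                 cy: float, baseline: float) -> Tuple[List[Pixel], List[Pixel]]:
    """Project every model point into a left and a right virtual image.

    The i-th left pixel and the i-th right pixel come from the same model
    point, so they correspond to each other by construction.
    """
    left = [project(p, focal, cx, cy, 0.0) for p in model_points]
    right = [project(p, focal, cx, cy, baseline) for p in model_points]
    return left, right


def disparities(left: List[Pixel], right: List[Pixel]) -> List[float]:
    """Horizontal disparity (left u minus right u) of each matched pair."""
    return [l[0] - r[0] for l, r in zip(left, right)]


# Hypothetical reference-model points, in millimetres, already placed at
# the estimated pose of the tested face.
model = [(0.0, 0.0, 1000.0), (50.0, -20.0, 500.0)]
left_px, right_px = virtual_pair(model, focal=800.0, cx=320.0, cy=240.0,
                                 baseline=100.0)
disp = disparities(left_px, right_px)
```

In this idealized setup, matched pixels share the same row and the disparity equals `focal * baseline / z`, so nearer model points produce larger disparities.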
