Device and method for detecting impurities after beverage filling

A detection method and device, applied in the fields of optical defect detection, image analysis and instrumentation. It addresses the high missed-detection rate and low efficiency of existing inspection, which affect product quality, and aims to reduce cost and improve detection quality.


Embodiment Construction

[0056] Specific embodiments

[0057] The present invention is described in further detail below with reference to the accompanying drawings:

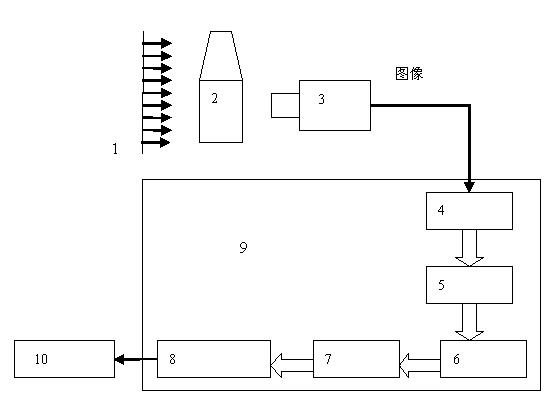

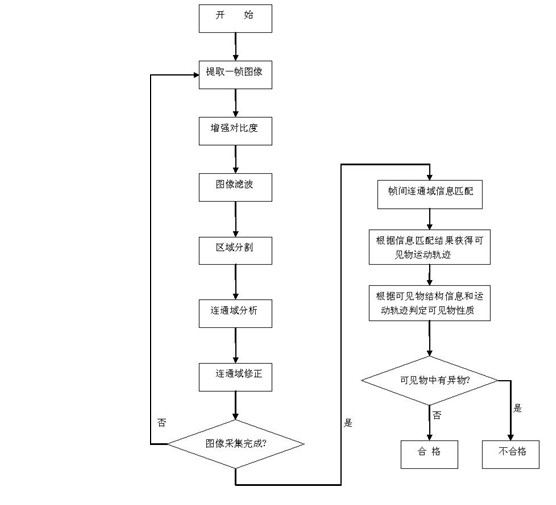

[0058] As shown in Figure 1, a device for detecting impurities after beverage filling includes a light source 1 and a camera 3, which cooperate with the product 2 to be inspected. The camera 3 is connected to the target segmentation unit 4 of the processing device 9. Within the processing device 9, the target segmentation unit 4, the information extraction unit 5, the information matching unit 6, the trajectory acquisition unit 7 and the product quality analysis unit 8 are connected in sequence. The product quality analysis unit 8 is also connected to the control device 10, which is located outside the processing device 9.
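The chain of units inside processing device 9 can be illustrated with a minimal sketch. The text names the units but not their algorithms, so everything below is an assumption chosen for illustration: threshold-plus-connected-component segmentation for unit 4, centroid and area extraction for unit 5, greedy nearest-neighbour matching between consecutive frames for units 6 and 7, and a "persistent and moving" rule for unit 8. The function names and the parameters `threshold`, `max_dist`, `min_frames` and `min_motion` are hypothetical; frames are plain lists of rows of 0-255 grey values.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Target:
    frame_idx: int  # index of the frame the target was found in
    x: float        # centroid column
    y: float        # centroid row
    area: int       # number of pixels in the blob


def segment_targets(frame, threshold=128):
    """Unit 4 (assumed): group pixels darker than `threshold` into 4-connected blobs."""
    h, w = len(frame), len(frame[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for r in range(h):
        for c in range(w):
            if seen[r][c] or frame[r][c] >= threshold:
                continue
            seen[r][c] = True
            stack, blob = [(r, c)], []
            while stack:  # flood fill from the seed pixel
                y, x = stack.pop()
                blob.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and frame[ny][nx] < threshold:
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            blobs.append(blob)
    return blobs


def extract_info(frame_idx, blobs):
    """Unit 5 (assumed): reduce each blob to its centroid and area."""
    return [
        Target(frame_idx,
               sum(p[1] for p in b) / len(b),
               sum(p[0] for p in b) / len(b),
               len(b))
        for b in blobs
    ]


def build_trajectories(per_frame, max_dist=5.0):
    """Units 6 and 7 (assumed): greedy nearest-neighbour matching of targets in
    consecutive frames; each chain of matched targets is one trajectory."""
    tracks, active = [], []
    for targets in per_frame:
        unused, next_active = list(targets), []
        for track in active:
            last = track[-1]
            best = min(unused, key=lambda t: hypot(t.x - last.x, t.y - last.y), default=None)
            if best is not None and hypot(best.x - last.x, best.y - last.y) <= max_dist:
                track.append(best)
                unused.remove(best)
                next_active.append(track)
        for t in unused:  # unmatched targets start new trajectories
            tracks.append([t])
            next_active.append(tracks[-1])
        active = next_active
    return tracks


def analyze_quality(tracks, min_frames=3, min_motion=2.0):
    """Unit 8 (assumed): flag the product if a target persists for `min_frames`
    frames and its centroid travels more than `min_motion` pixels overall."""
    for track in tracks:
        if len(track) >= min_frames:
            first, last = track[0], track[-1]
            if hypot(last.x - first.x, last.y - first.y) > min_motion:
                return True  # in the device this result would go to control device 10
    return False


def inspect(frames):
    """Run the units in the order they are connected in processing device 9."""
    per_frame = [extract_info(i, segment_targets(f)) for i, f in enumerate(frames)]
    return analyze_quality(build_trajectories(per_frame))
```

For example, four 20x20 frames containing a dark pixel that shifts two columns per frame plus a fixed dark pixel make `inspect` return `True`; frames with only the fixed pixel return `False`, because a static mark yields a trajectory with no displacement.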

[0059] The acquired images are several frames of the beverage bottle body, captured by industrial cameras after the bottle has been flipped by a mechanical device. The lighting scheme...
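One plausible reason for capturing several frames after flipping, offered here as an assumption rather than something the text states, is that impurities set in motion by the flip change position between frames while printing or marks on the bottle stay fixed. The sketch below illustrates that idea with simple frame differencing; `motion_mask`, `moving_pixel_counts` and `diff_threshold` are hypothetical names, and frames are lists of rows of 0-255 grey values.

```python
def motion_mask(prev, curr, diff_threshold=30):
    """True where the grey level changed by more than `diff_threshold`
    between two frames of the same bottle (hypothetical parameter)."""
    return [
        [abs(a - b) > diff_threshold for a, b in zip(row_prev, row_curr)]
        for row_prev, row_curr in zip(prev, curr)
    ]


def moving_pixel_counts(frames, diff_threshold=30):
    """Changed-pixel count for each pair of consecutive frames."""
    return [
        sum(v for row in motion_mask(p, c, diff_threshold) for v in row)
        for p, c in zip(frames, frames[1:])
    ]
```

With a dark particle that moves between frames, each consecutive pair reports the pixels it left and entered, while a stationary mark on the bottle contributes nothing to the counts.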
