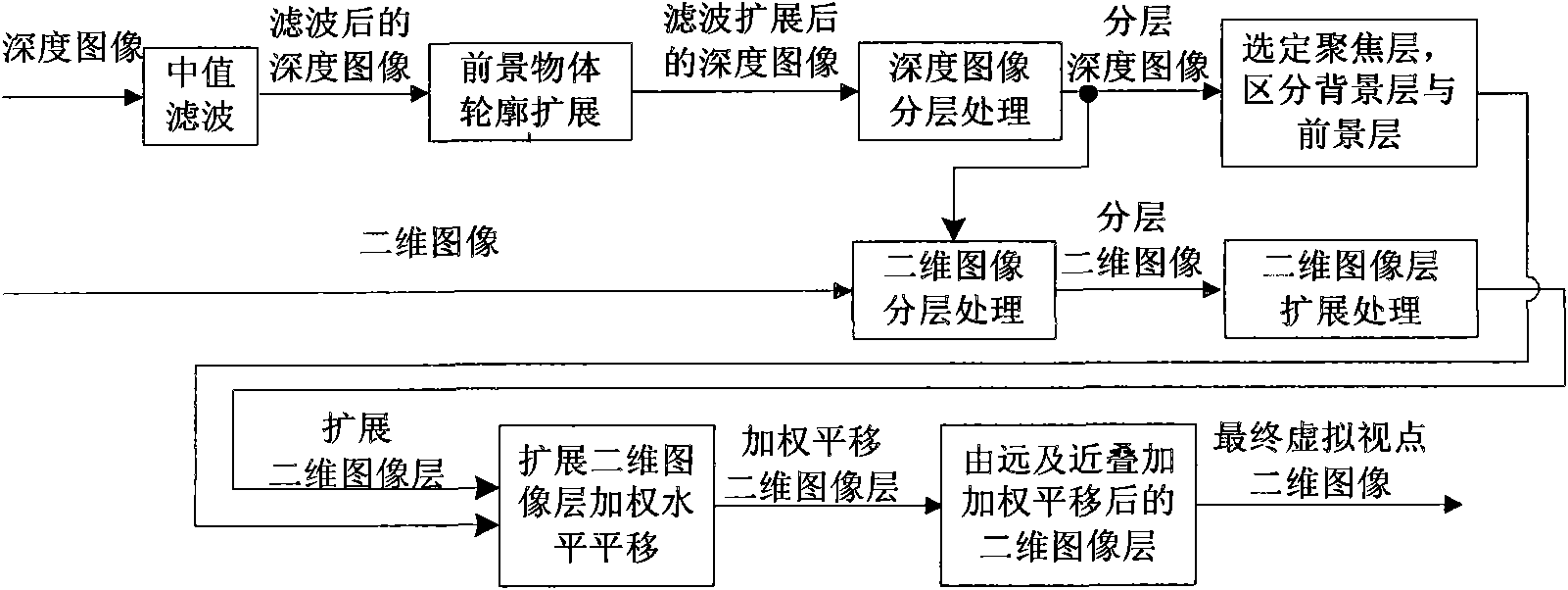

Method for generating virtual multi-viewpoint images based on depth image layering

This invention concerns multi-viewpoint image and depth image technology and is applied in the field of virtual multi-viewpoint image generation. It addresses three problems: a large amount of calculation, long processing time, and camera array model parameters that are difficult to obtain.


Embodiment

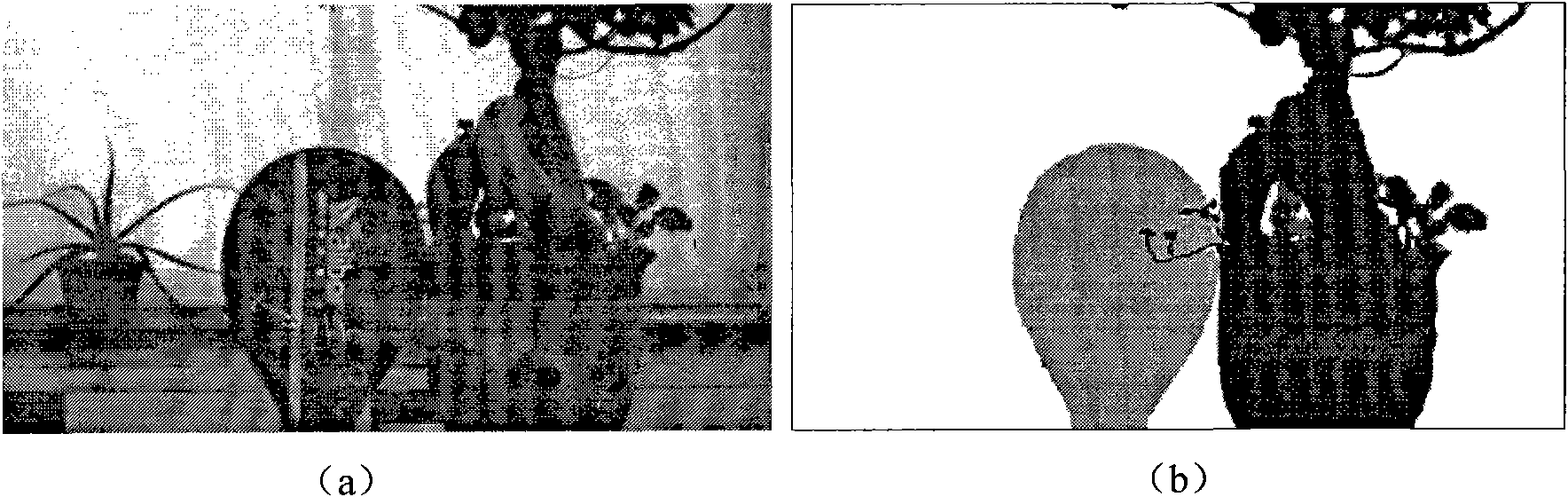

[0149] (1) The Racket two-dimensional image test stream and the Racket depth image test stream, both with a resolution of 640×360, are used as the input video files for generating the multi-viewpoint virtual images. Figure 2(a) is a screenshot of the Racket two-dimensional image test stream, and Figure 2(b) is a screenshot of the Racket depth image test stream.

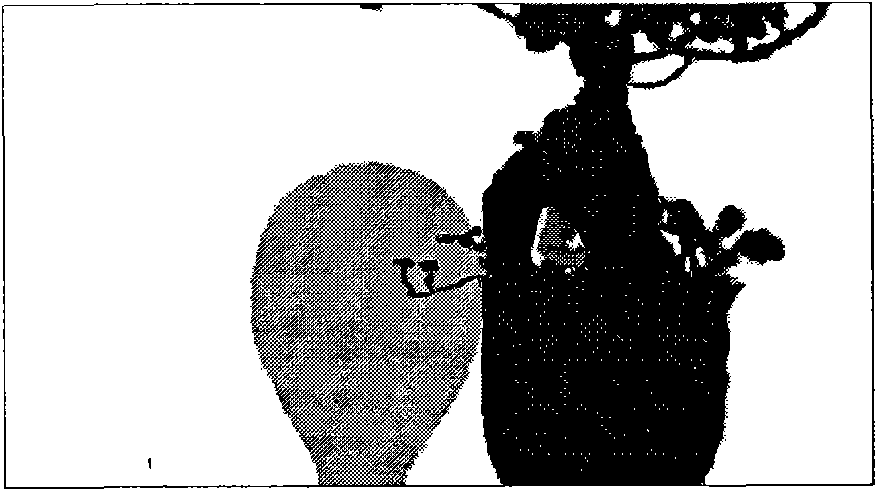

[0150] (2) Perform median filtering and edge extension on the input depth image to obtain the preprocessed depth image. Figure 3 shows the Racket depth image after preprocessing.
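The preprocessing step can be illustrated with a minimal sketch. It assumes a 3×3 median window and assumes that "edge extension" means enlarging the depth image by replicating its border pixels. The text above does not specify the window size or the exact extension rule, and the function names are hypothetical.

```python
from statistics import median


def pad_replicate(img, m):
    """Extend a 2-D image (list of rows) by m pixels on every side by
    repeating the nearest border pixel. Assumed meaning of 'edge extension'."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(-m, h + m):
        row = img[min(max(y, 0), h - 1)]  # clamp row index into the image
        out.append([row[min(max(x, 0), w - 1)] for x in range(-m, w + m)])
    return out


def median_filter(img, k=3):
    """k x k median filter (k odd). Borders are handled by replicate
    padding so the output has the same size as the input."""
    r = k // 2
    p = pad_replicate(img, r)
    h, w = len(img), len(img[0])
    return [
        [median(p[y + dy][x + dx] for dy in range(k) for dx in range(k))
         for x in range(w)]
        for y in range(h)
    ]


def preprocess_depth(depth, k=3, margin=0):
    """Hypothetical preprocessing: median-filter the depth image to remove
    isolated noise, then extend its edges by `margin` pixels."""
    return pad_replicate(median_filter(depth, k), margin)
```

For example, a single noisy spike of 255 in an otherwise flat depth patch of 10 is removed by the median filter, and `preprocess_depth(depth, 3, 2)` on a 3×3 input returns a 7×7 image.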

[0151] (3) Set the number of layers N = 17 and perform layering on the preprocessed depth image to obtain the layered depth image. Figure 4(a) shows layer 0 of the layered depth image.
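The layering step can be sketched as follows. This assumes uniform quantization of 8-bit depth values into N = 17 bins. It also assumes the common convention that larger depth values are nearer the camera, so that layer 0 holds the nearest pixels, which is consistent with step (4) treating the low-numbered layers as foreground. The text above does not state the actual layering rule, and the helper names are hypothetical.

```python
def layer_index(d, n_layers=17, d_max=255):
    """Map an 8-bit depth value d to a layer index in [0, n_layers - 1].
    Assumes uniform bins and that larger d means nearer, so d = 255 -> layer 0."""
    return ((d_max - d) * n_layers) // (d_max + 1)


def layer_depth_image(depth, n_layers=17):
    """Replace every depth value with its layer index."""
    return [[layer_index(d, n_layers) for d in row] for row in depth]


def layer_mask(layers, k):
    """Binary mask (1 = pixel belongs to layer k) for one layer, e.g. k = 0."""
    return [[1 if v == k else 0 for v in row] for row in layers]
```

Under these assumptions, a depth value of 255 falls in layer 0, a value of 0 falls in layer 16, and every value in 0..255 maps to one of the 17 layers.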

[0152] (4) Select layer 12 as the focus depth layer. Layers 0 to 11 are then the foreground layers, and layers 13 to 16 are the background layers.
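The split around the focus depth layer can be expressed as a small helper. The function name and return labels are hypothetical. The defaults (focus layer 12, N = 17) come from the embodiment above.

```python
def classify_layer(k, focus=12, n_layers=17):
    """Classify layer k relative to the focus depth layer.
    Layers with a smaller index than the focus layer are foreground,
    layers with a larger index are background."""
    if not 0 <= k < n_layers:
        raise ValueError(f"layer index {k} outside [0, {n_layers - 1}]")
    if k < focus:
        return "foreground"
    if k > focus:
        return "background"
    return "focus"


groups = {}
for k in range(17):
    groups.setdefault(classify_layer(k), []).append(k)
```

With the defaults, `groups` holds layers 0 to 11 under "foreground", layer 12 under "focus", and layers 13 to 16 under "background", matching the embodiment.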

[0153] (5) According to the layered depth image, the Racket two-dimensional...
