Chinese environment-oriented complex scene text positioning method

A technique for positioning text in complex scenes, applied in the field of image processing. It addresses the poor robustness, high false alarm rate, and heavy computation of existing positioning methods, so as to save time, improve accuracy, and enhance robustness.

Embodiment Construction

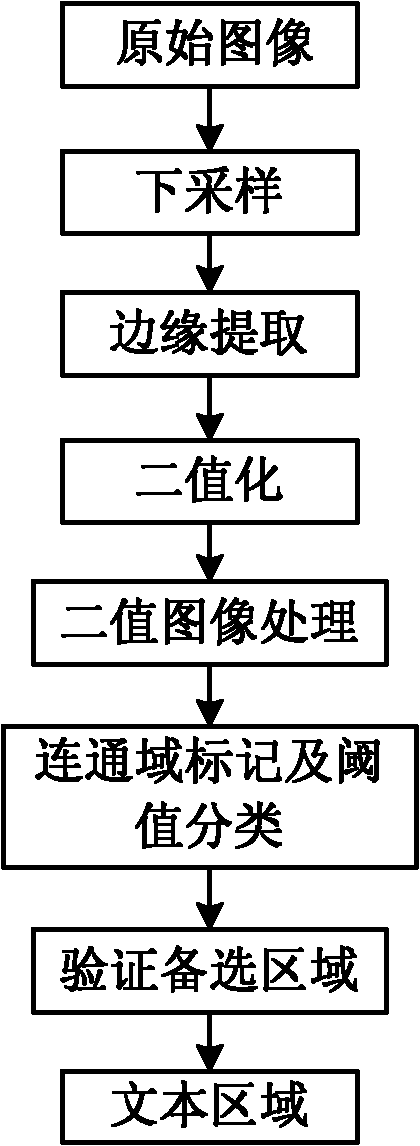

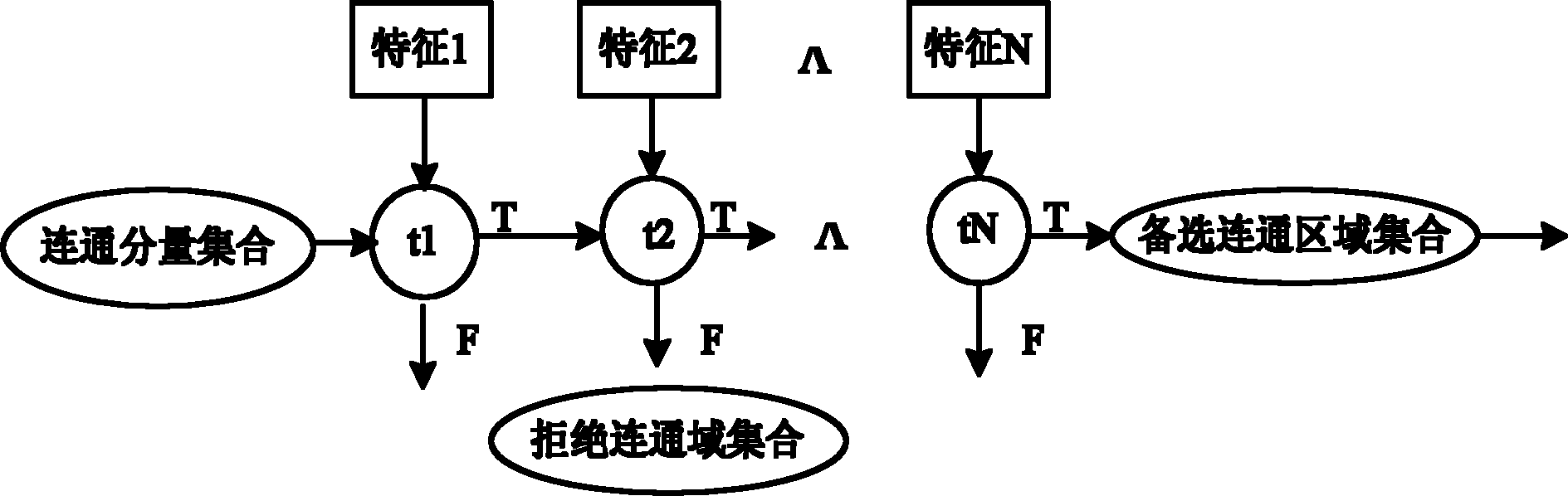

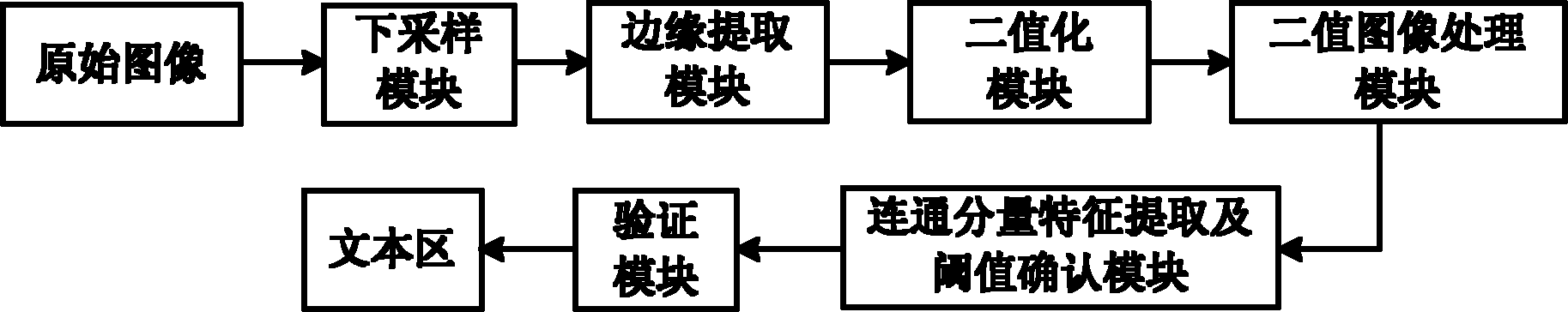

[0040] The present invention will be described in detail below in conjunction with the accompanying drawings.

[0041] In the present invention, the input image may be acquired by any of various image acquisition devices, for example a digital camera (DC), a mobile phone with a camera function, a PDA with a camera function, or a single frame of a video sequence from a digital video camera (DV). The images processed by the method of the present invention may be in various image coding formats, such as JPEG and BMP. In the following description, the library used for the parameter learning involved in the present invention is a self-built database. Since there is currently no public scene text database oriented to the Chinese environment, the present invention builds a database of 5,000 to 10,000 pictures that covers various types of complex scene text images, in which the text includes both Chinese and English characters, so this embod...
