Cache memory, memory system and control method therefor

A memory system and cache technology, applied in the field of memory systems, solves the problems of complicated interfaces and increased area in the DMAC 105 and the cache memory 102. Its effects are a less complex interface and a smaller area.


Examples

Embodiment 1

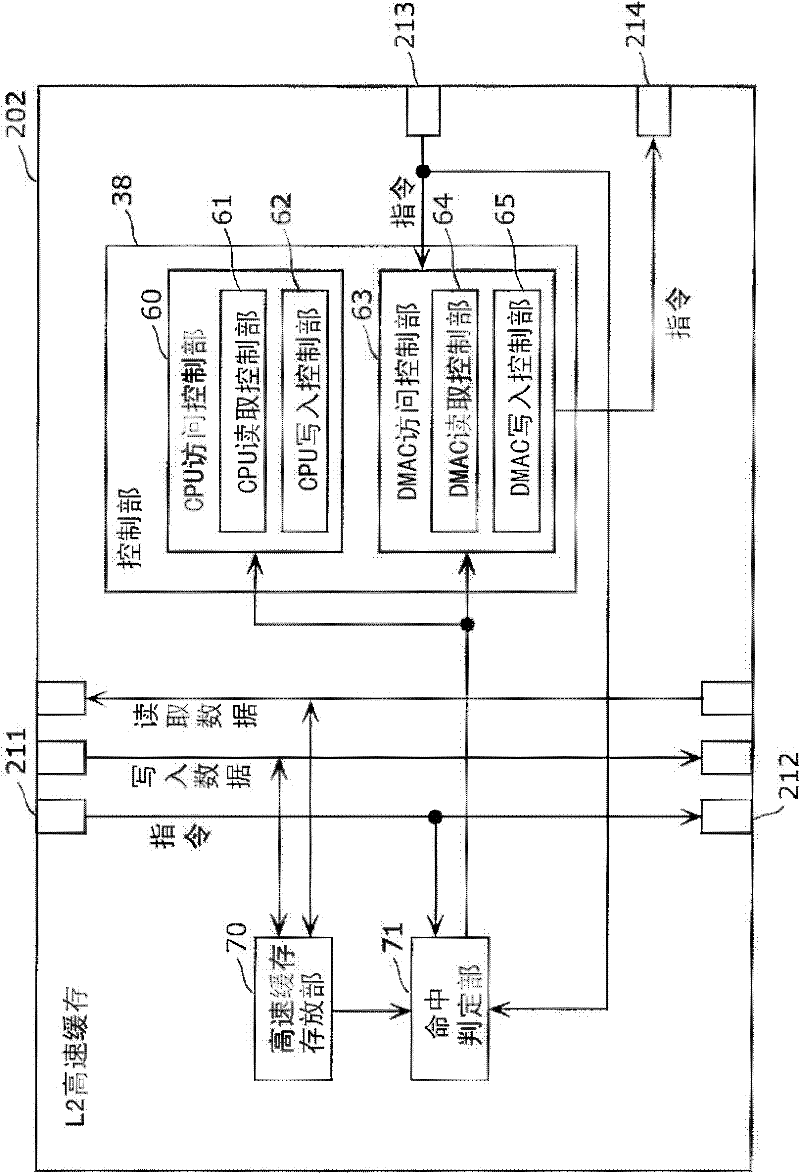

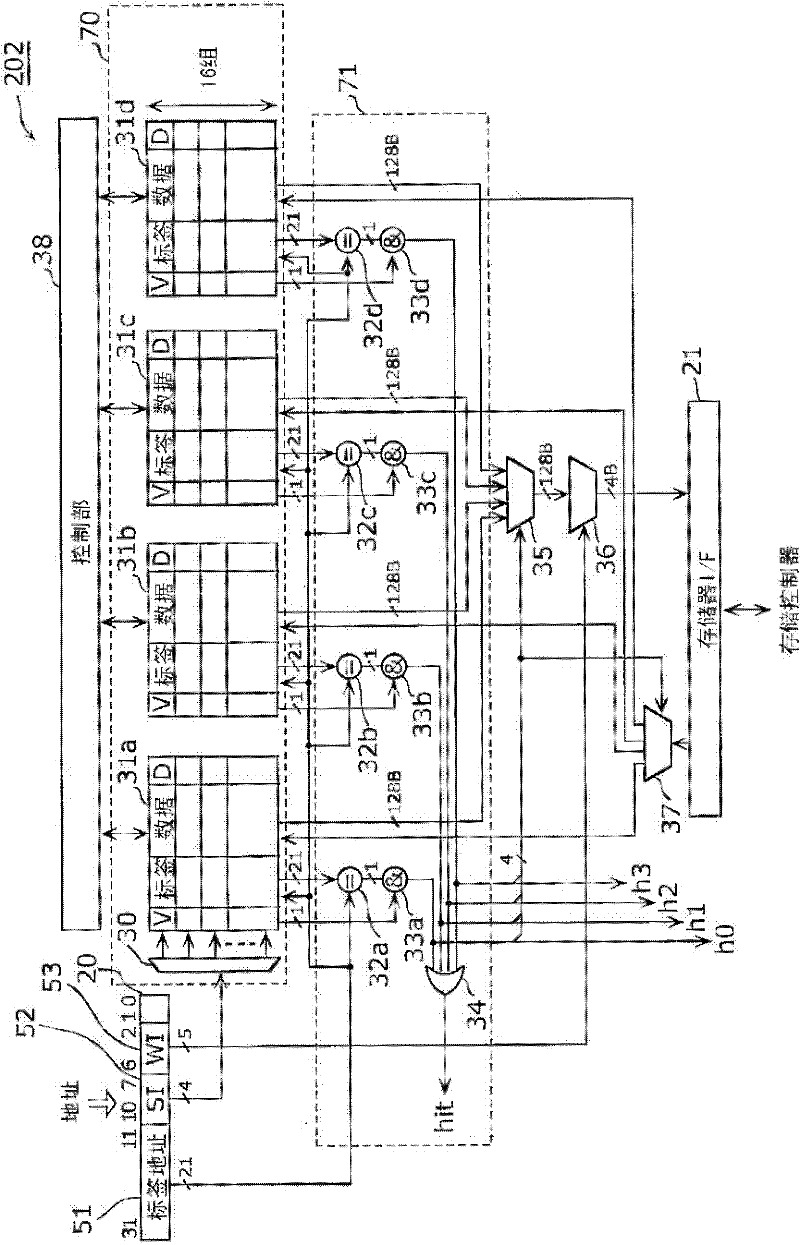

[0085] When a read access from a master (host) such as a DMAC hits, the cache memory according to the embodiment of the present invention first writes the hit data back to the main memory and then outputs a read command to the main memory. When a write access from the master hits, the cache memory invalidates the hit data and outputs a write command to the main memory.
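
The hit handling in [0085] can be sketched as a pure decision function that returns the ordered operations for one master access. This is a minimal Python sketch, not the patented circuit: the names `Access` and `master_access_commands`, the set of cached line addresses, and the operation labels are all hypothetical, and the model tracks only whether a line is present (no dirty bits, no replacement policy).

```python
from enum import Enum, auto


class Access(Enum):
    READ = auto()
    WRITE = auto()


def master_access_commands(access, addr, cached_addrs):
    """Return the ordered operations the cache performs for one master access.

    cached_addrs is a hypothetical set of line addresses held in the cache;
    it is updated in place when a write hit invalidates a line.
    """
    ops = []
    hit = addr in cached_addrs
    if access is Access.READ:
        if hit:
            # Read hit: write the hit data back so main memory is up to date.
            ops.append(("write_back", addr))
        # The read command always goes to main memory.
        ops.append(("read_cmd", addr))
    else:
        if hit:
            # Write hit: drop the cached copy so it cannot become stale.
            cached_addrs.discard(addr)
            ops.append(("invalidate", addr))
        # The write command always goes to main memory.
        ops.append(("write_cmd", addr))
    return ops
```

On a miss the function only forwards the command, which matches the idea that the cache intervenes solely when the master's access hits.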

[0086] Accordingly, consistency between the cache memory and the main memory is maintained even if a processor such as a CPU, or the host, does not perform flush processing. The cache memory according to the embodiment of the present invention therefore avoids the drop in processor performance that flush processing for maintaining coherency between the cache memory and the main memory would otherwise cause.
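
To illustrate why no flush is needed, the following hypothetical Python model runs one CPU/DMAC exchange end to end. It assumes a write-back cache on the CPU side, one value per line, and master writes that cover a whole line; `MainMemory`, `Cache`, and `demo` are illustrative names not taken from the source. Master data moves directly to and from main memory, while the cache only reacts to the master's commands.

```python
class MainMemory:
    """Backing store; unwritten addresses read as 0 (model assumption)."""

    def __init__(self):
        self.data = {}

    def read(self, addr):
        return self.data.get(addr, 0)

    def write(self, addr, value):
        self.data[addr] = value


class Cache:
    """Hypothetical write-back cache that also observes master (DMAC) commands."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}  # addr -> [value, dirty]

    # CPU side: ordinary write-back behaviour, no explicit flush.
    def cpu_write(self, addr, value):
        self.lines[addr] = [value, True]  # main memory is not updated yet

    def cpu_read(self, addr):
        if addr not in self.lines:
            self.lines[addr] = [self.memory.read(addr), False]  # line fill
        return self.lines[addr][0]

    # Master side: data goes straight to or from main memory.
    def master_read(self, addr):
        line = self.lines.get(addr)
        if line is not None:
            # Read hit: write the hit data back before the read command.
            self.memory.write(addr, line[0])
            line[1] = False
        return self.memory.read(addr)  # read command; data returned directly

    def master_write(self, addr, value):
        if addr in self.lines:
            # Write hit: invalidate (assumes the write covers the whole line).
            del self.lines[addr]
        self.memory.write(addr, value)  # write command; data written directly


def demo():
    mem = MainMemory()
    cache = Cache(mem)
    cache.cpu_write(0x40, 7)     # CPU leaves a dirty line and never flushes
    stale = mem.read(0x40)       # main memory still holds the old value
    seen_by_dmac = cache.master_read(0x40)
    cache.master_write(0x40, 9)  # DMAC overwrites the location
    seen_by_cpu = cache.cpu_read(0x40)
    return stale, seen_by_dmac, seen_by_cpu
```

In `demo`, main memory still holds the stale value 0 when the DMAC reads, yet the DMAC receives the CPU's 7 because the read hit forces a write-back. If `master_write` skipped the invalidation, the CPU would keep reading its cached 7 after the DMAC had written 9.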

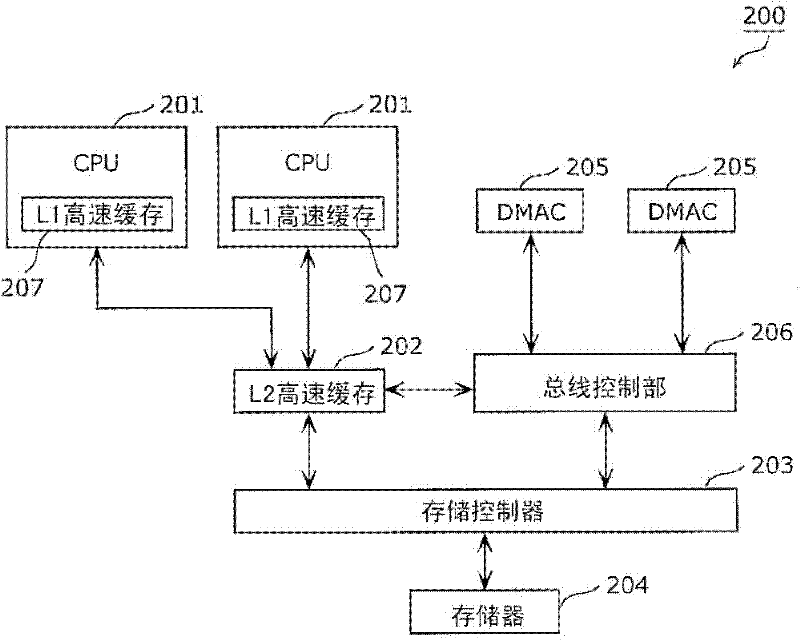

[0087] In addition, read data and write data are directly transferred between the host device and the main memory without passing through the cache memory. Thereby, there is no need to form a bus for transfe...
