Address assignment method for data registers of distributed cache chipset

A data buffer and distributed cache technology, applied in memory addressing/allocation/relocation, electrical digital data processing, instruments, etc., addressing problems such as limited pin resources.


Examples

Example 1

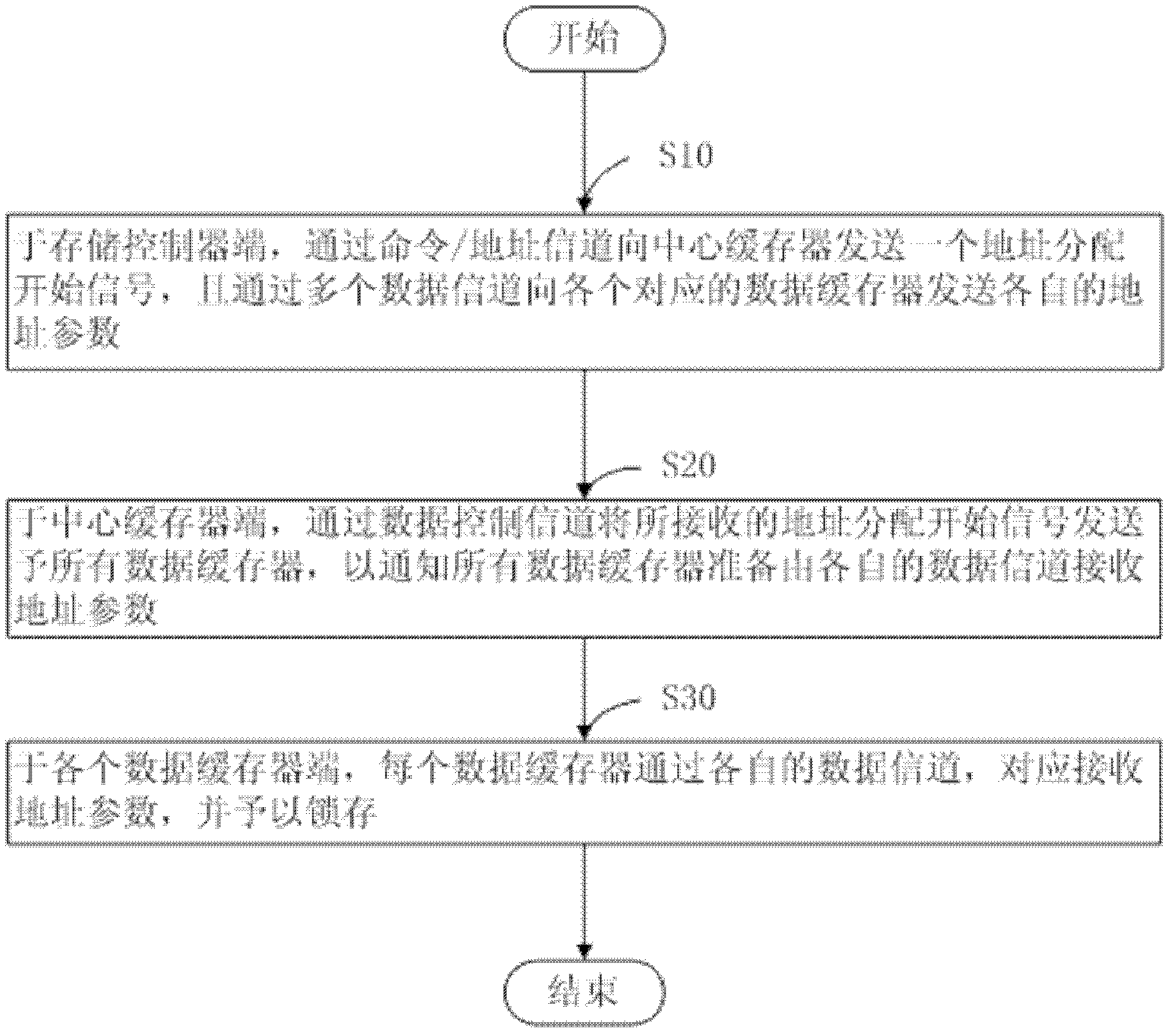

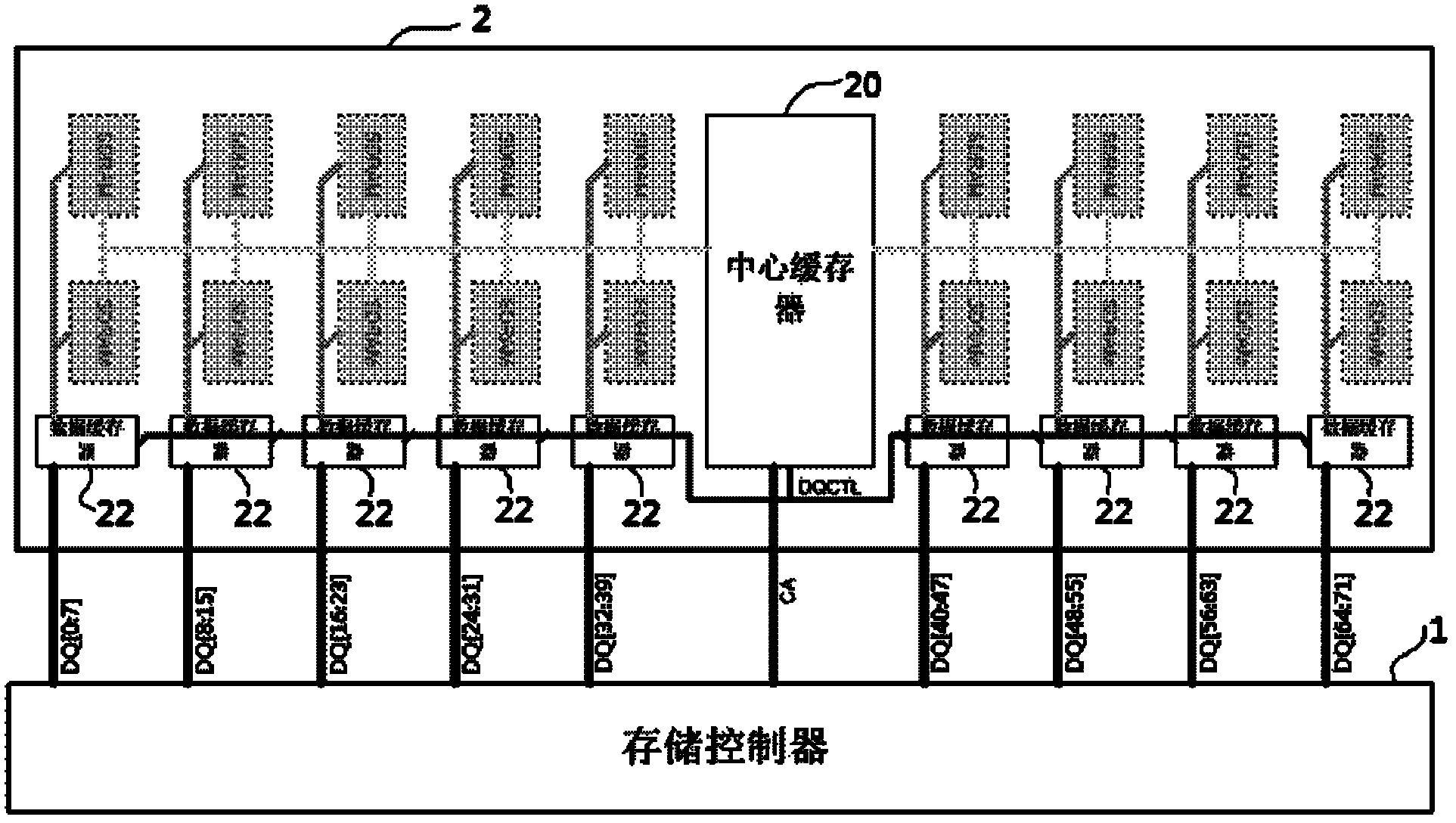

[0040] Referring to Figure 3, a schematic flowchart of the first embodiment of the address allocation method for the data buffers in the distributed cache chipset of the present invention is shown. The specific steps of the method are described in detail below in conjunction with Figure 2.

[0041] First, step S100 is executed: an agreed time and a specific number are preset at the storage controller 1 side. The agreed time is set to ensure that the data buffer 22 has enough time to receive and latch its address configuration value after it receives the address allocation start signal. The method then proceeds to step S101.

[0042] In step S101, the storage controller 1 sends an address allocation start signal to the central buffer 20 through the command/address (CA) channel and, at the same time, the timer is cleared and starts t...
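Steps S100 and S101 can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the patented implementation: the class names, the tick-based timer, the `latch_ticks` parameter, the placeholder configuration values, and the relaying of the start signal from the central buffer to the data buffers are all hypothetical, and the steps after S101 are not shown in this excerpt. The sketch only demonstrates why the agreed time must be at least as long as the time a data buffer needs to receive and latch its address configuration value.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataBuffer:
    latch_ticks: int                 # hypothetical: ticks needed to receive and latch a value
    started: bool = False
    address: Optional[int] = None

    def latch(self, value: int, elapsed: int) -> None:
        # Latch only after the start signal, and only if enough time has passed.
        if self.started and elapsed >= self.latch_ticks:
            self.address = value


@dataclass
class CentralBuffer:
    data_buffers: List[DataBuffer]

    def on_start_signal(self) -> None:
        # Assumption: the central buffer relays the start signal to every data buffer.
        for db in self.data_buffers:
            db.started = True


@dataclass
class StorageController:
    agreed_time: int          # preset in step S100
    specific_number: int      # preset in step S100; its use is not shown in this excerpt
    timer: int = 0

    def start_allocation(self, central: CentralBuffer) -> None:
        # Step S101: send the start signal over the CA channel and clear the timer.
        central.on_start_signal()
        self.timer = 0

    def wait_agreed_time(self) -> int:
        # Count ticks until the agreed time has elapsed.
        while self.timer < self.agreed_time:
            self.timer += 1
        return self.timer


def run_window(agreed_time: int, latch_ticks: List[int]) -> List[Optional[int]]:
    """Return the latched value of each data buffer after one agreed-time window."""
    buffers = [DataBuffer(t) for t in latch_ticks]
    # specific_number is a placeholder here because the excerpt does not define it.
    controller = StorageController(agreed_time=agreed_time, specific_number=0)
    controller.start_allocation(CentralBuffer(buffers))
    elapsed = controller.wait_agreed_time()
    for value, db in enumerate(buffers):
        db.latch(value, elapsed)     # placeholder configuration values 0, 1, 2, ...
    return [db.address for db in buffers]
```

In this model, `run_window(4, [2, 3])` lets both data buffers latch a value, whereas `run_window(2, [2, 3])` leaves the slower buffer without one, which is the situation the agreed time in step S100 is meant to prevent.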

Example 2

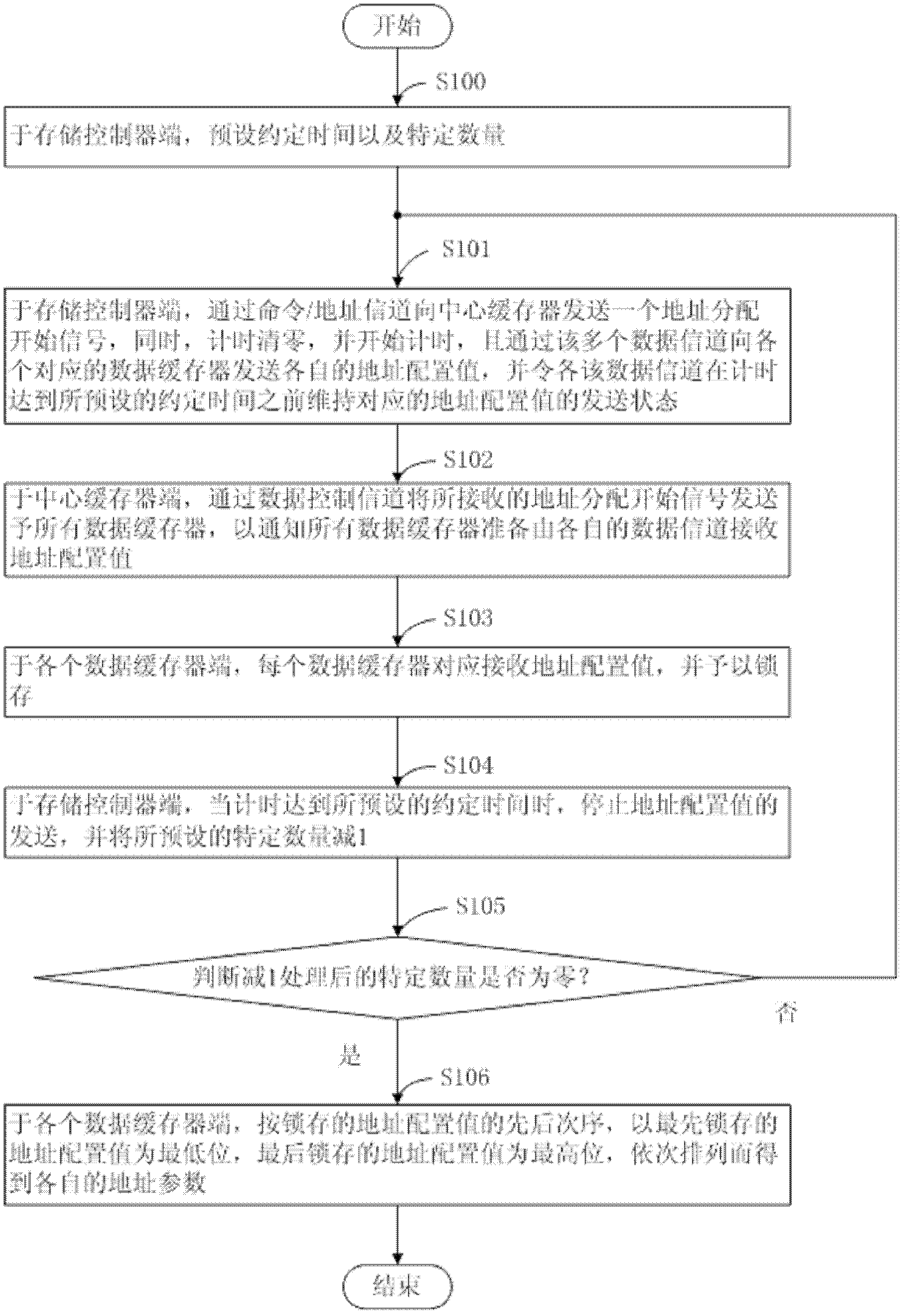

[0053] Referring to Figure 4, a schematic flowchart of the second embodiment of the address allocation method for the data buffers in the distributed cache chipset of the present invention is shown. Steps that are the same as or similar to those of the method of the previous embodiment (shown in Figure 3) are denoted by the same or similar symbols, and their detailed descriptions are omitted to keep the description clear and easy to follow.

[0054] The biggest difference between the address allocation method of the second embodiment and that of the first embodiment is that the address allocation method of the first embodiment is at the storage controller side, and each sending state of the address configuratio...

Example 3

[0056] Referring to Figure 5, a schematic flowchart of the third embodiment of the address allocation method for the data buffers in the distributed cache chipset of the present invention is shown. Steps that are the same as or similar to those of the methods of the previous embodiments (shown in Figure 3 and Figure 4) are denoted by the same or similar symbols, and their detailed descriptions are omitted to keep the description clear and easy to follow.

[0057] The biggest difference between the address allocation method of the third embodiment and those of the first and second embodiments is that the address allocation method of the data buffer in the first embodiment and the second embodiment of the distributed cach...
