Multi-camera dynamic scene 3D (three-dimensional) reconstruction method based on joint optimization

A multi-camera dynamic-scene reconstruction technique, applicable to 3D modeling, image analysis, and image data processing, that addresses problems such as the inability to handle optical flow estimation and insufficient robustness.

Embodiment

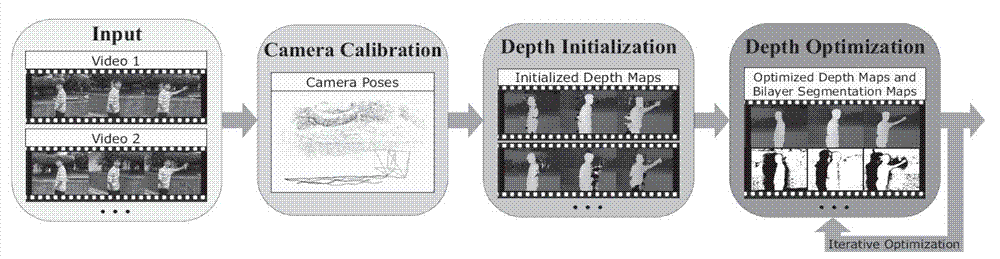

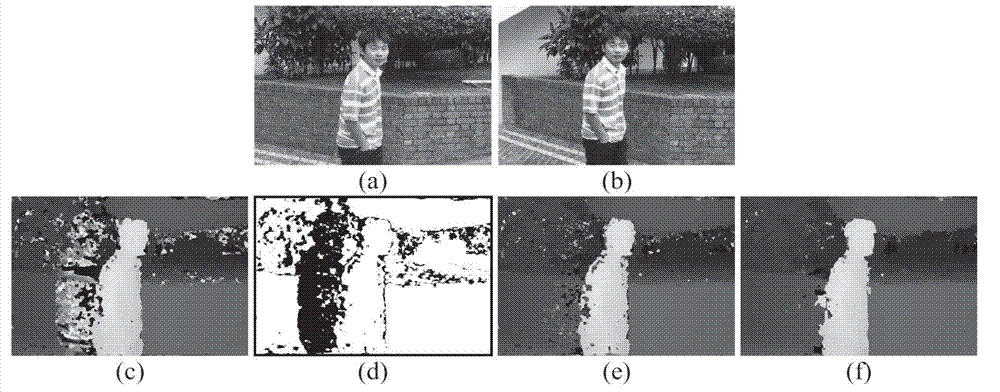

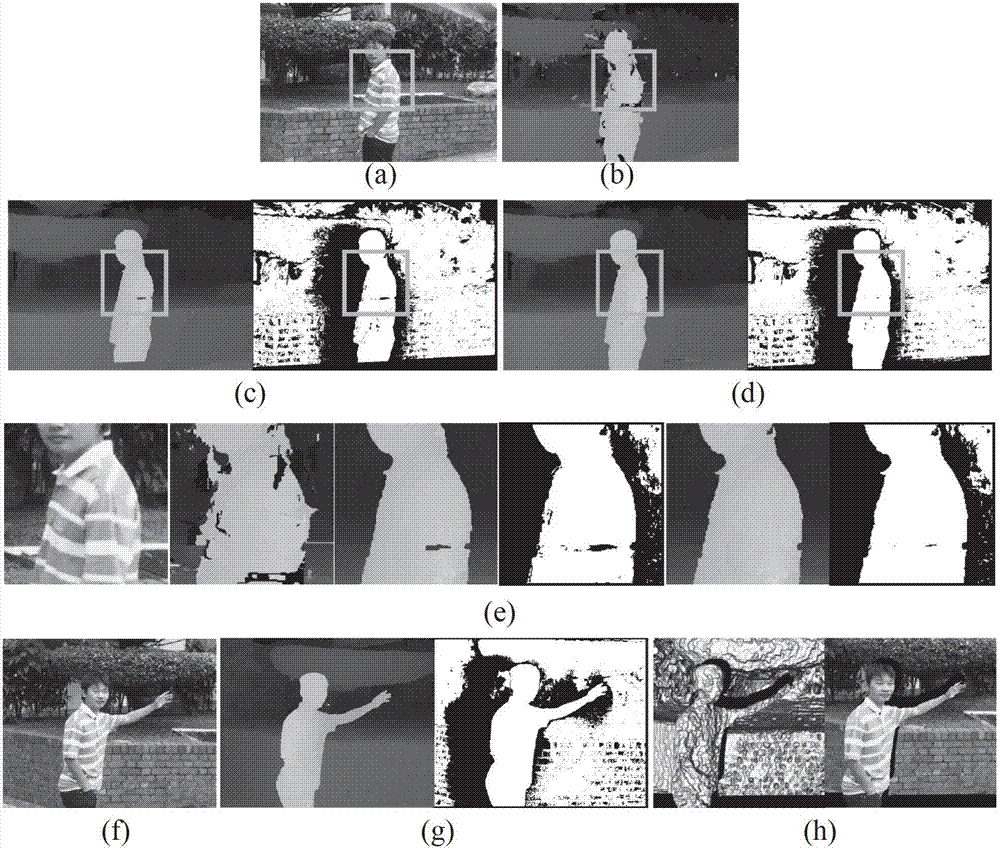

[0121] The invention discloses a multi-camera dynamic scene 3D reconstruction method based on joint optimization. It solves spatio-temporally consistent depth recovery and static/dynamic two-layer segmentation at the same time, without requiring an accurate static/dynamic segmentation; the method is robust and needs no prior knowledge of the background. The participating cameras may move freely and independently, and dynamic scenes captured by only 2~3 cameras can be handled. As shown in Figure 1, the method mainly includes three steps. First, the synchronized video frames spanning the M cameras at each time t are used to initialize the depth map at time t. Second, spatio-temporal consistency optimization of the depth and static/dynamic two-layer segmentation are carried out simultaneously. The spatio-temporal consistency optimization is iterated for 2~3 rounds, so as to finally achieve high-quality dynamic 3D reconstruction. The implementation ...
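The control flow described above can be sketched roughly as follows. This is only an illustrative skeleton, not the patented implementation: the function names `reconstruct_dynamic_scene`, `toy_init`, and `toy_refine` are hypothetical, and the toy stand-ins use a simple temporal average and threshold in place of the actual depth initialization and joint optimization, which the text above does not specify.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple


def reconstruct_dynamic_scene(
    frames: Sequence[Sequence[Any]],
    init_depth: Callable[[Sequence[Any]], List[Any]],
    joint_refine: Callable[[Any, Any, Any], Tuple[Any, Any]],
    rounds: int = 2,
) -> Tuple[List[List[Any]], Optional[List[List[Any]]]]:
    """Hypothetical driver. frames[t][m] is the image from camera m at time t."""
    # Initialization: for every time t, use the M synchronized frames at t
    # to produce one initial depth map per camera.
    depths = [init_depth(frames[t]) for t in range(len(frames))]
    masks = None  # no static/dynamic labels exist before the first round

    # Joint step: each round updates depth and the static/dynamic
    # two-layer segmentation together; the text suggests 2~3 rounds.
    for _ in range(rounds):
        depths, masks = joint_refine(frames, depths, masks)
    return depths, masks


def toy_init(views: Sequence[float]) -> List[float]:
    # Stand-in (assumption): each "image" is a single scalar used as its depth.
    return [float(v) for v in views]


def toy_refine(frames, depths, masks):
    # Stand-in (assumption): average depth over a 3-frame temporal window and
    # mark a sample as dynamic when it deviates strongly from that average.
    # The real method optimizes a joint objective not given in the text.
    num_times = len(depths)
    new_depths, new_masks = [], []
    for t in range(num_times):
        lo, hi = max(0, t - 1), min(num_times, t + 2)
        row_d, row_m = [], []
        for m in range(len(depths[t])):
            window = [depths[s][m] for s in range(lo, hi)]
            mean = sum(window) / len(window)
            row_d.append(mean)
            row_m.append(abs(depths[t][m] - mean) > 0.5)
        new_depths.append(row_d)
        new_masks.append(row_m)
    return new_depths, new_masks


# Three time steps, two cameras, a perfectly static toy "scene".
frames = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
depths, masks = reconstruct_dynamic_scene(frames, toy_init, toy_refine, rounds=2)
```

Passing the initialization and refinement steps in as callables keeps the sketch independent of any particular stereo matcher or optimizer, so either stand-in could be swapped for a real component without changing the loop.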
