Speech separation method based on fuzzy membership function

A technology involving fuzzy membership functions and speech separation, applied in speech analysis, instruments, etc., which can solve problems such as low speech quality


Embodiment Construction

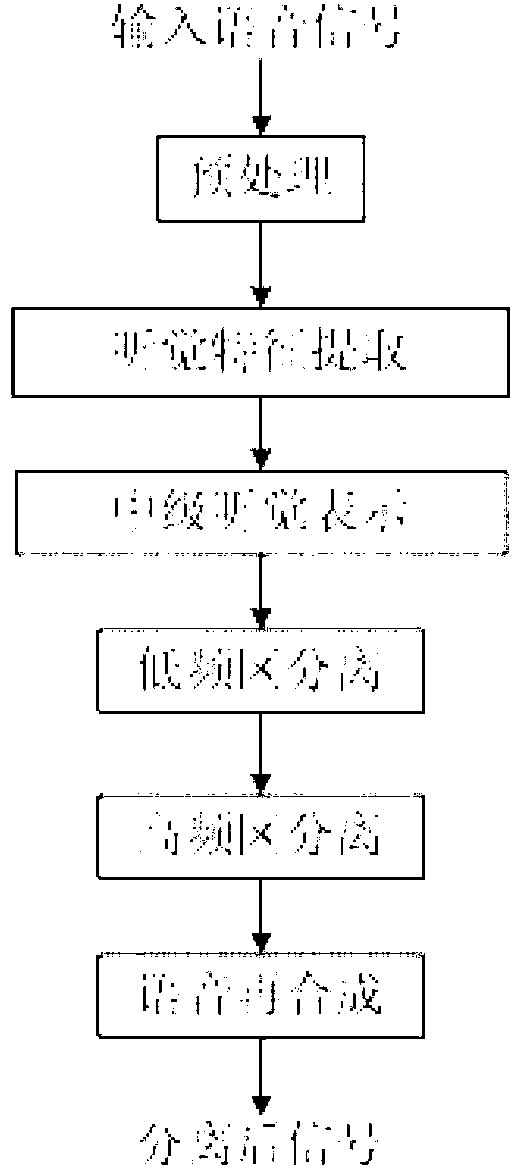

[0099] The invention discloses a speech separation method based on a fuzzy membership function. The method simulates the human auditory system and uses speech pitch features to separate speech. It comprises the following steps:

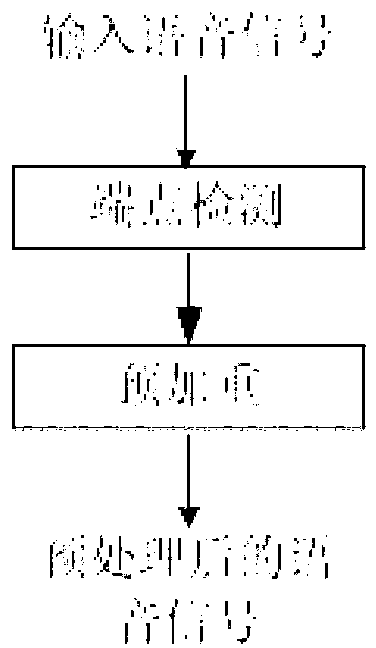

[0100] (1) Speech preprocessing. As shown in Figure 2, this process includes inputting a speech signal and performing endpoint detection and pre-emphasis on it, with a pre-emphasis coefficient of 0.95;
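The preprocessing step can be sketched in Python with NumPy. The pre-emphasis filter y[n] = x[n] - 0.95 x[n-1] is the standard first-order form implied by the 0.95 coefficient. The patent text shown here does not say how endpoint detection is done. The short-time-energy detector below is therefore a hypothetical stand-in, including its frame length, hop size and 10% energy threshold. Trimming the endpoints before applying pre-emphasis is also an assumption.

```python
import numpy as np

def pre_emphasis(x, alpha=0.95):
    """First-order pre-emphasis: y[n] = x[n] - alpha * x[n-1], with y[0] = x[0]."""
    x = np.asarray(x, dtype=float)
    y = np.empty_like(x)
    if x.size == 0:
        return y
    y[0] = x[0]
    y[1:] = x[1:] - alpha * x[:-1]
    return y

def detect_endpoints(x, frame_len=256, hop=128, threshold_ratio=0.1):
    """Hypothetical short-time-energy endpoint detector (not from the patent).

    Returns (start, end) sample indices spanning every frame whose energy exceeds
    threshold_ratio * (maximum frame energy), or None if nothing is detected.
    """
    x = np.asarray(x, dtype=float)
    if x.size < frame_len:
        return None  # too short to form a single analysis frame
    n_frames = 1 + (x.size - frame_len) // hop
    energy = np.array([np.sum(x[k * hop:k * hop + frame_len] ** 2) for k in range(n_frames)])
    if energy.max() == 0.0:
        return None  # pure silence
    active = np.flatnonzero(energy > threshold_ratio * energy.max())
    start = int(active[0] * hop)
    end = int(active[-1] * hop + frame_len)
    return start, end

def preprocess(x, alpha=0.95):
    """Trim to the detected endpoints, then pre-emphasize (ordering is an assumption)."""
    x = np.asarray(x, dtype=float)
    bounds = detect_endpoints(x)
    if bounds is None:
        return np.zeros(0)
    start, end = bounds
    return pre_emphasis(x[start:end], alpha)
```

The boost factor of pre-emphasis grows with frequency. This flattens the spectral tilt of voiced speech before the auditory filter bank is applied.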

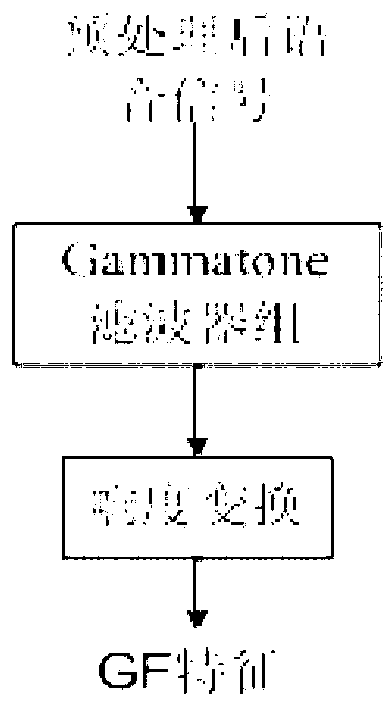

[0101] (2) Auditory feature extraction. As shown in Figure 3, this process includes:

[0102] (1) The preprocessed signal is passed through a gammatone filter bank that simulates the cochlea.

[0103] 1) The time-domain impulse response of the gammatone filter is

[0104] $$g_c(t) = t^{i-1}\exp(-2\pi b_c t)\cos(2\pi f_c t + \phi_c)\,U(t), \quad 1 \le c \le N$$

[0105] Here, N is the number of filters. c is the filter index, which takes values in [1, N] in order of frequency. i is the filter order, with i = 4. U(t) is the uni...
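The following is a minimal sketch of the gammatone stage in Python with NumPy. Only the form of g_c(t) and the order i = 4 come from the patent. The truncated text does not give the remaining values, so the following are assumptions:

- the bandwidth is b_c = 1.019 · ERB(f_c), using the Glasberg and Moore ERB formula;
- there are N = 64 center frequencies, spaced evenly on the ERB-rate scale between 80 Hz and 5 kHz;
- the phase is φ_c = 0;
- each impulse response is truncated at 0.1 s;
- each filter is normalized to unit gain at f_c.

Because the sampling grid starts at t = 0, U(t) equals 1 for every sample.

```python
import numpy as np

def erb(fc):
    # Equivalent rectangular bandwidth in Hz (Glasberg & Moore); assumed, not from the patent
    return 24.7 * (4.37 * fc / 1000.0 + 1.0)

def erb_space(f_low, f_high, n):
    # n center frequencies evenly spaced on the ERB-rate scale (assumed spacing)
    lo = 21.4 * np.log10(4.37e-3 * f_low + 1.0)
    hi = 21.4 * np.log10(4.37e-3 * f_high + 1.0)
    e = np.linspace(lo, hi, n)
    return (10.0 ** (e / 21.4) - 1.0) / 4.37e-3

def gammatone_ir(fc, fs, order=4, duration=0.1, phase=0.0):
    # g_c(t) = t^(i-1) exp(-2*pi*b_c*t) cos(2*pi*f_c*t + phi_c) U(t), sampled for t >= 0
    t = np.arange(int(round(duration * fs))) / fs
    b = 1.019 * erb(fc)  # assumed bandwidth rule for a 4th-order filter
    g = t ** (order - 1) * np.exp(-2 * np.pi * b * t) * np.cos(2 * np.pi * fc * t + phase)
    # Scale so the magnitude response at fc equals 1 (assumed normalization)
    gain = np.abs(np.sum(g * np.exp(-2j * np.pi * fc * t)))
    return g / gain

def gammatone_filterbank(x, fs, n_filters=64, f_low=80.0, f_high=5000.0, order=4):
    # Returns ascending center frequencies (c = 1..N) and an (N, len(x)) array of channel outputs
    if f_high >= fs / 2:
        raise ValueError("f_high must be below the Nyquist frequency")
    x = np.asarray(x, dtype=float)
    fcs = erb_space(f_low, f_high, n_filters)
    # Causal FIR filtering: keep the first len(x) samples of the full convolution
    out = np.stack([np.convolve(x, gammatone_ir(fc, fs, order))[: x.size] for fc in fcs])
    return fcs, out
```

Each row of the output is one simulated cochlear channel. A pure tone should give its largest output in the channel whose center frequency lies closest to the tone. Direct convolution keeps the sketch short. A recursive (IIR) gammatone implementation would be far cheaper for long signals.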
