Depth enhancing method based on texture distribution characteristics

A technology based on texture distribution characteristics, applied in image enhancement, image data processing, instruments, and related fields. It addresses problems such as boundary jitter that needs to be suppressed, fluctuating depth values, and unsatisfactory filtering in boundary regions, thereby improving continuity and accuracy and facilitating wider application.


Embodiment Construction

[0014] The following examples may be used in specific embodiments. Note that the specific methods described in the implementation below (such as the Sobel operator and the least squares method) are only examples; the scope of the present invention is not limited to these methods.
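The Sobel operator named above is a standard way to measure local texture gradients. The sketch below is a minimal, illustrative implementation that computes the Sobel gradient magnitude of a grayscale texture image. It is not the patent's procedure: the function name `sobel_magnitude`, the list-of-rows image representation, and the edge-replication border rule are all assumptions made for this example.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (x) and vertical (y) derivatives.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def sobel_magnitude(img):
    """Return the Sobel gradient magnitude of a 2-D grayscale image.

    `img` is a list of equal-length rows of numbers (hypothetical format).
    Border pixels are handled by replicating the nearest edge pixel; this
    border rule is an assumption, since the source does not specify one.
    """
    h, w = len(img), len(img[0])

    def px(r, c):
        # Clamp coordinates so the 3x3 window never leaves the image.
        r = min(max(r, 0), h - 1)
        c = min(max(c, 0), w - 1)
        return img[r][c]

    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            gx = gy = 0
            # Kernels are applied as correlation (not flipped); the flip
            # only changes the sign of gx and gy, so the magnitude is the same.
            for i in range(3):
                for j in range(3):
                    v = px(r + i - 1, c + j - 1)
                    gx += SOBEL_X[i][j] * v
                    gy += SOBEL_Y[i][j] * v
            out[r][c] = math.hypot(gx, gy)
    return out
```

For a vertical step edge such as `[[0, 0, 10, 10]] * 4`, every row of the result is `[0.0, 40.0, 40.0, 0.0]`. The strong responses sit on the two columns that straddle the edge, which is the kind of texture-boundary cue a depth enhancement method can use to locate boundary regions.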

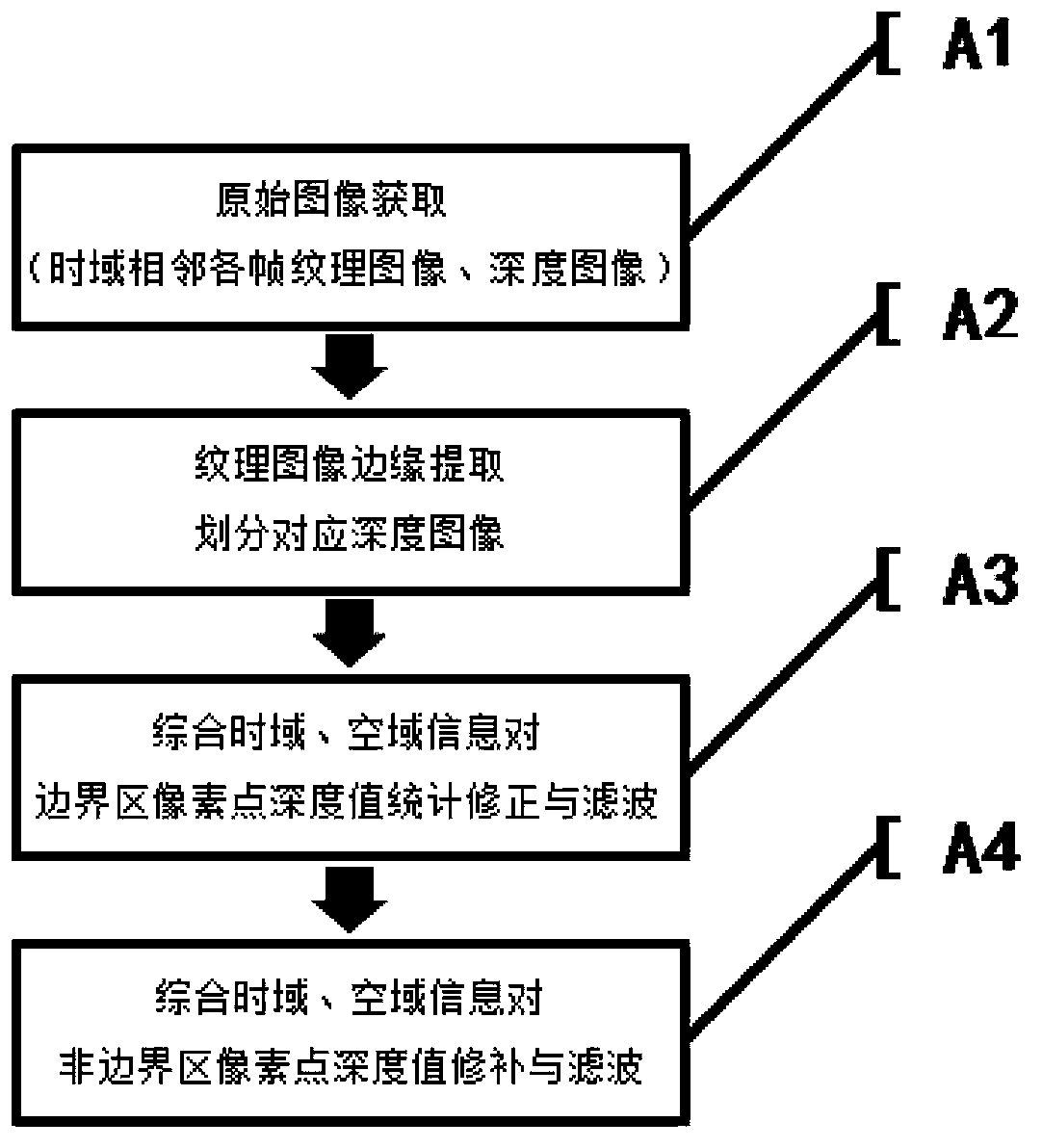

[0015] A1: Multiple temporally adjacent texture images and their corresponding depth images are captured by the color camera and the depth camera, respectively, of a low-end depth sensor. Taking Kinect as an example, data are collected at 60 FPS. When the camera is used with motion at ordinary speed, the current frame is therefore highly correlated in the time domain with its preceding and following frames. As a result, subsequent processing has enough image information to ensure effective image enhancement in the time domain. Although the depth image enhancement in the time...
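The paragraph above argues from the frame rate that adjacent frames are highly correlated. The sketch below illustrates that reasoning under stated assumptions. At 60 FPS, consecutive frames are about 16.7 ms apart. The sketch groups each frame with its previous and next neighbours and measures inter-frame similarity with the Pearson correlation coefficient. The helper names (`frame_interval_ms`, `pearson`, `temporal_windows`) and the choice of Pearson correlation as the similarity measure are hypothetical illustrations, not the method described in the patent.

```python
import math


def frame_interval_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def pearson(a, b):
    """Pearson correlation between two equally sized frames (lists of rows)."""
    xa = [v for row in a for v in row]
    xb = [v for row in b for v in row]
    if len(xa) != len(xb):
        raise ValueError("frames must have the same size")
    n = len(xa)
    ma, mb = sum(xa) / n, sum(xb) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(xa, xb))
    va = sum((p - ma) ** 2 for p in xa)
    vb = sum((q - mb) ** 2 for q in xb)
    if va == 0 or vb == 0:
        # Correlation is undefined when a frame is perfectly constant.
        raise ValueError("correlation undefined for a constant frame")
    return cov / math.sqrt(va * vb)


def temporal_windows(frames):
    """Yield (previous, current, next) triples; missing neighbours are None."""
    for i, cur in enumerate(frames):
        prev = frames[i - 1] if i > 0 else None
        nxt = frames[i + 1] if i + 1 < len(frames) else None
        yield prev, cur, nxt
```

For example, `frame_interval_ms(60)` is roughly 16.67 ms. A correlation close to 1.0 between a frame and its neighbour indicates that the neighbour carries largely redundant, and therefore usable, information for temporal enhancement.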
