Kinect depth video spatio-temporal joint restoration method

A joint spatio-temporal repair technique for depth video, applied in the field of 3D rendering. It addresses the problem that existing depth repair does not consider the influence of reflective and dark areas, and it achieves a good repair effect, repaired regions that are easy to distinguish, and an obvious hole repair effect.

Embodiment Construction

[0022] The present invention is further described below with reference to the accompanying drawings and a specific example:

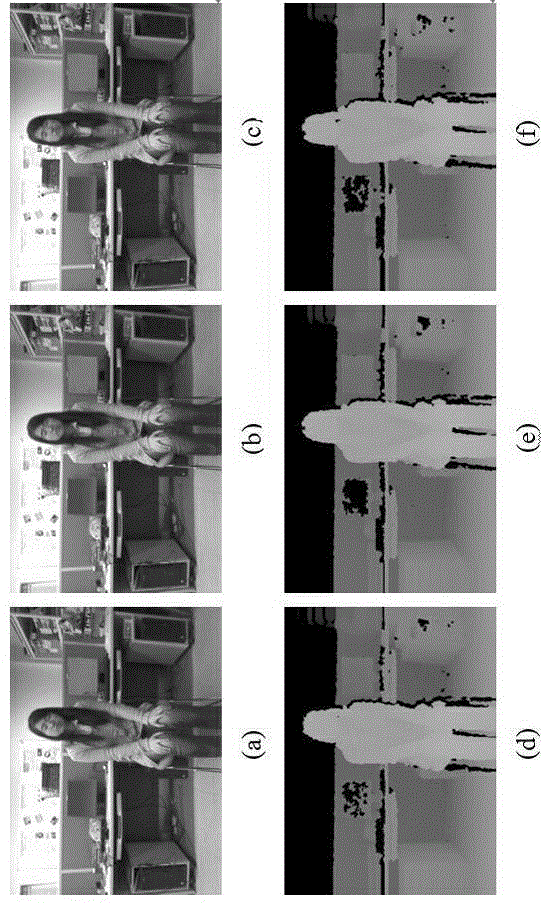

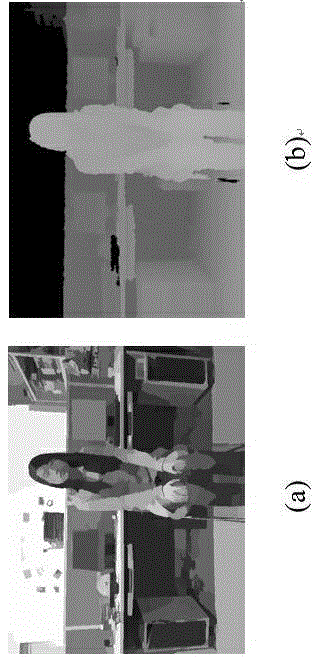

[0023] The proposed method is evaluated on a depth video sequence and the corresponding color video sequence captured by a Kinect. The video sequence consists of 100 frames with an image resolution of 640×480. All examples use MATLAB 7 as the simulation experiment platform.

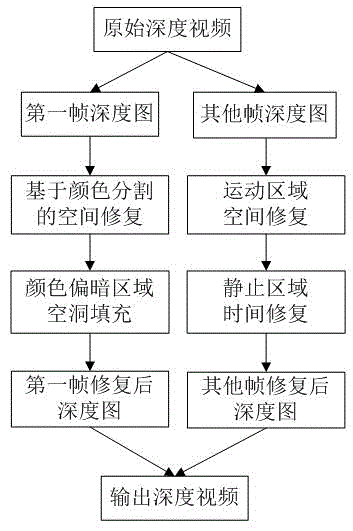

[0024] The flow chart of the present invention is shown in Figure 1. For the depth map of the first frame, only spatial inpainting is used: the color segmentation map of the corresponding color image guides the initial depth filling, and holes in darker color areas are then repaired to further improve the depth map quality. For every depth map after the first frame, the motion area is extracted first and repaired spatially on its own, and then the depth value of the corresponding position of t...
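The first-frame filling step and the motion-area extraction described above can be illustrated with a minimal Python sketch. It is not the patented implementation, and everything in it is an assumption made for illustration: the function names `fill_holes_by_segment` and `motion_mask`, the use of 0 as the hole marker, the per-segment median as the fill value, and simple frame differencing with a fixed threshold as the motion detector. The source does not state how the segmentation, the fill value, or the motion area is computed, and the dark-area repair and the temporal step are not shown.

```python
from statistics import median

HOLE = 0  # assumption: missing depth measurements are stored as 0


def fill_holes_by_segment(depth, labels):
    """Fill hole pixels with the median valid depth of their color segment.

    depth  -- 2D list of depth values, HOLE marks a missing measurement
    labels -- 2D list of the same shape with one color-segment id per pixel
    """
    # Collect the valid depth samples of every color segment.
    samples = {}
    for depth_row, label_row in zip(depth, labels):
        for d, seg in zip(depth_row, label_row):
            if d != HOLE:
                samples.setdefault(seg, []).append(d)

    seg_median = {seg: median(vals) for seg, vals in samples.items()}

    # Build a new map; holes in segments without valid samples stay holes.
    return [
        [seg_median.get(seg, HOLE) if d == HOLE else d
         for d, seg in zip(depth_row, label_row)]
        for depth_row, label_row in zip(depth, labels)
    ]


def motion_mask(prev_gray, curr_gray, threshold=15):
    """Mark pixels whose gray level changed by more than `threshold`.

    Assumption: plain frame differencing on the color video (as gray
    levels) stands in for the unspecified motion-area extraction.
    """
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_gray, curr_gray)
    ]


# Tiny example: two color segments, one hole in each.
depth = [[800, 0, 1500],
         [810, 805, 0]]
labels = [[1, 1, 2],
          [1, 1, 2]]
filled = fill_holes_by_segment(depth, labels)

# Only the second pixel changes between the two frames.
mask = motion_mask([[10, 10]], [[10, 60]])
```

The median is used here because it is less sensitive than the mean to the depth outliers that tend to appear near object boundaries. A real 640×480 pipeline would more likely operate on arrays than on nested lists.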
