Multi-level Cache-based data reading/writing method and device, and computer system

A data reading and caching technology, applied in the fields of memory systems, data processing input/output, and computing, that addresses the problem of low cache access efficiency: it reduces the cache miss rate, raises the cache hit rate, and improves cache access efficiency.

Examples

Embodiment 1

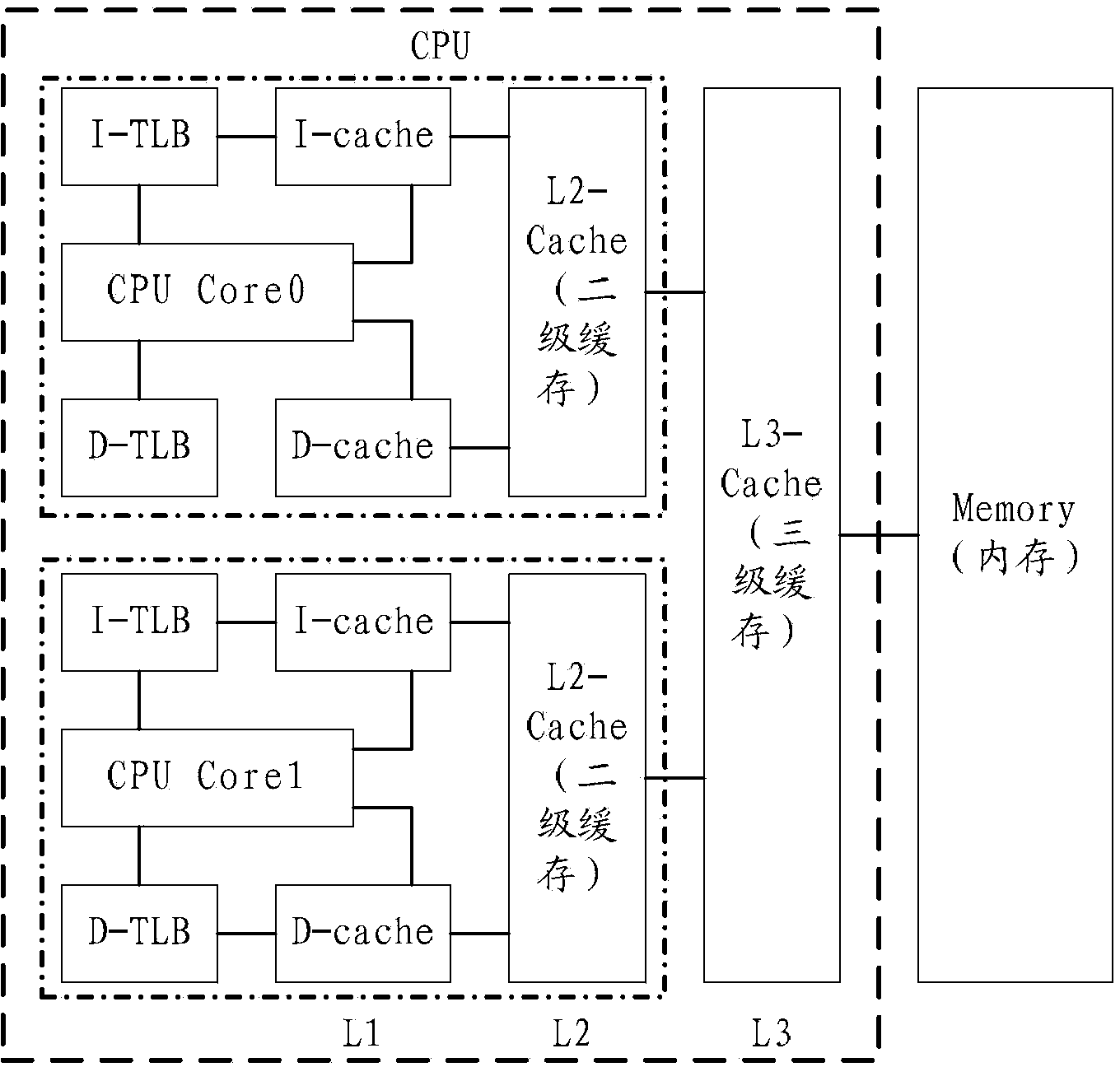

[0108] The following provides a specific implementation of the multi-level cache-based data reading method for the computer system described above.

[0109] In the multi-level cache-based data reading method provided by this embodiment, the attribute information of the memory page further includes cache location attribute information of the physical memory page, and the cache location attribute information is set according to how the physical memory page is accessed.

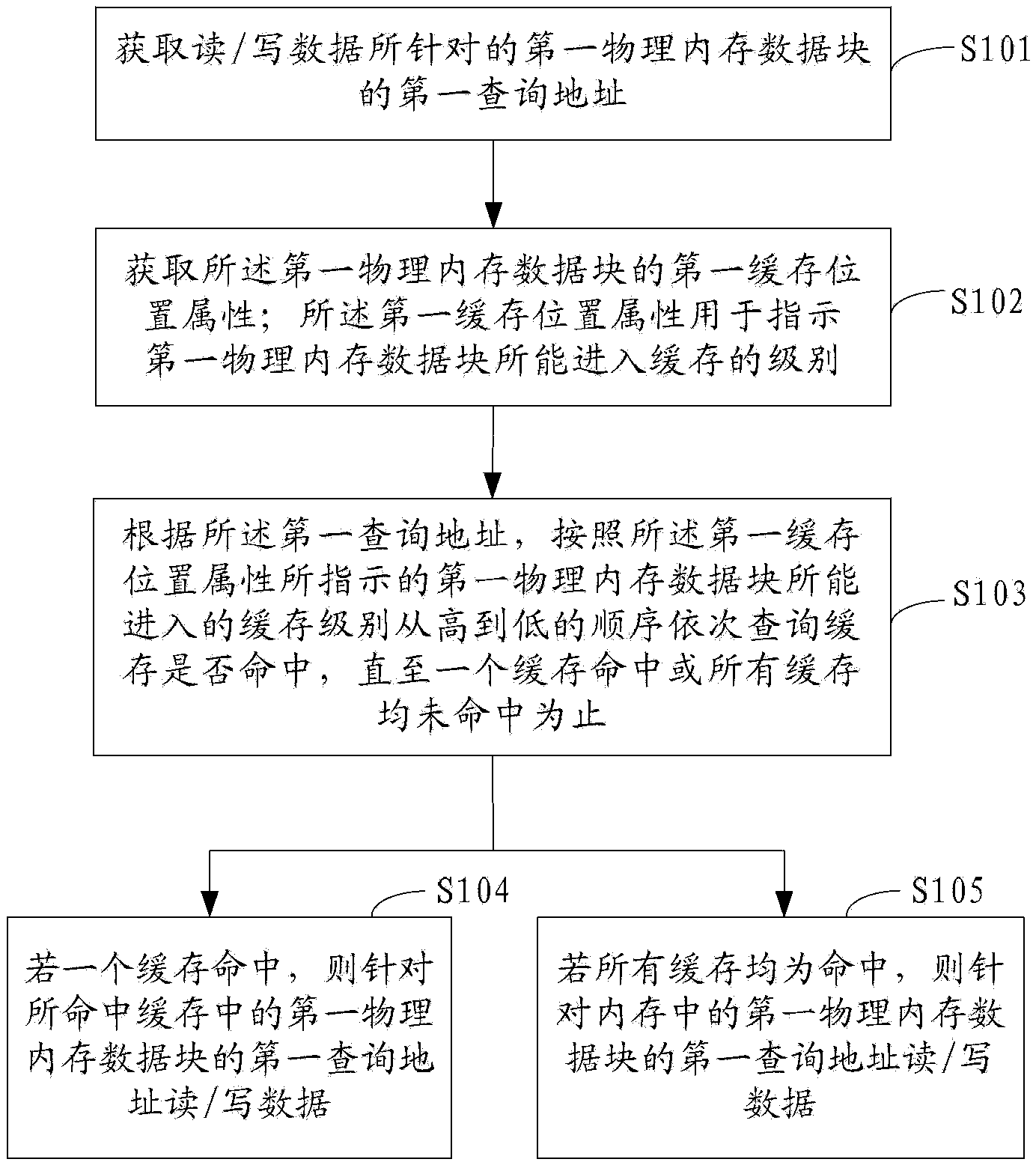

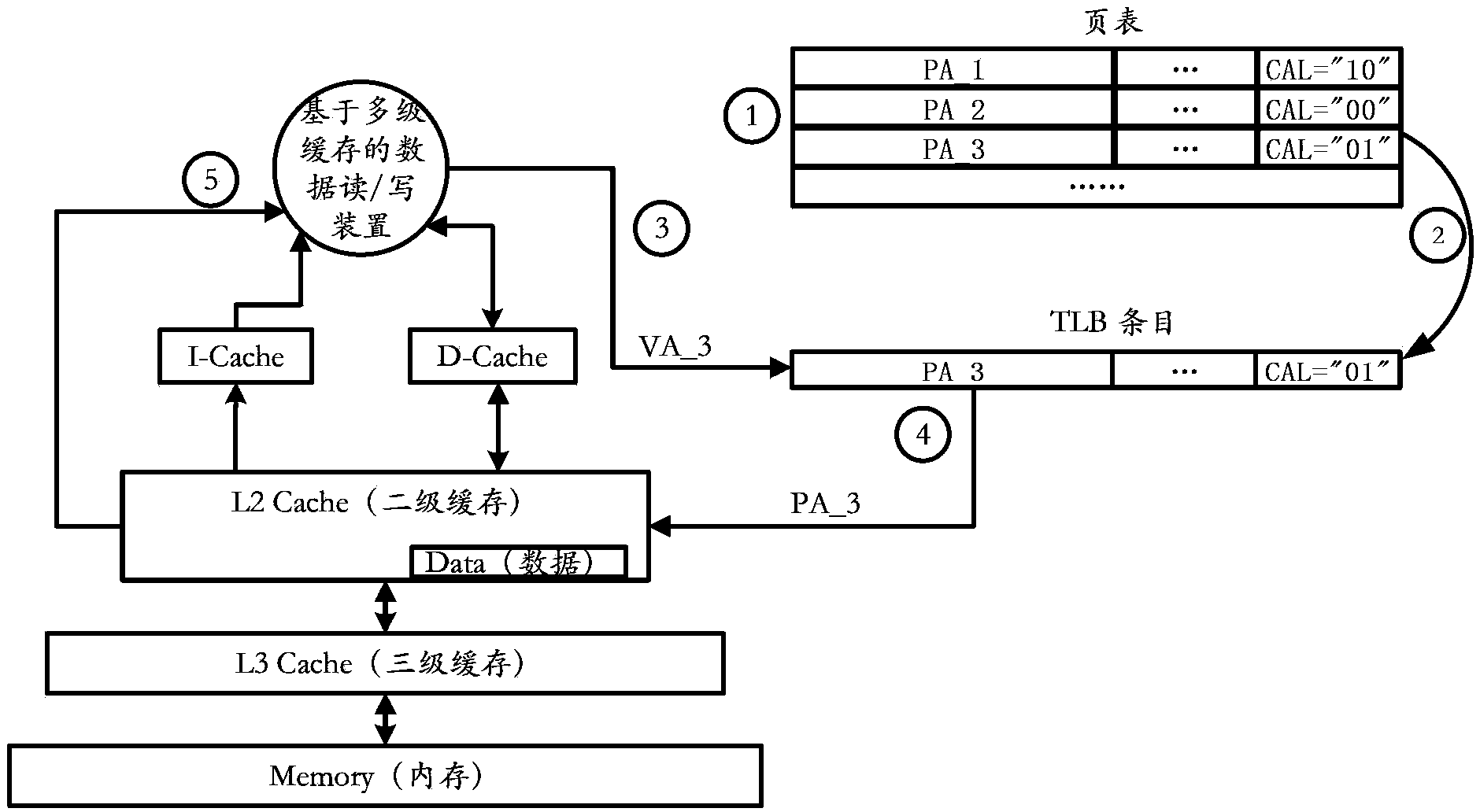

[0110] As shown in image 3, the data reading method based on multi-level cache specifically includes the following steps:

[0111] Step 1. Set the cache location attribute of the memory page.

[0112] In this specific embodiment, according to how the physical memory page is accessed, some bits are added to the page table to hold the cache access level (CAL, Cache Access Level) information, and the CAL information is used to identify that the memory page can enter the highest ac...
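As a rough illustration of adding a few CAL bits to a page table entry, the sketch below packs a small field into an integer entry and reads it back. The excerpt does not show how the CAL bits are laid out or what each value means, so the field width (`CAL_WIDTH`), the bit position (`CAL_SHIFT`), and the helper names `set_cal` and `get_cal` are all hypothetical choices made only for this example.

```python
# Hypothetical layout: a 2-bit CAL field placed at bits 9-10 of a
# page table entry. Position and width are assumptions for illustration;
# the source text does not specify them.
CAL_SHIFT = 9
CAL_WIDTH = 2
CAL_MASK = ((1 << CAL_WIDTH) - 1) << CAL_SHIFT


def set_cal(pte: int, cal: int) -> int:
    """Return a copy of the entry with its CAL field replaced by `cal`."""
    if not 0 <= cal < (1 << CAL_WIDTH):
        raise ValueError("CAL value does not fit in the field")
    # Clear the old field, then OR in the new value; other bits are untouched.
    return (pte & ~CAL_MASK) | (cal << CAL_SHIFT)


def get_cal(pte: int) -> int:
    """Extract the CAL field from a page table entry."""
    return (pte & CAL_MASK) >> CAL_SHIFT
```

Keeping the field inside the entry means the CAL value travels with the address translation, so no separate lookup is needed to obtain it.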

Embodiment 2

[0124] This embodiment addresses the case where the L2Cache misses in step ④ of Embodiment 1. The first 4 steps are the same as the first 4 steps in Embodiment 1 and are not repeated here.

[0125] If the L2Cache misses in step ④ of Embodiment 1, then, as shown in Figure 4, this embodiment further includes:

[0126] Step ⑤: The multi-level cache-based data read/write device in the CPU Core uses the high-order bits PA_3 of the physical address to query whether the next-level L3Cache hits.

[0127] The multi-level cache-based data read/write device in the CPU Core compares the high-order bits PA_3 of the physical address with the Tag in the L3Cache to determine whether the L3Cache hits.

[0128] If the L3Cache hits, proceed to step ⑥; if the L3Cache misses, proceed to step ⑦.
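The tag comparison in step ⑤ can be sketched as follows. This is a minimal model, not the patented device: it assumes a direct-mapped cache with 64-byte lines and 1024 sets, and the names `split_address` and `DirectMappedCache` are hypothetical. The `tag` returned by `split_address` plays the role of the high-order bits PA_3, and `hit` performs the comparison against the stored Tag.

```python
LINE_BITS = 6    # assumed 64-byte cache lines
INDEX_BITS = 10  # assumed 1024 sets, direct-mapped for brevity


def split_address(pa: int):
    """Split a physical address into (tag, index, offset)."""
    offset = pa & ((1 << LINE_BITS) - 1)
    index = (pa >> LINE_BITS) & ((1 << INDEX_BITS) - 1)
    tag = pa >> (LINE_BITS + INDEX_BITS)  # high-order bits, compared with the Tag
    return tag, index, offset


class DirectMappedCache:
    """Tracks only the stored tags; data storage is omitted."""

    def __init__(self):
        self.tags = [None] * (1 << INDEX_BITS)

    def fill(self, pa: int) -> None:
        """Record that the line containing `pa` is now cached."""
        tag, index, _ = split_address(pa)
        self.tags[index] = tag

    def hit(self, pa: int) -> bool:
        """Compare the address's high-order bits with the stored tag."""
        tag, index, _ = split_address(pa)
        return self.tags[index] == tag
```

A caller would branch on `hit`: on a hit, continue with step ⑥; on a miss, continue with step ⑦.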

[0129] Step ⑥: If the L3Cache hits, the data to be read is transmitted to the multi-level cache-based data read/write device in the CPU Core through...

Embodiment 3

[0133] The following provides a specific implementation of the multi-level cache-based data writing method for the computer system described above.

[0134] This embodiment uses the same memory page cache location attribute setting as Embodiment 1, that is, the same page table. Therefore, when the multi-level cache-based data read/write device in the CPU Core initiates a data write request, the first 4 steps are the same as the first 4 steps in Embodiment 1; the only difference is that the request for reading data becomes a request for writing data, so they are not described again here. If the L2Cache hits in step ④, then, as shown in Figure 5, this embodiment further includes:

[0135] Step ⑤: Write data into L2Cache.

[0136] Further, if the data written in step ⑤ is shared data, the following steps can also be performed in order to maintain the consistency of the shared data.

[0137] Step ⑥: The multi-level cache-based data read...
