82 results for "Cache locality" patented technology

Cache locality: in the Generic SQL Adapter (GSA), the cache locality setting controls how the GSA uses in-memory caching. Specifically, it determines whether the GSA caches data with its own caching functionality, with the caching functionality of the Oracle Coherence distributed cache application, or with a mix of both.

Block synchronous decoding

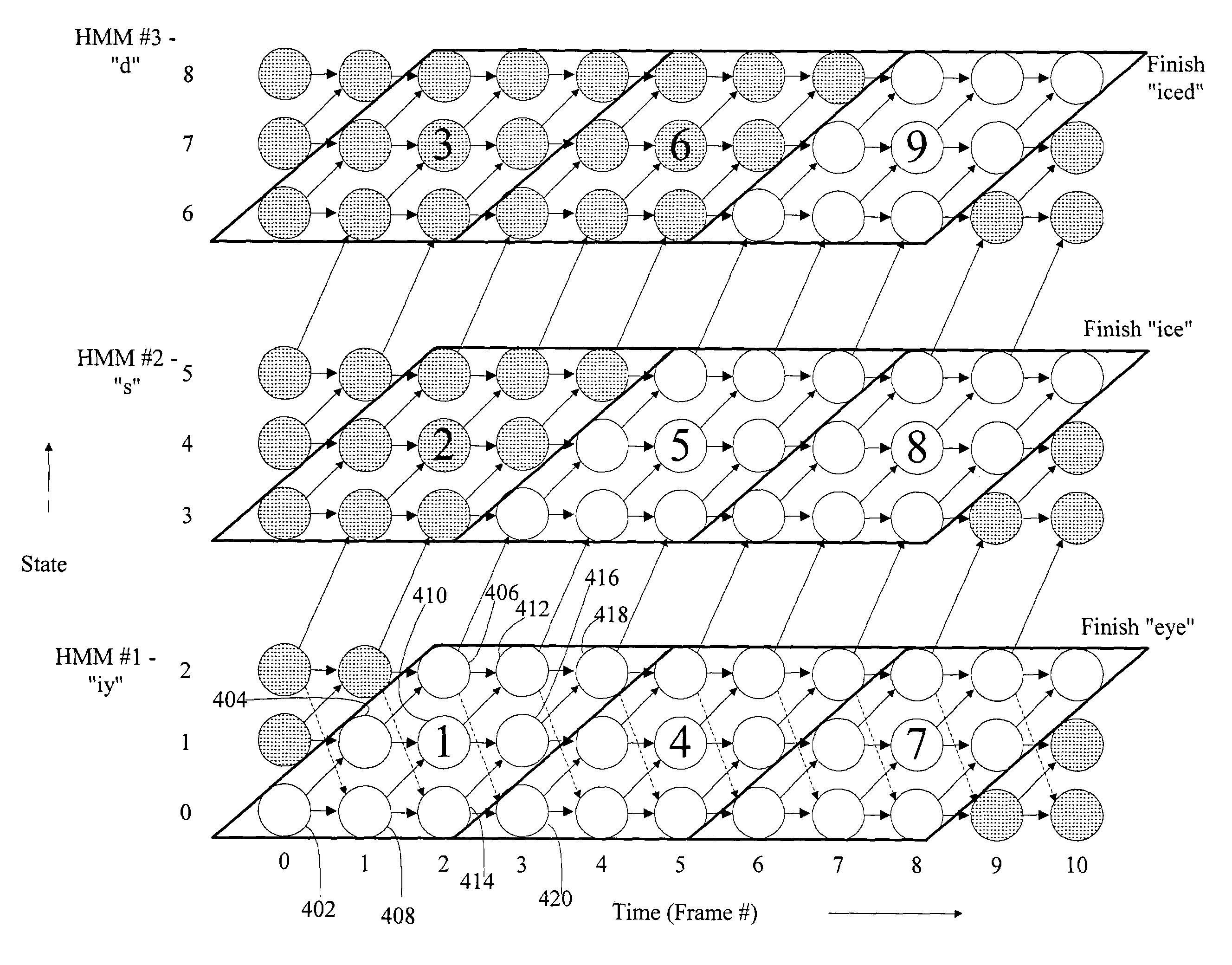

Status: Inactive · Publication No.: US7529671B2 · Tags: Improved pattern recognition speed; Improve cache locality; Hidden Markov model; Cache locality

A pattern recognition system and method are provided. Aspects of the invention are particularly useful in combination with multi-state Hidden Markov Models. Pattern recognition is effected by processing Hidden Markov Model Blocks. This block-processing allows the processor to perform more operations upon data while such data is in cache memory. By so increasing cache locality, aspects of the invention provide significantly improved pattern recognition speed.

Owner:MICROSOFT TECH LICENSING LLC
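
The abstract states only the principle: do more work on data while it is still in cache. As a hedged illustration, the sketch below swaps in generic loop blocking for HMM emission scoring; it is not the patented decoder. It assumes a hypothetical diagonal-Gaussian model per state (`log_emission`, `scores_blocked` and the `block` size are illustrative names) and shows only the access order, since pure Python will not exhibit the hardware cache speedup.

```python
import math

def log_emission(frame, mean, var):
    """Log-likelihood of one feature frame under a diagonal Gaussian (assumed model)."""
    return -0.5 * sum(
        math.log(2 * math.pi * v) + (x - m) ** 2 / v
        for x, m, v in zip(frame, mean, var)
    )

def scores_frame_by_frame(frames, states):
    # Frame-outer order: every state's parameters are touched again for every frame.
    return [[log_emission(f, m, v) for (m, v) in states] for f in frames]

def scores_blocked(frames, states, block=4):
    # Block-outer order: each state's (mean, var) is reused across a whole block
    # of frames while it is likely still resident in cache.
    out = [[0.0] * len(states) for _ in frames]
    for start in range(0, len(frames), block):
        chunk = range(start, min(start + block, len(frames)))
        for s, (m, v) in enumerate(states):
            for t in chunk:
                out[t][s] = log_emission(frames[t], m, v)
    return out
```

Both functions return identical scores; only the loop order differs.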

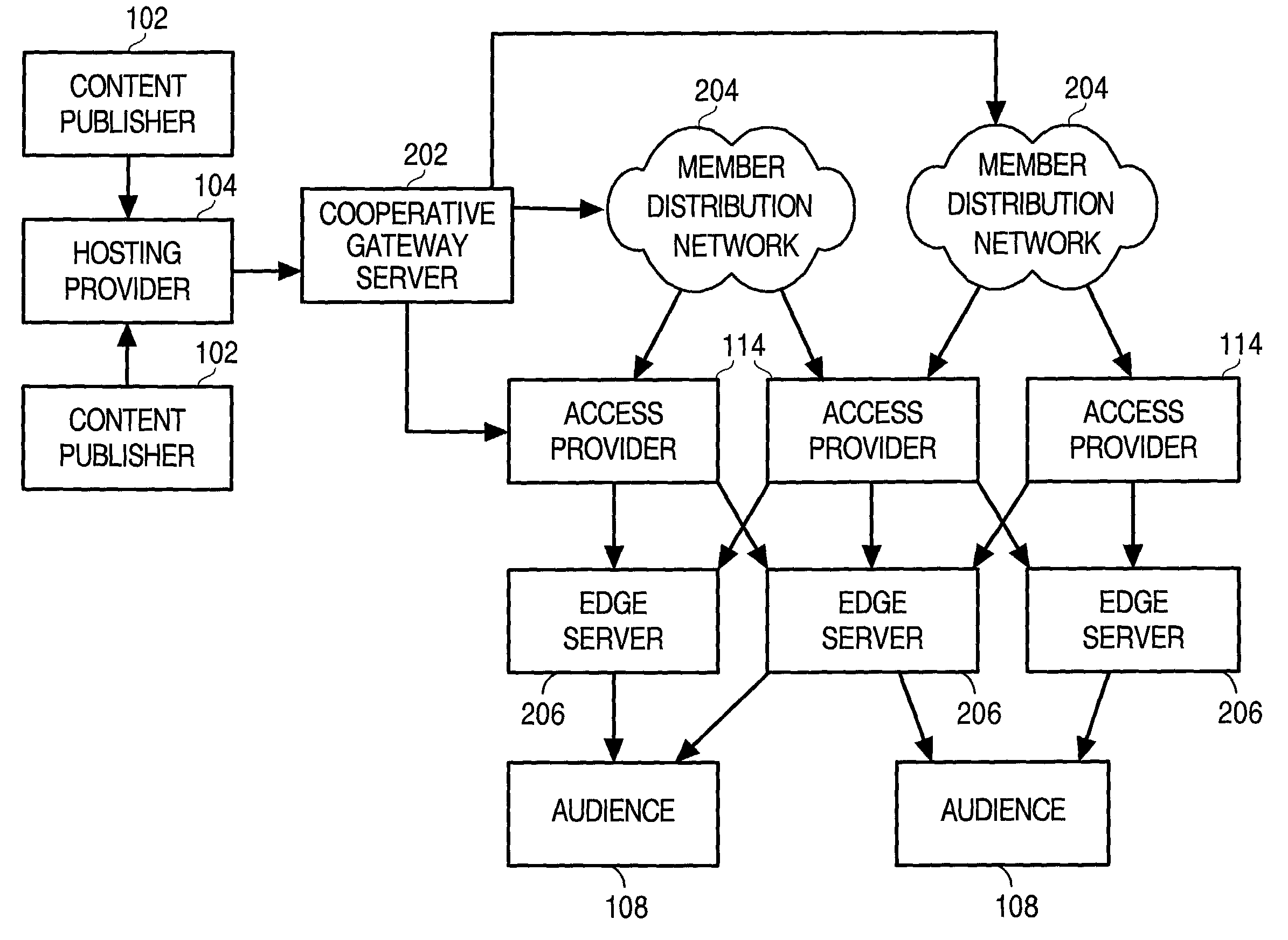

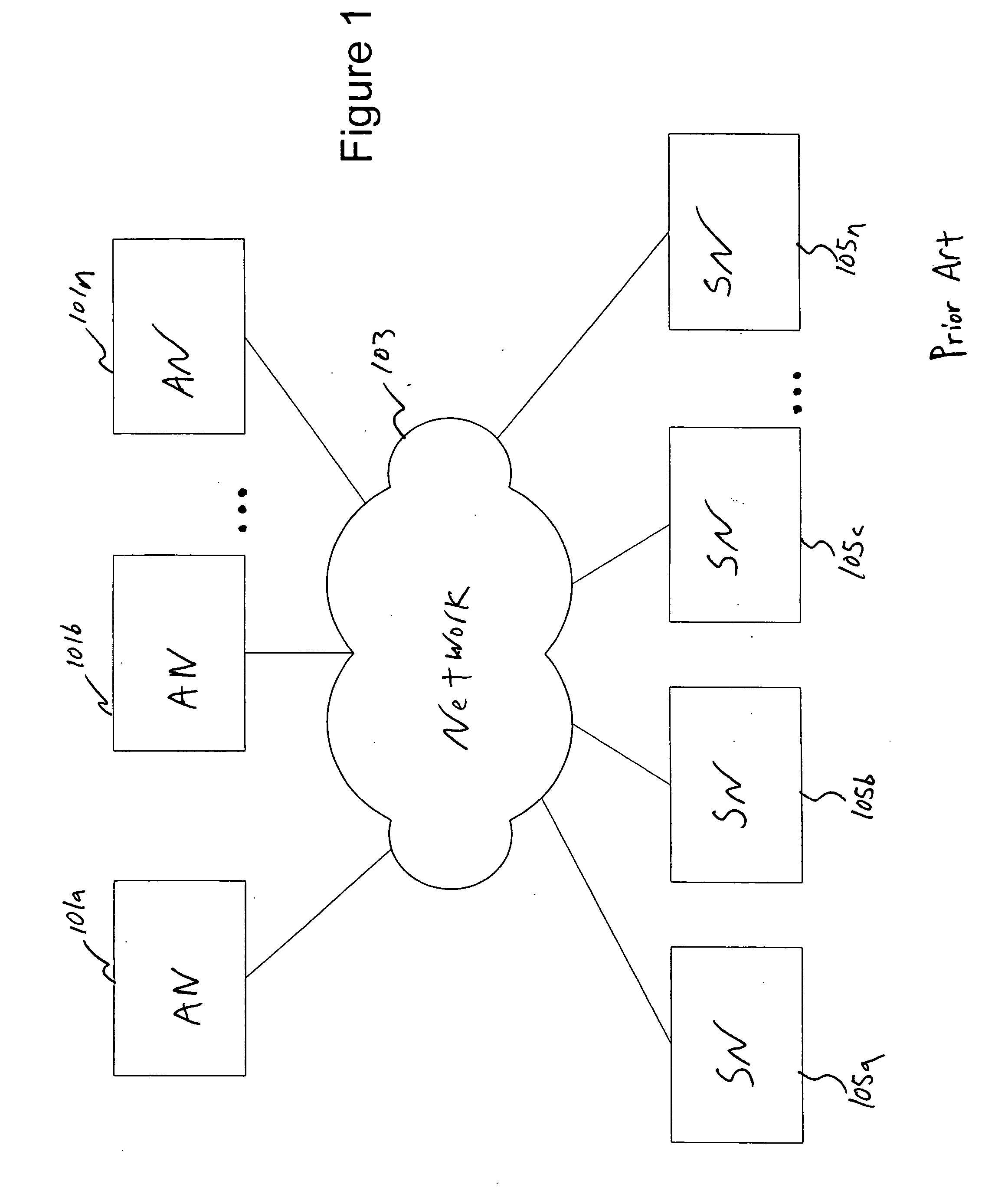

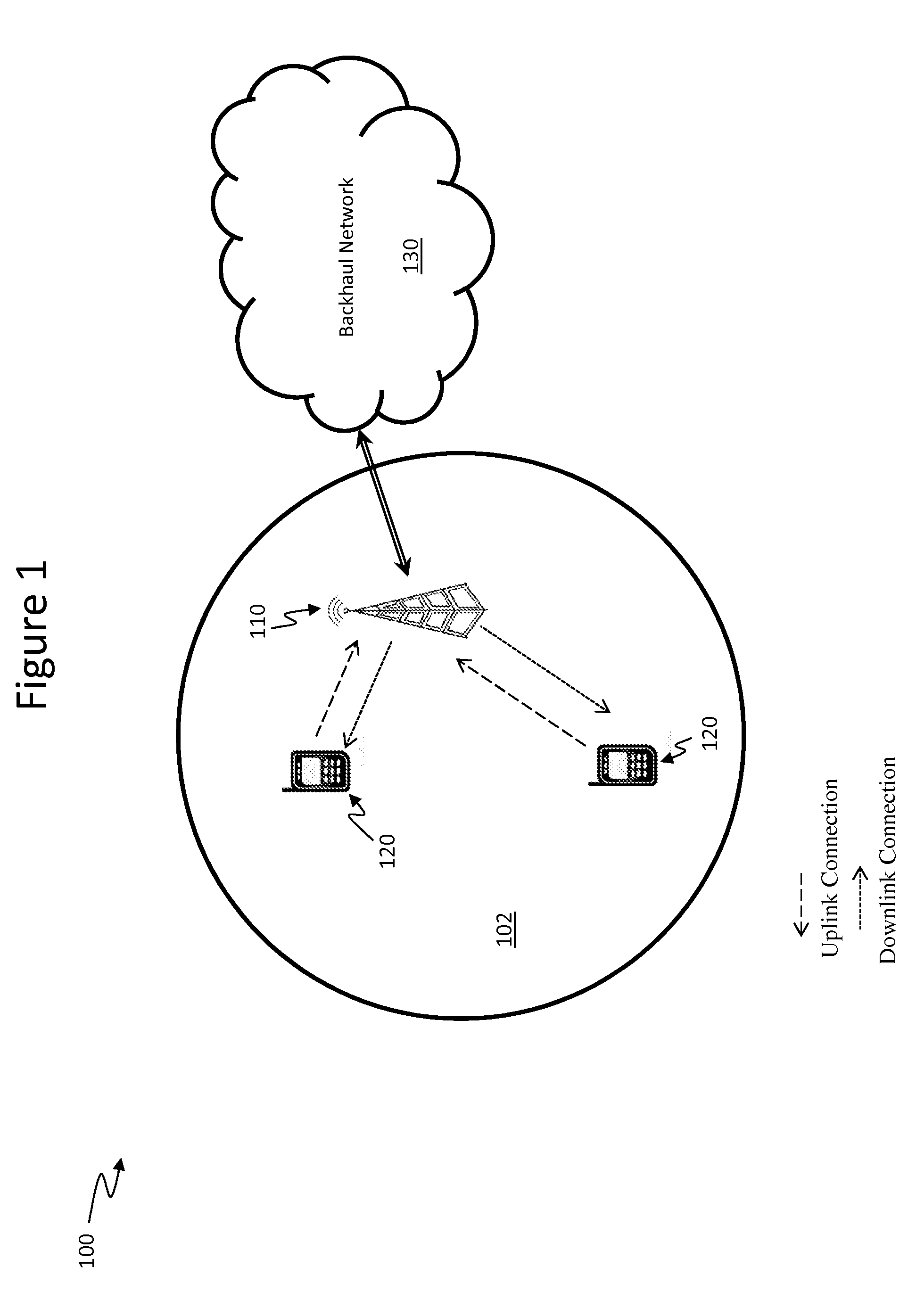

Cooperative management of distributed network caches

Status: Inactive · Publication No.: US7117262B2 · Tags: Multiple digital computer combinations; Securing communication; Internet content; Control signal

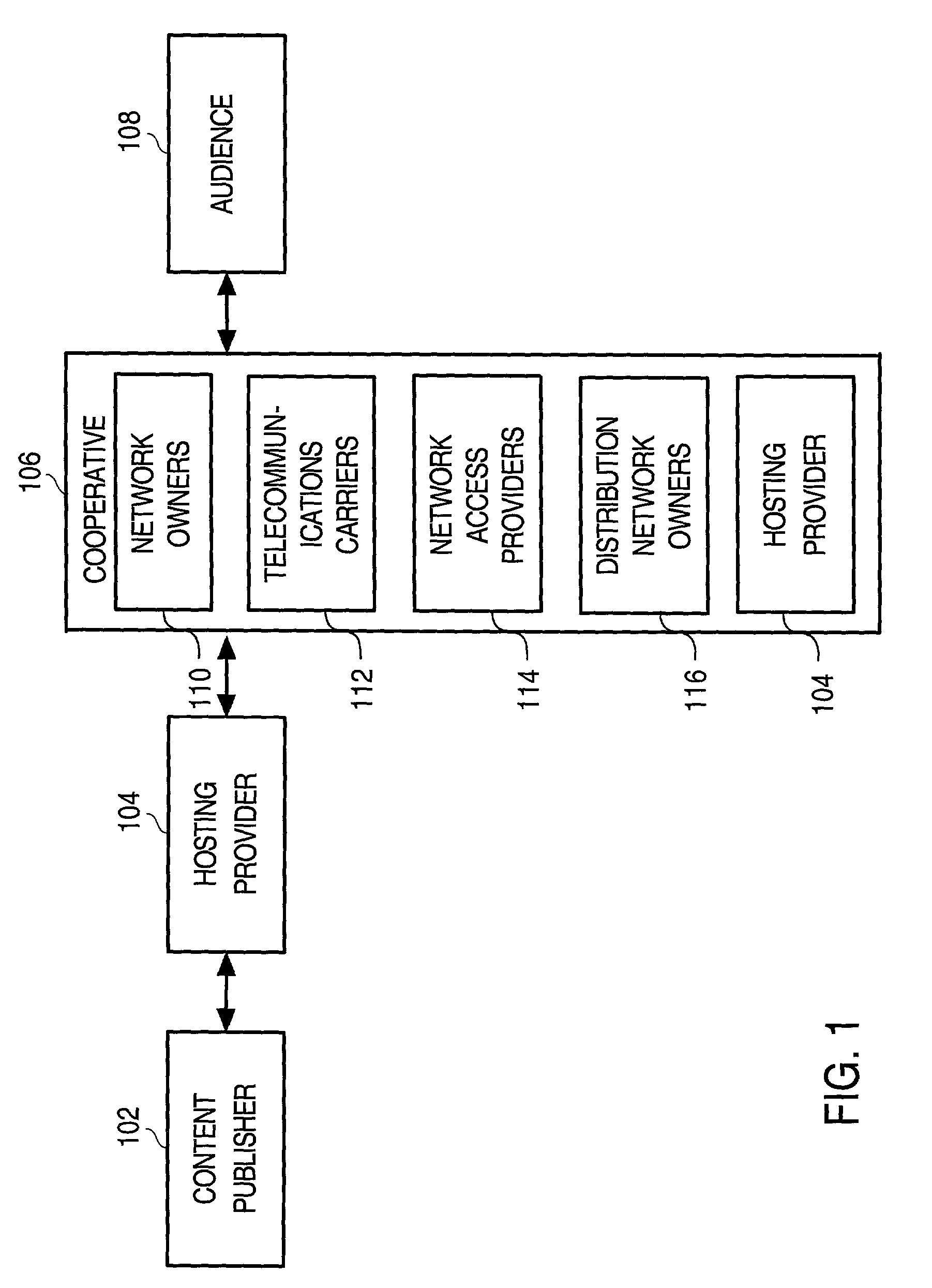

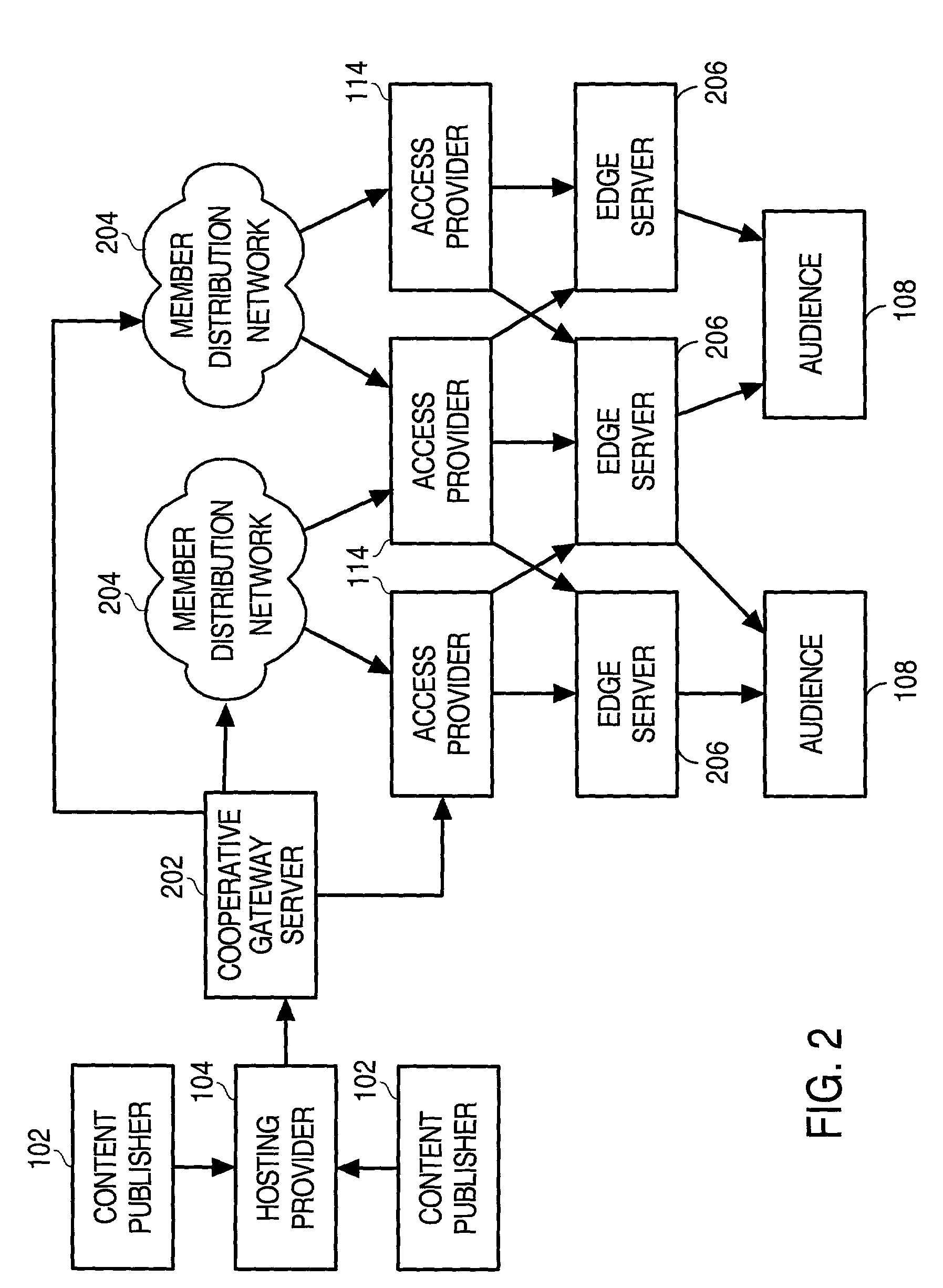

The techniques described employ a cooperative organization of network service providers to provide improved distributed network services. The network service providers that are constituent to the cooperative organization represent various perspectives within the overall Internet content distribution network, and may include network owners, telecommunications carriers, network access providers, hosting providers and distribution network owners, the latter being an entity that caches content at a plurality of locations distributed on the network. Aspects include managing content caches by receiving control signals specifying actions related to cached content that is distributed on a network, such as the Internet, and forwarding the control signals through to the caching locations to implement the actions represented by the control signals, thus providing content publishers the capability of refreshing their content regardless of where it is cached. Aspects include managing content caches by receiving activity records that contain statistics related to requests for cached content, segregating the statistics according to which content publisher provides the content associated with the statistics, and providing to each content publisher statistics corresponding to content provided by that content publisher, thus allowing them to monitor requests for their content regardless of where it may be cached on the network.

Owner:R2 SOLUTIONS
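
A minimal sketch of the two management paths described above, assuming a hypothetical `ActivityRecord` shape: fanning a publisher's control signal out to every caching location, and segregating request counts by publisher so that each publisher sees only statistics for its own content. Transport, authentication and the real record format are not given in the abstract and are omitted.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class ActivityRecord:
    cache_site: str   # caching location that served the requests (hypothetical field)
    url: str          # cached content item
    publisher: str    # content publisher that owns the item
    requests: int     # number of requests observed at that site

def forward_control_signal(action, url, cache_sites):
    """Fan one control signal (e.g. 'refresh') out to every caching location."""
    return [(site, action, url) for site in cache_sites]

def segregate_by_publisher(records):
    """Sum request counts per publisher and URL; each publisher gets only its own slice."""
    per_publisher = defaultdict(lambda: defaultdict(int))
    for r in records:
        per_publisher[r.publisher][r.url] += r.requests
    return {pub: dict(urls) for pub, urls in per_publisher.items()}
```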

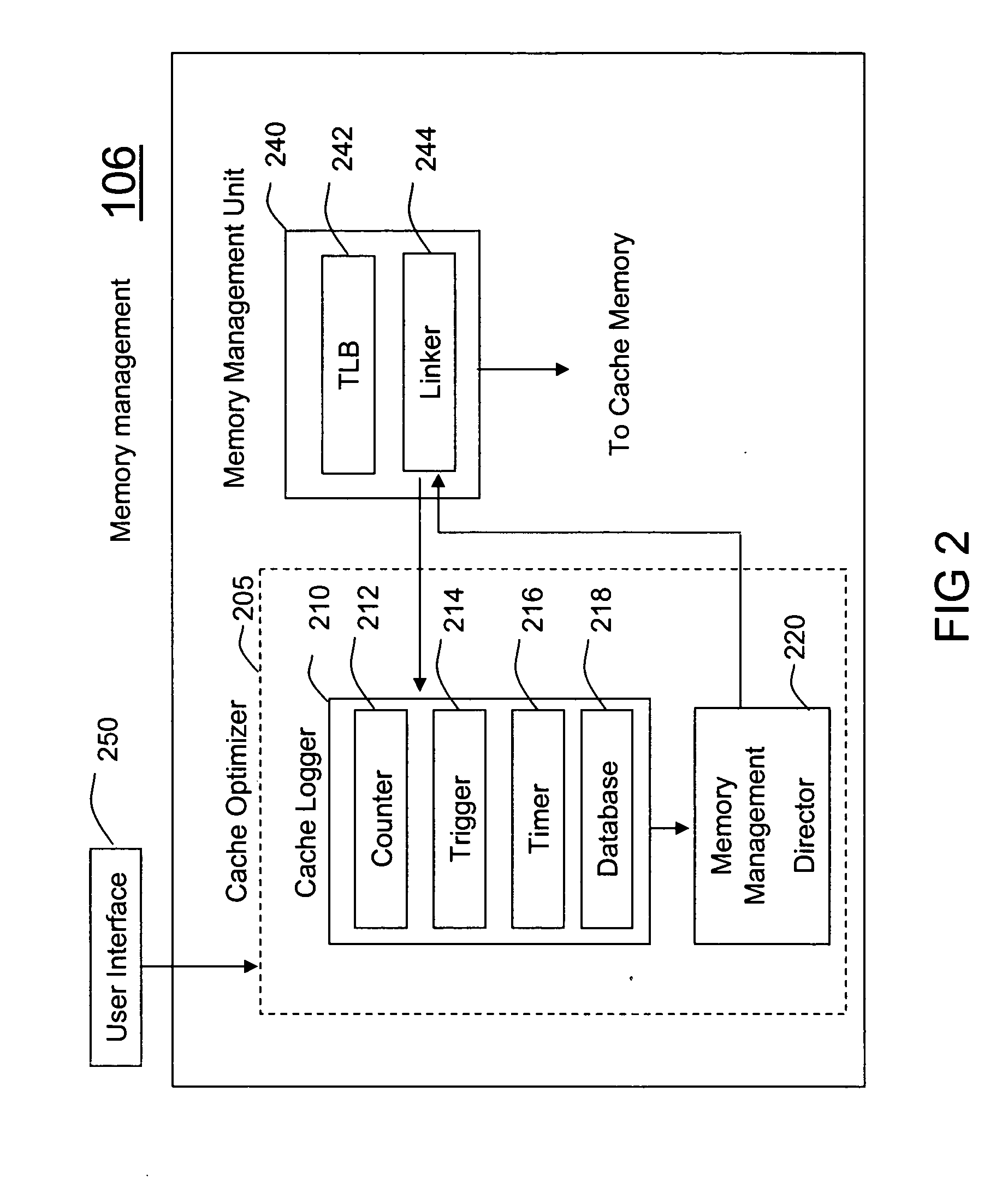

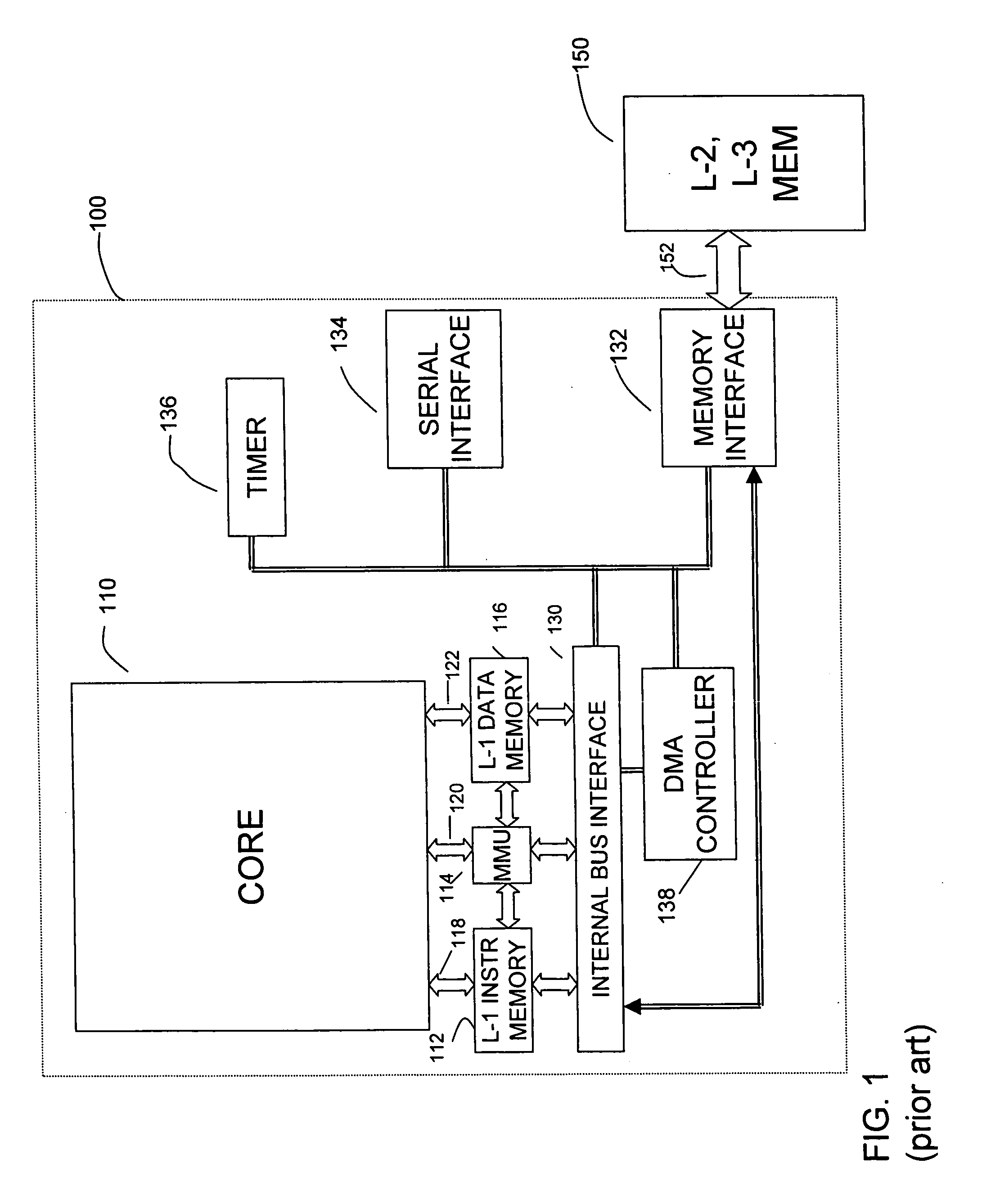

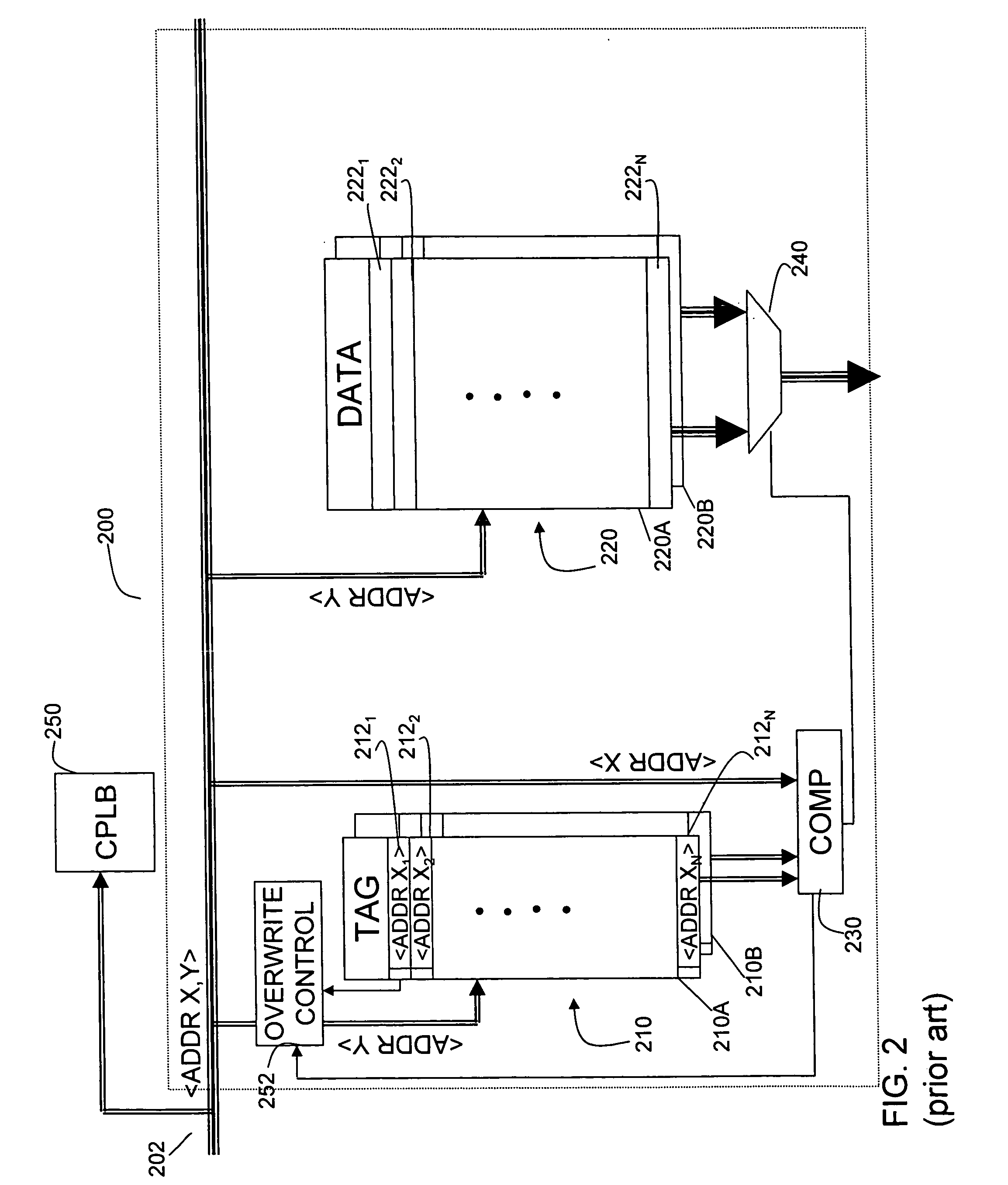

Method and system for run-time cache logging

Status: Inactive · Publication No.: US20070150881A1 · Tags: Increase height; Maximize cache locality compile time; Error detection/correction; Specific program execution arrangements; Cache optimization; Parallel computing

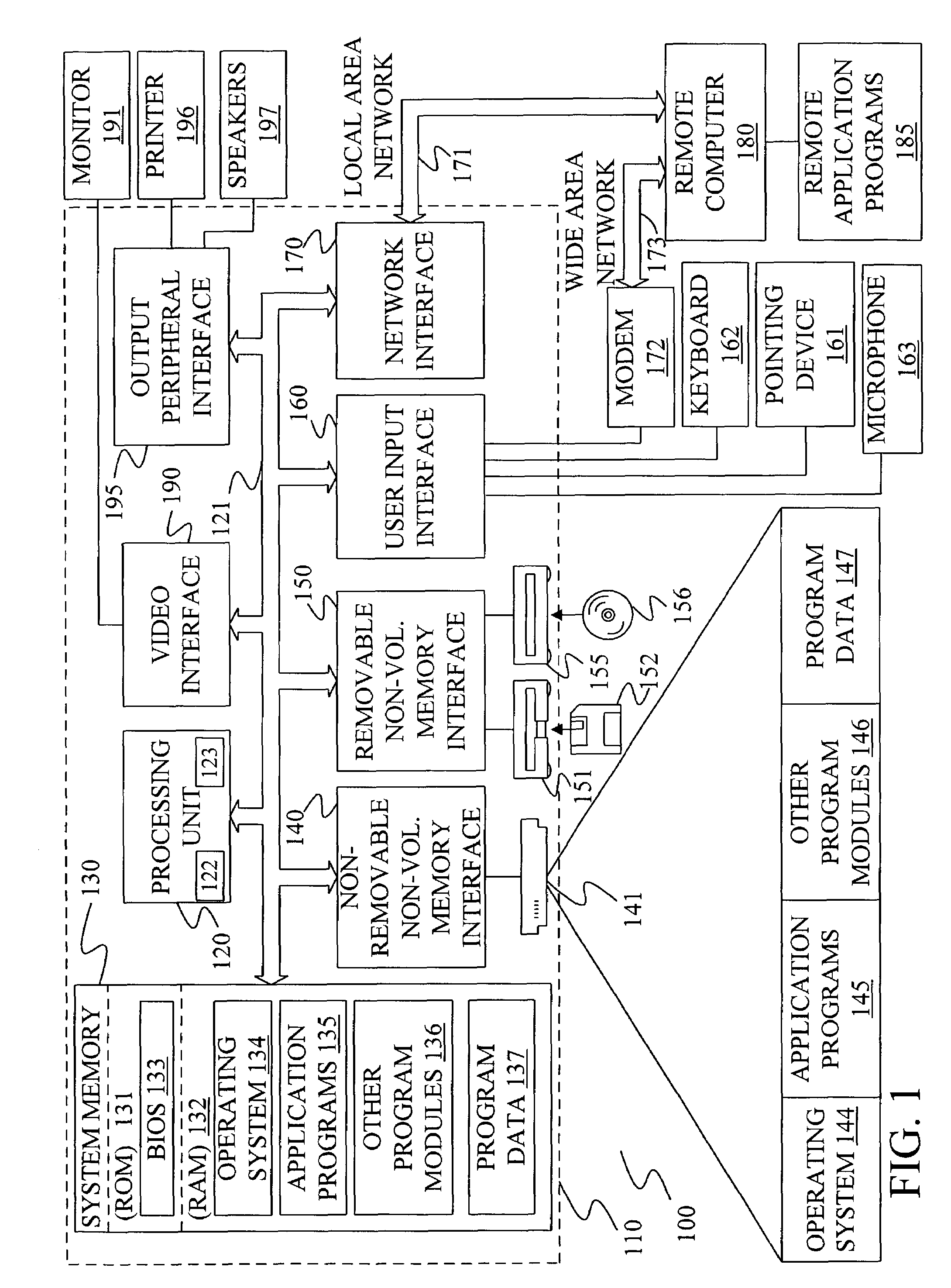

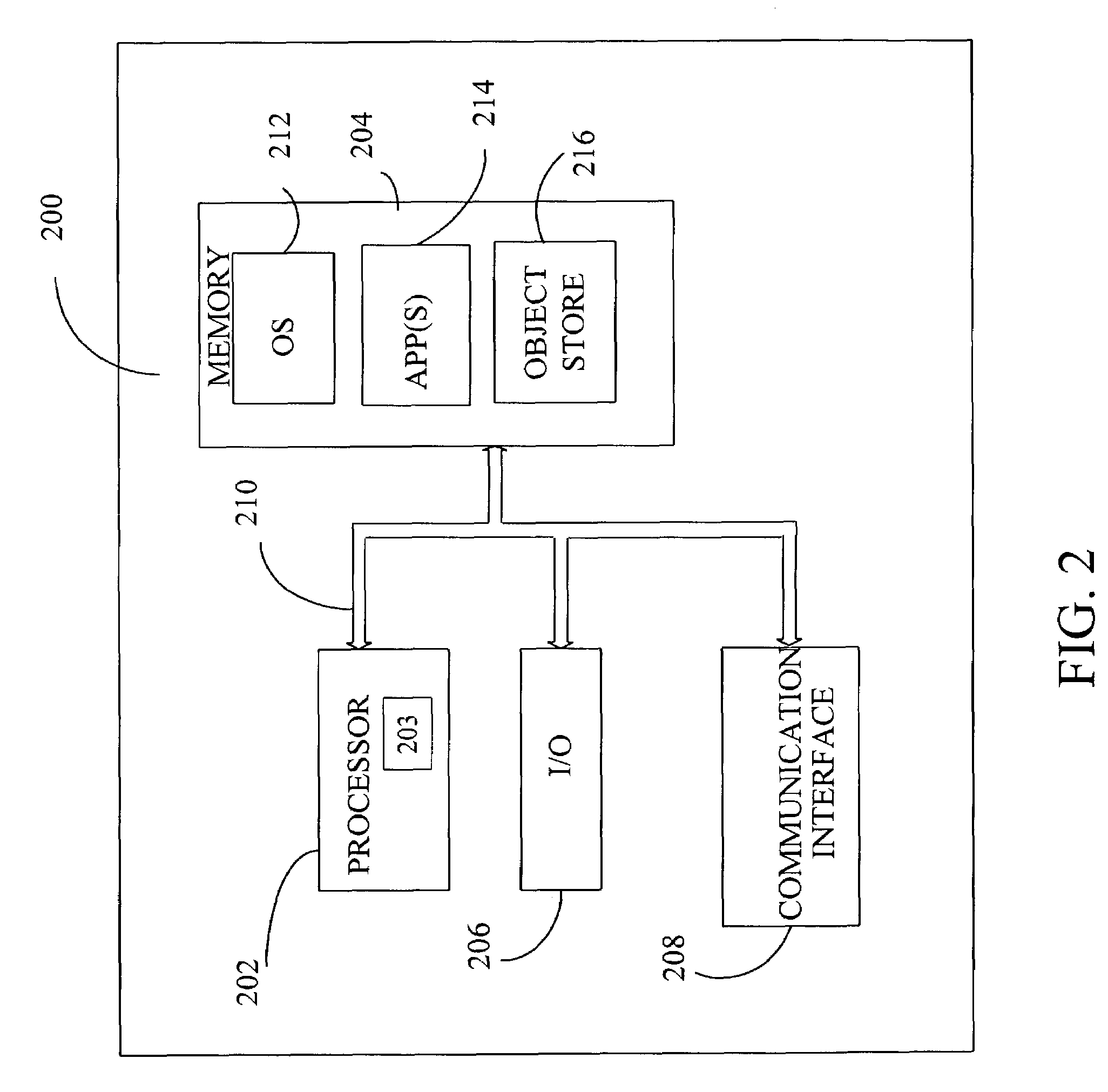

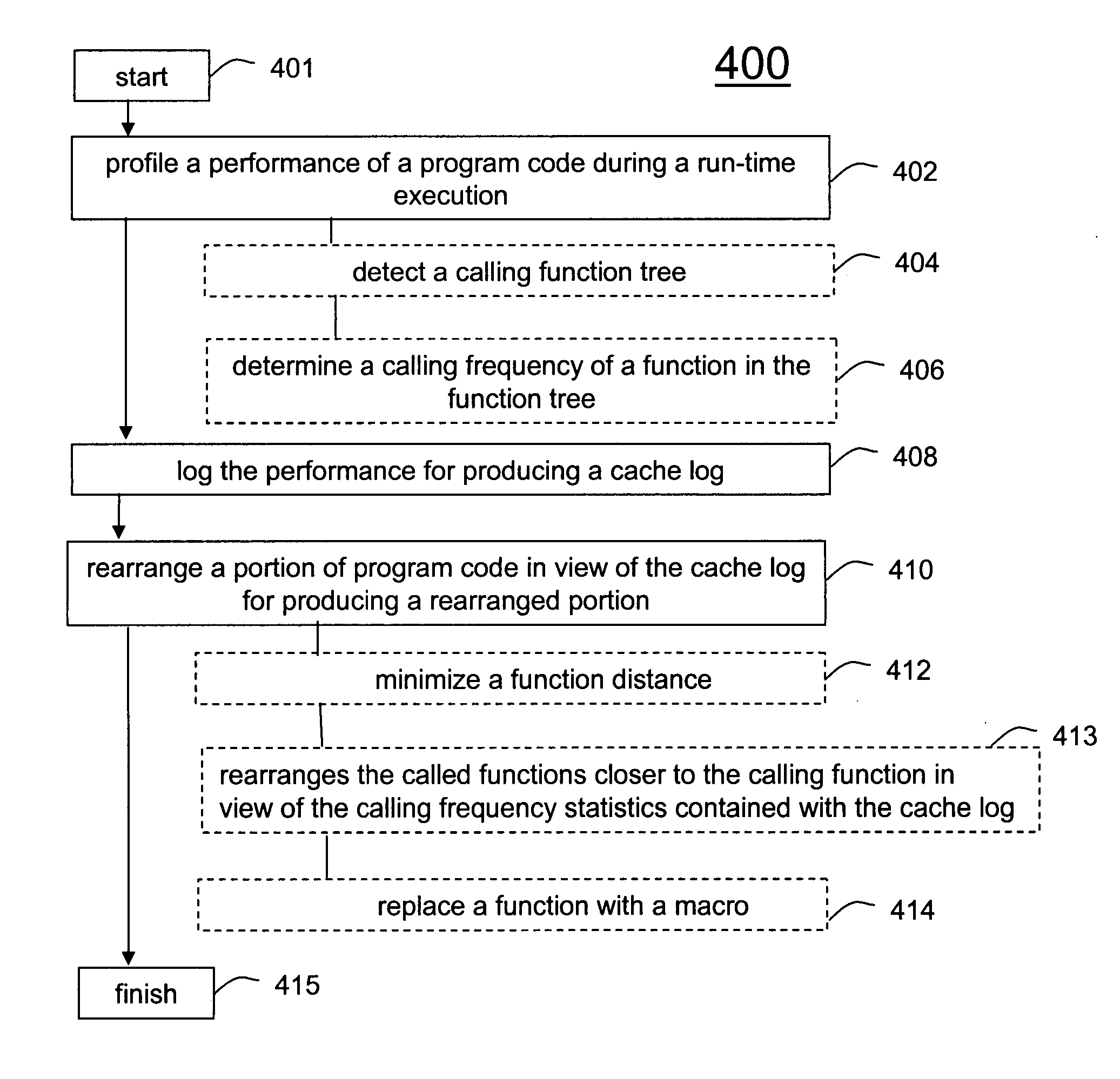

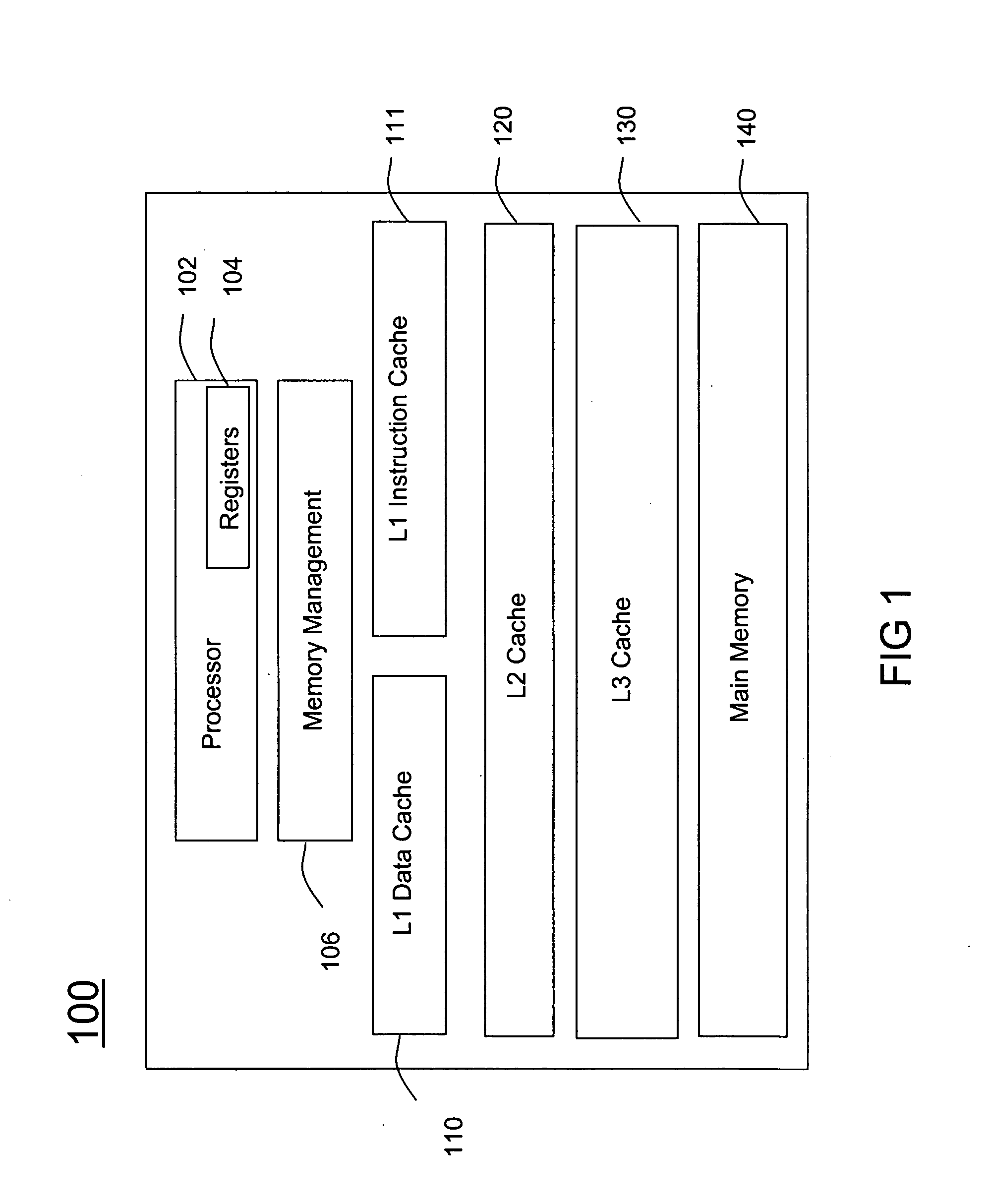

A method (400) and system (106) are provided for run-time cache optimization. The method includes profiling (402) the performance of program code during run-time execution, logging (408) the performance to produce a cache log, and rearranging (410) a portion of the program code in view of the cache log to produce a rearranged portion. The rearranged portion is supplied to a memory management unit (240) that manages at least one cache memory (110-140). The cache log can be collected during real-time operation of a communication device and is fed back to a linking process (244) to maximize cache locality at compile time. The method further includes loading a saved profile corresponding to a run-time operating mode, and reprogramming a new code image associated with the saved profile.

Owner:MOTOROLA INC
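
The profile, log and rearrange loop can be sketched as follows. This is an assumption-laden toy rather than the patented method: the cache log is reduced to per-function execution counts from a hypothetical trace, and the rearrangement is a simple hot-first ordering that a linker could use to place frequently executed functions next to each other.

```python
from collections import Counter

def build_cache_log(trace):
    """Count how often each function appears in a run-time execution trace."""
    return Counter(trace)

def rearrange(functions, cache_log):
    """Order functions hot-first so frequently executed code is laid out contiguously.
    sorted() is stable, so equally hot functions keep their original link order."""
    return sorted(functions, key=lambda f: -cache_log.get(f, 0))
```

For a trace `["rx", "rx", "decode", "rx", "ui", "decode"]` and link order `["ui", "init", "decode", "rx"]`, the hot-first layout is `["rx", "decode", "ui", "init"]`.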

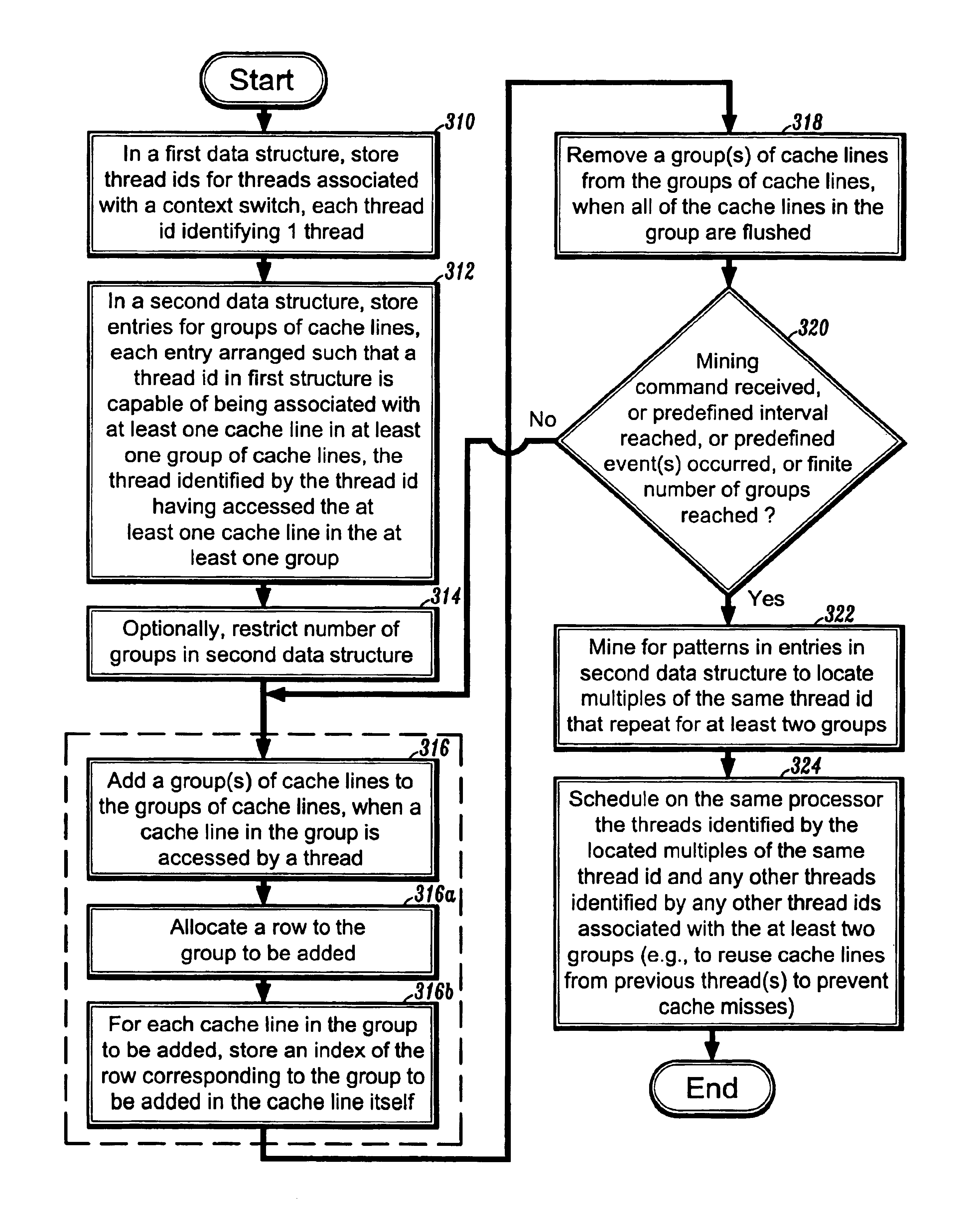

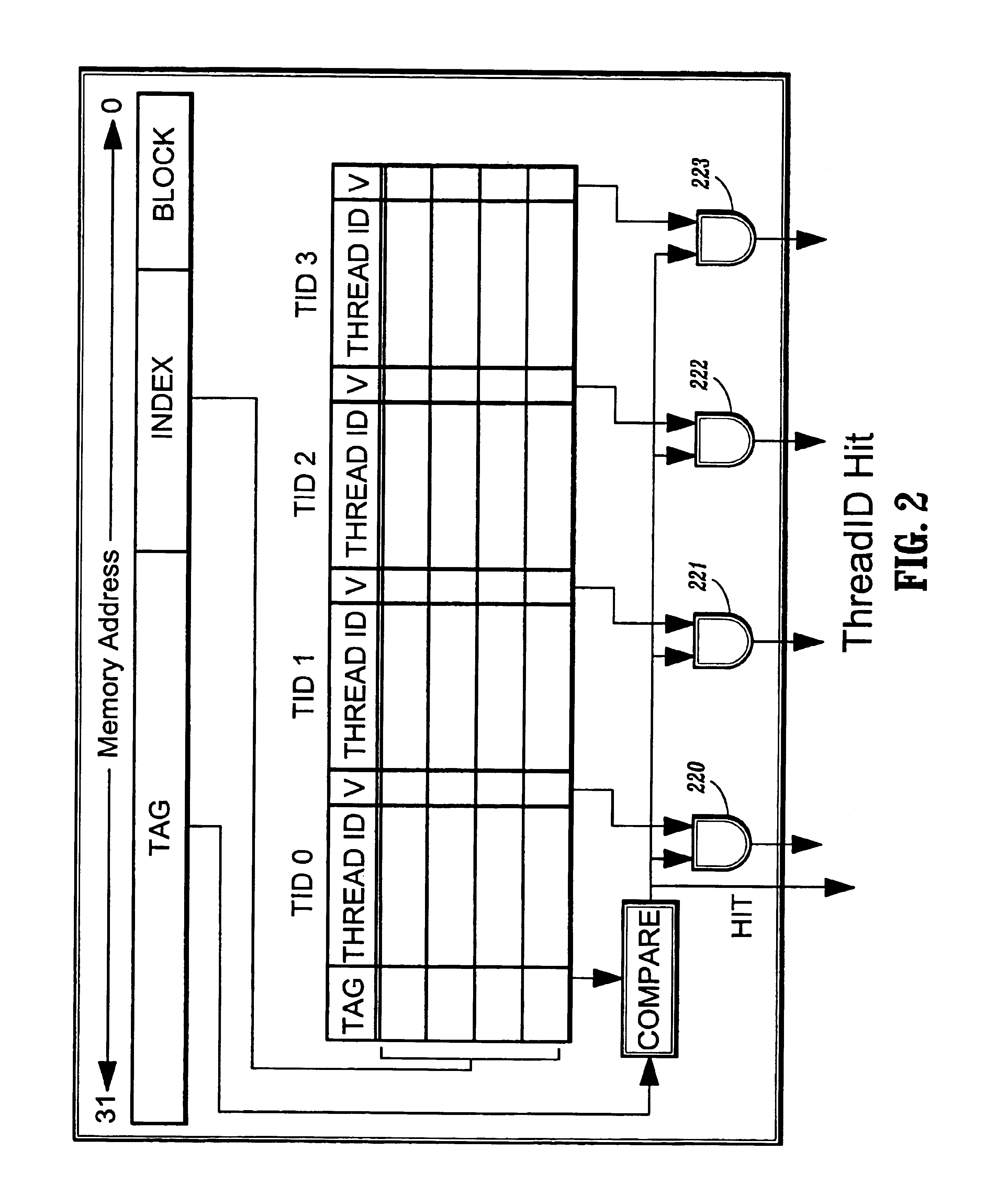

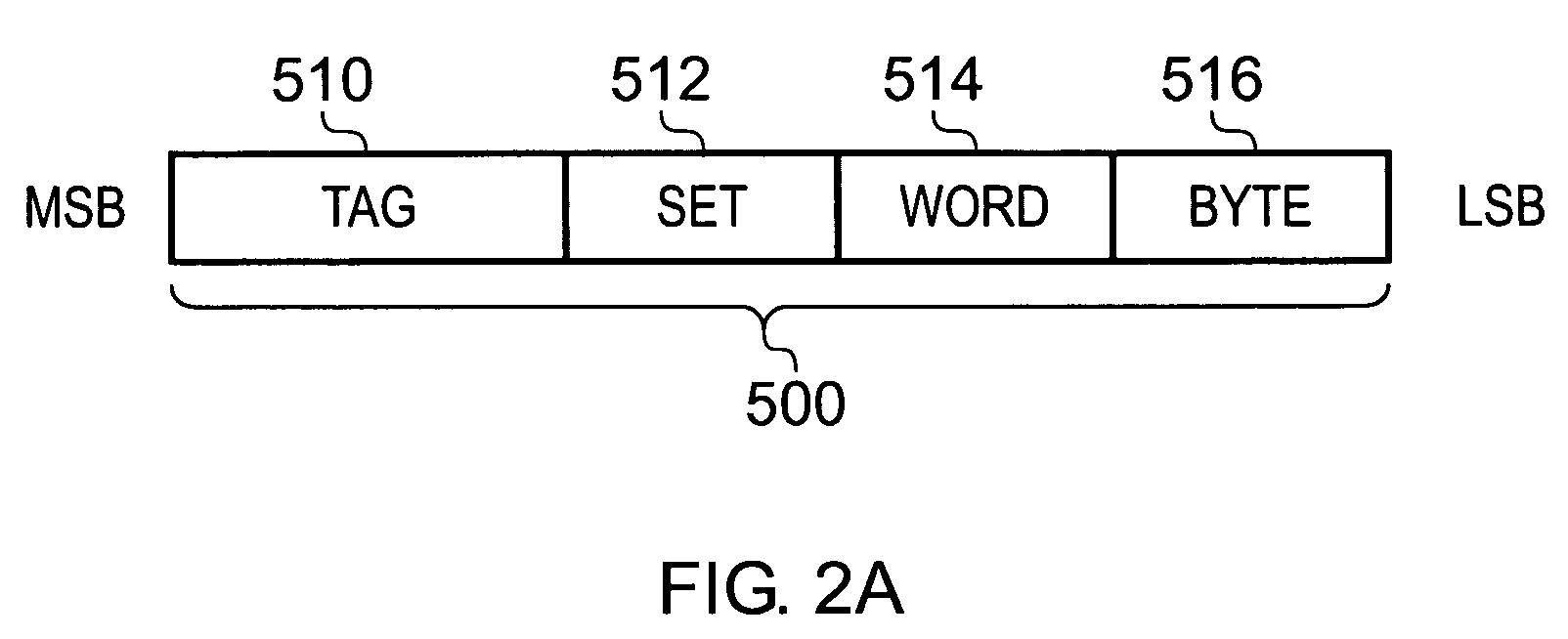

Hardware-assisted method for scheduling threads using data cache locality

Status: Inactive · Publication No.: US6938252B2 · Tags: Improve performance; Convenient arrangement; Resource allocation; Memory addressing/allocation/relocation; Multiprocessor; Parallel computing

A method is provided for scheduling threads in a multi-processor system. Thread ids for threads associated with a context switch are stored in a first structure; each thread id identifies one thread. Entries for groups of contiguous cache lines are stored in a second structure. Each entry is arranged so that a thread id in the first structure can be associated with at least one contiguous cache line in at least one group, the identified thread having accessed that cache line. The entries are mined for patterns to locate multiple occurrences of the same thread id that repeat across at least two groups. Threads identified by these repeated thread ids are mapped to at least one native thread and are scheduled on the same processor as the other threads associated with those groups.

Owner:IBM CORP
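
The pattern-mining step lends itself to a small sketch. Here `group_entries` stands in for the second structure (each cache-line group mapped to the thread ids that touched it), and the placement policy (round-robin CPUs, first assignment wins) is a hypothetical choice; mapping onto native threads is left out.

```python
from collections import defaultdict

def find_repeating_threads(group_entries, min_groups=2):
    """Map each thread id seen in at least `min_groups` cache-line groups to those groups."""
    seen = defaultdict(set)
    for group, thread_ids in group_entries.items():
        for tid in thread_ids:
            seen[tid].add(group)
    return {tid: groups for tid, groups in seen.items() if len(groups) >= min_groups}

def co_schedule(group_entries, num_cpus, min_groups=2):
    """Place each repeating thread, and every thread sharing its groups, on one CPU.
    CPUs are handed out round-robin; a thread keeps its first assignment."""
    placement = {}
    repeating = find_repeating_threads(group_entries, min_groups)
    for i, (tid, groups) in enumerate(sorted(repeating.items())):
        cpu = i % num_cpus
        for g in groups:
            for other in group_entries[g]:
                placement.setdefault(other, cpu)
    return placement
```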

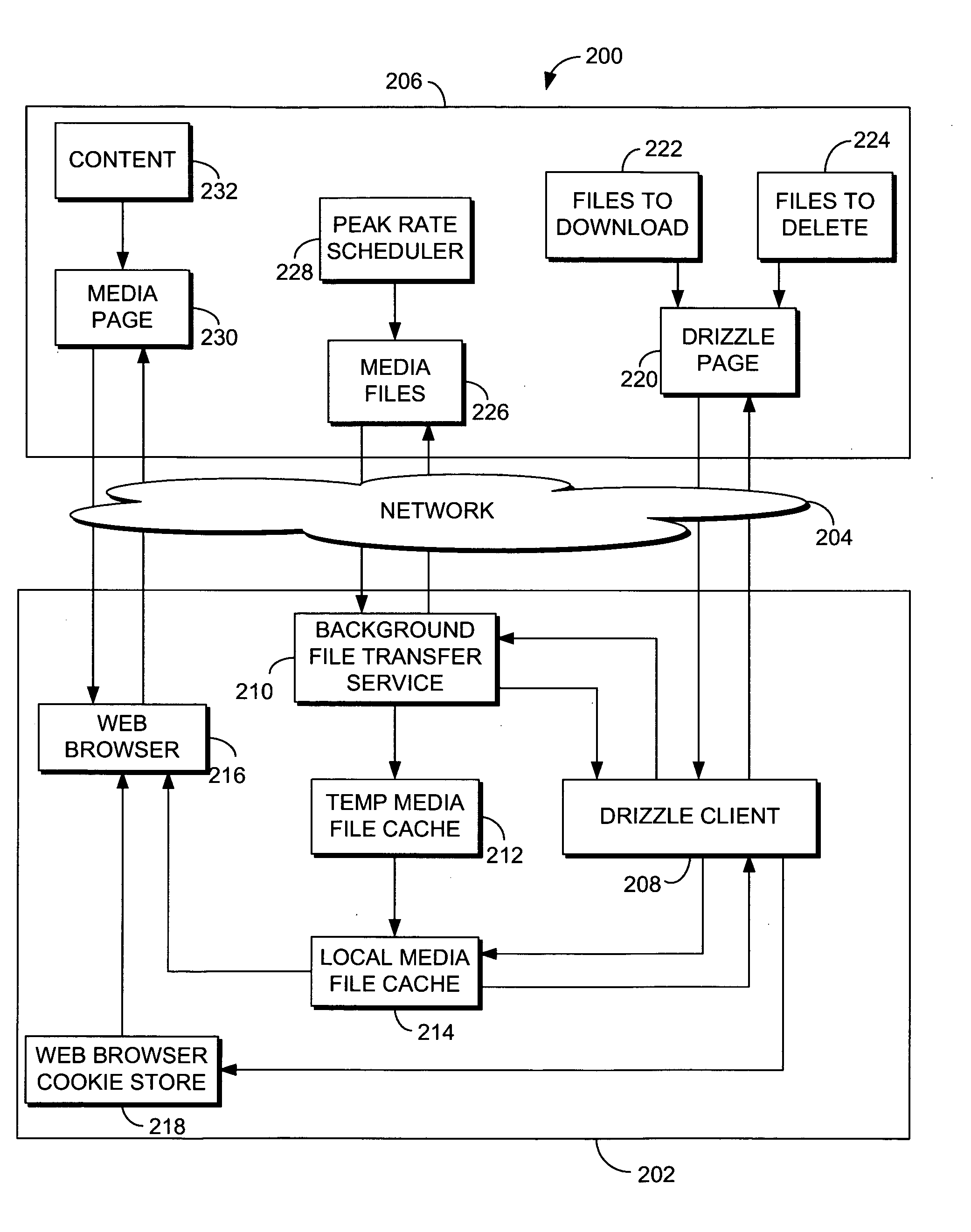

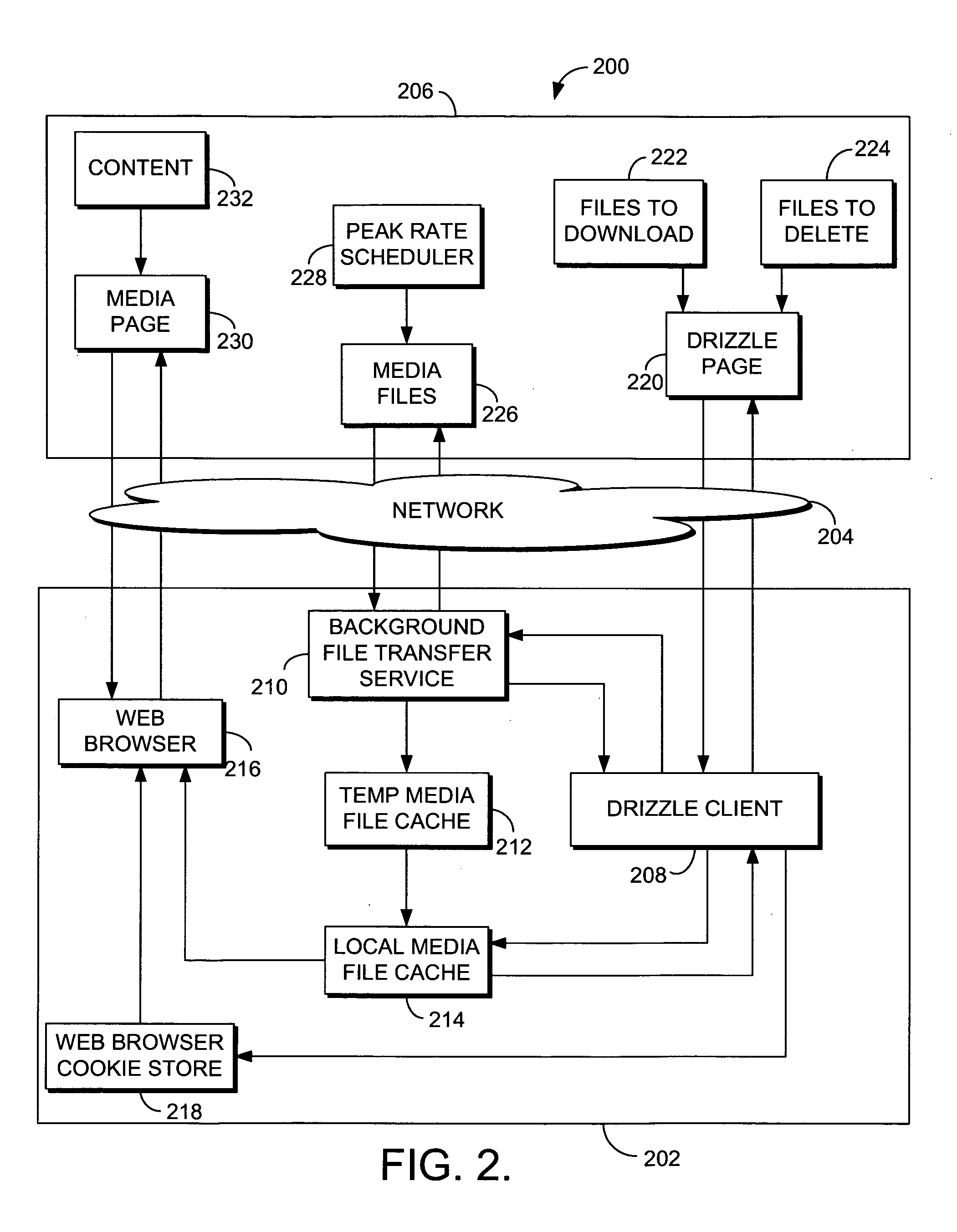

System and method for transferring a file in advance of its use

Status: Inactive · Publication No.: US20060106807A1 · Tags: Digital data information retrieval; Digital data processing details; File transmission; Web page

The invention relates to a system, methods, and computer-readable media for transferring one or more files to a computer in advance of their use by the computer. In accordance with one method of the invention, the computer sends a request for instructions to acquire at least one predetermined file. The request includes information regarding the location of a cache on the computer. The computer receives the instructions to acquire the file, as well as at least one cookie, which includes information regarding the file and the location of the cache. Based on the instructions, the computer obtains the file and stores it in the cache in advance of its use by the computer. If a web page that includes the file is requested, the request for the web page includes the cookie. The computer then receives data that represents that web page and includes a reference to the file stored in the cache at the computer. The reference may be used to open the file stored in the cache.

Owner:MICROSOFT TECH LICENSING LLC

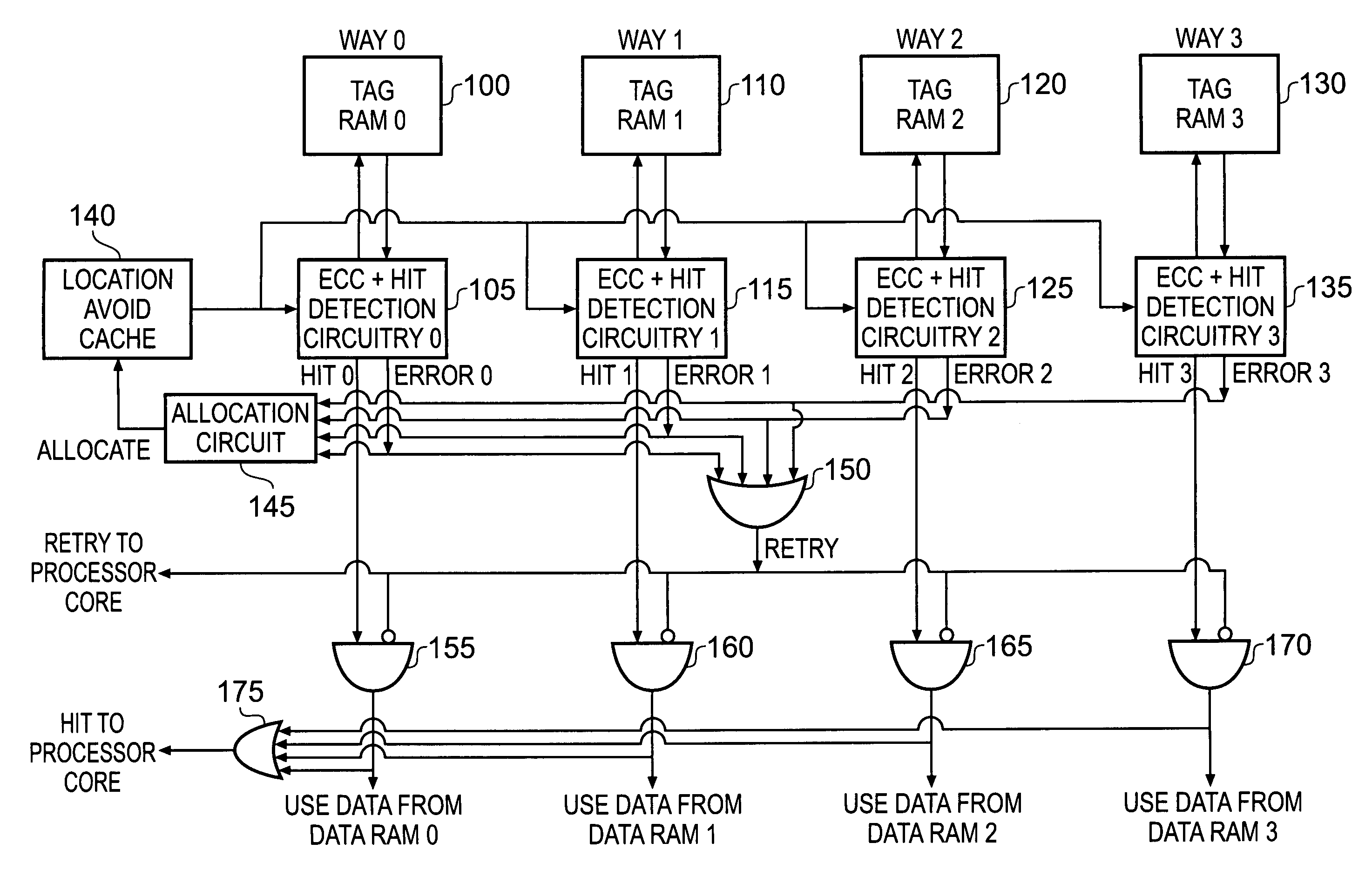

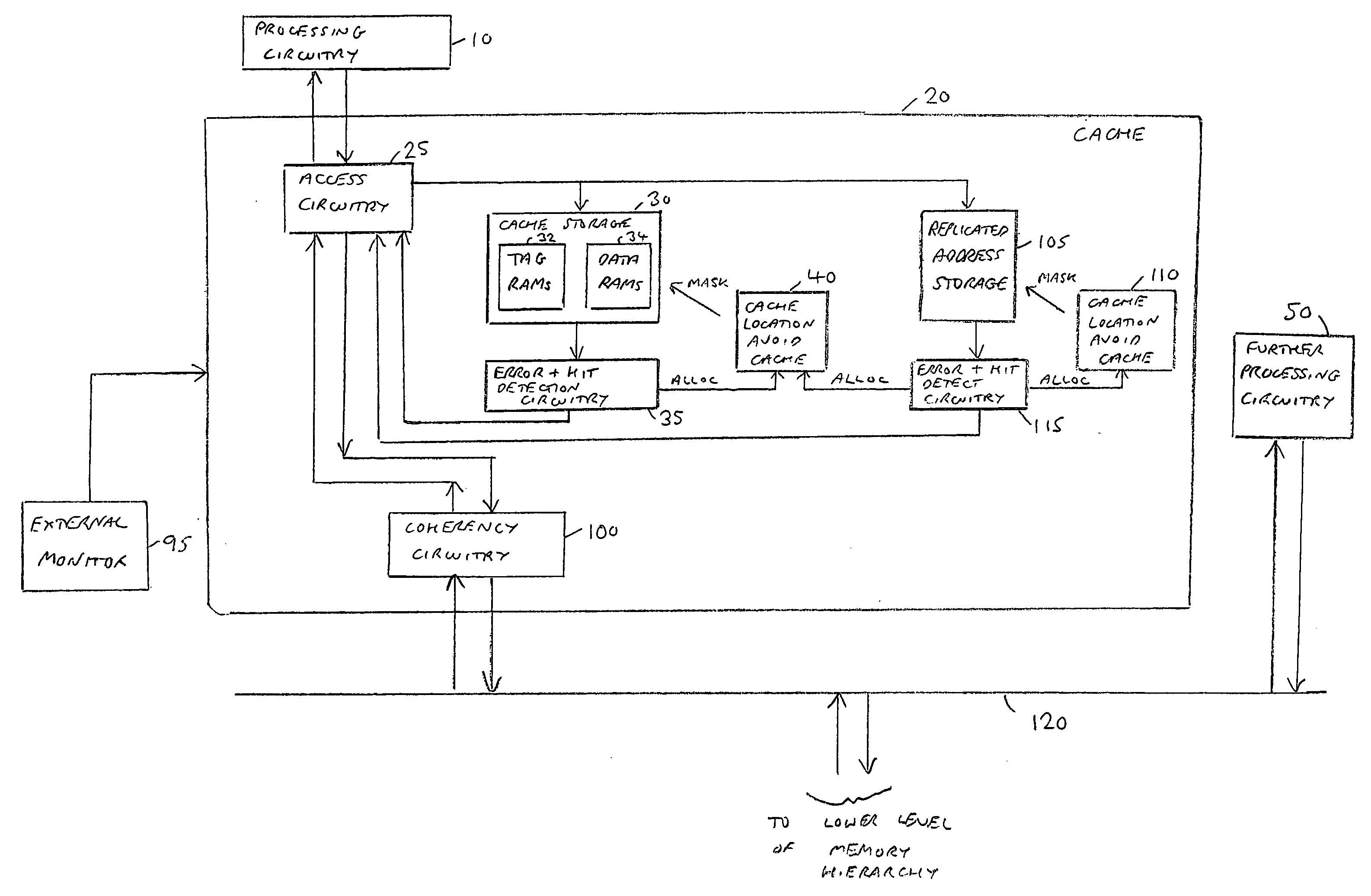

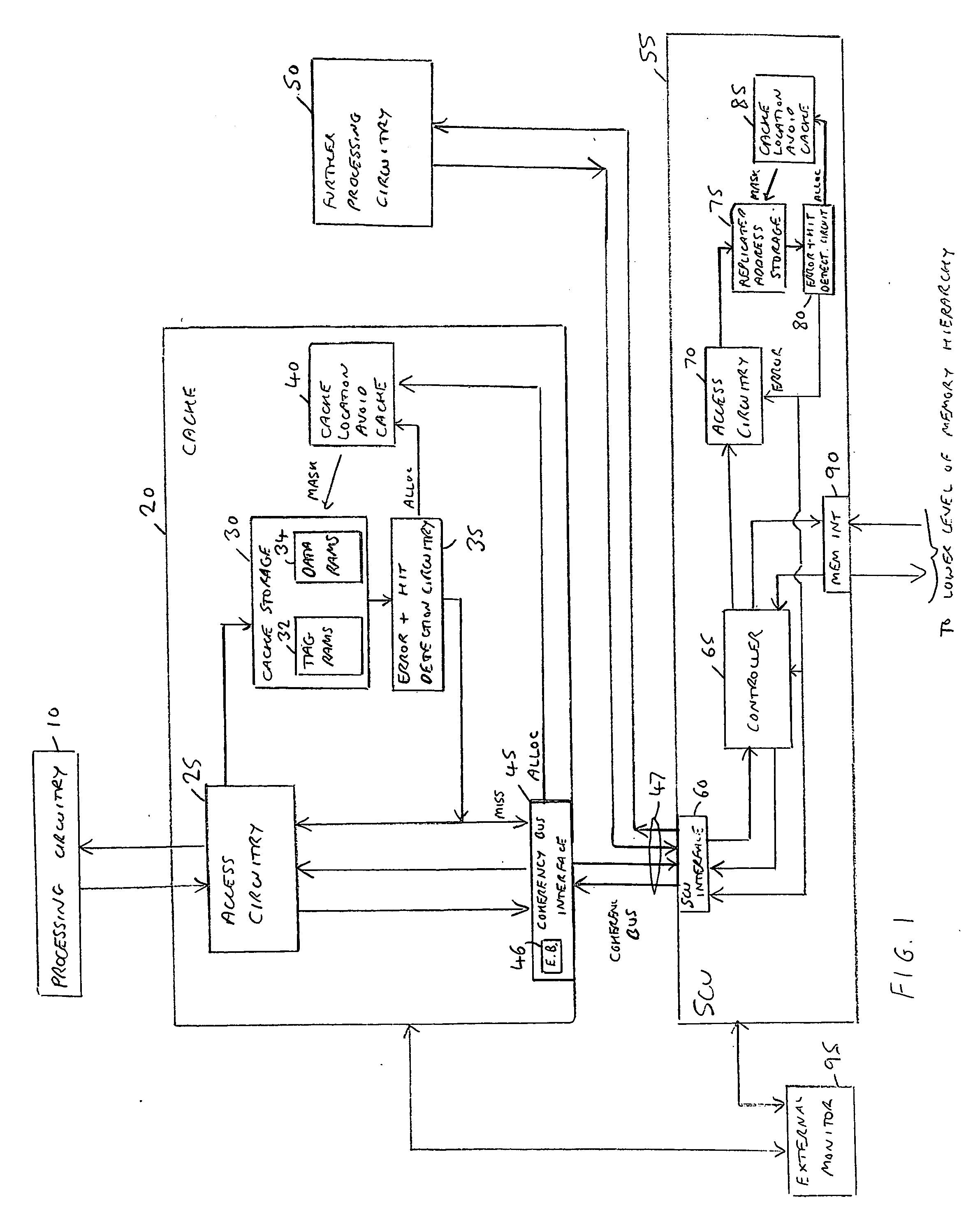

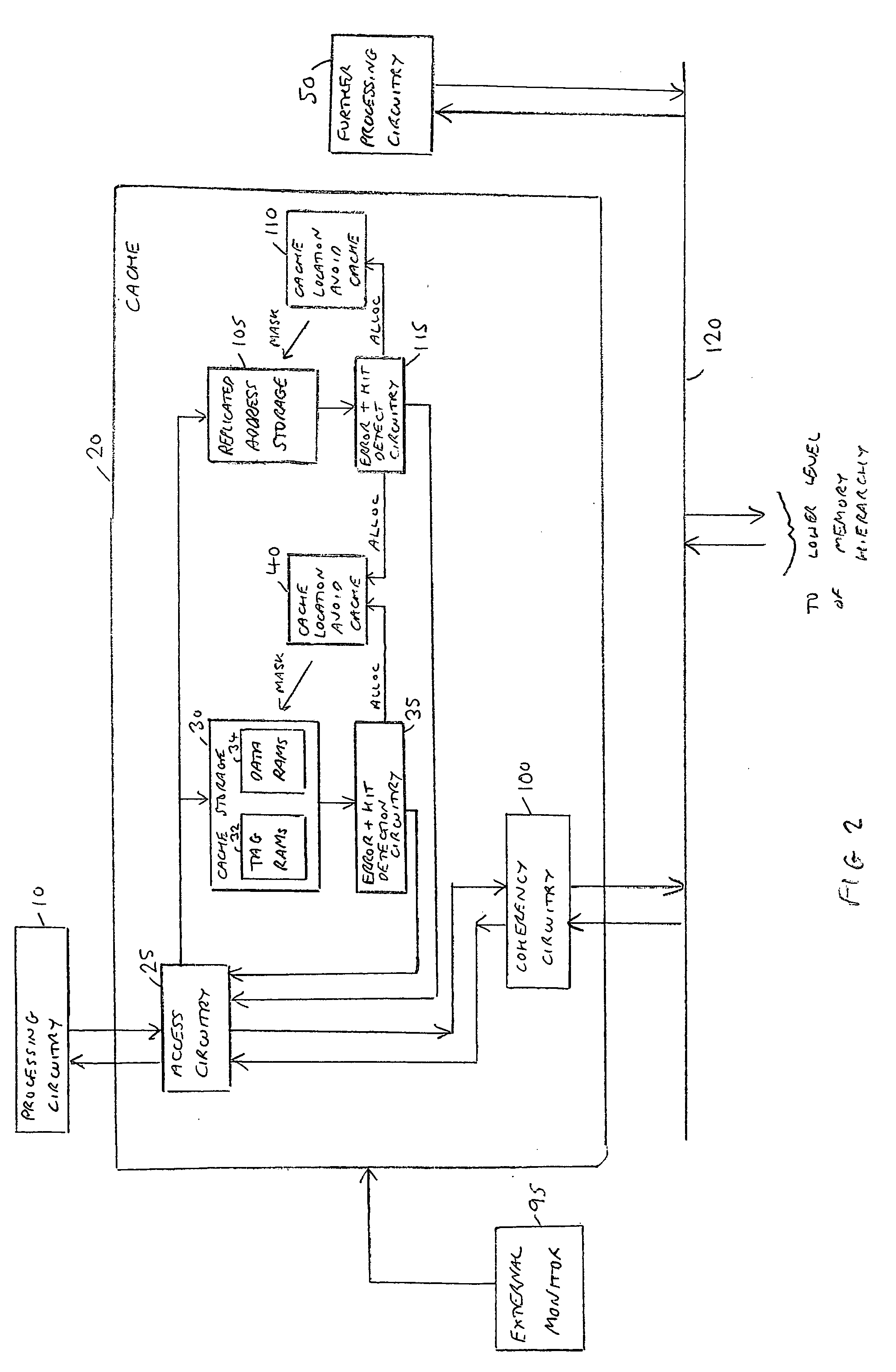

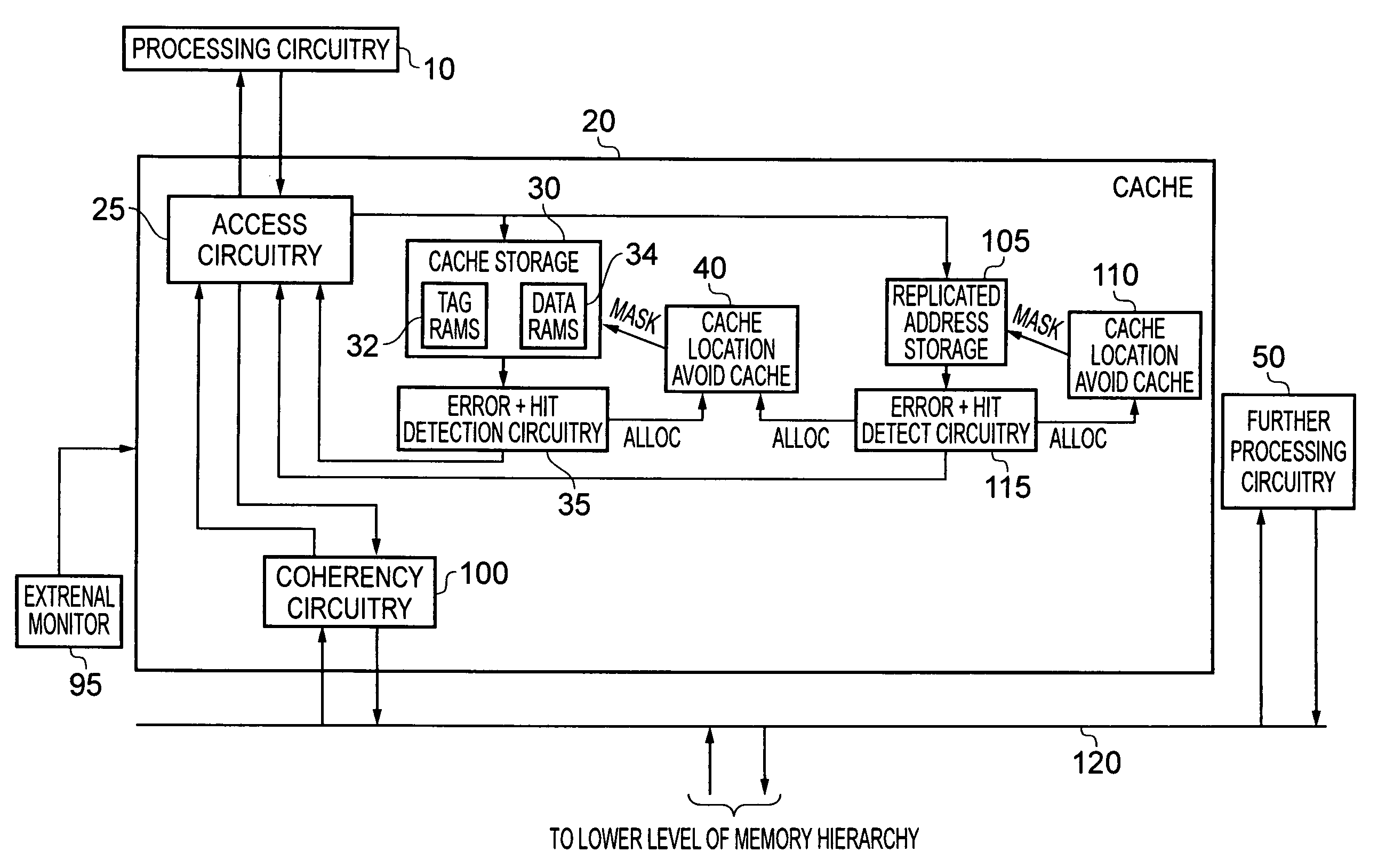

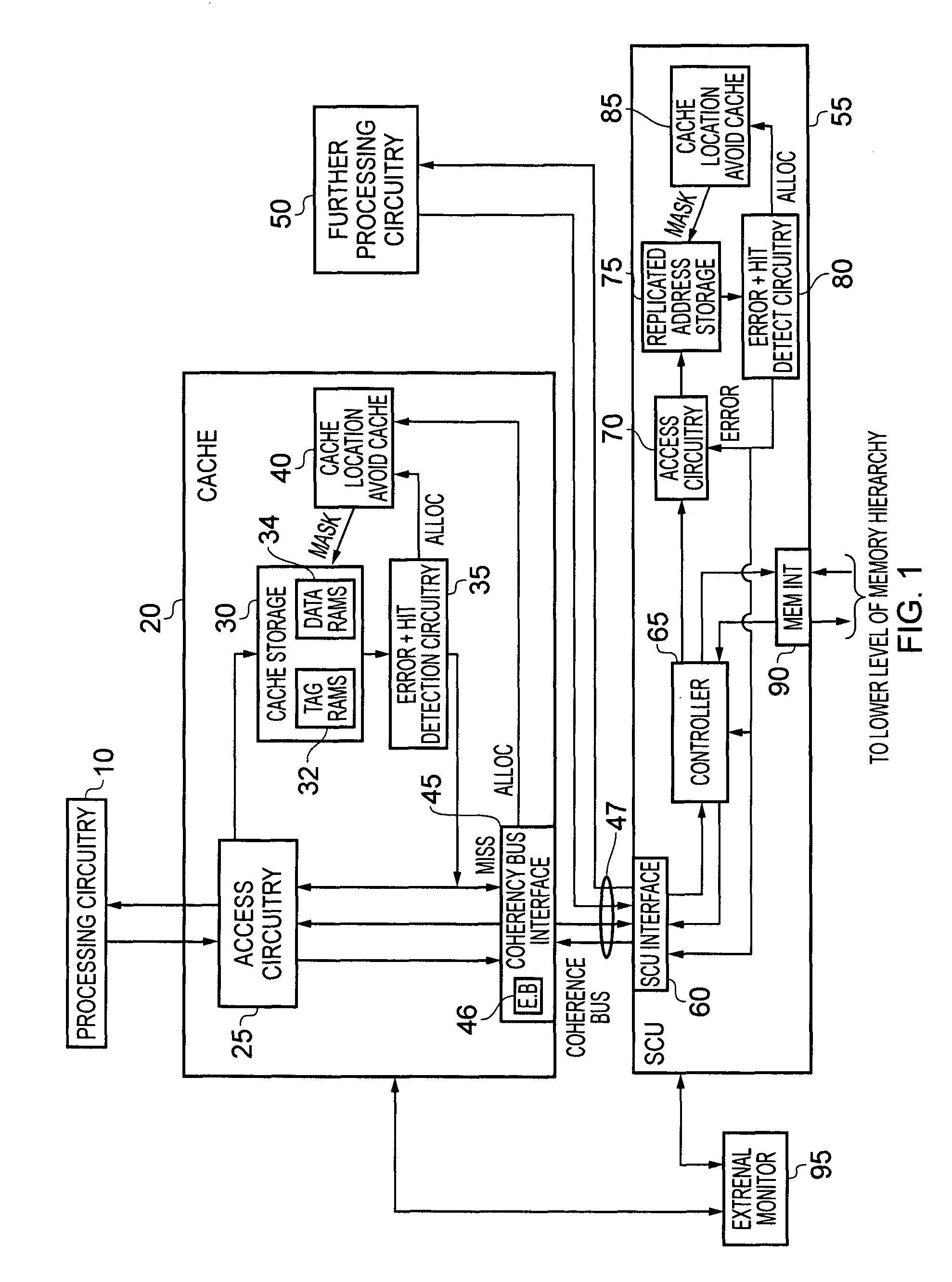

Handling of hard errors in a cache of a data processing apparatus

Status: Active · Publication No.: US20090164727A1 · Tags: The process is simple and effective; Optimization mechanism; Error detection/correction; Digital storage; Cache access; Parallel computing

A data processing apparatus and method are provided for handling hard errors occurring in a cache of the data processing apparatus. The cache comprises data storage having a plurality of cache lines for storing data values, and address storage having a plurality of entries, each entry identifying an address indication value for an associated cache line and each entry having associated error data. In response to an access request, a lookup procedure is performed to determine, with reference to the address indication value held in at least one entry of the address storage, whether a hit condition exists in one of the cache lines. Further, error detection circuitry determines, with reference to the error data associated with the at least one entry of the address storage, whether an error condition exists for that entry. Additionally, a cache location avoid storage is provided having at least one record, each record being used to store a cache line identifier identifying a specific cache line. On detection of the error condition, one of the records in the cache location avoid storage is allocated to store the cache line identifier for the specific cache line associated with the entry for which the error condition was detected. The error detection circuitry then causes a clean and invalidate operation to be performed on that cache line, and the access request is re-performed. The cache access circuitry is arranged to exclude any cache line identified in the cache location avoid storage from the lookup procedure. This provides a simple and effective mechanism for handling hard errors that manifest within a cache during use, ensuring correct operation of the cache in their presence. Further, the technique can be used with write-back caches as well as write-through caches, making it a flexible solution.

Owner:ARM LTD
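
A toy software model of the avoid-storage idea, with heavy assumptions: hard errors are injected through a `faulty` set instead of being detected from per-entry error data (parity or ECC in real hardware), and each line remembers its full address to keep write-back simple. It walks through the steps in the abstract: record the failing line, clean and invalidate it, then re-perform the access with the recorded line excluded from lookup.

```python
class AvoidingCache:
    """Toy set-associative cache with a cache location avoid storage."""

    def __init__(self, num_sets=4, ways=2):
        self.num_sets, self.ways = num_sets, ways
        self.lines = [[None] * ways for _ in range(num_sets)]  # None = invalid line
        self.faulty = set()   # simulated hard errors: (set, way) with corrupt tag storage
        self.avoid = set()    # avoid storage: (set, way) ids excluded from lookup
        self.memory = {}      # backing store used for fills and write-backs

    def _split(self, addr):
        return addr % self.num_sets, addr // self.num_sets

    def _usable_ways(self, s):
        # Lines recorded in the avoid storage never take part in lookup or allocation.
        return [w for w in range(self.ways) if (s, w) not in self.avoid]

    def _clean_and_invalidate(self, s, w):
        line = self.lines[s][w]
        if line is not None and line["dirty"]:
            self.memory[line["addr"]] = line["data"]  # write back before dropping
        self.lines[s][w] = None

    def _lookup(self, addr):
        s, tag = self._split(addr)
        for w in self._usable_ways(s):
            if (s, w) in self.faulty:
                # Error detected: record the line, clean and invalidate it,
                # then re-perform the lookup without it.
                self.avoid.add((s, w))
                self._clean_and_invalidate(s, w)
                return self._lookup(addr)
            line = self.lines[s][w]
            if line is not None and line["tag"] == tag:
                return s, w
        return None

    def _allocate(self, addr):
        s, tag = self._split(addr)
        ways = self._usable_ways(s)
        if not ways:
            raise RuntimeError("every way in this set is avoided")
        w = next((w for w in ways if self.lines[s][w] is None), ways[0])
        self._clean_and_invalidate(s, w)  # evict the victim, writing back if dirty
        self.lines[s][w] = {"tag": tag, "addr": addr,
                            "data": self.memory.get(addr, 0), "dirty": False}
        return s, w

    def read(self, addr):
        s, w = self._lookup(addr) or self._allocate(addr)
        return self.lines[s][w]["data"]

    def write(self, addr, value):
        s, w = self._lookup(addr) or self._allocate(addr)
        self.lines[s][w].update(data=value, dirty=True)
```

Because a dirty line is written back before it is invalidated, the same handling works whether the cache is write-back or write-through.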

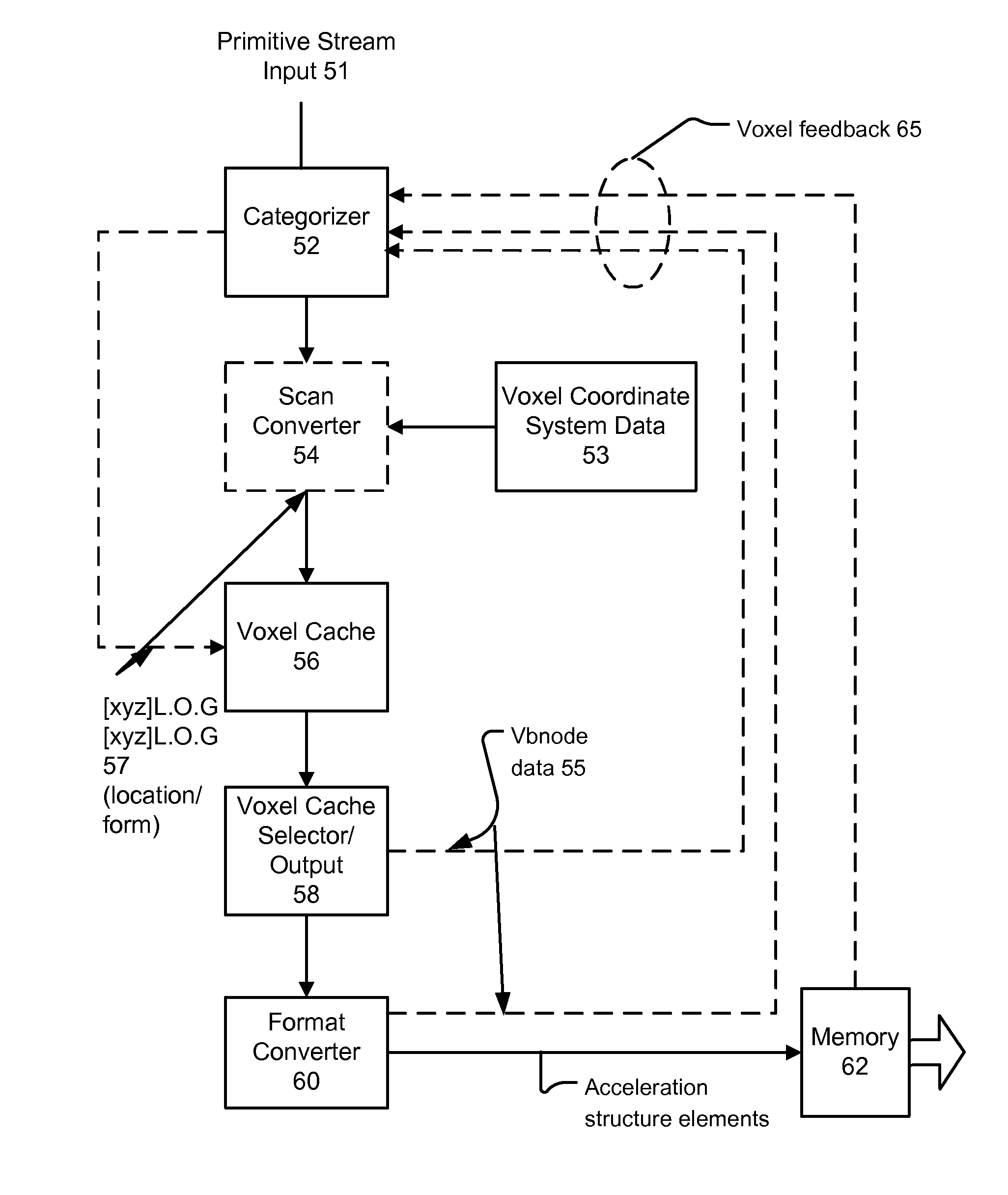

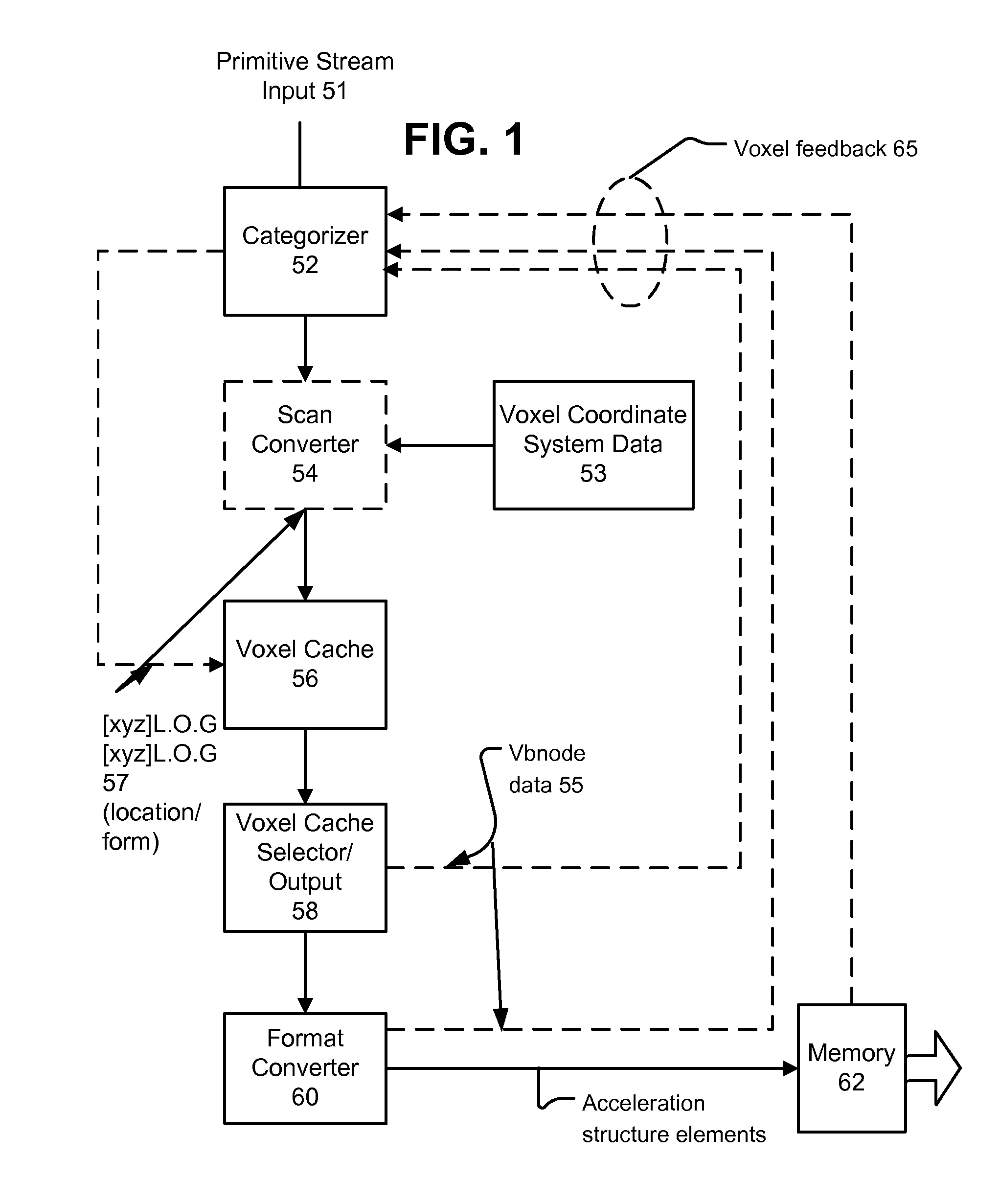

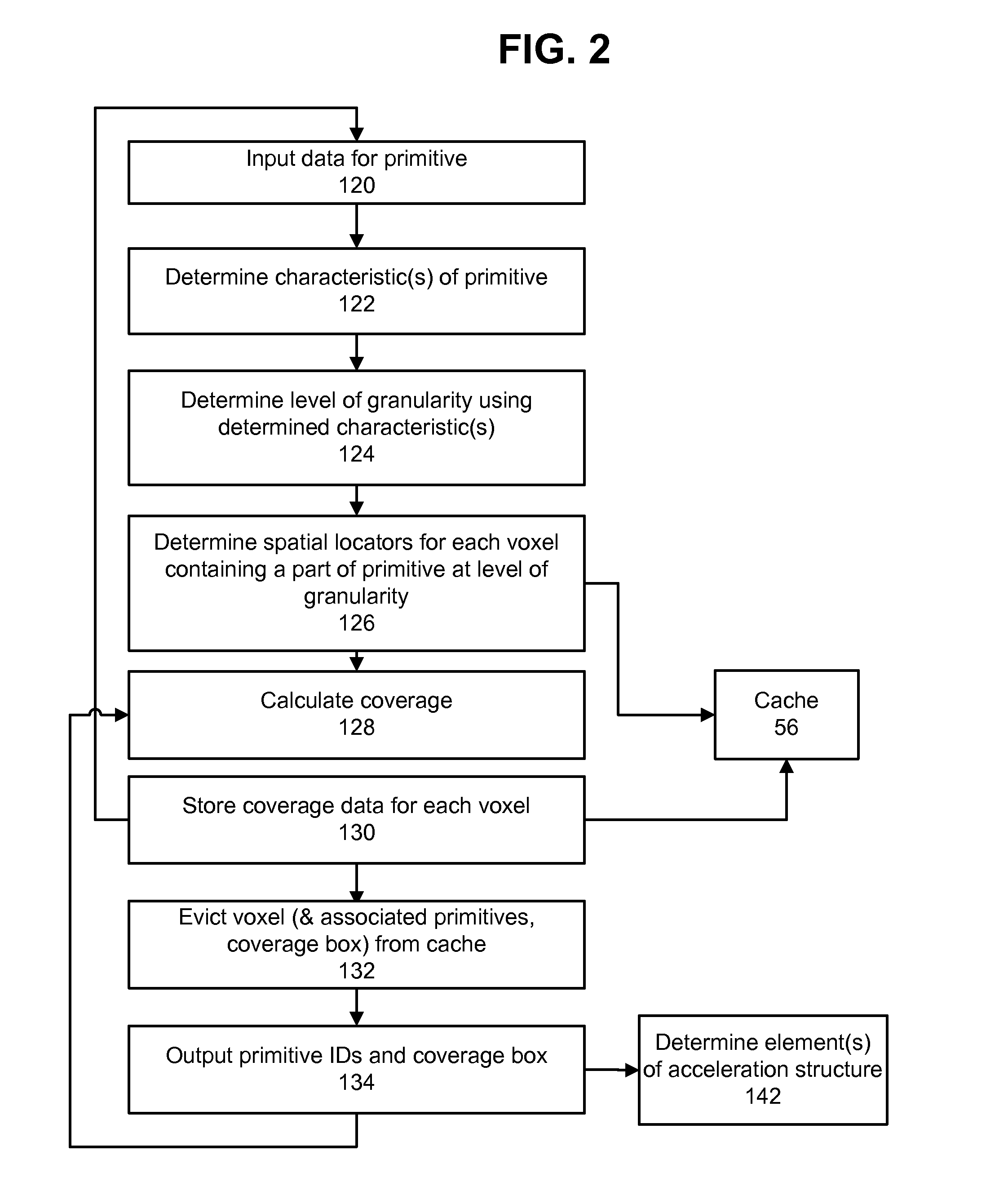

Systems and methods for 3-d scene acceleration structure creation and updating

Status: Active · Publication No.: US20130113800A1 · Tags: High bandwidth link; Implemented cost-effectively; Image generation; 3D-image rendering; Parallel computing; Cache locality

Systems and methods for producing an acceleration structure provide for subdividing a 3-D scene into a plurality of volumetric portions, which have different sizes, each being addressable using a multipart address indicating a location and a relative size of each volumetric portion. A stream of primitives is processed by characterizing each according to one or more criteria, selecting a relative size of volumetric portions for use in bounding the primitive, and finding a set of volumetric portions of that relative size which bound the primitive. A primitive ID is stored in each location of a cache associated with each volumetric portion of the set of volumetric portions. A cache location is selected for eviction, responsive to each cache eviction decision made during the processing. An element of an acceleration structure according to the contents of the evicted cache location is generated, responsive to the evicted cache location.

Owner:IMAGINATION TECH LTD

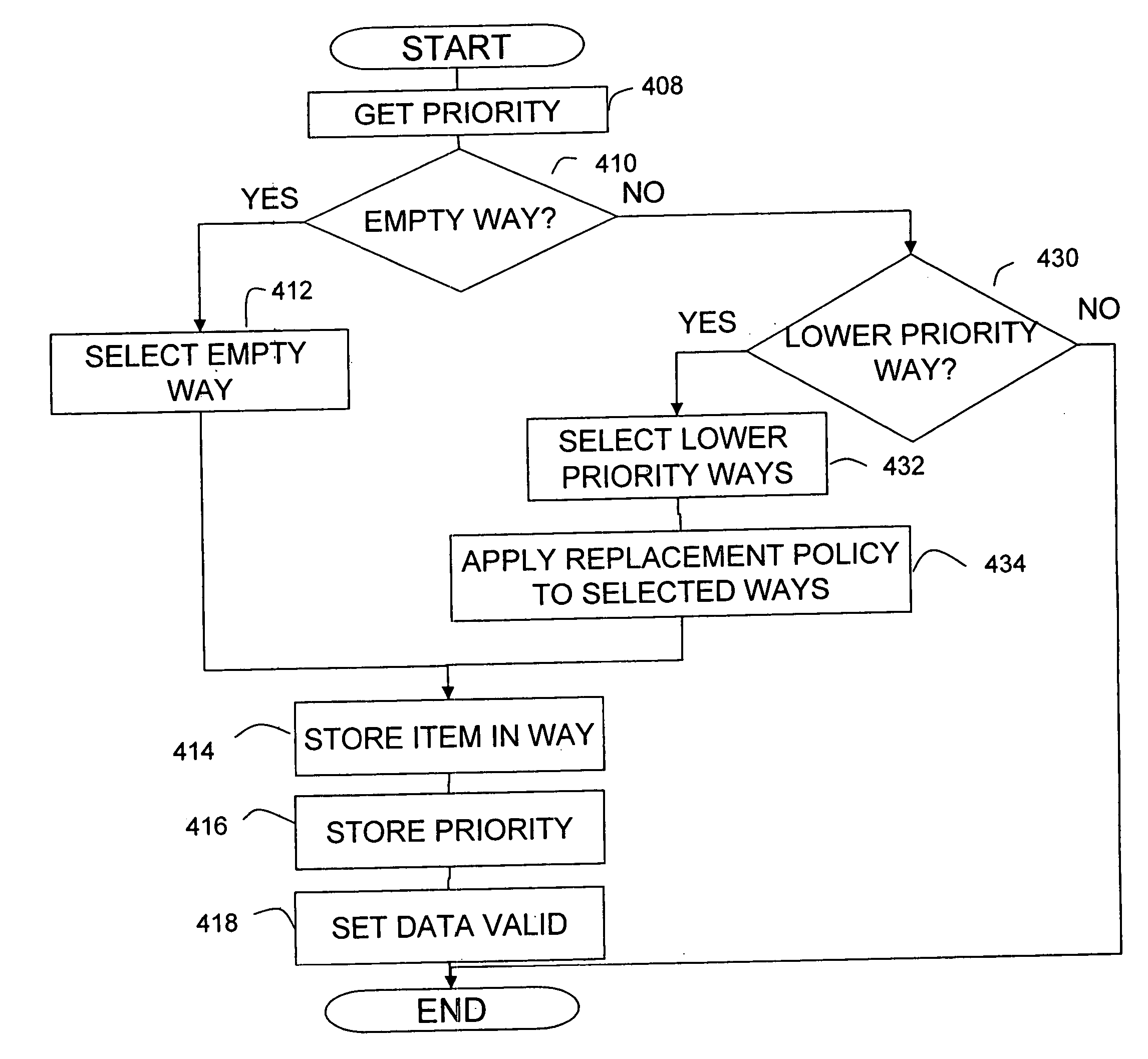

Cache memory with improved replacement policy

Status: Inactive · Publication No.: US20050188158A1 · Tags: Memory addressing/allocation/relocation; General purpose; Digital signal processing

A processor system having a cache memory. The replacement policy for the cache is augmented with a consideration of priority so that higher priority items are not displaced by lower priority items. The priority based replacement policy can be used to allow processes that are of lower priority to share the same cache with processes that are of higher priority. A processor including digital signal processing and general purpose logic function is shown to employ the priority based replacement policy to allow processes executing generalized logic functions to use the cache when not needed for digital signal processing operations that are time critical. A processor having digital signal processing capability is shown to employ the priority system to reserve a block of memory configured for a cache. The block of memory is reserved by setting the priority of those cache locations to a priority higher than any other executing process.

Owner:ANALOG DEVICES INC
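
A minimal sketch of priority-aware replacement, assuming a fully associative cache and LRU as the tie-breaker within a priority level (the abstract does not name the base policy). A request may only displace a line of equal or lower priority; otherwise it bypasses the cache. Giving a block of lines a priority above every other process reserves it, as in the DSP example.

```python
class PriorityCache:
    """Toy fully associative cache: LRU within priority, lower priority never evicts higher."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = {}   # addr -> (priority, last_use)
        self.clock = 0

    def access(self, addr, priority):
        """Return True on a hit, False on a miss (filled or bypassed)."""
        self.clock += 1
        if addr in self.lines:
            old_prio, _ = self.lines[addr]
            self.lines[addr] = (max(old_prio, priority), self.clock)
            return True
        if len(self.lines) < self.capacity:
            self.lines[addr] = (priority, self.clock)
            return False
        # Victim candidate: lowest priority first, then least recently used.
        victim = min(self.lines, key=lambda a: self.lines[a])
        if self.lines[victim][0] <= priority:
            del self.lines[victim]
            self.lines[addr] = (priority, self.clock)
        # Otherwise every resident line outranks the request, so it bypasses the cache.
        return False
```

On a hit the line keeps the higher of its old and new priority; that is one reasonable choice, not something the abstract specifies.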

Credit-Based Streaming Multiprocessor Warp Scheduling

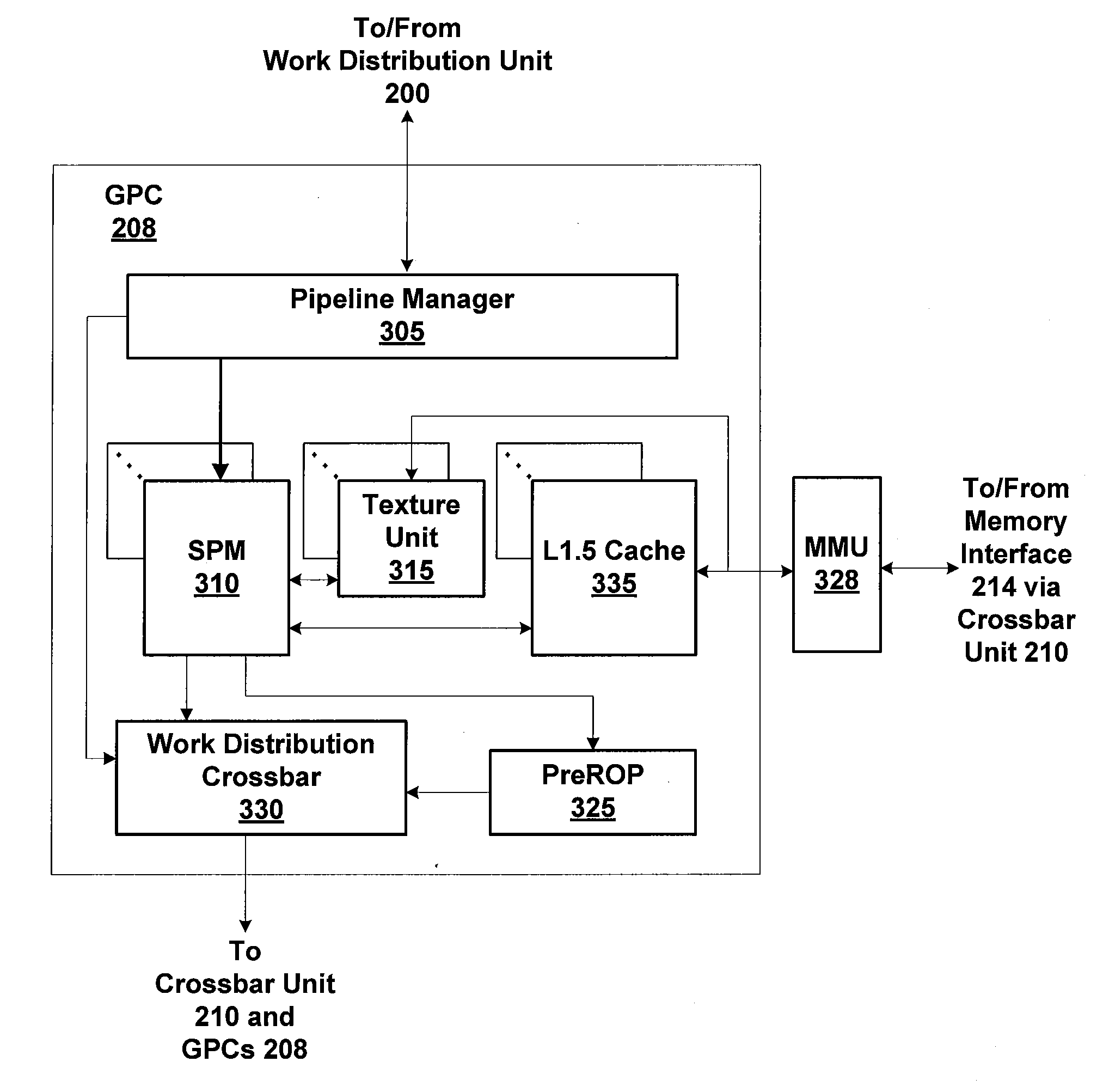

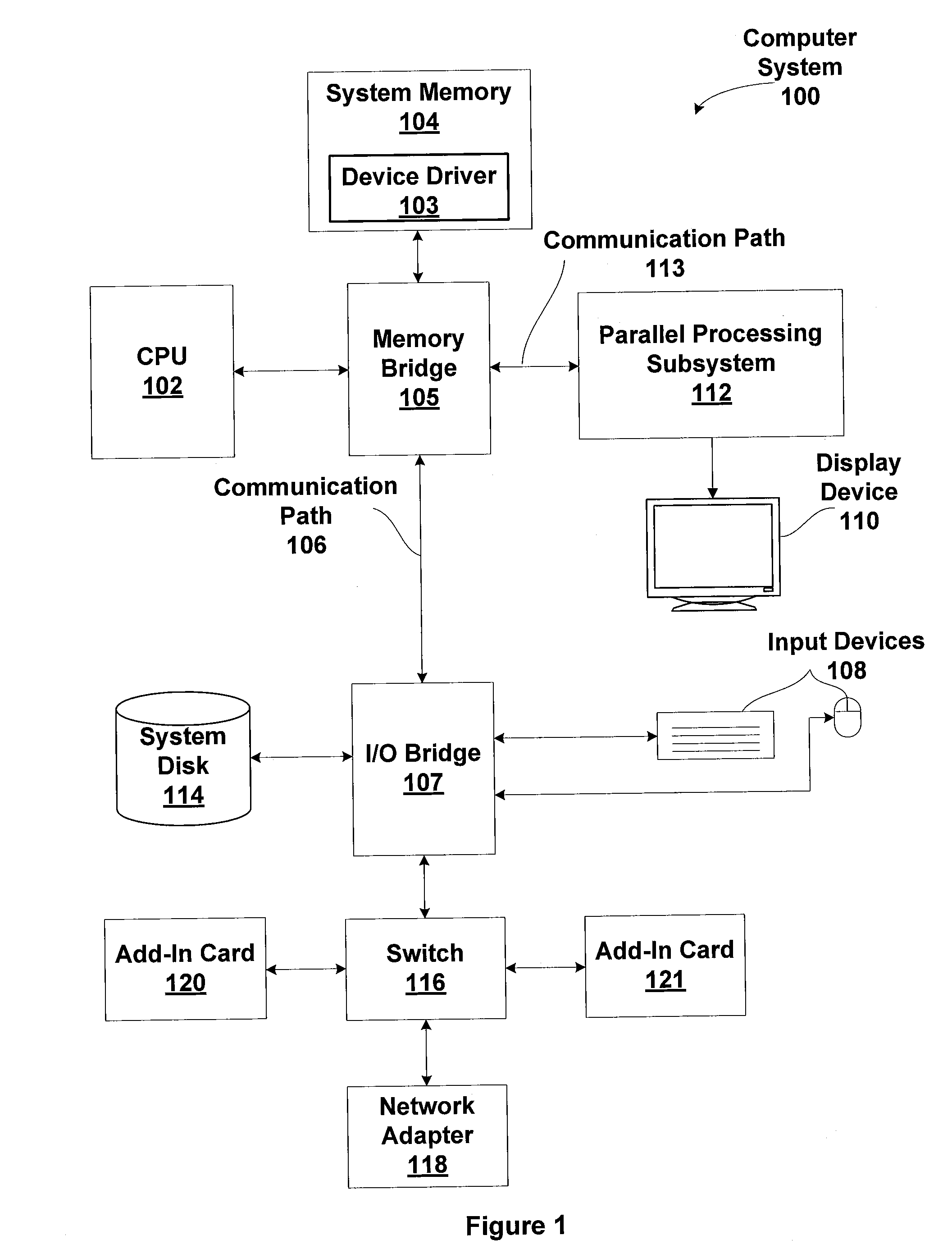

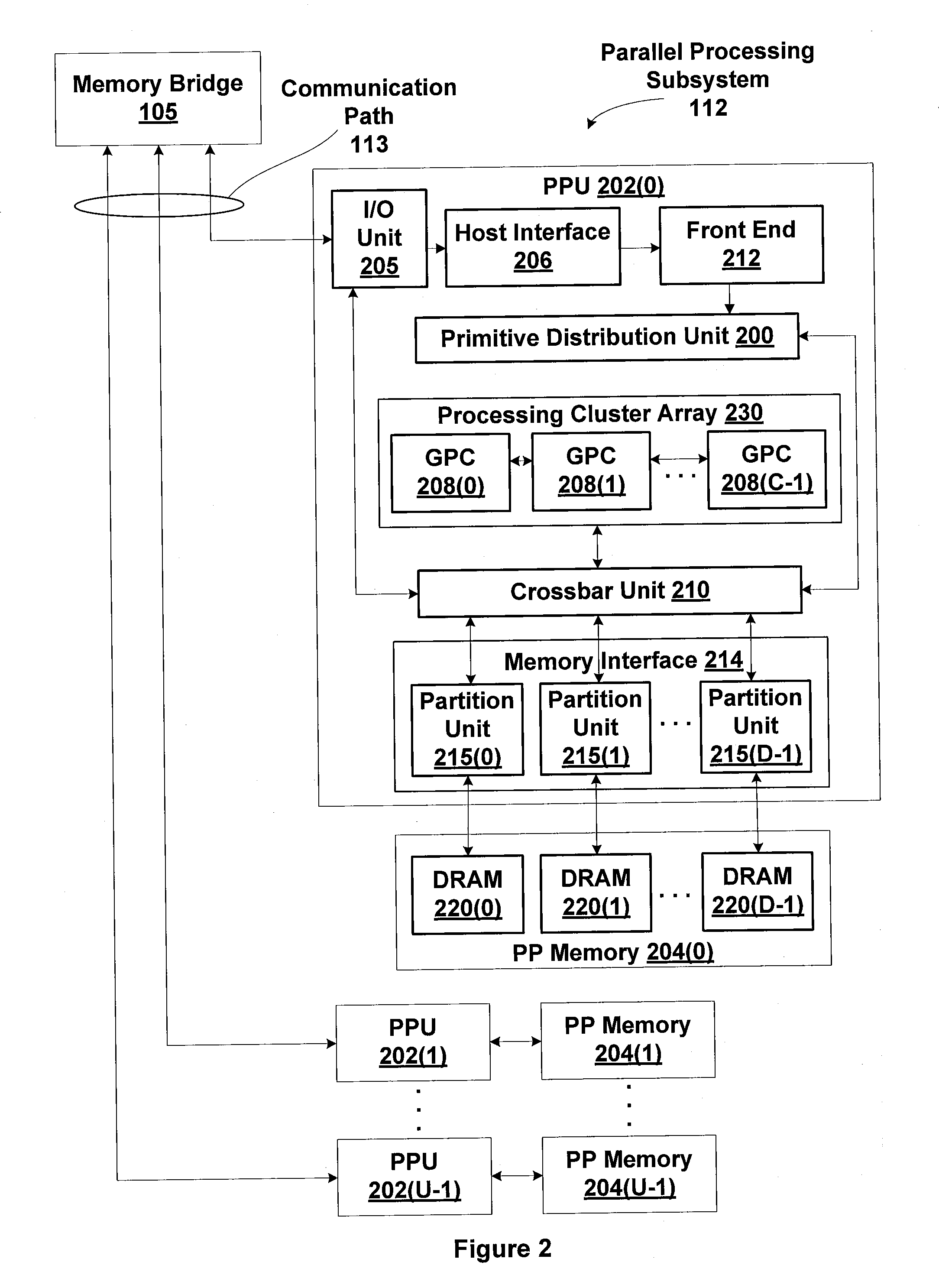

Status: Active · Publication No.: US20110072244A1 · Tags: Improves cache locality; Improve system performance; Digital computer details; Concurrent instruction execution; Cache access; Scheduling instructions

One embodiment of the present invention sets forth a technique for scheduling cache access instructions for execution in a multi-threaded system so as to improve cache locality and system performance. A credit-based technique may be used to control instruction-by-instruction scheduling for each warp in a group so that the group of warps is processed uniformly. A credit is computed for each warp and contributes to a weight for that warp. The weight is used to select the instructions that are issued for execution.

Owner:NVIDIA CORP
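
The abstract leaves the credit formula open. The sketch below assumes one plausible choice: a warp's credit is how far it lags the group's average progress, its weight is that credit plus an optional static bias, and the ready warp with the highest weight issues next. Names such as `pick_warp` and `base_priority` are hypothetical.

```python
def pick_warp(progress, ready, base_priority=None):
    """Choose the next warp to issue from.

    progress: dict warp_id -> instructions already issued
    ready:    set of warp ids with an instruction ready to issue
    Hypothetical credit: how far a warp lags the group average, so laggards
    catch up and the group moves through its instructions together.
    """
    base_priority = base_priority or {}
    avg = sum(progress.values()) / len(progress)

    def weight(w):
        credit = avg - progress[w]
        return credit + base_priority.get(w, 0.0)

    # Highest weight wins; iterating in sorted order breaks ties toward the lowest id.
    return max(sorted(ready), key=weight)

def simulate(num_warps, instrs_per_warp, cycles):
    """Issue one instruction per cycle and record which warp issued it."""
    progress = {w: 0 for w in range(num_warps)}
    order = []
    for _ in range(cycles):
        ready = {w for w in progress if progress[w] < instrs_per_warp}
        if not ready:
            break
        w = pick_warp(progress, ready)
        progress[w] += 1
        order.append(w)
    return order, progress
```

With equal priorities the warps advance in lockstep, which is the uniform group progress the abstract aims for.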

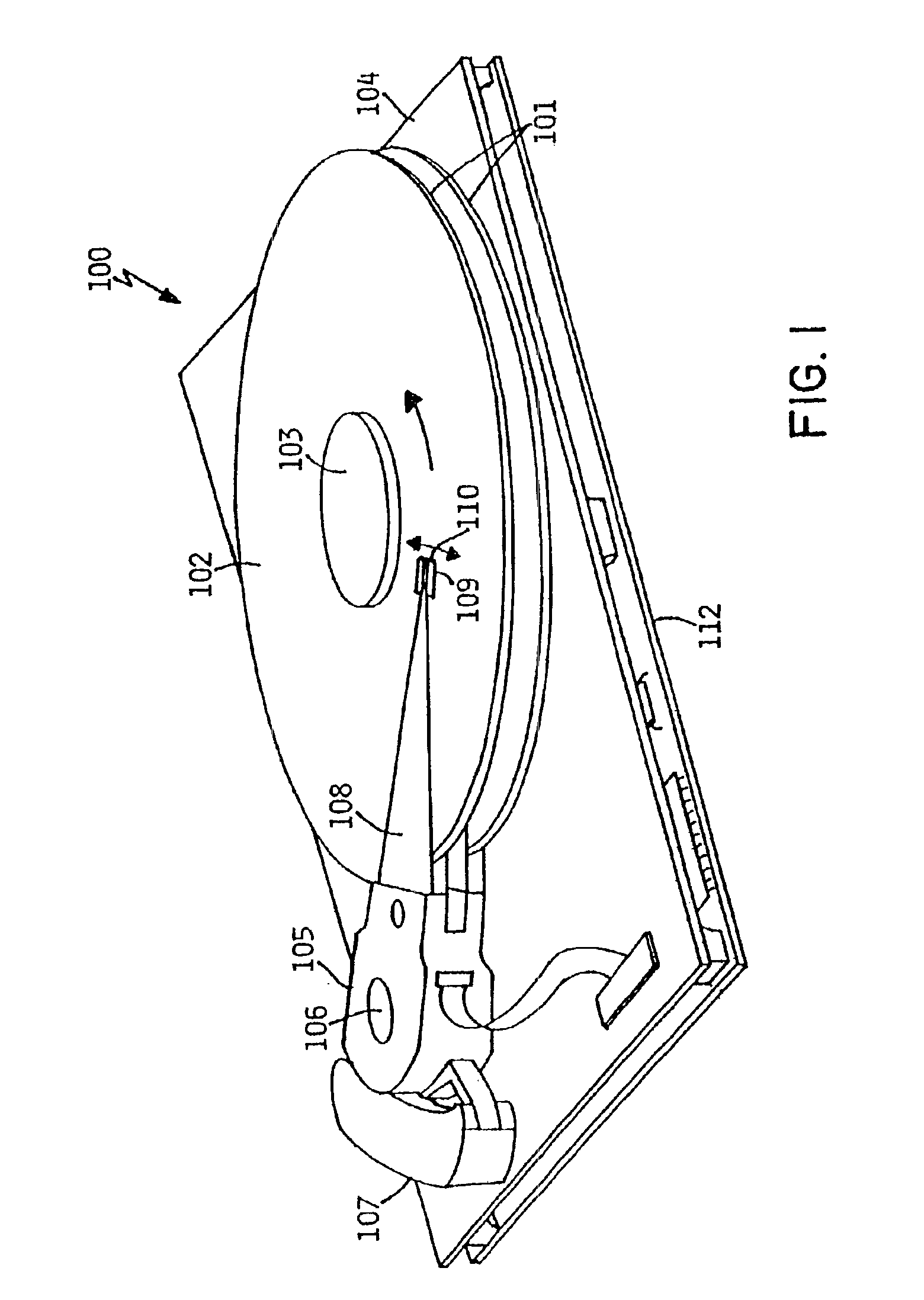

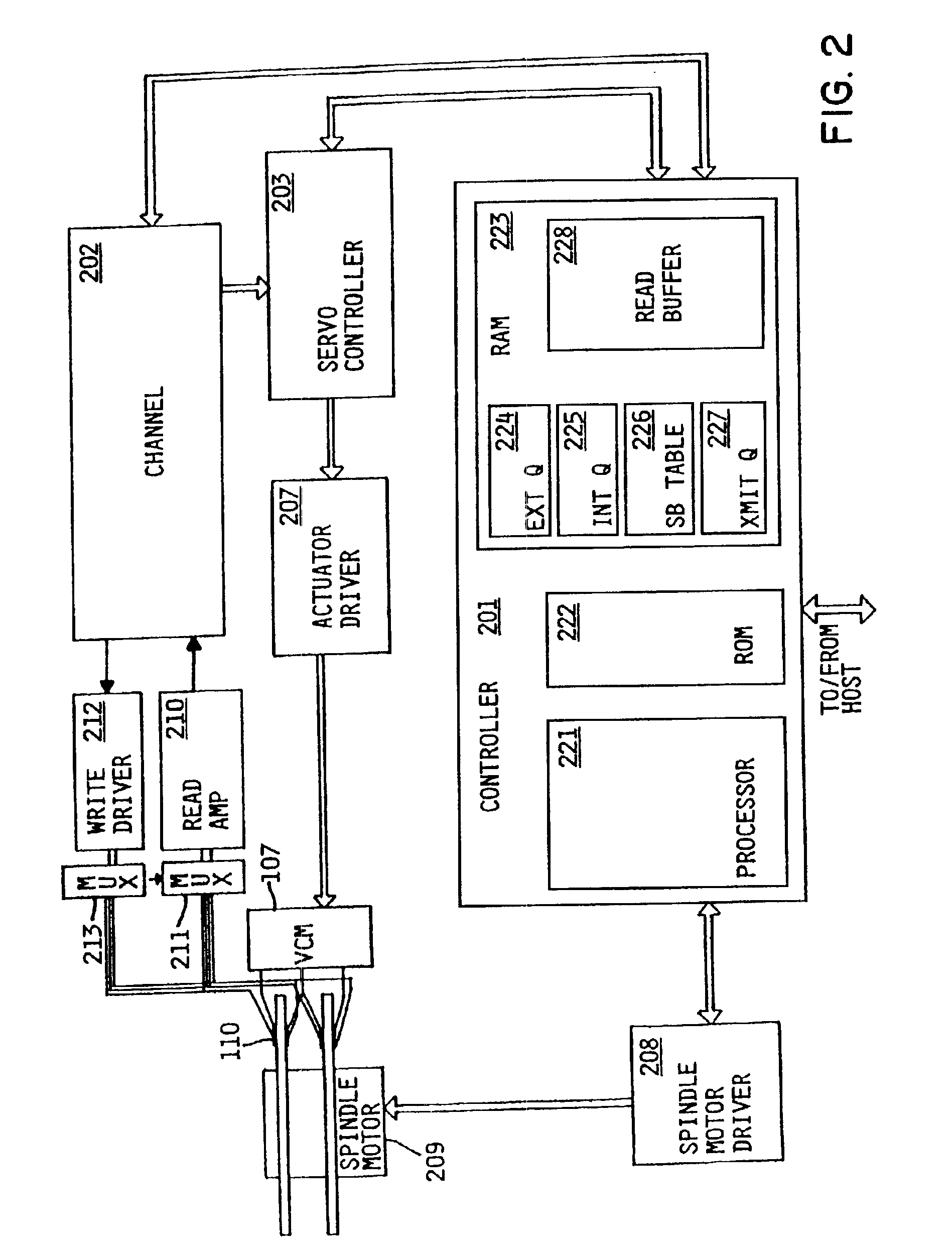

Method and apparatus for servicing mixed block size data access operations in a disk drive data storage device

Status: Inactive · Publication No.: US6925526B2 · Tags: Improve efficiency; Improve performance; Input/output to record carriers; Record information storage; Data access; Engineering

Write operations smaller than the full block size (short block writes) are accumulated internally by writing them to a temporary cache location on the disk. Once a write reaches the cache location, the disk drive signals the host that the operation has completed. Accumulation of short block writes in the drive is transparent to the host and does not expose data to loss. Accumulating a significant number of short block writes in the queue makes it possible to perform read/modify/write operations more efficiently. In operation, the drive preferably cycles between operating on the cache location and on the larger-block data area, to make efficient use of the cache and to select data access operations efficiently. In one embodiment, a portion of the disk surface is formatted at a smaller block size for use by legacy software.

Owner:INTELLECTUAL DISCOVERY INC
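
A small model of short-block accumulation, assuming 512-byte host blocks and 4096-byte native blocks (the abstract gives no sizes). Short writes are acknowledged as soon as they are staged in the cache area; `destage` later groups them by physical block so that each block needs only one read-modify-write, however many short writes landed in it.

```python
from collections import defaultdict

LOGICAL = 512    # assumed host ("short") block size in bytes
PHYSICAL = 4096  # assumed native block size of the main data area

class ShortWriteAccumulator:
    """Toy model: stage short writes, acknowledge at once, merge them later."""

    def __init__(self):
        self.disk = defaultdict(lambda: bytes(PHYSICAL))  # main data area
        self.staged = []    # (lba, data) pairs held in the cache location
        self.rmw_count = 0  # read-modify-write cycles performed on the data area

    def write_short(self, lba, data):
        assert len(data) == LOGICAL
        self.staged.append((lba, data))  # persisted in the cache area...
        return "complete"                # ...so completion can be signalled now

    def read_short(self, lba):
        for staged_lba, data in reversed(self.staged):  # newest staged copy wins
            if staged_lba == lba:
                return data
        ratio = PHYSICAL // LOGICAL
        off = (lba % ratio) * LOGICAL
        return self.disk[lba // ratio][off:off + LOGICAL]

    def destage(self):
        ratio = PHYSICAL // LOGICAL
        by_block = defaultdict(list)
        for lba, data in self.staged:            # arrival order is preserved per block
            by_block[lba // ratio].append((lba % ratio, data))
        for pba, updates in by_block.items():
            block = bytearray(self.disk[pba])    # read once
            for slot, data in updates:           # modify in arrival order
                block[slot * LOGICAL:(slot + 1) * LOGICAL] = data
            self.disk[pba] = bytes(block)        # write once
            self.rmw_count += 1
        self.staged.clear()
```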

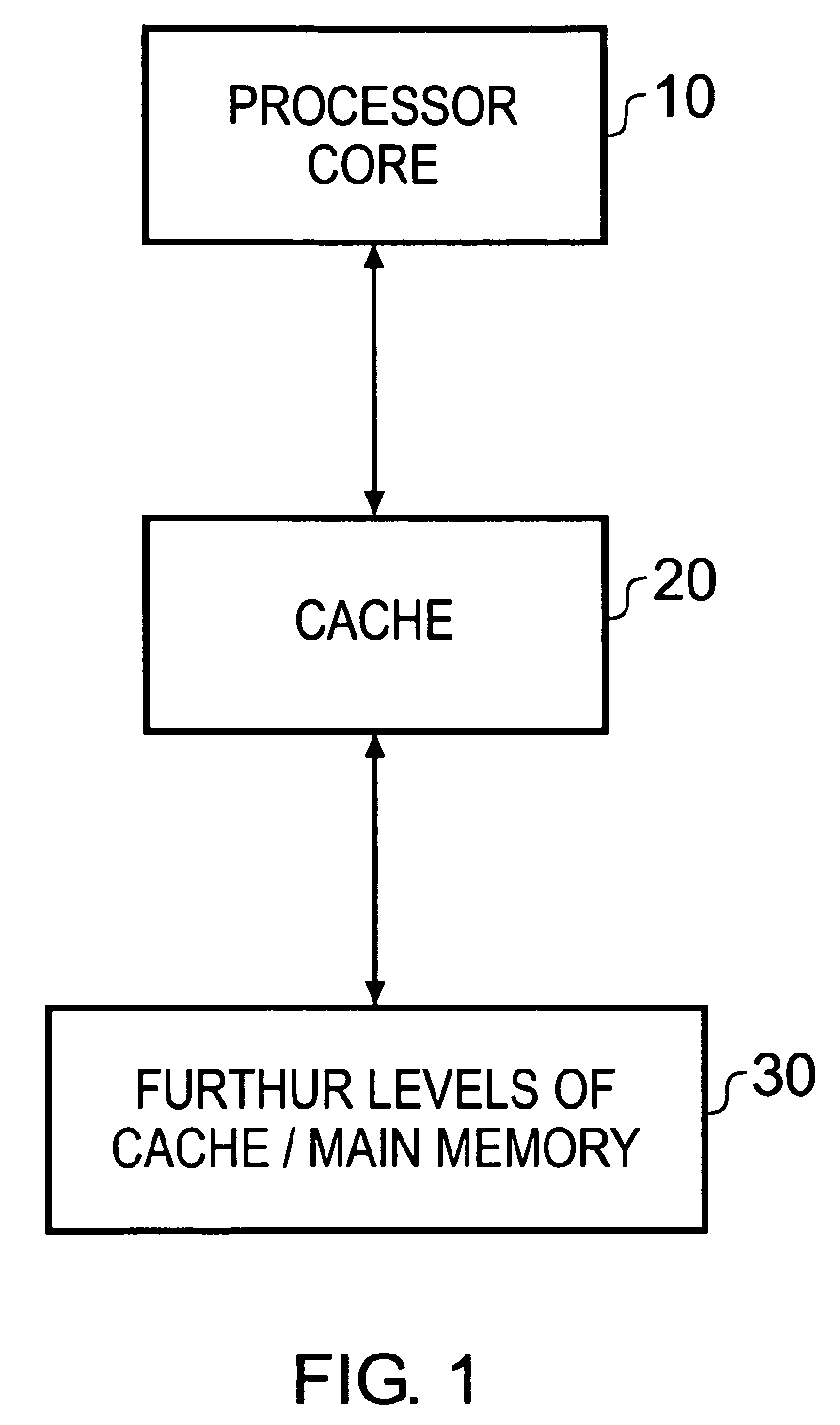

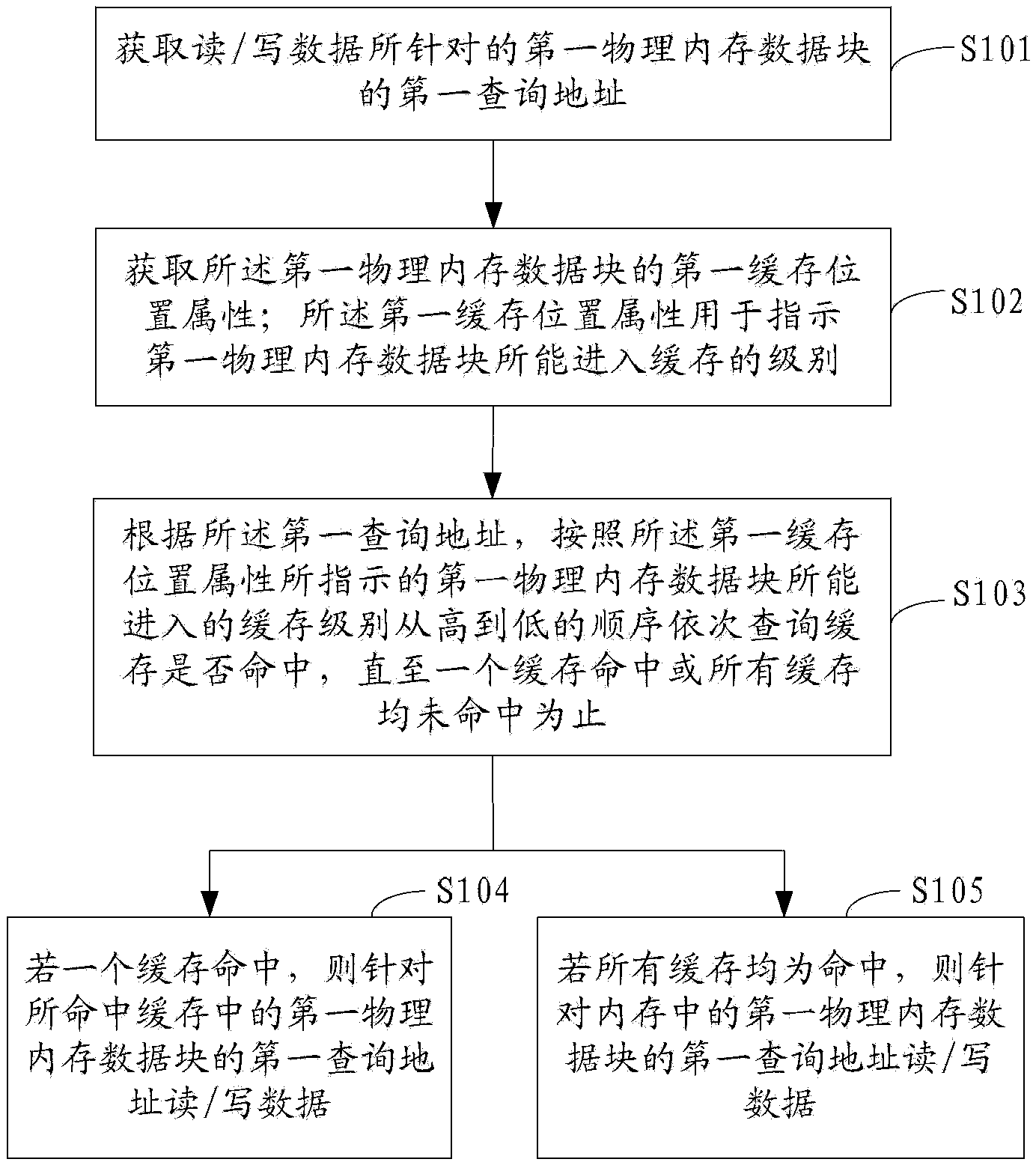

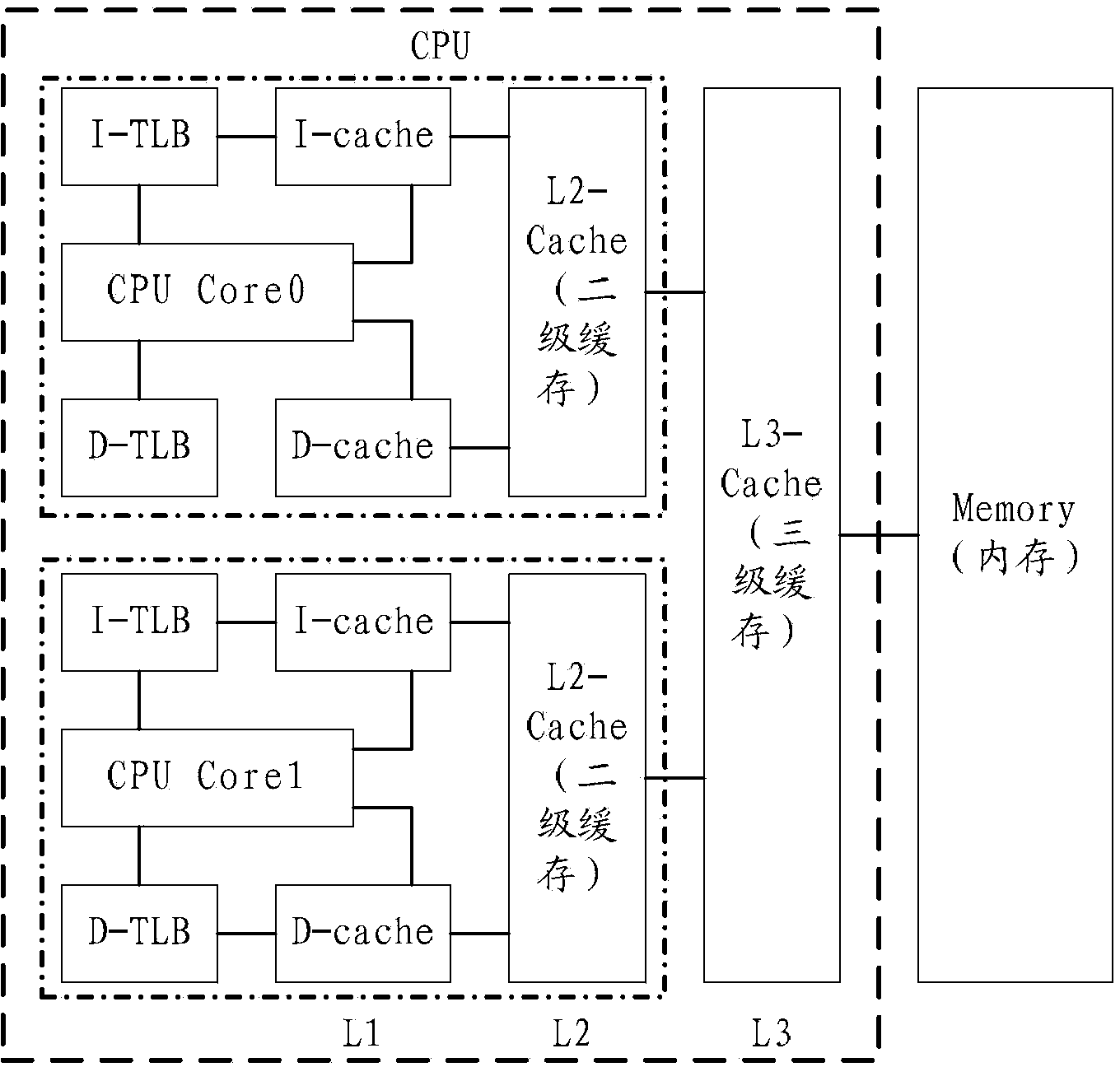

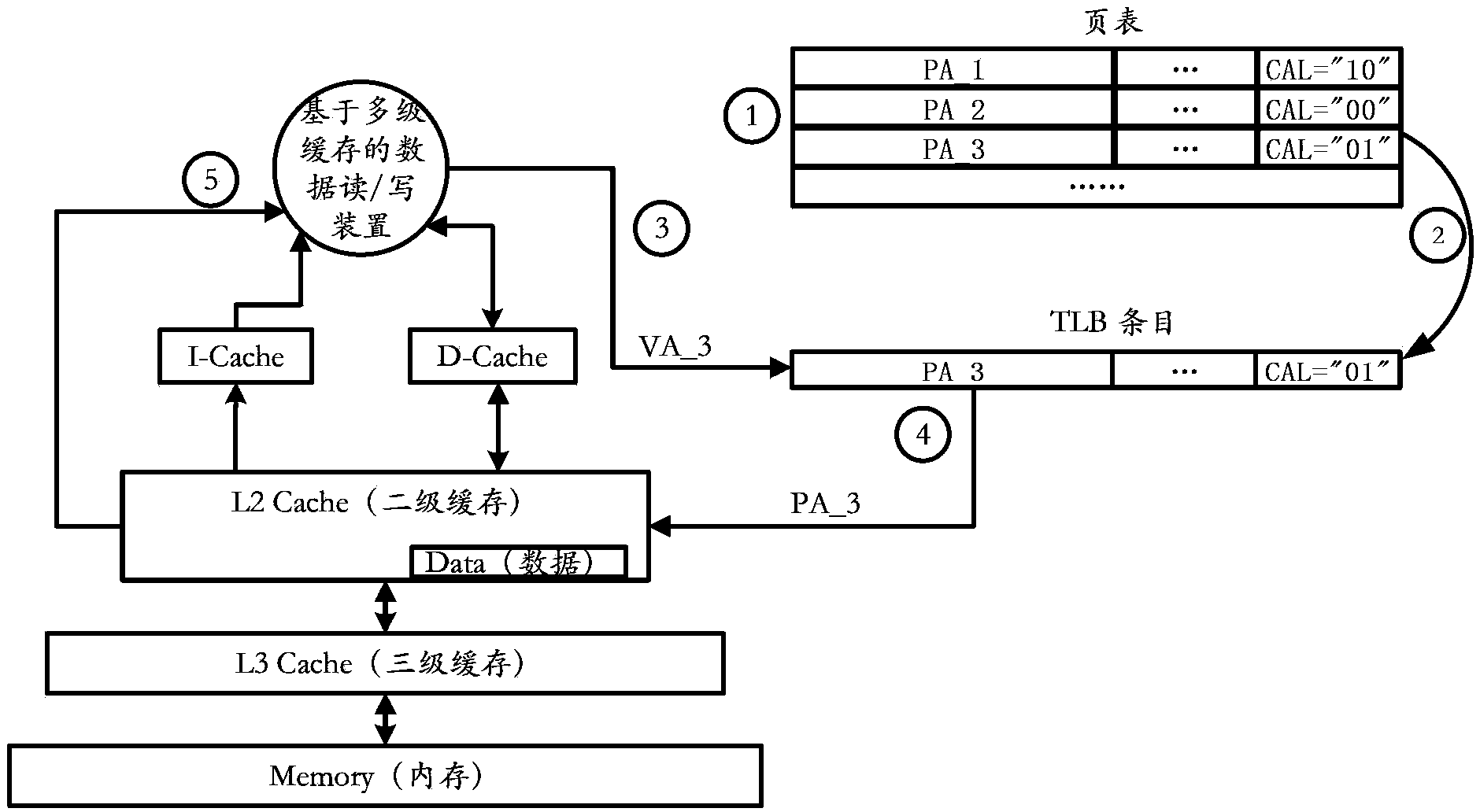

Data reading/writing method and device and computer system on basis of multi-level Cache

Status: Active · Publication No.: CN104346294A · Tags: Avoid entering; Improve access efficiency; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Cache access; Parallel computing

A multi-level-cache-based data reading/writing method and device, and a computer system, which relate to data reading/writing in computer systems and are intended to improve cache access efficiency during data reading/writing. The method comprises: acquiring a first query address of a first physical memory data block to be read or written; acquiring a first cache position attribute of the first physical memory data block; using the first query address, querying in turn, from the highest to the lowest cache level, each cache that the first cache position attribute indicates the block can access, until one cache is hit or none of the caches is hit; if a cache is hit, reading/writing the data for the first query address of the first physical memory data block in the hit cache; otherwise, if no cache is hit, reading/writing the data for the first query address of the first physical memory data block in memory.

Owner:HUAWEI TECH CO LTD +1
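
The lookup order can be sketched in a few lines. Encoding the cache position attribute as a set of permitted level names is an assumption, as is reading "high to low" as L1 first; only the read path is shown.

```python
class Level:
    """One cache level: a name and an address -> value store."""

    def __init__(self, name):
        self.name, self.data = name, {}

def read(addr, levels, memory, cache_attr):
    """Query only the levels the block's cache position attribute allows,
    starting at the level closest to the core (levels[0]).

    cache_attr: set of level names this physical memory block may use
    (a hypothetical encoding of the cache position attribute).
    Returns (value, where_it_was_found).
    """
    for level in levels:
        if level.name not in cache_attr:
            continue  # skip levels this block never enters
        if addr in level.data:
            return level.data[addr], level.name
    return memory[addr], "memory"  # no permitted level hit
```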

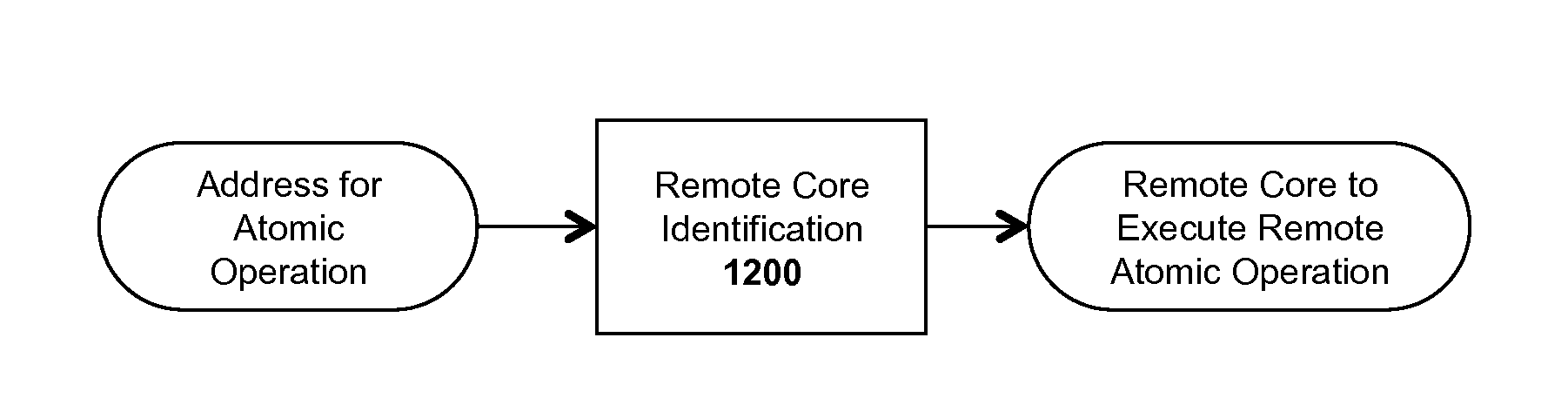

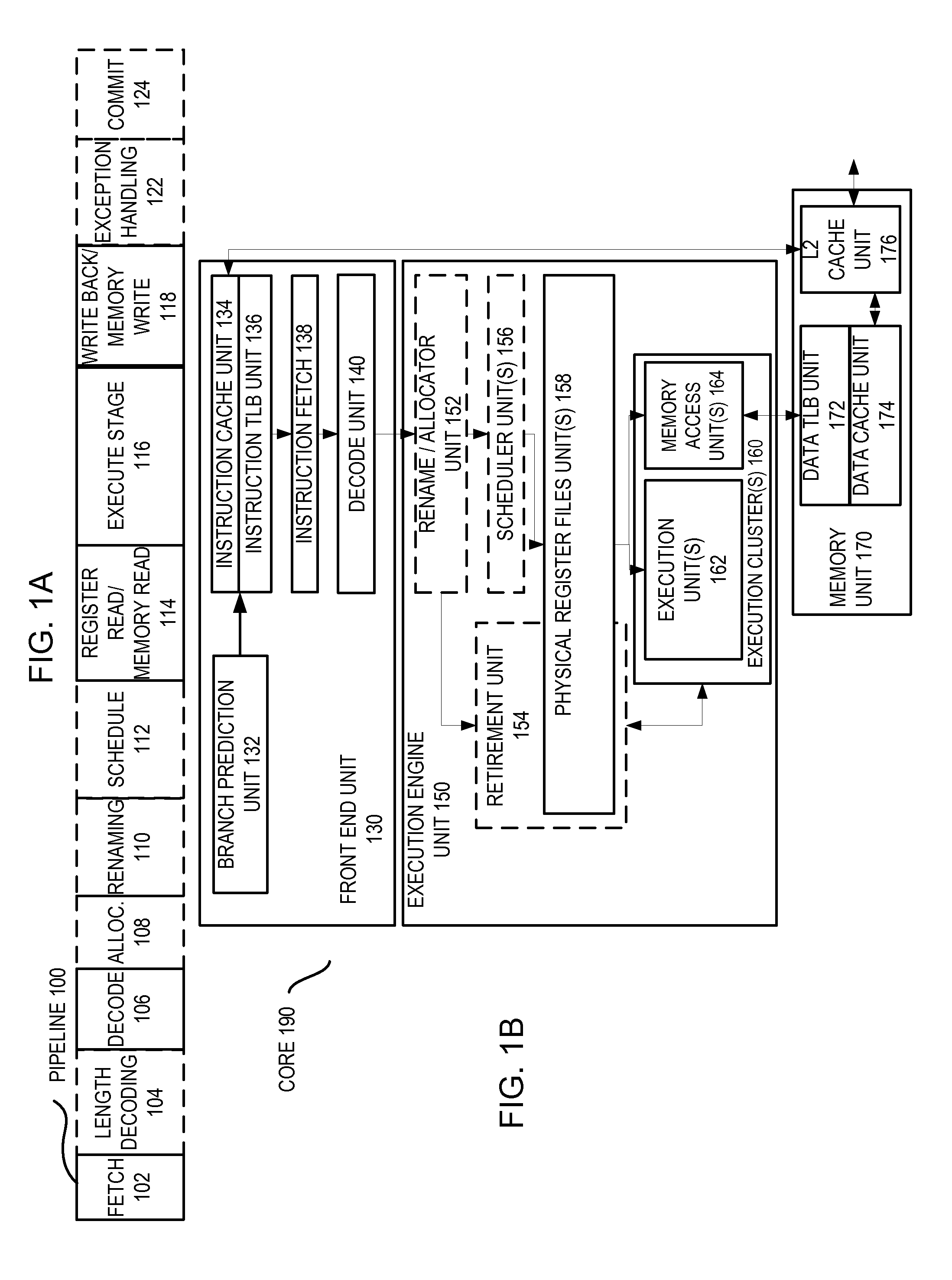

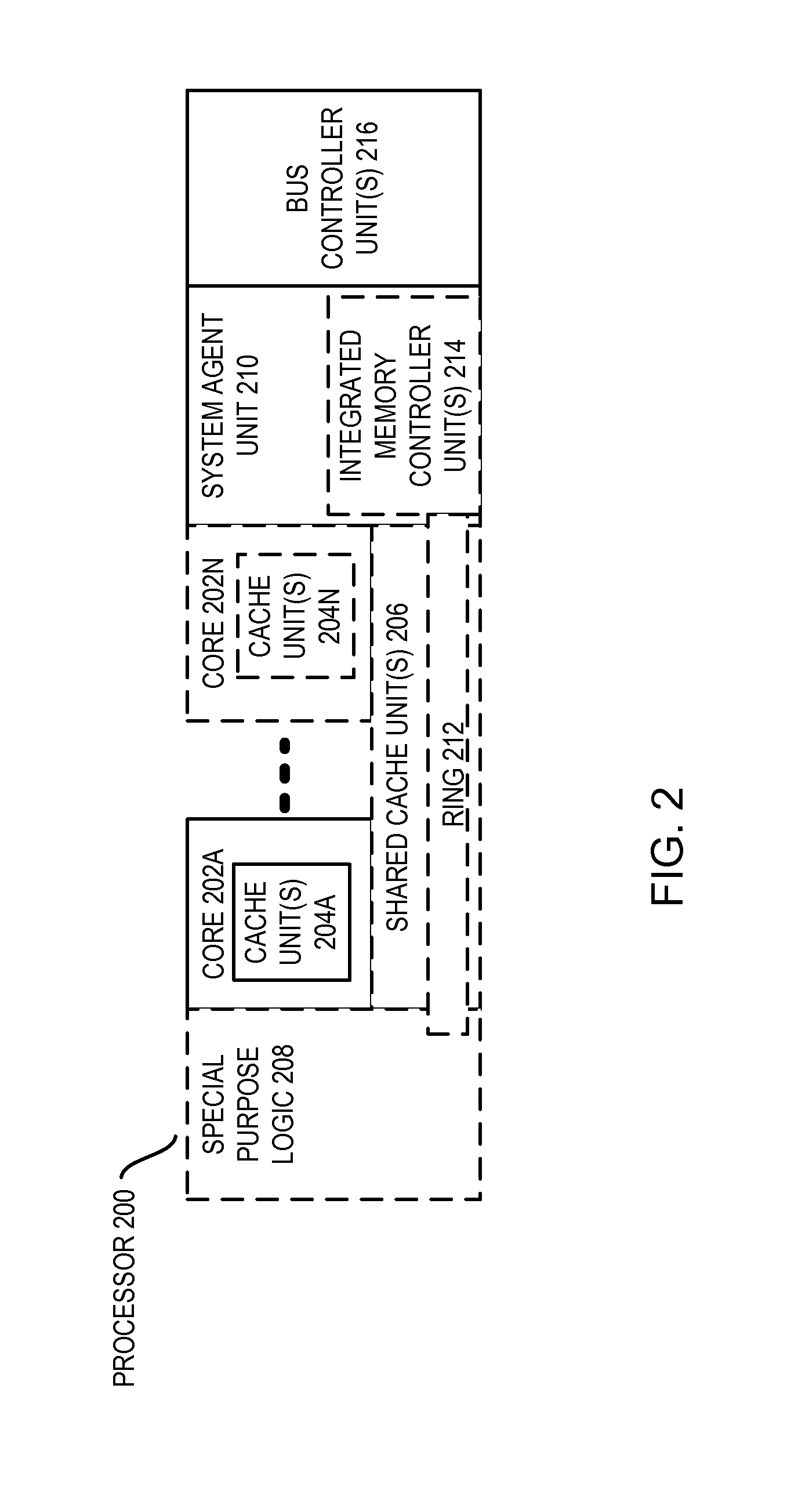

Method and apparatus for selecting cache locality for atomic operations

Status: Inactive · Publication No.: US20150178086A1 · Tags: Error detection/correction; Memory addressing/allocation/relocation; Cache locality; Atomic operations

Owner:INTEL CORP

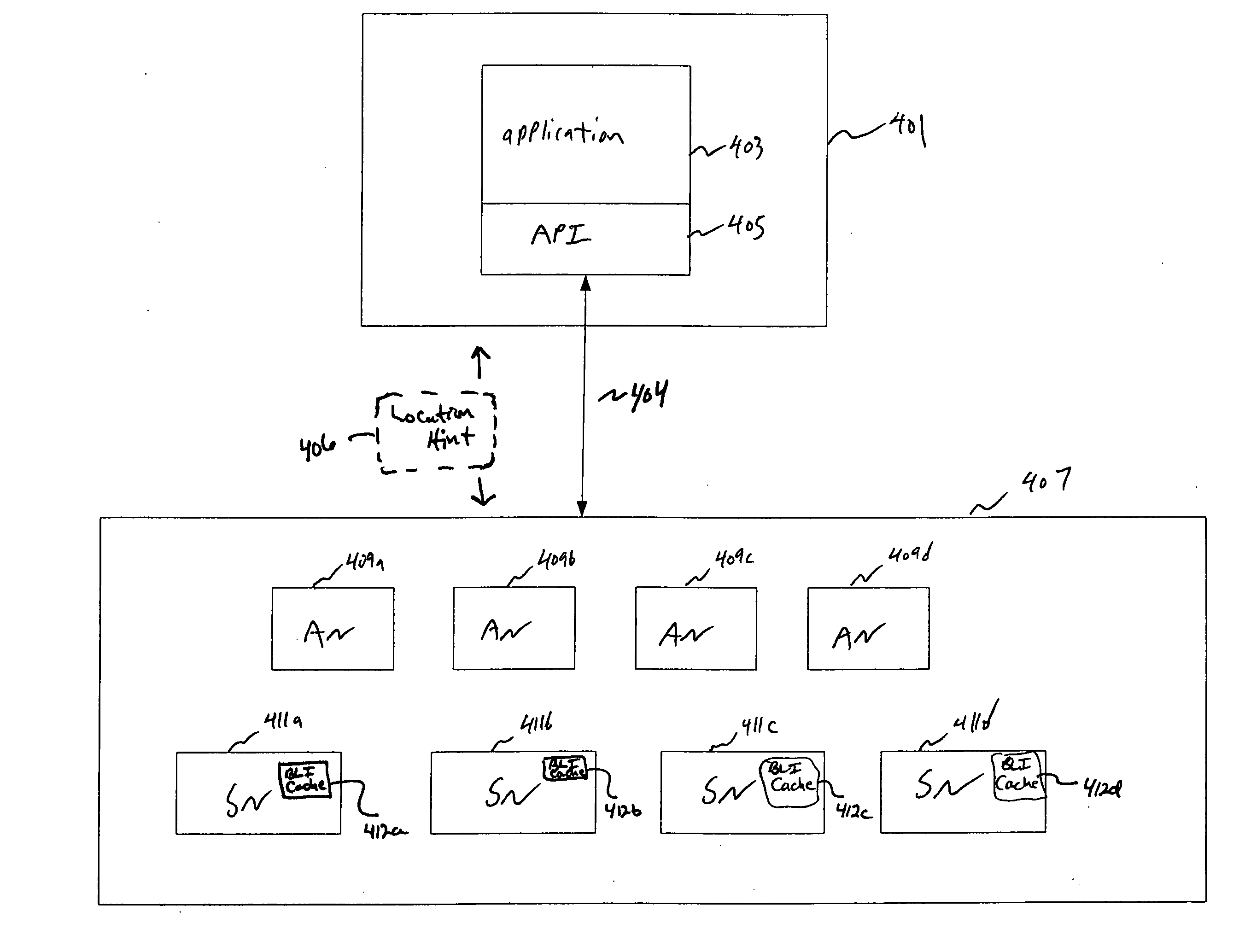

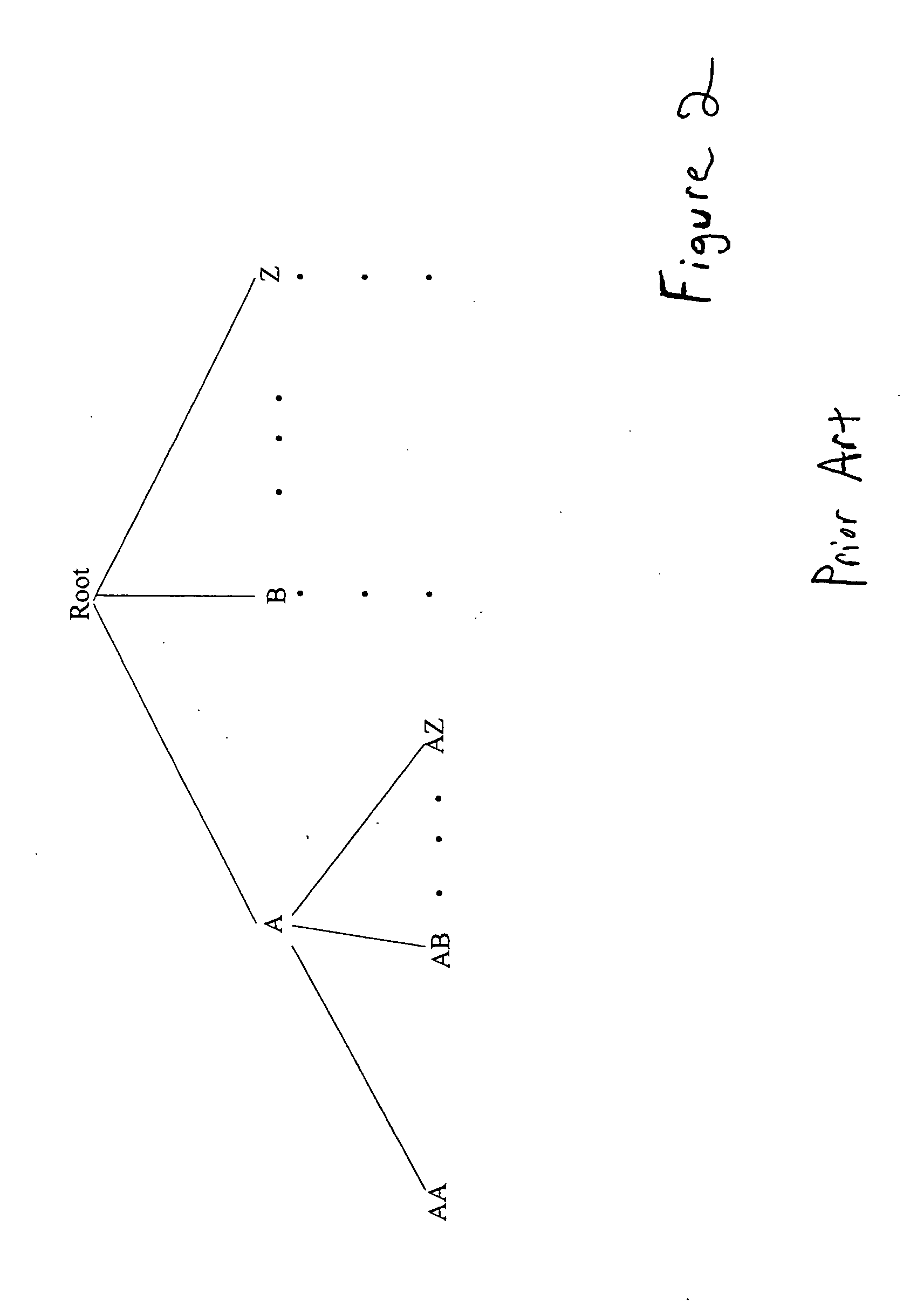

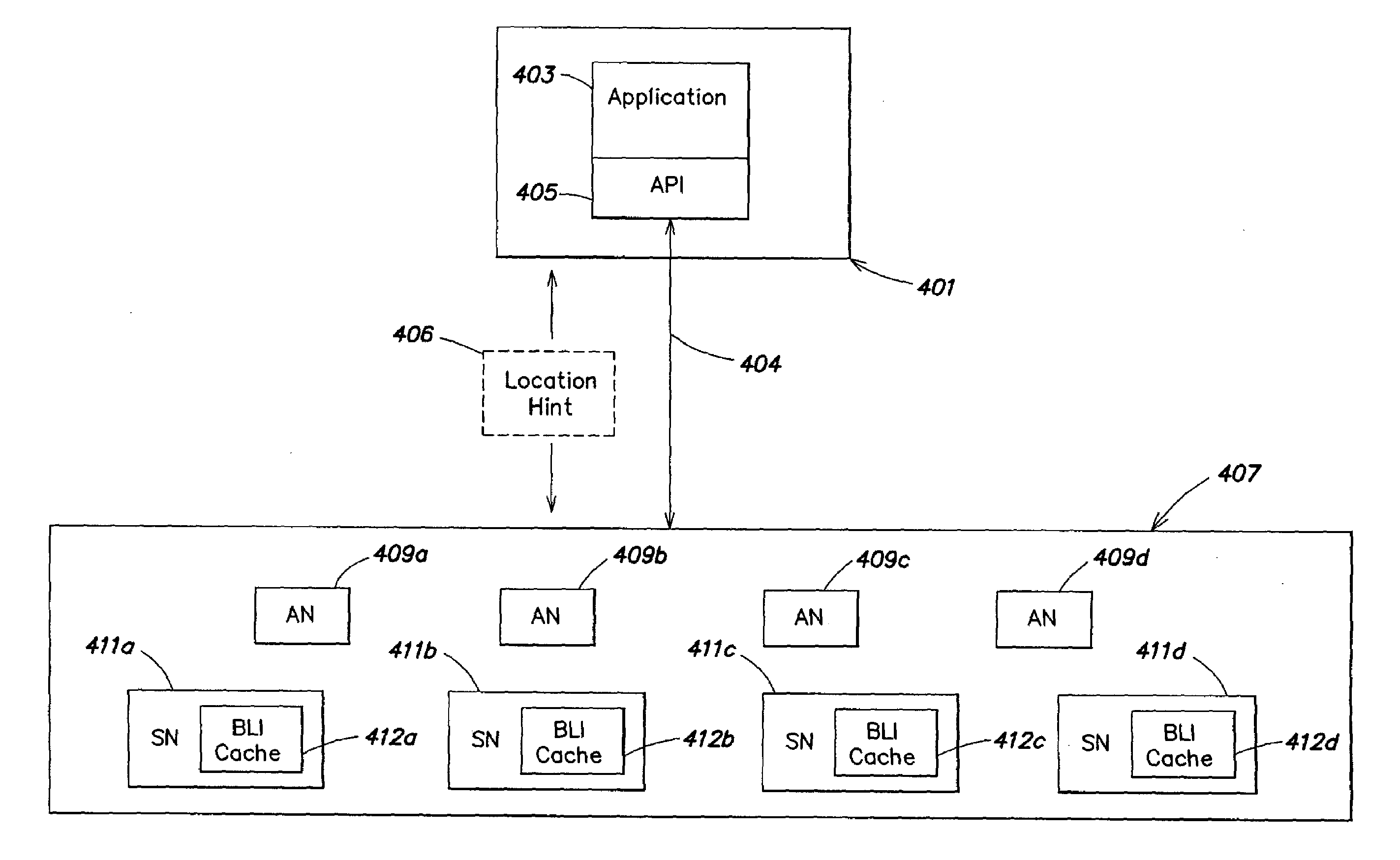

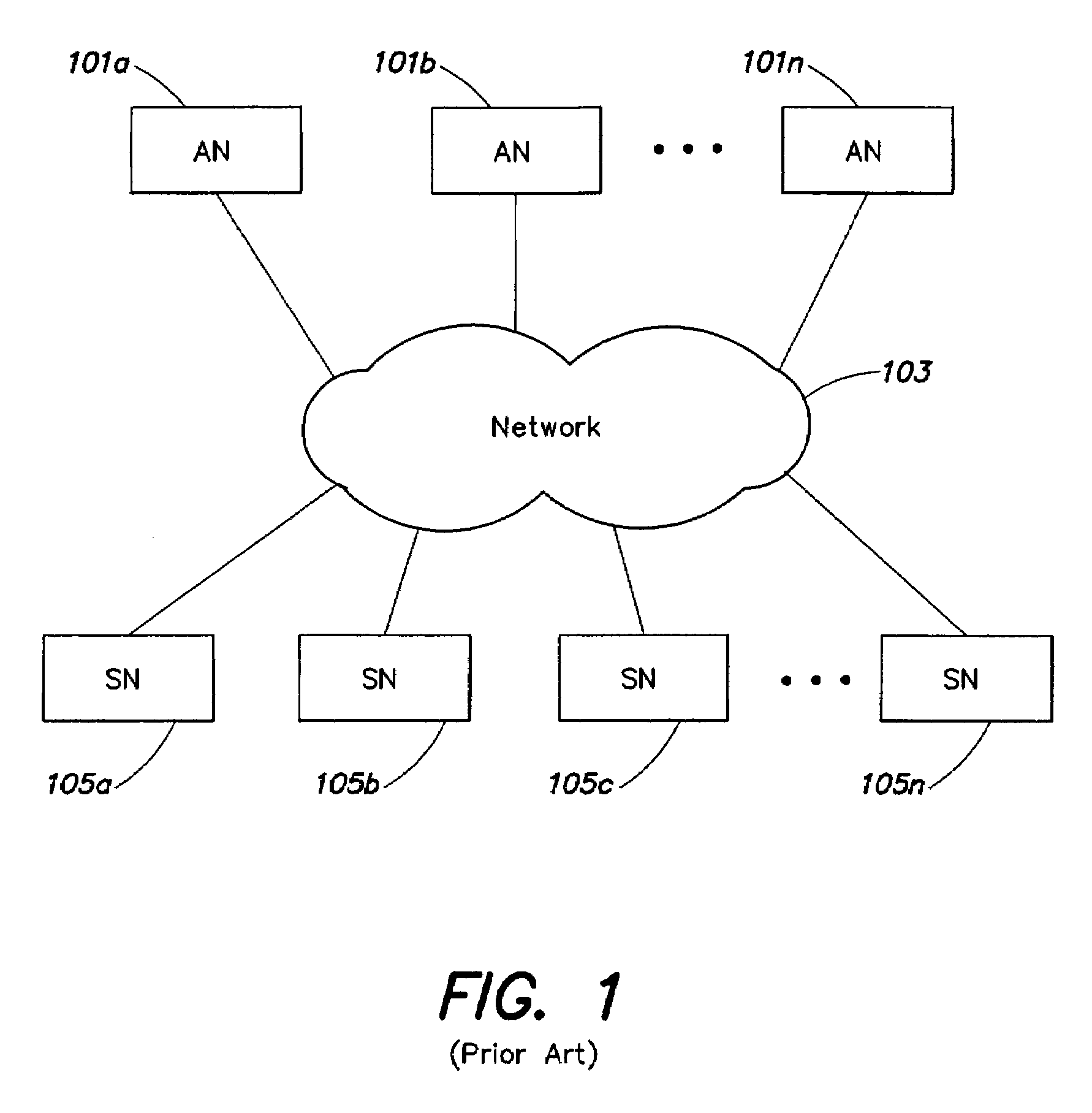

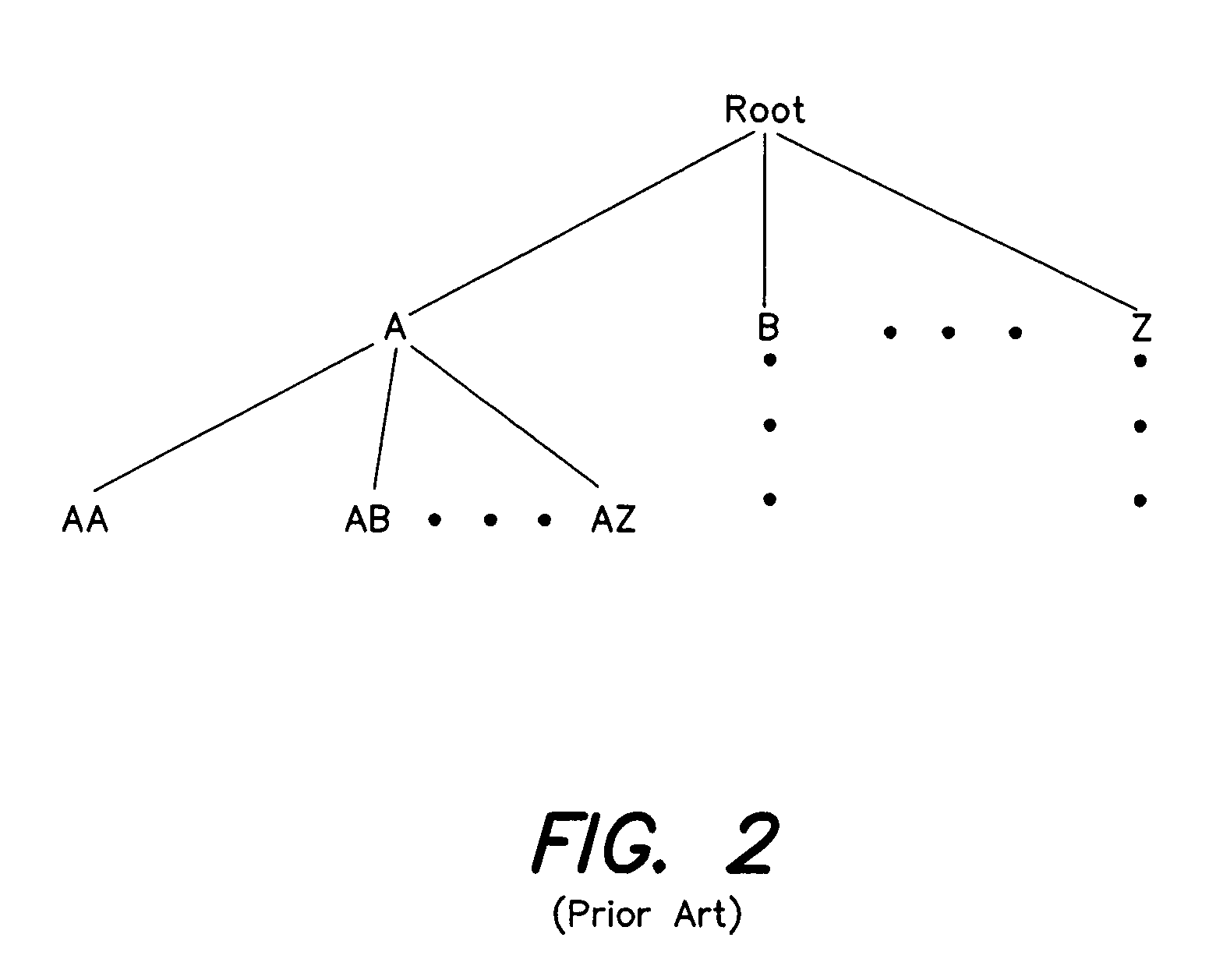

Methods and apparatus for caching a location index in a data storage system

Status: Active · Publication No.: US20050125627A1 · Tags: Memory addressing/allocation/relocation; Data switching networks; Data store; Inherent location

One embodiment is a system for locating content on a storage system, in which the storage system provides the host with a location hint indicating where the data is physically stored, which the host can resubmit with future access requests. In another embodiment, an index that maps content addresses to physical storage locations is cached on the storage system. In yet another embodiment, intrinsic locations are used to select a storage location for newly written data based on an address of the data. In a further embodiment, units of data that are stored at approximately the same time have location index entries that are proximate in the index.

Owner:EMC IP HLDG CO LLC

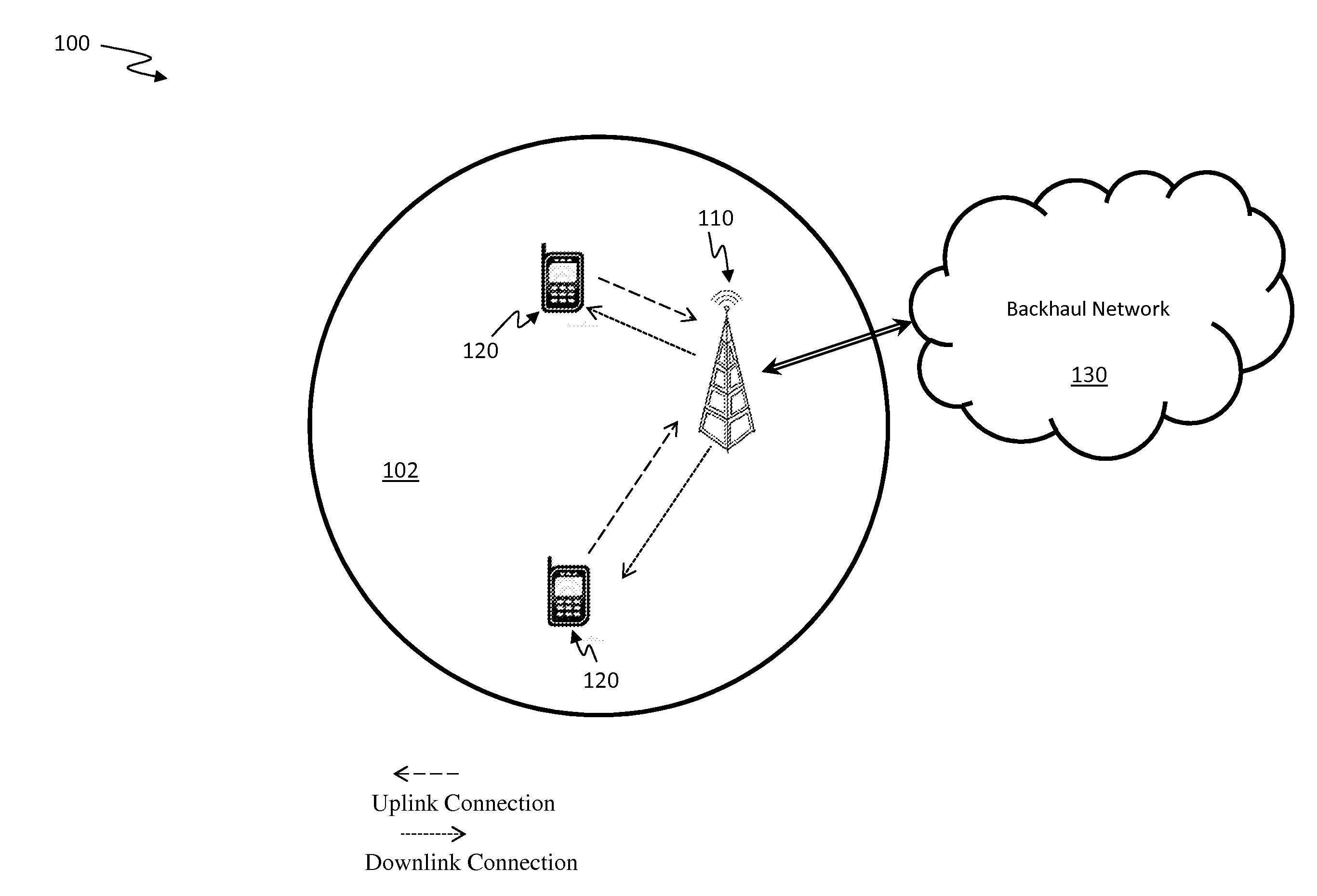

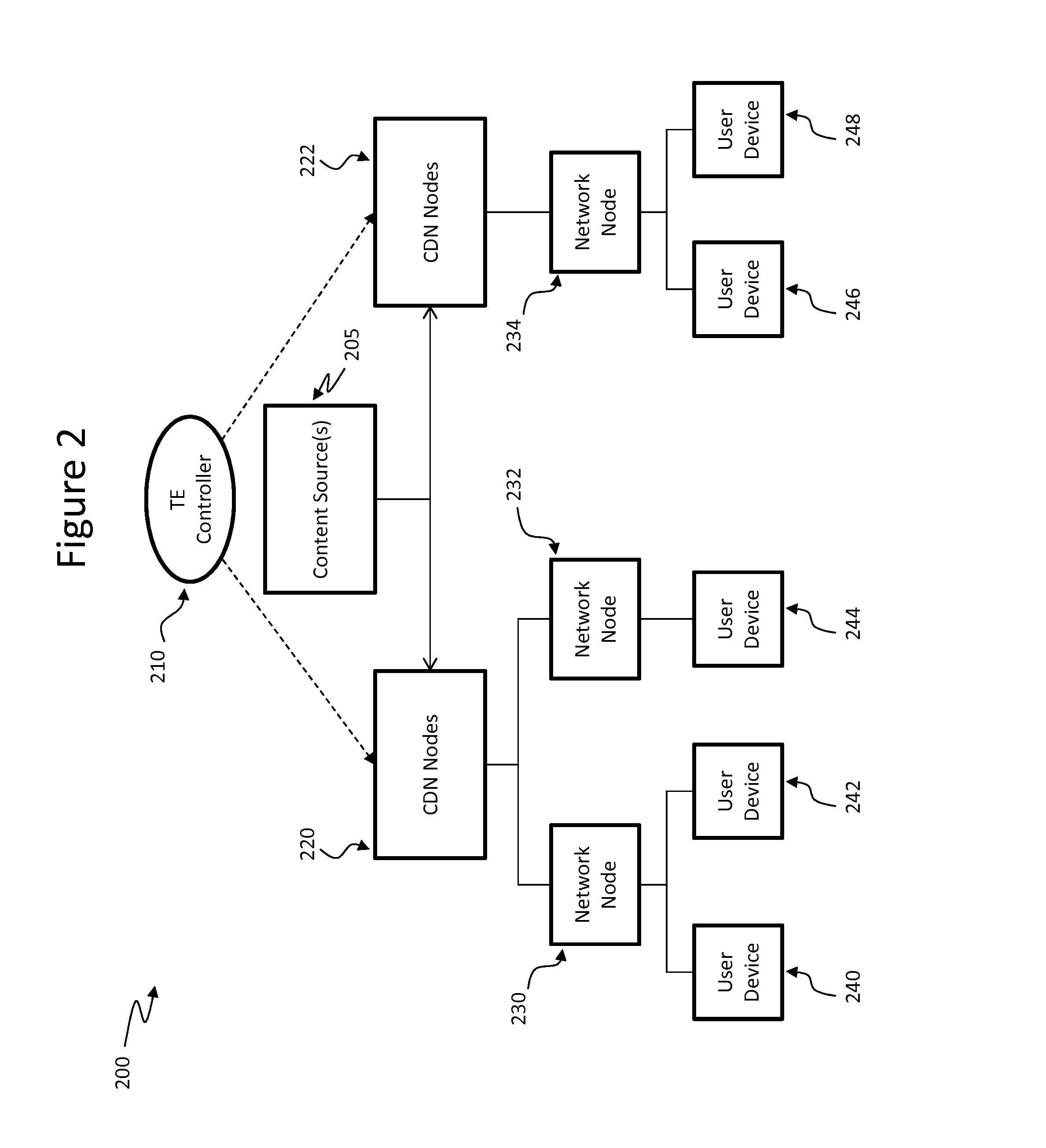

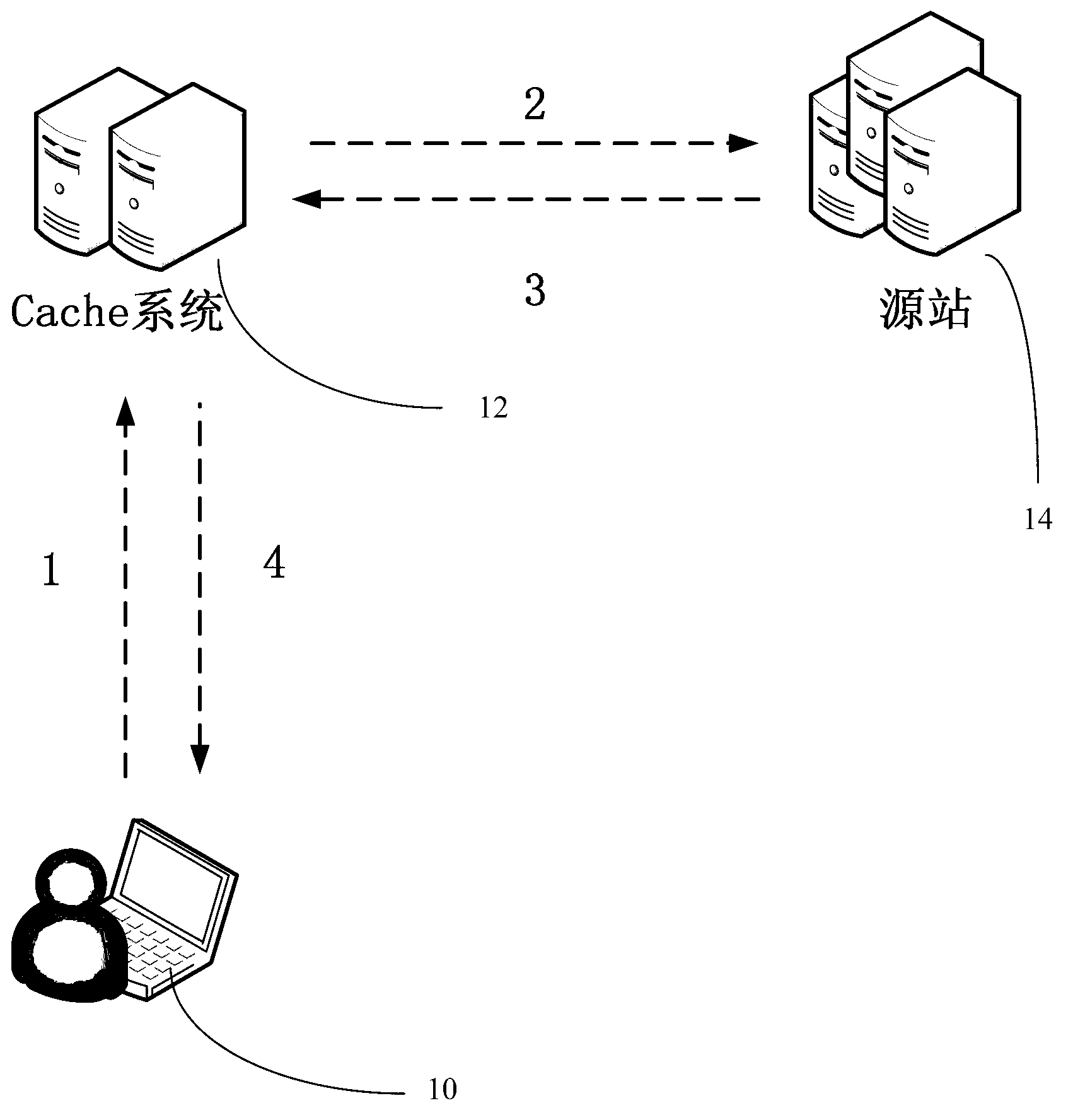

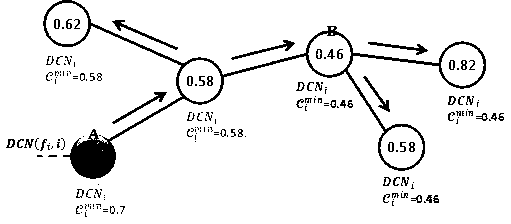

Distributed Content Discovery for In-Network Caching

Network caching performance can be improved by allowing users to discover distributed cache locations storing content of a central content server. Specifically, retrieving the content from a distributed cache proximately located to the user, rather than from the central content server, may allow for faster content delivery, while also consuming fewer network resources. Content can be associated with distributed cache locations storing that content by cache location tables, which may be maintained at intermediate network nodes, such as border routers and other devices positioned in-between end-users and central content servers. Upon receiving a query, the intermediate network nodes may determine whether the content requested by the query is associated with a cache location in the cache location table, and if so, provide the user with a query response identifying the associated cache location.

Owner:HUAWEI TECH CO LTD
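
A minimal sketch of an intermediate node's cache location table. Proximity is modeled as a hypothetical hop count registered with each cache entry; any distance metric would do, and how entries are learned and expired is not covered by the abstract.

```python
class BorderRouter:
    """Intermediate node holding a cache location table: content -> caches holding it."""

    def __init__(self, origin):
        self.origin = origin  # central content server
        self.table = {}       # content name -> {cache_id: hop distance from this node}

    def register(self, content, cache_id, hops):
        self.table.setdefault(content, {})[cache_id] = hops

    def query(self, content):
        """Answer with the nearest cache holding the content, or the central
        content server when the table has no entry for it."""
        caches = self.table.get(content)
        if not caches:
            return self.origin
        return min(caches, key=caches.get)
```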

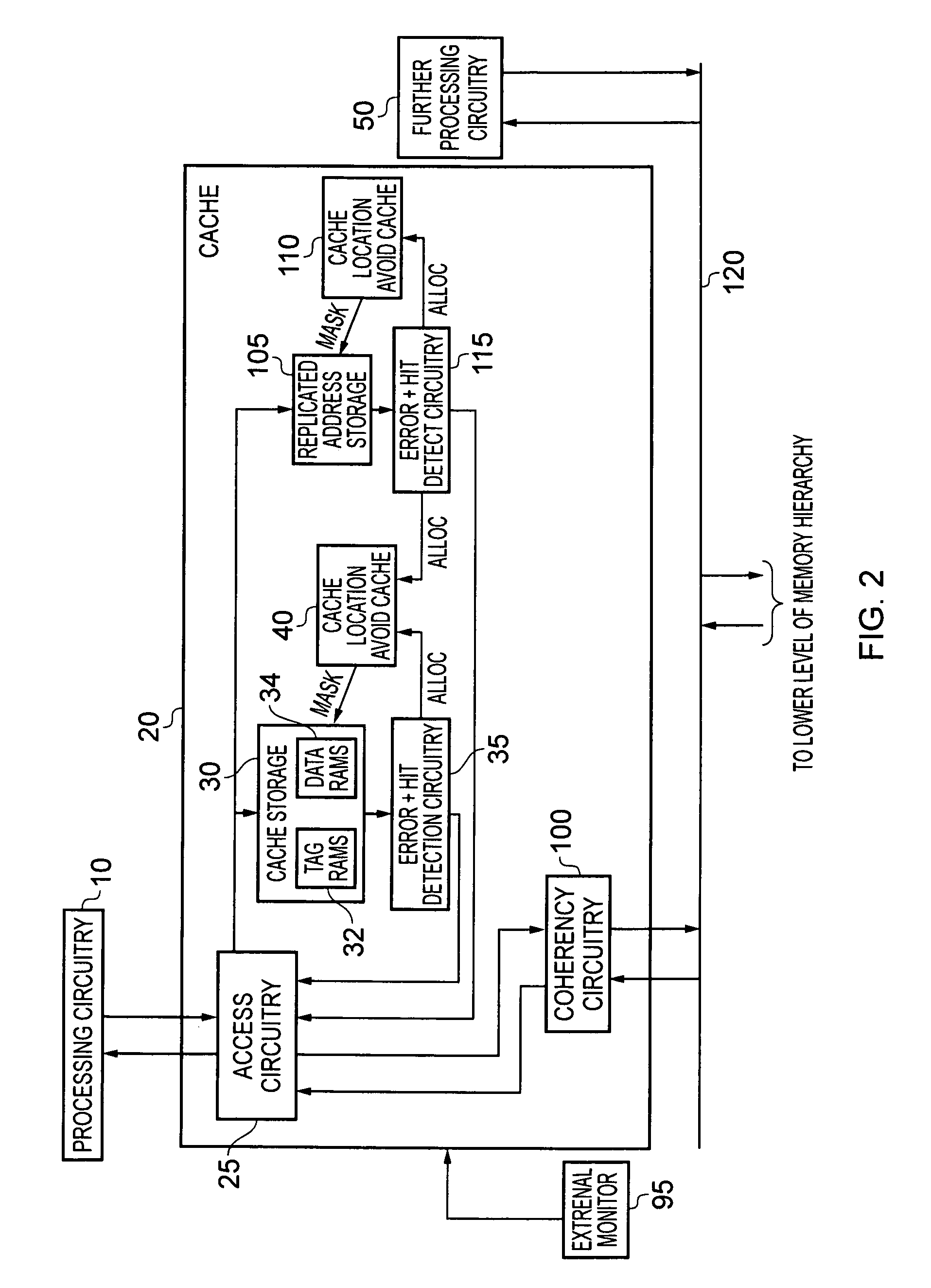

Handling of errors in a data processing apparatus having a cache storage and a replicated address storage

Status: Active · Publication No.: US20110047411A1 · Tags: Reduce power consumption; Improve system reliability; Error prevention; Transmission systems; Treatment error; Data value

A data processing apparatus and method are provided for handling errors. The data processing apparatus comprises processing circuitry for performing data processing operations, a cache storage having a plurality of cache records for storing data values for access by the processing circuitry when performing the data processing operations, and a replicated address storage having a plurality of entries, each entry having a predetermined associated cache record within the cache storage and being arranged to replicate the address indication stored in the associated cache record. On detecting a cache record error when accessing a cache record of the cache storage, a record of a cache location avoid storage is allocated to store a cache record identifier for the accessed cache record. On detection of an entry error when accessing an entry of the replicated address storage, use of the address indication currently stored in that accessed entry of the replicated address storage is prevented, and a command is issued to the cache location avoid storage. In response to the command, a record of the cache location avoid storage is allocated to store the cache record identifier for the cache record of the cache storage associated with the accessed entry of the replicated address storage. Any cache record whose cache record identifier is stored in the cache location avoid storage is logically excluded from the plurality of cache records of the cache storage for the purposes of subsequent operation of the cache storage. Such an approach enables errors to be correctly handled, prevents errors from spreading in a system, and minimises communication necessary on detection of an error in a data processing apparatus having both a cache storage and a replicated address storage.

Owner:ARM LTD

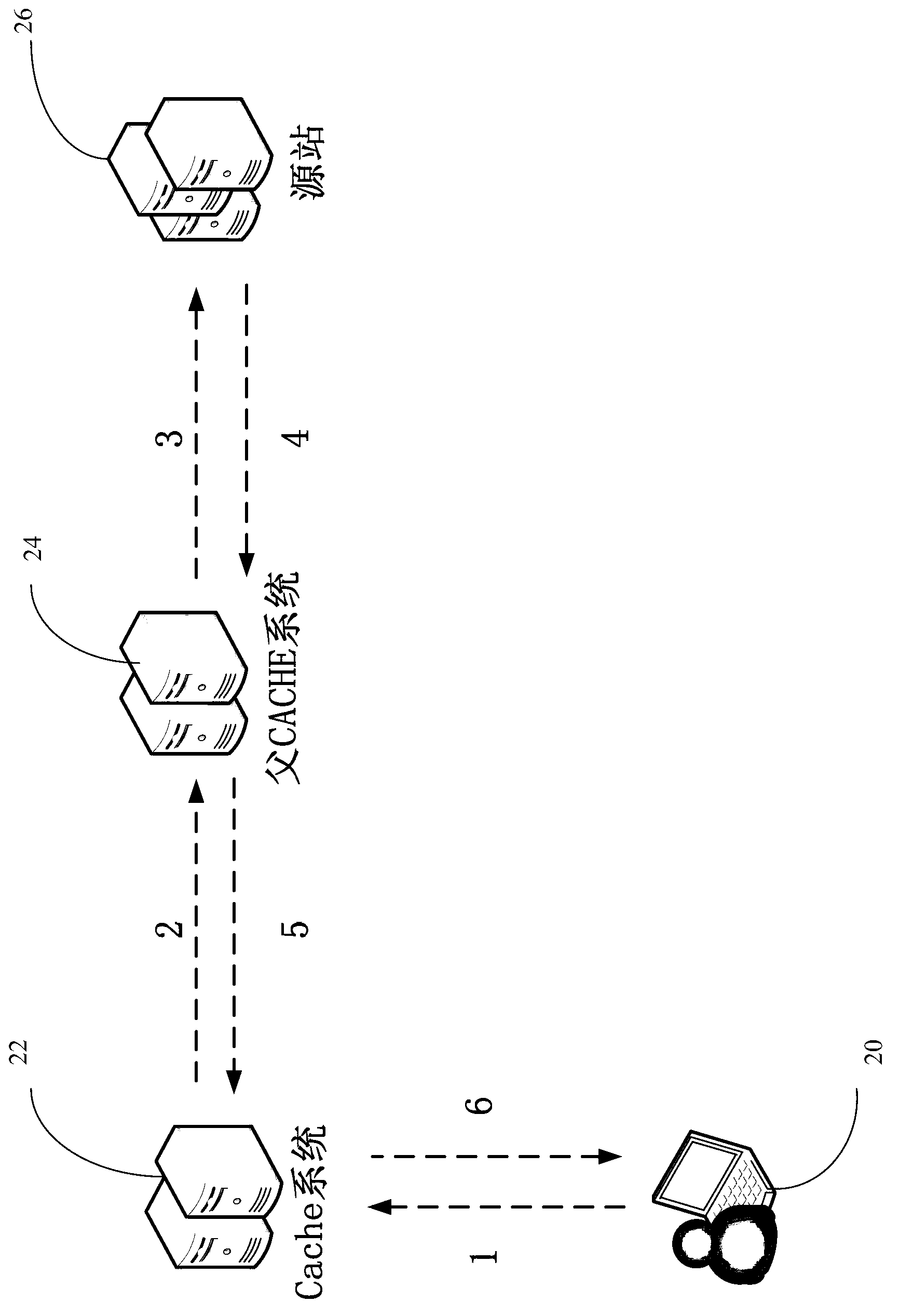

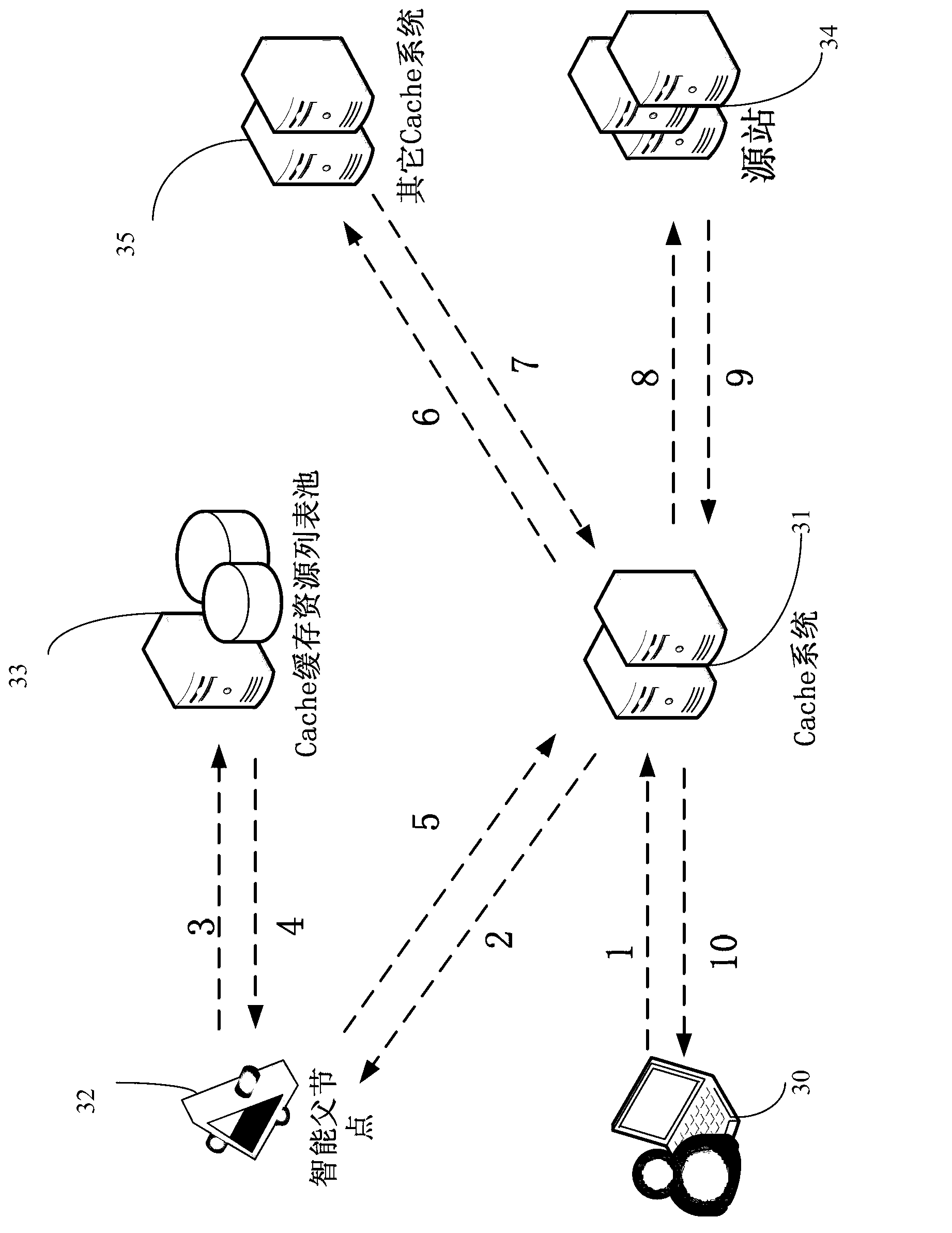

Method and system for sharing Web cached resource based on intelligent father node

Status: Active · Publication No.: CN102843426A · Tags: Play the role of intelligent guidance; Reduce back-to-source bandwidth; Transmission; Relationship - Father; Web cache

The invention discloses a method and system for sharing Web cached resources based on an intelligent father node, intended to further reduce the back-to-source bandwidth of a caching system and the access load on the source station. The system comprises multiple Web cache nodes, a source station server, a cached resource list pool, and the intelligent father node. The Web cache nodes cache resources, receive user requests, and return the requested resources to users; the source station server stores the source data; the cached resource list pool receives the resources reported by the Web cache nodes and builds a detailed resource list covering all of them; and the intelligent father node receives requests from a Web cache node, looks up the cache position of the requested resource in the cached resource list pool, and schedules resource sharing among the Web cache nodes by redirection.

Owner:CHINANETCENT TECH

Methods and apparatus for caching a location index in a data storage system

Status: Active · Publication No.: US7159070B2 · Tags: Memory addressing/allocation/relocation; Data switching networks; Data selection; Engineering

One embodiment is a system for locating content on a storage system, in which the storage system provides the host with a location hint indicating where the data is physically stored, which the host can resubmit with future access requests. In another embodiment, an index that maps content addresses to physical storage locations is cached on the storage system. In yet another embodiment, intrinsic locations are used to select a storage location for newly written data based on an address of the data. In a further embodiment, units of data that are stored at approximately the same time have location index entries that are proximate in the index.

Owner:EMC IP HLDG CO LLC

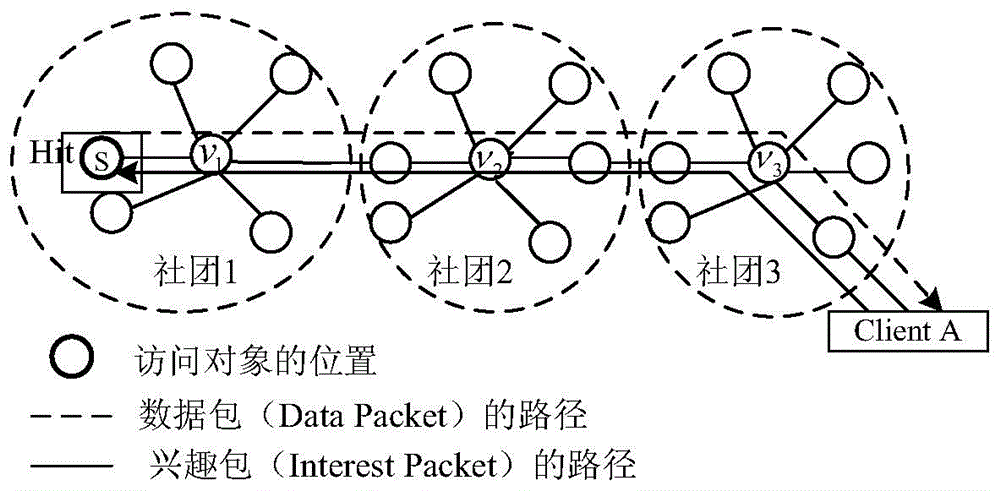

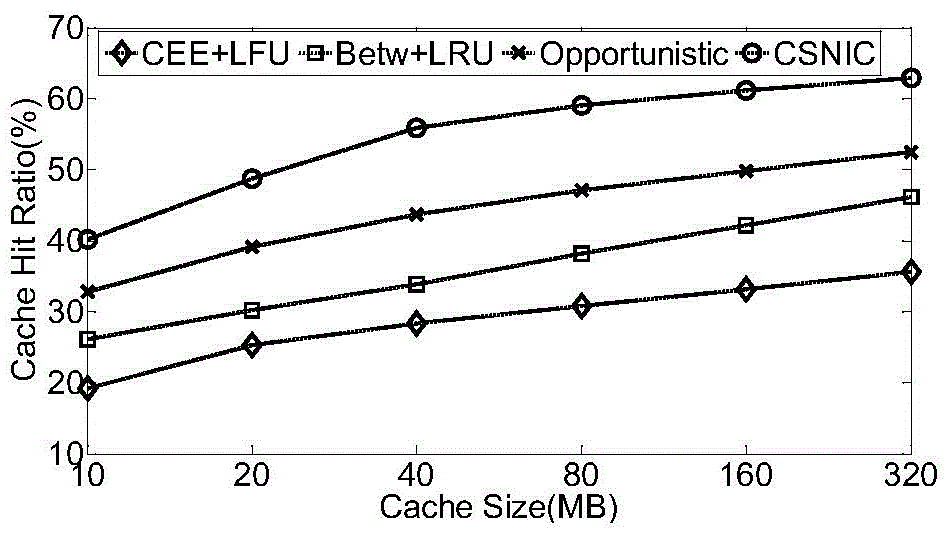

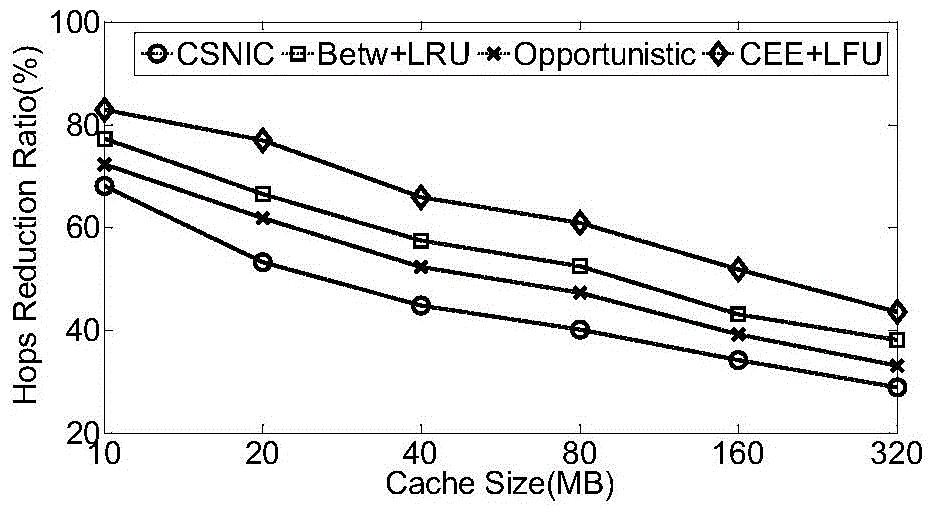

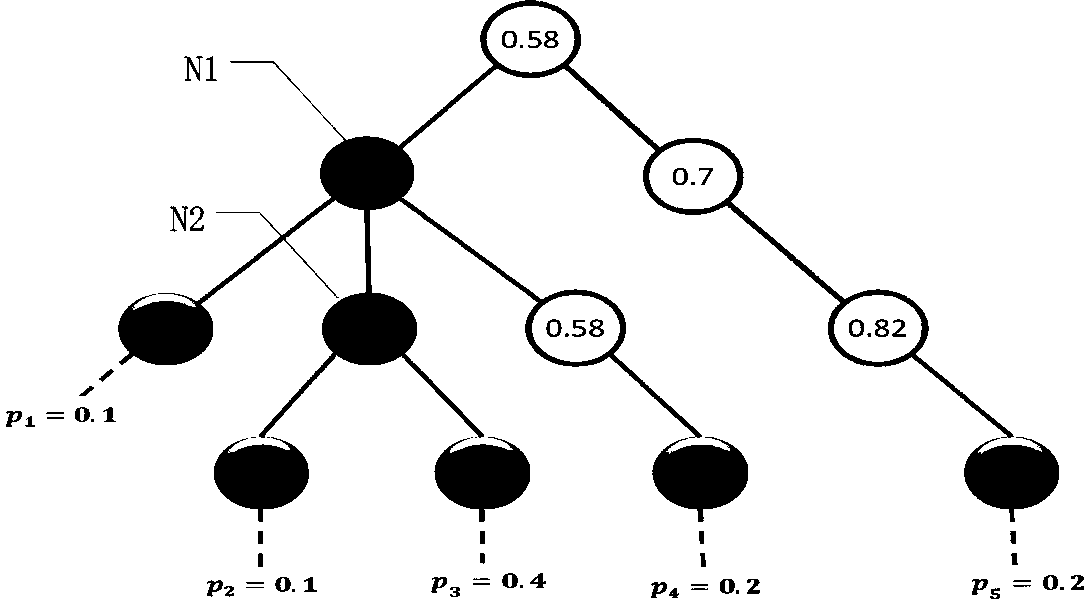

ICN cache strategy based on node community importance

The invention discloses an ICN cache strategy based on node community importance. The strategy includes the steps of: 1) defining node community importance; 2) determining a content cache position based on node community importance; and 3) adopting a node content replacement mechanism based on node community importance. The strategy exploits the community characteristic of the Internet topology, determining content cache positions and performing cache replacement according to the importance of each node within the community it belongs to. Simulation experiments were carried out in different network environments, and the experimental results verify the effectiveness of the method.

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV

Disowning cache entries on aging out of the entry

Status: Inactive · Publication No.: US20070174554A1 · Tags: Improve system performance; Memory addressing/allocation/relocation; Term memory; Data storing

A caching arrangement in which portions of data stored in slower main memory are transferred to faster memory located between one or more processors and the main memory. An individual cache system must communicate with, or check, other associated cache systems to determine whether they hold a copy of a given cached location before or upon modification or appropriation of the data at that location. The cache further includes provisions for determining when the data stored in a particular memory location may be replaced.

Owner:IBM CORP

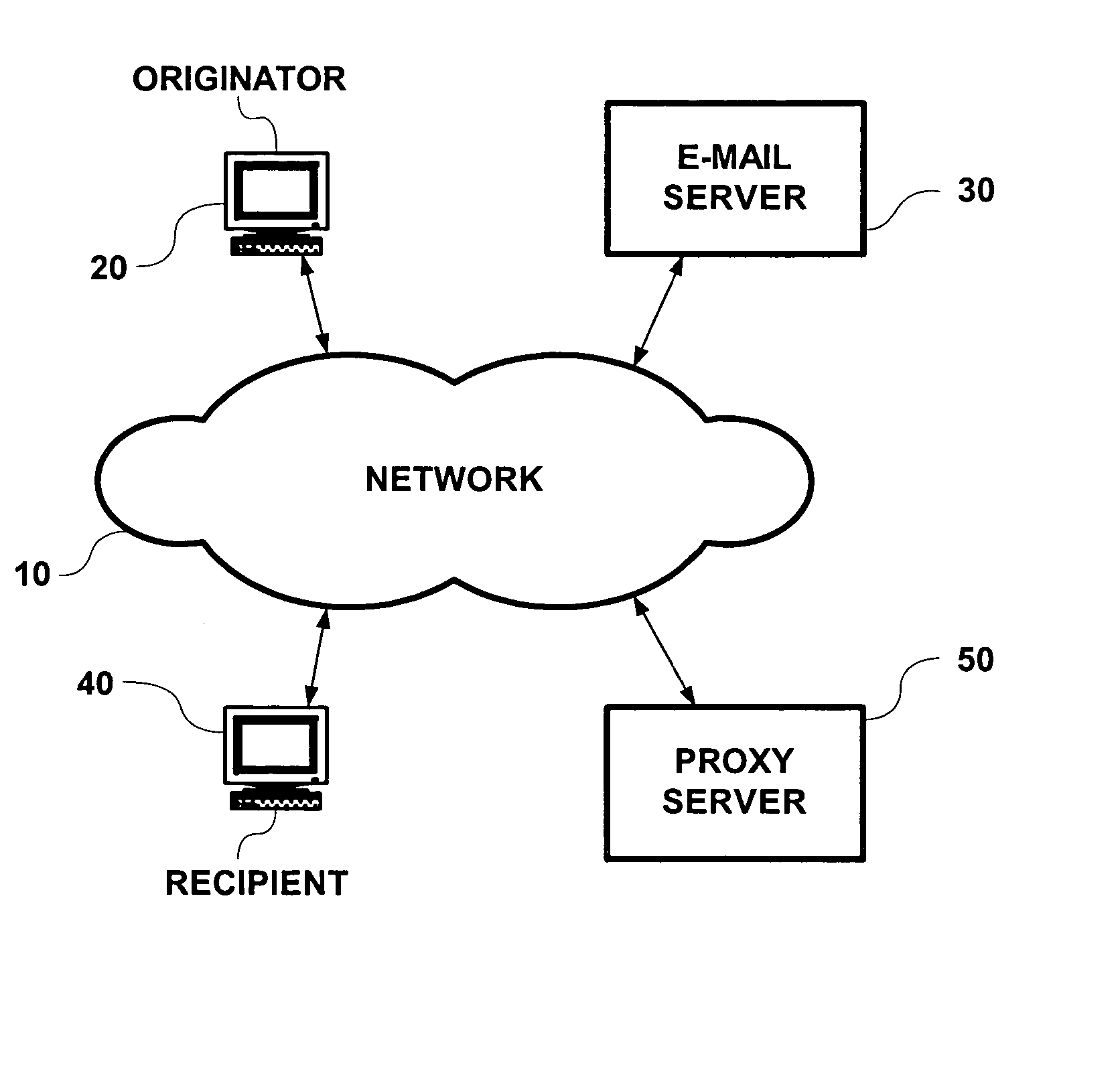

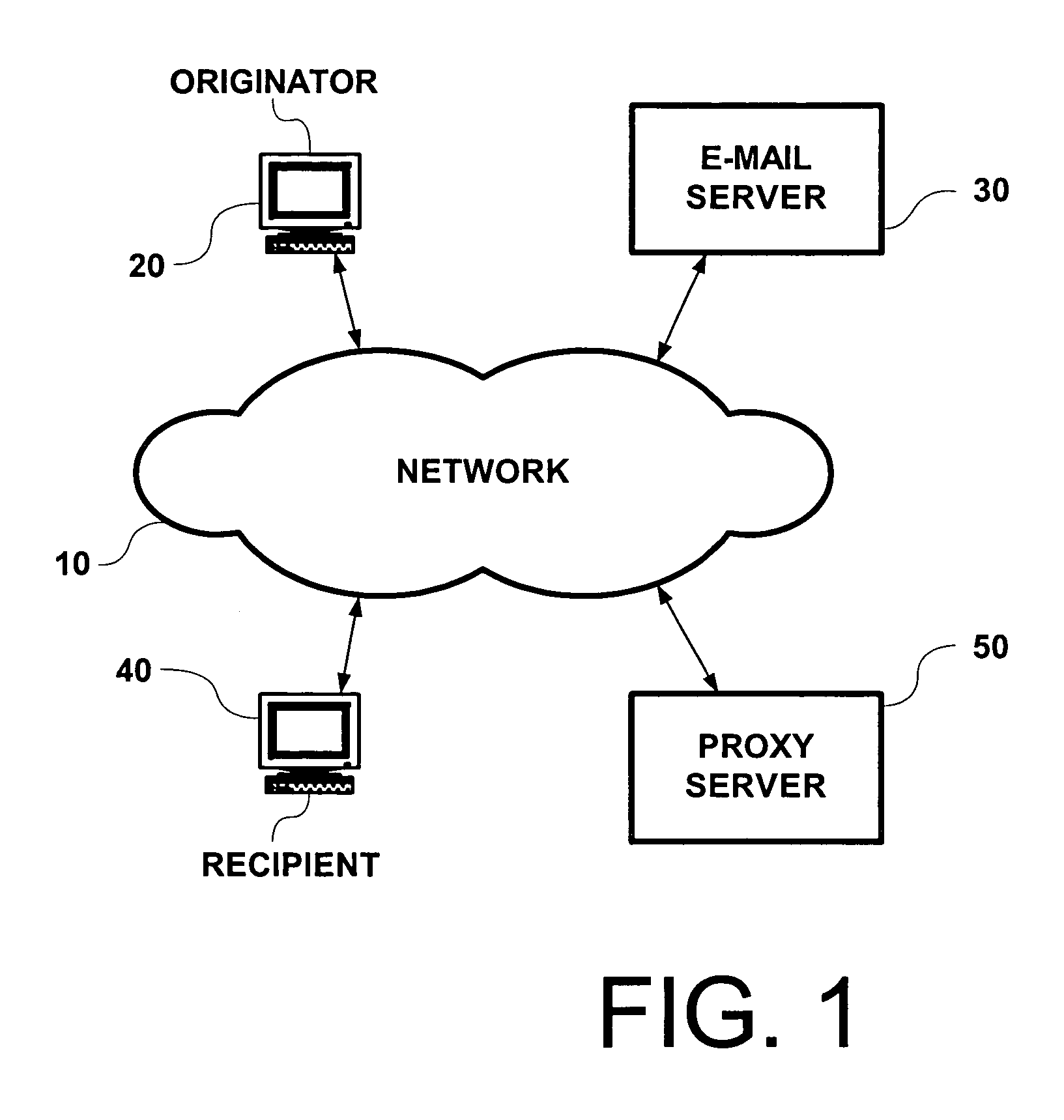

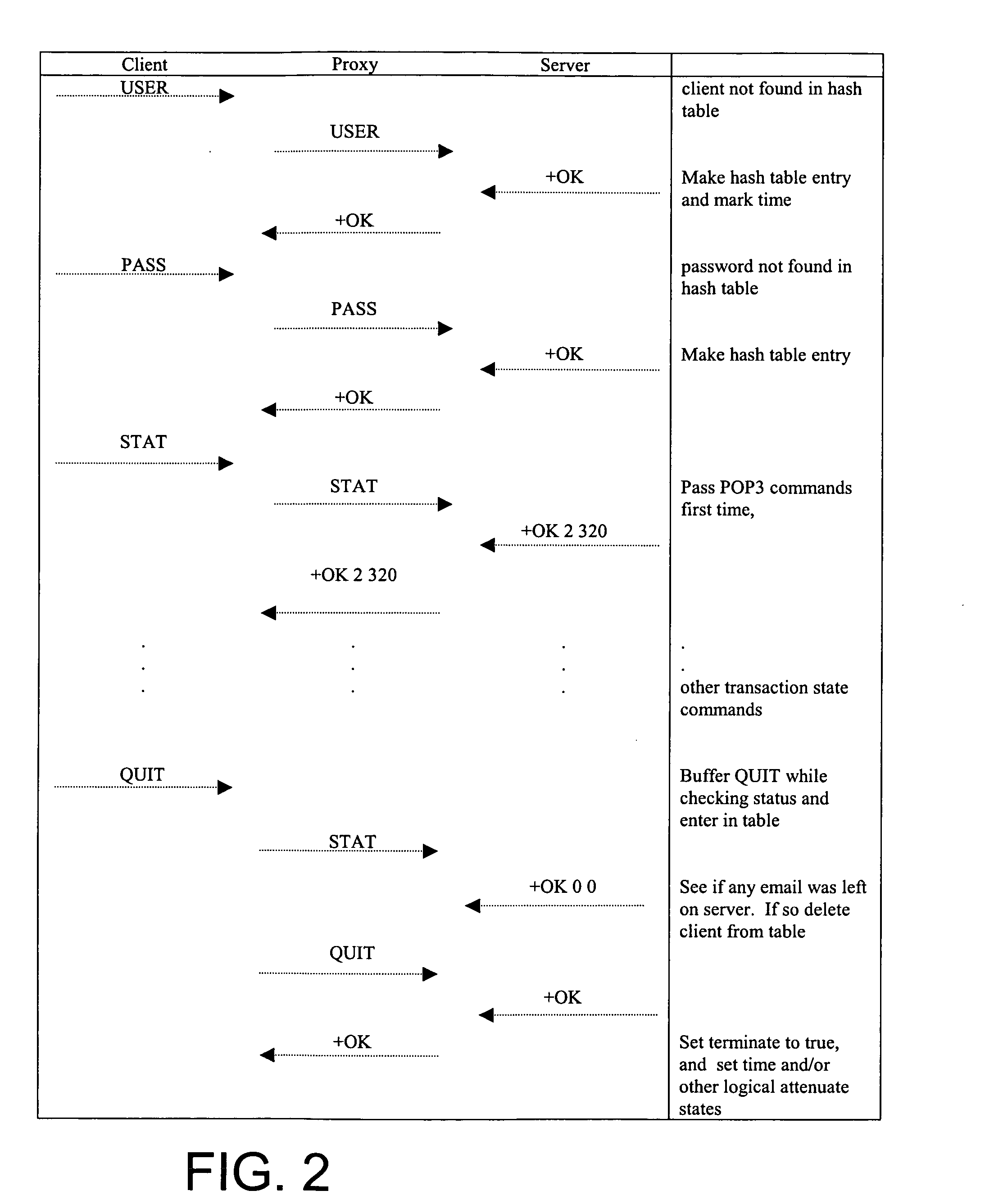

Reduction of network server loading

Status: Inactive · Publication No.: US20050138196A1 · Tags: Reduce in quantity; Lower performance requirements; Multiple digital computer combinations; Data switching networks; Shortest distance; Internet traffic

Bandwidth loading on the network is managed by pushing e-mail message traffic out to the edges of the network at times when bandwidth demand is low. To accomplish this, a user's e-mail is cached at the proxy server nearest to his presumed location. This decentralizes the e-mail storage away from the mail server and spreads it out over the network at the various proxy servers. This cache action is preferably done when there is a lull in network traffic (e.g., at night). This has the effect of decentralizing the bandwidth demand on the overall network since the e-mail messages have a shorter distance to travel when retrieved by the user from the cache location at the proxy server.

Owner:TIME WARNER CABLE INTERNET

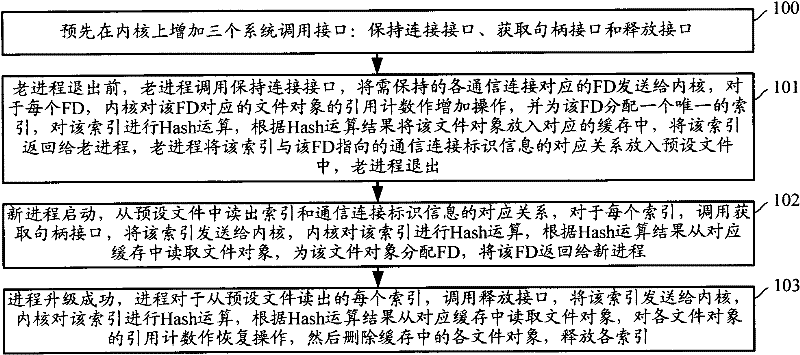

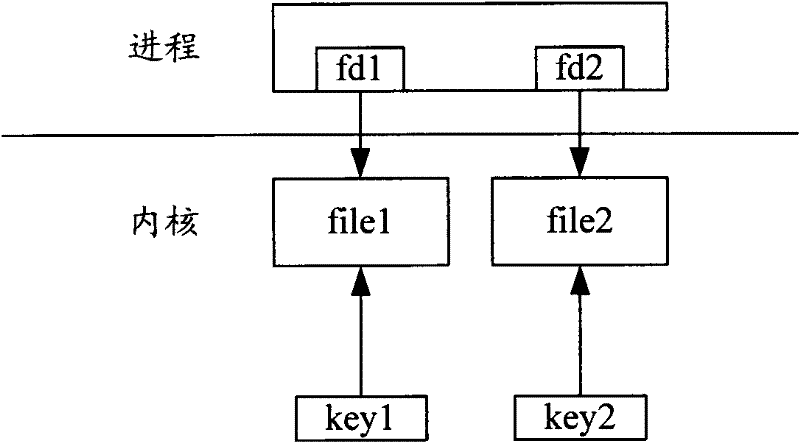

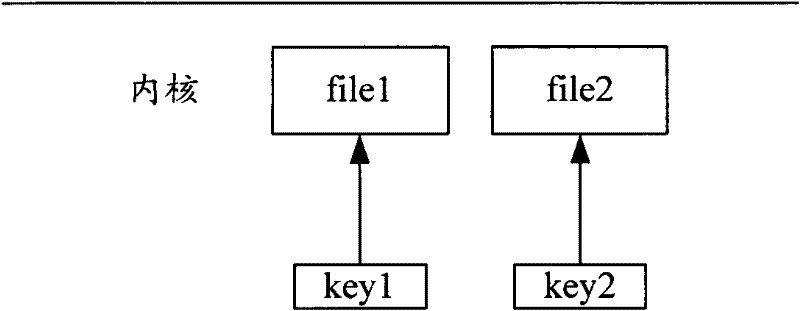

Process starting method, kernel and process

Status: Active · Publication No.: CN102508713A · Tags: Performance is not affected; Improve versatility; Program initiation/switching; File descriptor; Operating system

The invention discloses a process starting method, a kernel, and a process. Before the process exits, the kernel receives from the process a connection-keeping request carrying file descriptors (FDs). For each FD carried in the request, the kernel increments the reference count of the file object corresponding to the FD, places the file object in a cache, and returns the cache position information to the process, which records the mapping between the cache position information and the identification of the communication connection the FD points to in a preset storage area. When the process starts again, the kernel receives from the process an FD obtaining request carrying the cache position information, reads the file objects from the cache, allocates FDs to them, and returns the FDs to the process. The disclosed method, kernel, and process keep communication connections uninterrupted while the process is upgraded or restarted, and also reduce processing complexity.

Owner:NEW H3C TECH CO LTD

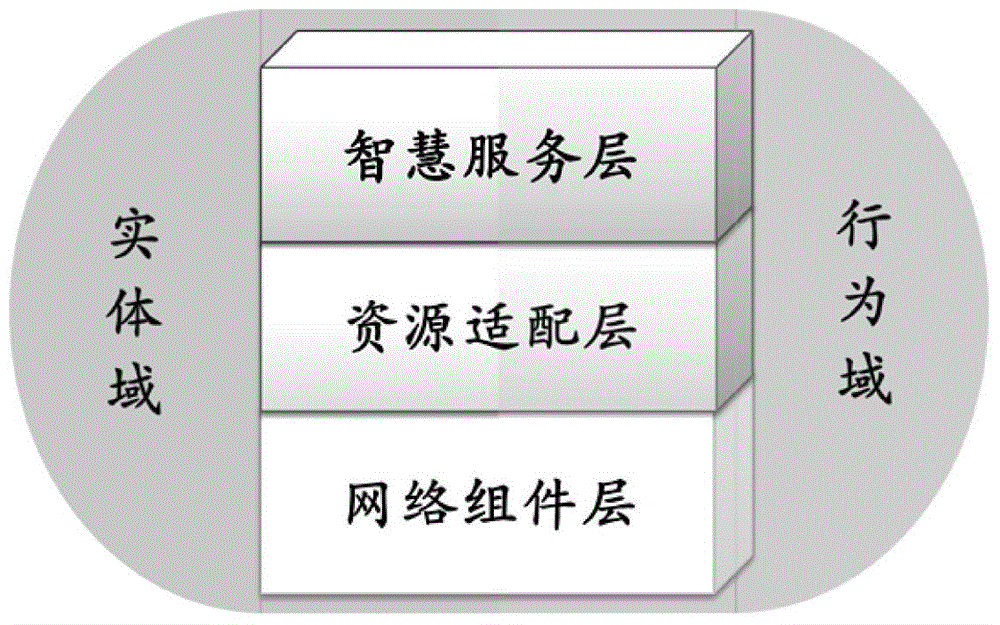

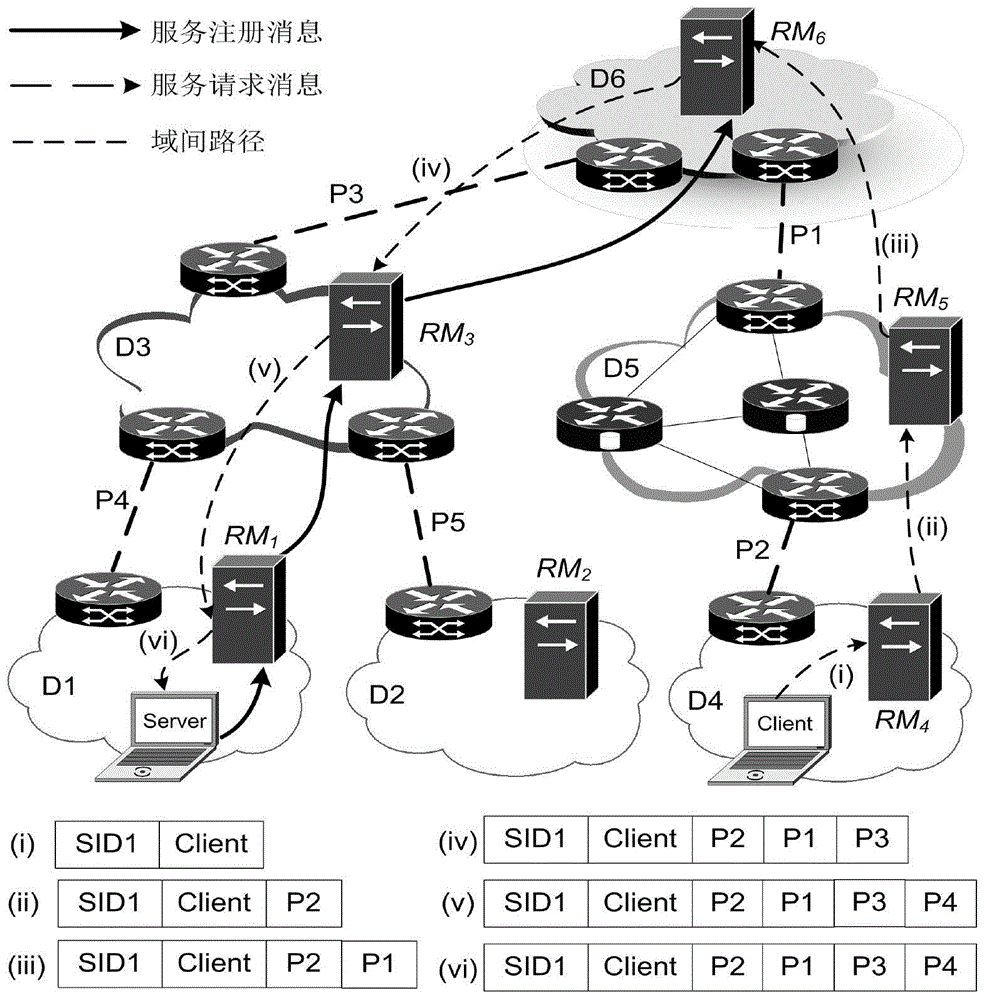

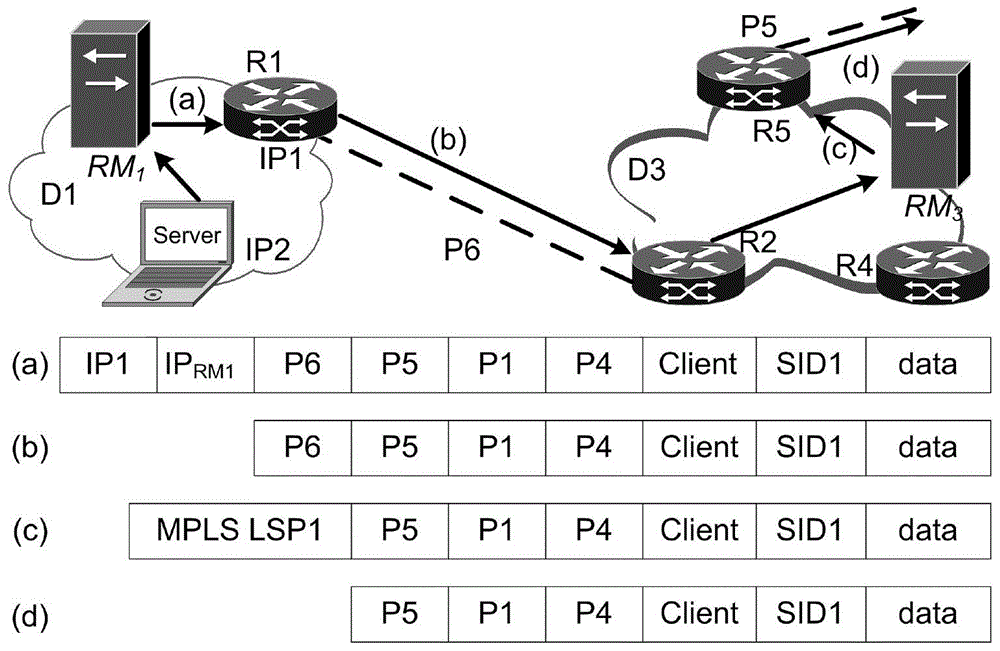

Cooperative caching method in intelligence cooperative network

The invention relates to a method for realizing cooperative caching in an intelligence cooperative network. A resource manager is arranged in the network; it determines where content is to be cached, maintains a caching summary table, and records the cached resources of the network's routers. When the resource manager receives a service request packet from a content requester, it searches the caching summary table for a matching entry. If one is found, the service request is sent directly to the content router recorded in that entry; if not, the resource manager queries a service registration table and forwards the request to the resource manager of the adjacent network. When the resource manager receives a service data packet, it caches the packet at a content router selected by a content placement algorithm. The method adapts the cached resources dynamically and routes hit requests through the resource manager to the content router holding the service content, which enables intra-domain caching cooperation, reduces caching redundancy, and improves caching performance.

Owner:BEIJING JIAOTONG UNIV

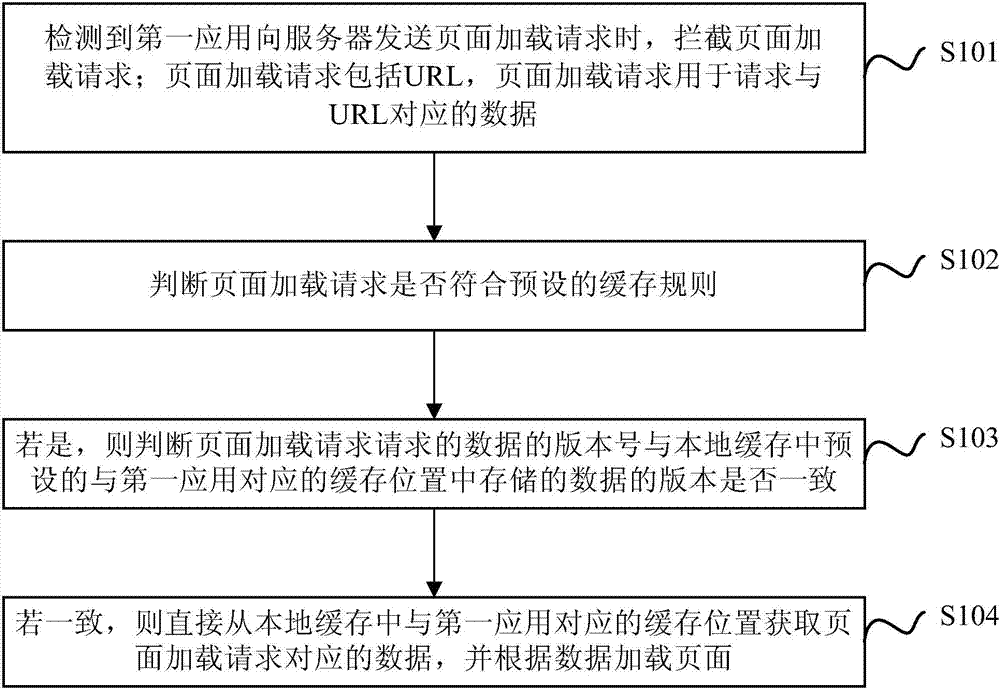

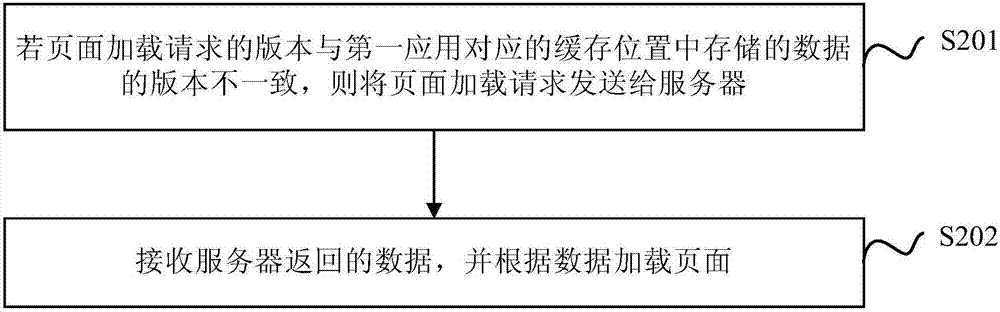

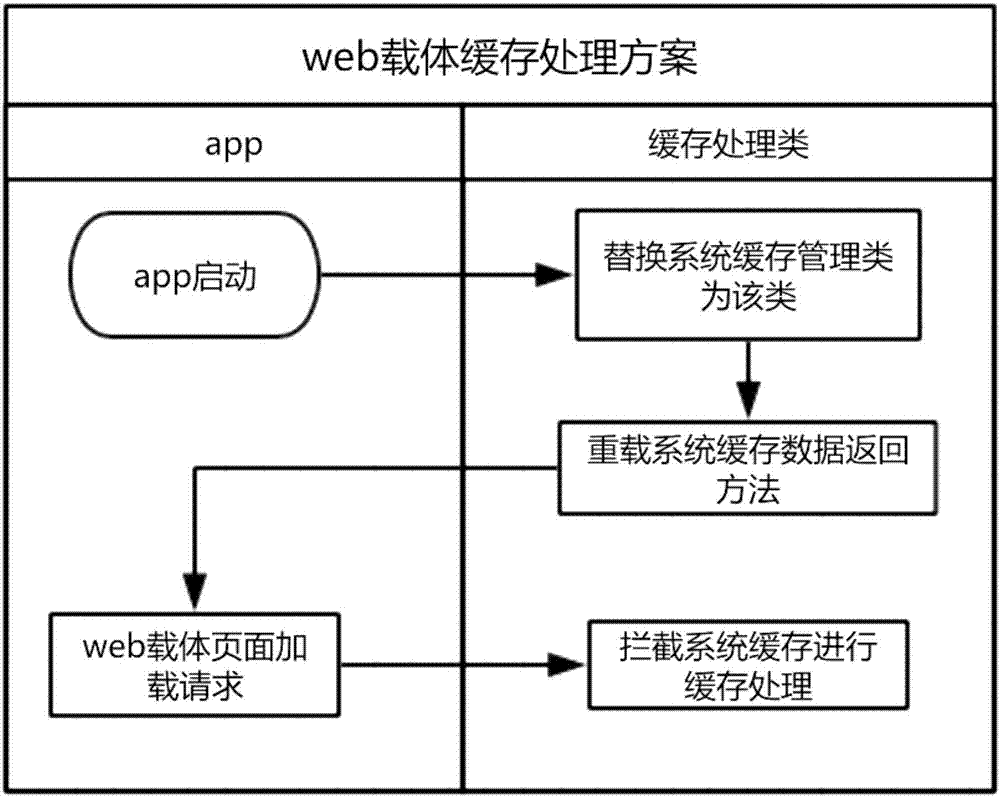

Hybrid application-based loading method and apparatus

Status: Active · Publication No.: CN107491320A · Tags: Improve loading speed; Improve fluency; Website content management; Program loading/initiating; Software engineering; Uniform resource locator

Embodiments of the invention provide a hybrid application-based loading method and apparatus. The method comprises: when it is detected that a first application sends a page loading request containing a URL to a server, intercepting the request; judging whether the request satisfies a preset caching rule; if so, judging whether the version number of the requested data matches the preset version of the data stored in the cache position corresponding to the first application in a local cache; and if so, obtaining the requested data directly from that cache position and loading the page from it. Because the data need not be loaded from a network server, page loading speed and fluency improve, data traffic is saved, and the user experience improves.

Owner:BEIJING 58 INFORMATION TTECH CO LTD
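
The two checks in the loading path (caching rule, then version match) can be sketched as below. The rule format (URL regular expressions), the per-URL expected versions and the injected `fetch` callback are assumptions for illustration; the abstract does not say how rules or versions are stored.

```python
import re
from dataclasses import dataclass

@dataclass
class CachedPage:
    version: str
    body: bytes

class HybridLoader:
    def __init__(self, app_id, rules, expected_versions, fetch):
        self.app_id = app_id
        self.rules = [re.compile(r) for r in rules]  # preset caching rules (assumed: URL regexes)
        self.expected_versions = expected_versions   # preset version per URL (assumed)
        self.fetch = fetch                           # fallback network loader
        self.local_cache = {}                        # (app_id, url) -> CachedPage

    def load(self, url):
        """Serve from the app's local cache position when the rule and version both match."""
        if any(r.search(url) for r in self.rules):
            page = self.local_cache.get((self.app_id, url))
            if page is not None and page.version == self.expected_versions.get(url):
                return page.body, "cache"
        return self.fetch(url), "network"
```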

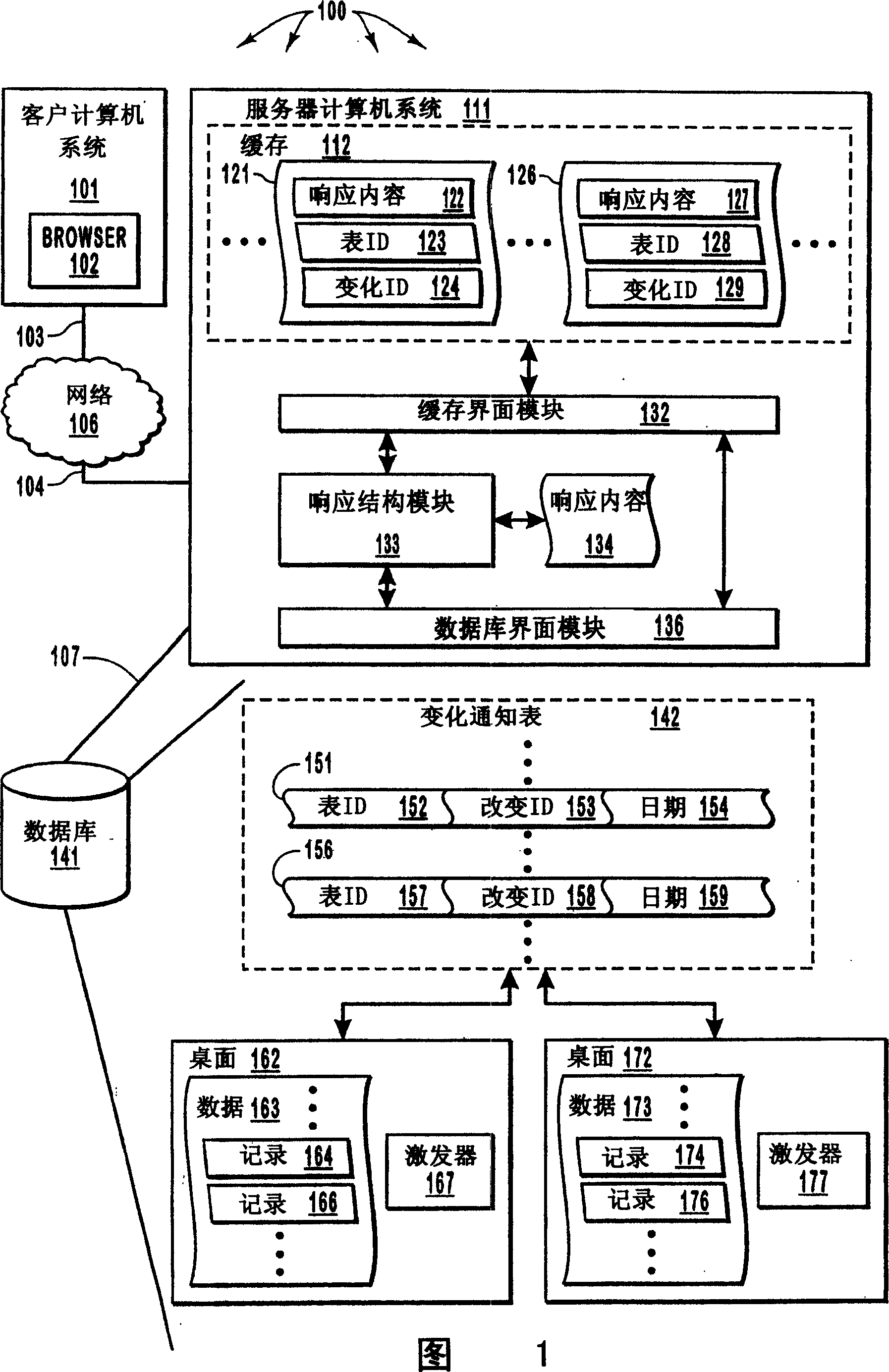

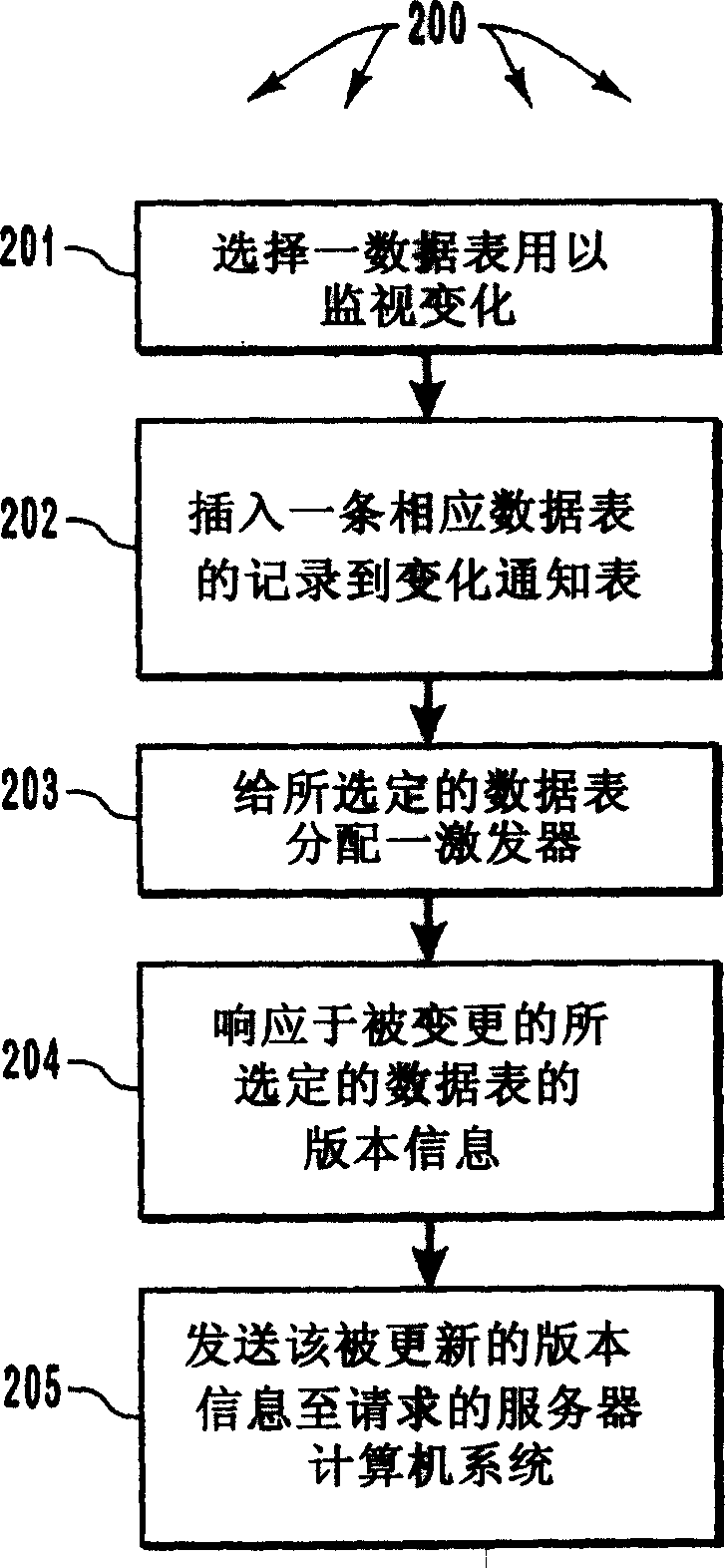

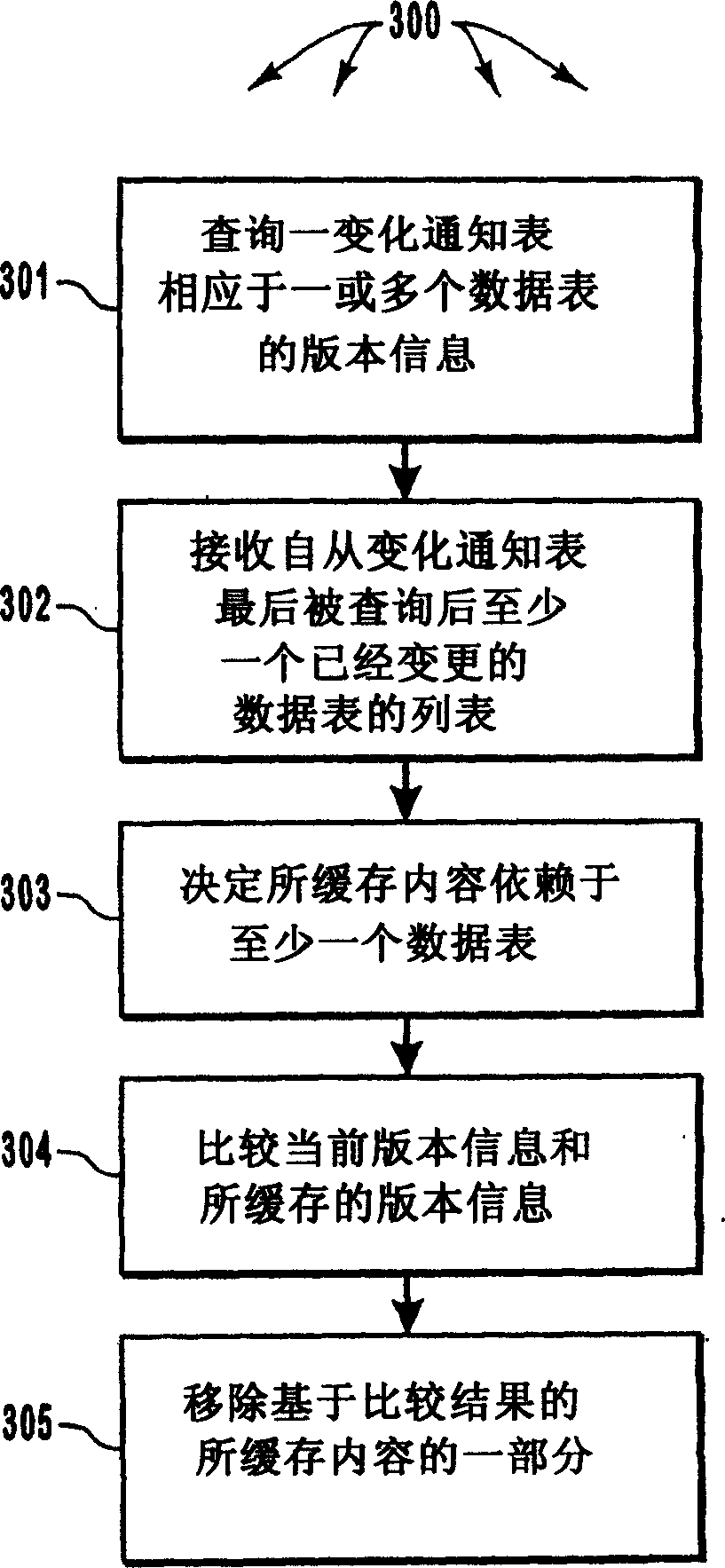

Registering for and retrieving database table change information that can be used to invalidate cache entries

Status: Inactive · Publication No.: CN1577327A · Tags: Data processing applications; Digital data information retrieval; Client-side; Database caching

Owner:MICROSOFT TECH LICENSING LLC

Method and apparatus for selecting cache locality for atomic operations

Status: Inactive · Publication No.: US9250914B2 · Tags: Fault response; Memory addressing/allocation/relocation; Cache locality; Atomic operations

Owner:INTEL CORP

Data memory system and method

Status: Active · Publication No.: CN102231137A · Tags: Improve threading capabilities; Improve reliability; Memory addressing/allocation/relocation; Parallel computing; Data management

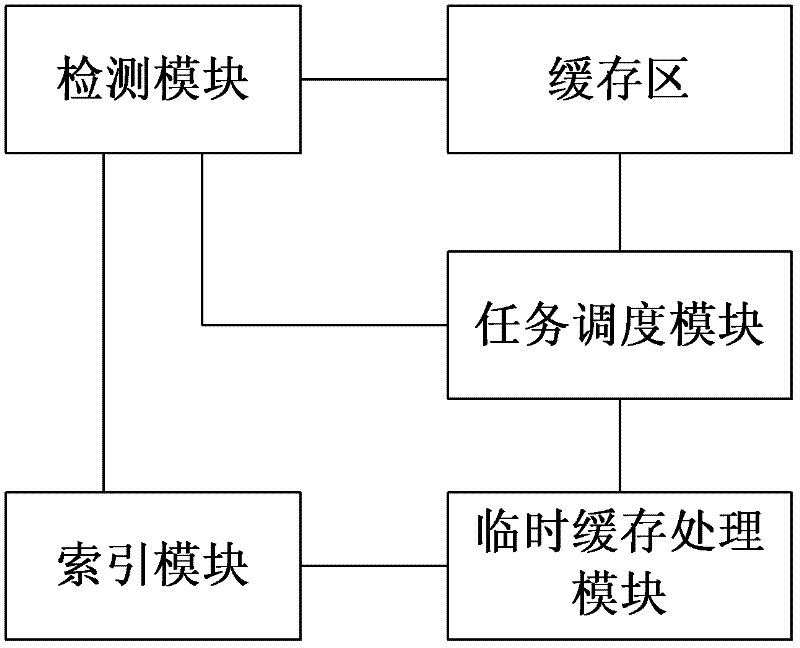

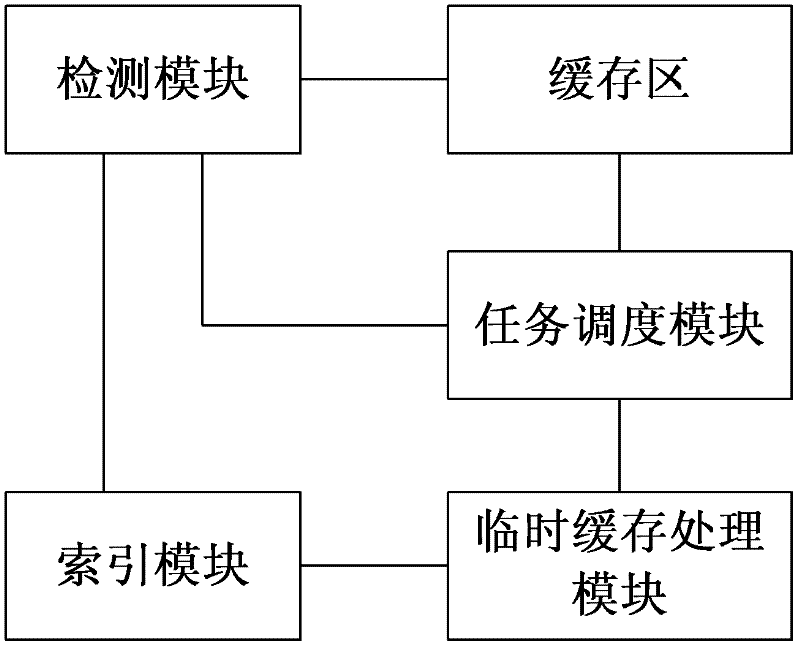

The invention discloses a data memory system and method and relates to a continuous data protection system for data management. The system disclosed by the invention comprises a detection module, a task scheduling module, a temporary cache processing module and an index module, wherein the detection module is used for detecting the cache status of a cache region in real time; the task scheduling module is used for triggering the cache region to carry out cache operation when the cache status of the cache region is normal and triggering the temporary cache processing module to carry out cache operation when the cache status of the cache region is busy; the temporary cache processing module is used for dividing a temporary cache space from a nonvolatile memory and caching the incremental data of the current disk into the temporary cache space while receiving the cache operation triggered by the task scheduling module; and the index module is used for recording index information of cached incremental data of the disk in sequence, wherein the cache information at least comprises cache location, cache time and original address of the incremental data of the disk. According to the technical scheme of the invention, the data cache space can be expanded effectively and flexibly, and the reliability of the cache data can be improved simultaneously.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
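
A toy model of the spill-over behaviour, assuming "busy" simply means the main cache region is full (the abstract leaves the detection criterion open) and using plain lists in place of the nonvolatile temporary space. Each cached increment gets an index entry with the three fields the abstract requires: cache location, cache time and original disk address.

```python
import time
from dataclasses import dataclass

@dataclass
class IndexEntry:
    cache_location: str     # which cache held the increment: "region" or "temp"
    cache_time: float
    original_address: int   # disk address of the incremental data

class CdpCache:
    """Increments go to the main cache region while it is 'normal'
    and spill to a temporary cache when it is 'busy'."""

    def __init__(self, region_capacity):
        self.region_capacity = region_capacity
        self.region, self.temp = [], []
        self.index = []  # index module: entries recorded in arrival order

    def status(self):
        # Detection-module stand-in: 'busy' here just means the region is full.
        return "busy" if len(self.region) >= self.region_capacity else "normal"

    def cache_increment(self, disk_addr, data, now=None):
        if self.status() == "normal":
            target, name = self.region, "region"
        else:
            target, name = self.temp, "temp"
        target.append((disk_addr, data))
        stamp = time.time() if now is None else now
        self.index.append(IndexEntry(name, stamp, disk_addr))
```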

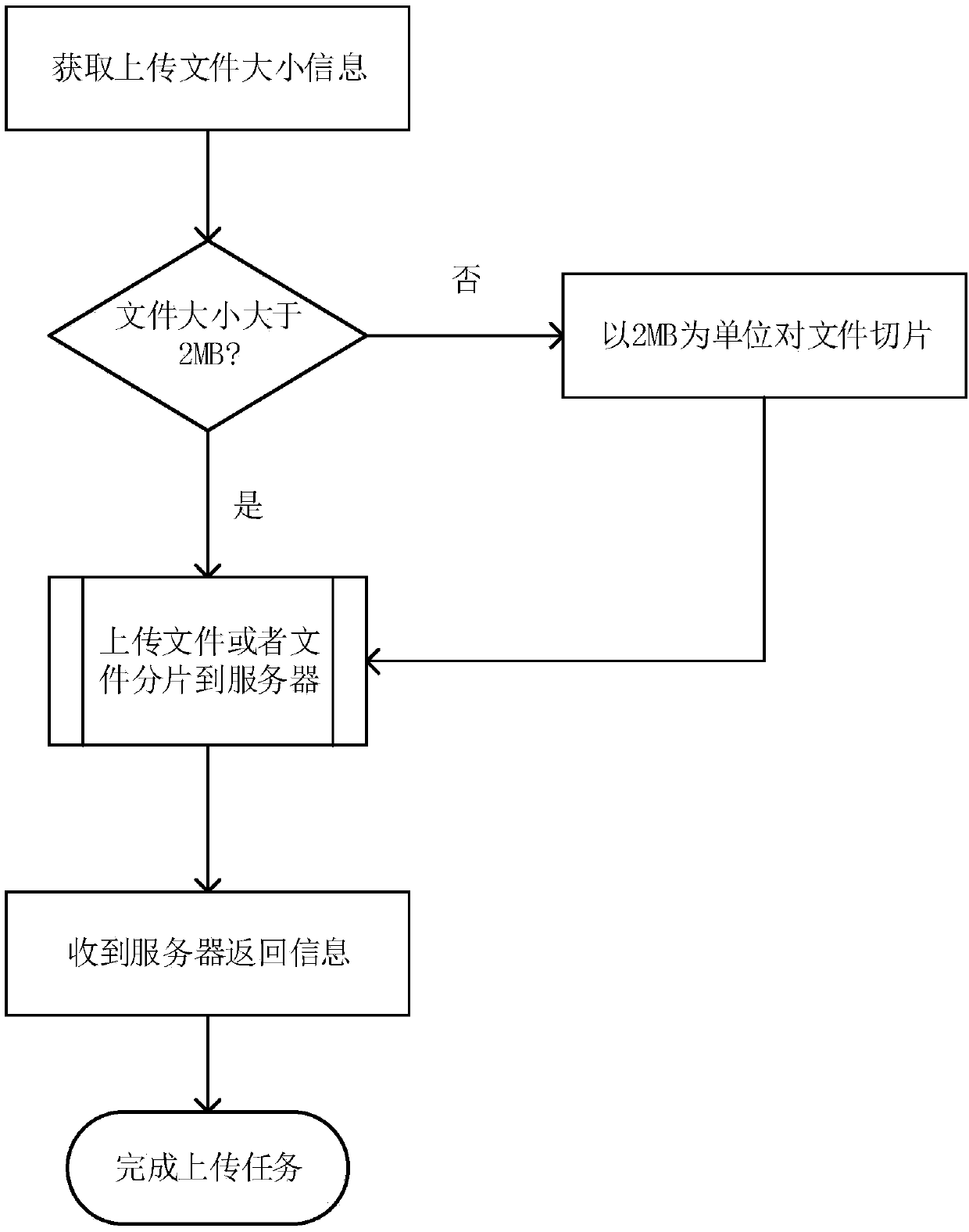

Multi-thread uploading optimization method based on memory allocation

Status: Active · Publication No.: CN109547566A · Tags: Reduce waste; Implement control transfer; Error prevention; File access structures; Network conditions; Cache locality

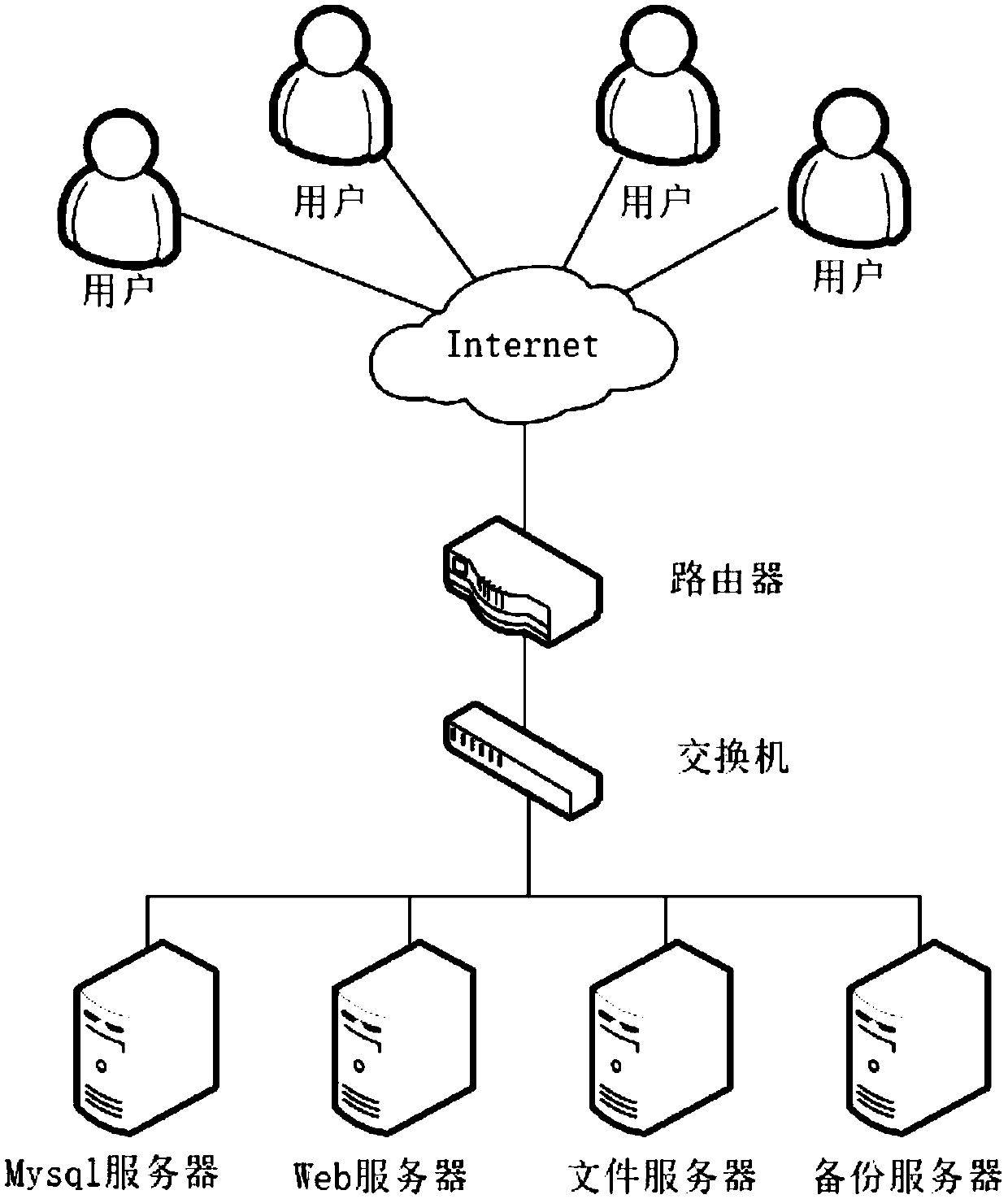

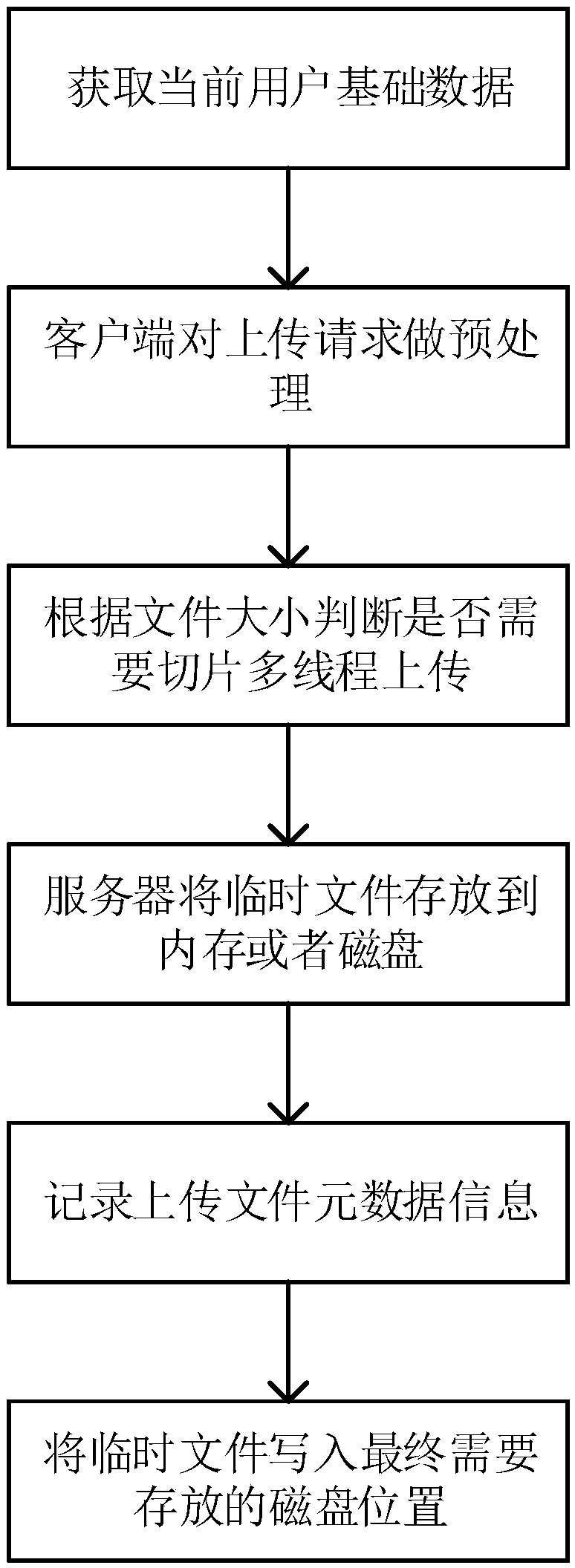

The invention discloses a multi-thread uploading optimization method based on memory allocation. The method comprises the steps of: 1) acquiring basic data of the current user; 2) preprocessing an upload request using the obtained information; 3) judging, according to the size of the uploaded file, whether slicing and multi-thread uploading are needed; 4) storing a temporary file of the uploaded file in a designated cache position according to the server's current free memory; 5) recording metadata of the uploaded file in a database; and 6) writing the temporary file in the cache to the disk location where the file is finally stored. By addressing both the service logic and server memory management, with the client and server cooperating, the method achieves a relatively high upload success rate under relatively poor network conditions; it also supports resuming from a breakpoint, which improves the client's upload speed and the server's bandwidth utilization.

Owner:SOUTH CHINA UNIV OF TECH
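Steps 3 to 6, together with breakpoint resume, can be sketched as follows. This is a simplified illustration, not the patented method: `SLICE_THRESHOLD`, `CHUNK_SIZE`, `UploadServer` and `upload` are hypothetical; a fixed staging directory replaces the memory-dependent choice of cache location in step 4; an in-memory dict replaces the database in step 5; and steps 1 and 2 are omitted.

```python
import os
import tempfile
import threading
from concurrent.futures import ThreadPoolExecutor

SLICE_THRESHOLD = 4 * 1024  # hypothetical: files larger than this are sliced
CHUNK_SIZE = 1024           # hypothetical slice size in bytes


def plan_chunks(size):
    """Step 3 stand-in: return (offset, length) slices; a single slice if small."""
    if size <= SLICE_THRESHOLD:
        return [(0, size)]
    return [(off, min(CHUNK_SIZE, size - off)) for off in range(0, size, CHUNK_SIZE)]


class UploadServer:
    def __init__(self, staging_dir):
        self.staging_dir = staging_dir  # step 4 stand-in: fixed staging location
        self.received = {}              # step 5 stand-in: file_id -> set of received slice indices
        self._lock = threading.Lock()   # slices arrive from several threads

    def _part_path(self, file_id, idx):
        return os.path.join(self.staging_dir, f"{file_id}.part{idx}")

    def receive_chunk(self, file_id, idx, data):
        with open(self._part_path(file_id, idx), "wb") as f:
            f.write(data)
        with self._lock:
            self.received.setdefault(file_id, set()).add(idx)

    def missing(self, file_id, n_chunks):
        # Breakpoint resume: report which slices have not arrived yet.
        with self._lock:
            done = set(self.received.get(file_id, ()))
        return [i for i in range(n_chunks) if i not in done]

    def finalize(self, file_id, n_chunks, dest_path):
        # Step 6 stand-in: concatenate staged slices into the final file.
        with open(dest_path, "wb") as out:
            for i in range(n_chunks):
                part = self._part_path(file_id, i)
                with open(part, "rb") as f:
                    out.write(f.read())
                os.remove(part)


def upload(server, file_id, payload, workers=4):
    """Client side: send only the missing slices, several threads at once."""
    chunks = plan_chunks(len(payload))
    futures = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for i in server.missing(file_id, len(chunks)):
            off, length = chunks[i]
            futures.append(pool.submit(server.receive_chunk, file_id, i, payload[off:off + length]))
        for fut in futures:
            fut.result()  # re-raise any error from a worker thread
    return len(chunks)


with tempfile.TemporaryDirectory() as tmp:
    server = UploadServer(tmp)
    data = os.urandom(5000)
    n = upload(server, "demo", data)
    dest = os.path.join(tmp, "demo.bin")
    server.finalize("demo", n, dest)
    with open(dest, "rb") as f:
        assert f.read() == data
```

If a connection drops partway through, calling `upload` again sends only the slices that `missing` still reports.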

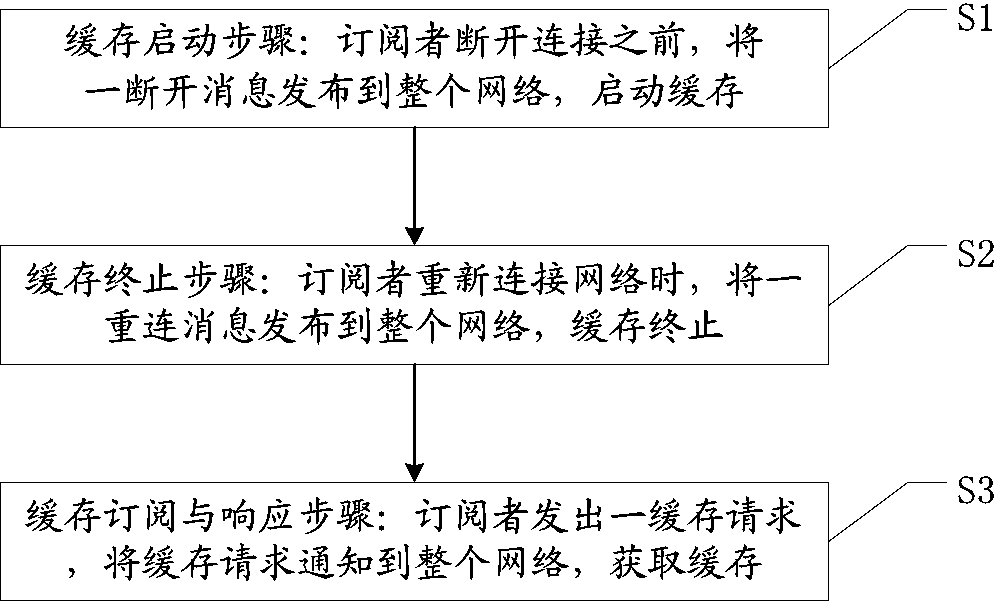

Cache method suitable for publish-subscribe system in mobile environment

Active | CN103873465A | Lower latency | Improve efficiency | Transmission | Network data management | Equal probability | Parallel computing

The invention discloses a cache method suitable for a publish-subscribe system in a mobile environment. The method comprises the following steps. Cache start: before a subscriber disconnects, an interconnection message is published to the entire network and caching starts. Cache end: when the subscriber reconnects to the network, a reconnection message is published to the entire network and caching ends. Cache subscription and response: when the subscriber sends a cache request, the request is published to the entire network and the cached events are retrieved. The beneficial effects are as follows: a suitable cache position is selected by jointly considering the subscriber's mobility characteristics and the network characteristics, which markedly reduces event-cache delay for the subscriber and so improves efficiency; the method adapts well whether the subscriber moves among positions with equal probability or moves frequently within a limited local area; and because a suitable position on the event dispatch path is selected for caching events, network load is reduced and network performance is optimized.

Owner:江阴逐日信息科技有限公司 (Jiangyin Zhuri Information Technology Co., Ltd.)
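The three phases above (cache start on disconnect, cache end on reconnect, and a request that returns the cached events) can be sketched with a single broker. This is a simplified illustration, not the patented method: the patent chooses the cache position along the dispatch path based on mobility and network characteristics, whereas this hypothetical `CachingBroker` keeps every cache in one place and uses a `delivered` dict in place of real network delivery.

```python
from collections import defaultdict, deque


class CachingBroker:
    """Hypothetical single-broker stand-in for the network-wide event cache."""

    def __init__(self, max_cached=100):
        self.subscriptions = defaultdict(set)  # topic -> subscriber ids
        self.online = set()
        self.caches = {}                       # subscriber id -> deque of cached events
        self.max_cached = max_cached           # assumption: bounded cache, oldest events dropped
        self.delivered = defaultdict(list)     # stand-in for delivery over the network

    def subscribe(self, sub, topic):
        self.subscriptions[topic].add(sub)
        self.online.add(sub)

    def disconnect(self, sub):
        # Cache start: the subscriber announces it is leaving.
        self.online.discard(sub)
        self.caches[sub] = deque(maxlen=self.max_cached)

    def reconnect(self, sub):
        # Cache end: new events go straight to the subscriber again.
        self.online.add(sub)

    def fetch_cached(self, sub):
        # Cache request and response: return the cached events and drop the cache.
        return list(self.caches.pop(sub, ()))

    def publish(self, topic, event):
        for sub in self.subscriptions[topic]:
            if sub in self.online:
                self.delivered[sub].append(event)
            elif sub in self.caches:
                self.caches[sub].append(event)
```

For example, a subscriber that disconnects, misses events `e2` and `e3`, and then reconnects receives new events directly and gets `["e2", "e3"]` from `fetch_cached`.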

Handling of errors in a data processing apparatus having a cache storage and a replicated address storage

A data processing apparatus includes processing circuitry, a cache storage, and a replicated address storage having a plurality of entries. On detecting a cache record error, a record of a cache location avoid storage is allocated to store a cache record identifier for the accessed cache record. On detection of an entry error, use of the address indication currently stored in that accessed entry of the replicated address storage is prevented, and a command is issued to the cache location avoid storage. In response, a record of the cache location avoid storage is allocated to store the cache record identifier for the cache record of the cache storage associated with the accessed entry of the replicated address storage. Any cache record whose cache record identifier is stored in the cache location avoid storage is logically excluded from the plurality of cache records.

Owner:ARM LTD
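The error-handling flow above can be modeled in a few lines of software. This is a toy sketch, not ARM's hardware design: `ProtectedCache` is a hypothetical name, the avoid storage is an unbounded set rather than a fixed number of records, and a one-to-one mapping between replicated-address entries and cache records is assumed.

```python
class ProtectedCache:
    """Toy model of a cache with a replicated address store and an avoid list."""

    def __init__(self, n_records):
        self.records = [None] * n_records     # cache storage: record id -> data
        self.replicated = [None] * n_records  # replicated address storage
        self.avoid = set()                    # cache location avoid storage (unbounded here)

    def _record_for_entry(self, entry_id):
        # Hypothetical one-to-one mapping from replicated entry to cache record.
        return entry_id

    def usable_records(self):
        # Any record listed in the avoid storage is logically excluded.
        return [i for i in range(len(self.records)) if i not in self.avoid]

    def on_cache_record_error(self, record_id):
        # Cache record error: remember the faulty record's identifier.
        self.avoid.add(record_id)

    def on_entry_error(self, entry_id):
        # Entry error: stop using the address held in the faulty entry...
        self.replicated[entry_id] = None
        # ...and "command" the avoid storage to exclude the associated record.
        self.avoid.add(self._record_for_entry(entry_id))

    def allocate(self, address, data):
        # Place data in the first free record that is not being avoided.
        for i in self.usable_records():
            if self.records[i] is None:
                self.records[i] = data
                self.replicated[i] = address
                return i
        return None  # no usable free record
```

After `on_cache_record_error(0)` and `on_entry_error(2)` on a four-record cache, only records 1 and 3 remain eligible for allocation.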

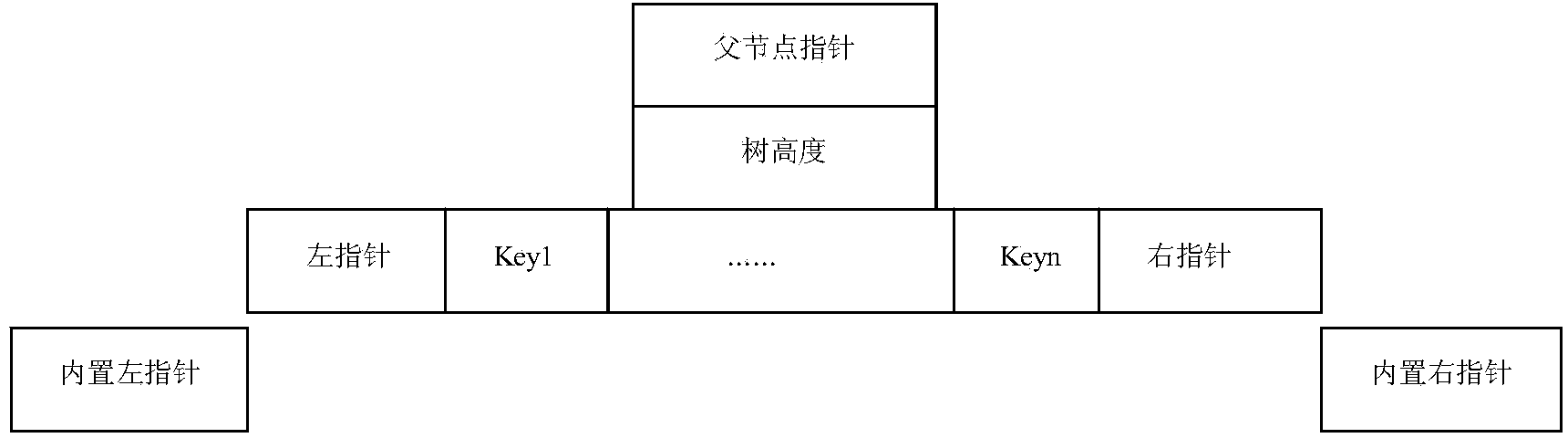

Method of read-optimized memory database T-tree index structure

Inactive | CN103902693A | Shorten access time | Valid single value lookup | Special data processing applications | Data access | Theoretical computer science

Disclosed is a method for a read-optimized T-tree index structure for an in-memory database. To create the t-T tree data structure, a T-tree index is built from existing data: data is inserted according to the node size N of the T-tree while keeping the data within each node ordered; when a node fills up, a split operation is performed to keep the tree balanced; and during construction of the t-T tree, no operation is performed on the left and right subtree fields of the internal T-tree nodes. Queries on the built t-T tree are divided into single-value queries and range queries. The method makes full use of the high data-access efficiency of the T-tree structure, giving good overall read performance, and increases the cache hit ratio through a cache-locality-sensitive algorithm and reduced use of pointers.

Owner:XI AN JIAOTONG UNIV
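The pointer-reduction idea can be illustrated with a simplified stand-in. This sketch is not the patented t-T tree. It keeps the parts described above (fixed-capacity nodes of size N, ordered keys inside each node, a split when a node overflows, and separate single-value and range queries), but it drops child pointers entirely: nodes are stored in key order in one flat list and located by binary search over their maximum keys. `ArrayNodeIndex` and `node_size` are hypothetical names.

```python
import bisect


class ArrayNodeIndex:
    """Sorted fixed-capacity nodes in one flat list; no left/right child pointers."""

    def __init__(self, node_size=4):
        if node_size < 2:
            raise ValueError("node_size must be at least 2")
        self.node_size = node_size
        self.nodes = []  # each node is a sorted list of keys; nodes are in key order
        self.maxes = []  # maxes[i] == nodes[i][-1], used for binary search

    def _locate(self, key):
        # First node whose maximum is >= key, or the last node.
        return min(bisect.bisect_left(self.maxes, key), len(self.nodes) - 1)

    def insert(self, key):
        if not self.nodes:
            self.nodes.append([key])
            self.maxes.append(key)
            return
        i = self._locate(key)
        node = self.nodes[i]
        bisect.insort(node, key)  # keep keys inside the node ordered
        self.maxes[i] = node[-1]
        if len(node) > self.node_size:  # overflow: split into two halves
            mid = len(node) // 2
            left, right = node[:mid], node[mid:]
            self.nodes[i:i + 1] = [left, right]
            self.maxes[i:i + 1] = [left[-1], right[-1]]

    def contains(self, key):
        # Single-value query: one binary search over nodes, one inside the node.
        if not self.nodes:
            return False
        node = self.nodes[self._locate(key)]
        j = bisect.bisect_left(node, key)
        return j < len(node) and node[j] == key

    def range_query(self, lo, hi):
        # Range query: start at the node that may hold lo, scan forward until past hi.
        out = []
        if not self.nodes:
            return out
        for node in self.nodes[self._locate(lo):]:
            if node[0] > hi:
                break
            out.extend(k for k in node if lo <= k <= hi)
        return out
```

Keeping each node's keys in a contiguous list means that a lookup touches one small block of keys rather than following a chain of pointers, which is the kind of cache-locality gain the abstract attributes to reduced pointer use.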


© 2025 PatSnap. All rights reserved. Contact us: help@patsnap.com